Jensen Huang's Latest Keynote: Five Rubin Innovations and First-Ever Performance Data, Plus Open-Source Releases Covering Agents, Robotics, Autonomous Driving, and AI4S

At the start of the new year, CES 2026 (the Consumer Electronics Show), often called the "Spring Festival Gala of Technology," kicked off in Las Vegas, USA. Bionics, humanoid robots, and autonomous driving remained at the core of the show, and the fierce competition among chipmakers such as Intel, AMD, Qualcomm, and NVIDIA, for whom CES is a major stage for new silicon, was another highlight.

According to various sources, Intel plans to officially launch its Panther Lake processors, the third generation of Core Ultra, at CES. Qualcomm will showcase the latest developments in its Snapdragon X2 Elite and Snapdragon X2 Elite Extreme platforms for PCs. AMD CEO Lisa Su plans to unveil new Ryzen chips during her keynote on the evening of January 5th, such as the recently leaked Ryzen 7 9850X3D and the Zen 5-based Ryzen 9000G series.

Although Jensen Huang was not on the official CES keynote list, he was still busy appearing at various events. Most notable was his solo presentation at NVIDIA LIVE, scheduled for 5:00 AM Beijing time on January 5th, where he was expected to reveal the latest progress on the Rubin platform along with developments in physical AI and autonomous driving.

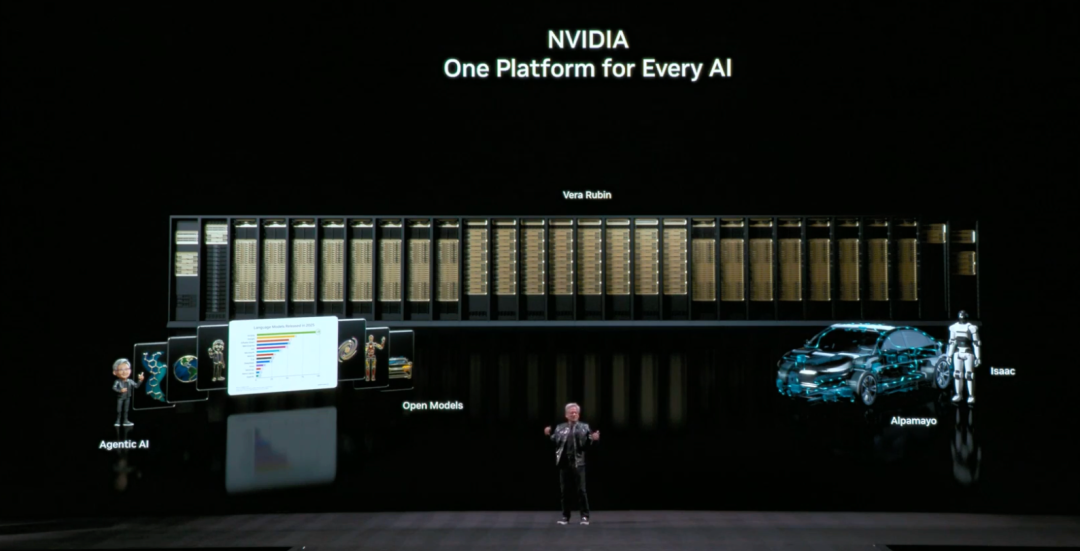

Jensen Huang did not disappoint. In the speech he just concluded, wearing his signature black leather jacket, Huang detailed the Rubin platform and its five innovations and announced a series of open-source releases:

* NVIDIA Nemotron family for agentic AI

* NVIDIA Cosmos platform for physical AI

* NVIDIA Alpamayo family for autonomous driving research and development

* NVIDIA Isaac GR00T for robotics

* NVIDIA Clara for biomedicine

Five Innovations: Rubin Arrives at the Perfect Time

"AI computing demand for both training and inference is skyrocketing, and Rubin arrives at exactly the right moment." Jensen Huang has high hopes for the platform, saying that Rubin is now in full production and is expected to reach its first customers in the second half of 2026.

On performance, the Rubin platform achieves "extreme codesign" across six chips: the NVIDIA Vera CPU, NVIDIA Rubin GPU, NVIDIA NVLink 6 switch, NVIDIA ConnectX-9 SuperNIC, NVIDIA BlueField-4 DPU, and NVIDIA Spectrum-6 Ethernet switch. Compared with the NVIDIA Blackwell platform, this lets Rubin cut inference cost per token by up to 10x and train mixture-of-experts (MoE) models with 4x fewer GPUs.

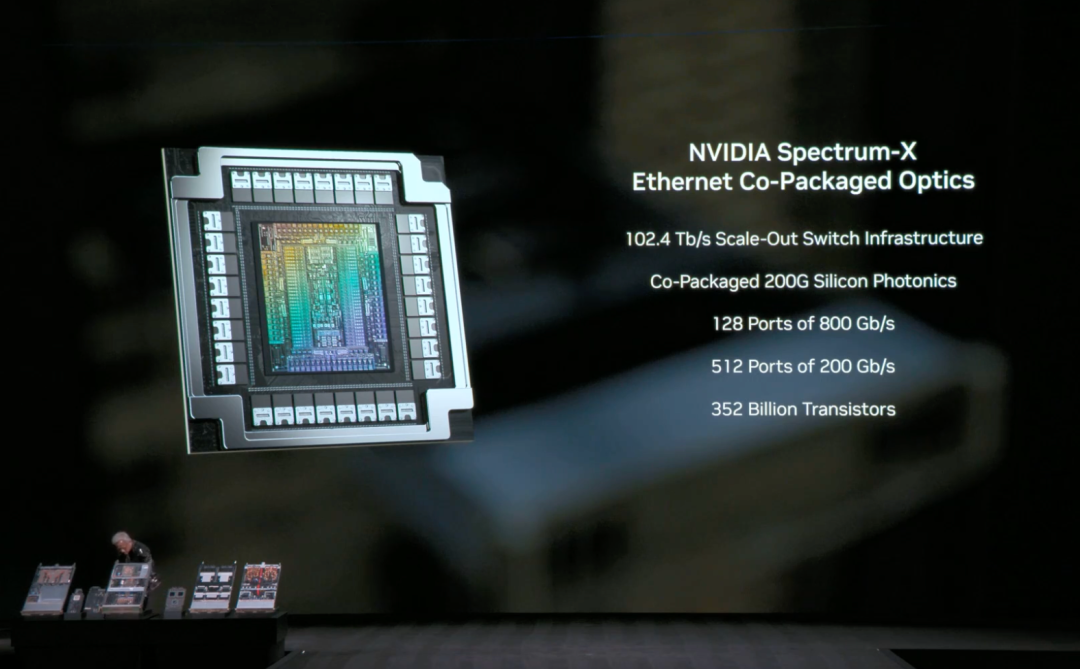

Of these, NVIDIA Spectrum-6 Ethernet is the next generation of Ethernet for AI networking. It uses 200G SerDes, co-packaged optics, and an AI-optimized network fabric to give Rubin-based AI factories higher efficiency and greater resilience. Spectrum-X Ethernet Photonics switch systems built on Spectrum-6 deliver 5x better energy efficiency, 10x higher reliability, and 5x longer uptime.

According to NVIDIA, the Rubin platform introduces five innovations:

* Sixth-Generation NVIDIA NVLink

Provides fast, seamless GPU-to-GPU communication for large-scale MoE models. Each GPU gets 3.6 TB/s of bandwidth, and a Vera Rubin NVL72 rack reaches 260 TB/s in total, which NVIDIA says exceeds the bandwidth of the entire internet. The NVLink 6 switch integrates in-network compute to accelerate collective communication and adds new serviceability and resilience features, enabling faster, more efficient large-scale AI training and inference.

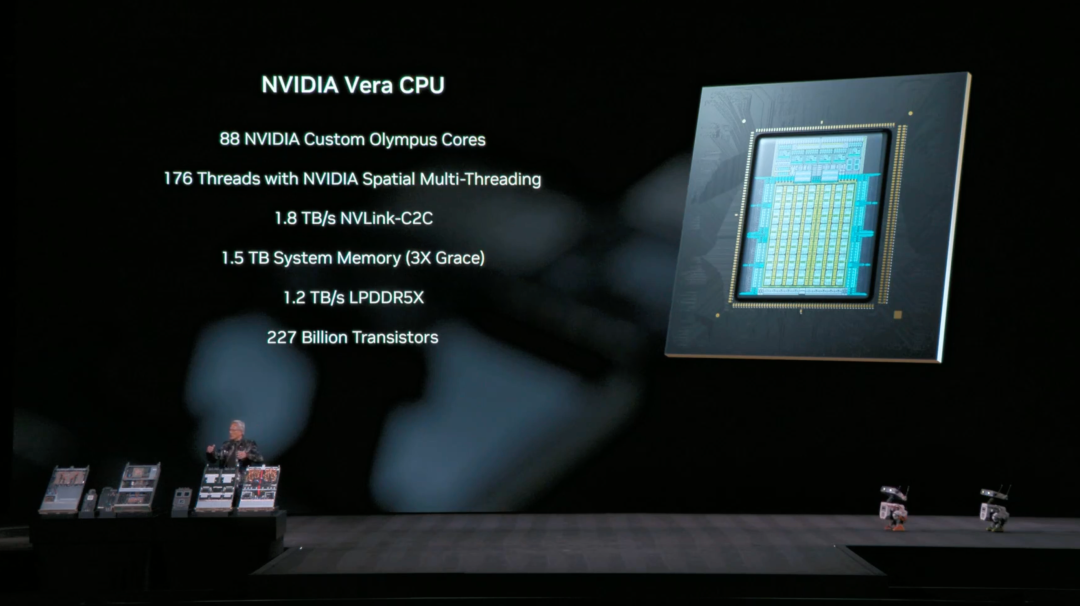

* NVIDIA Vera CPU

Designed for agentic reasoning, Vera is billed as the most energy-efficient CPU for large-scale AI factories. It has 88 NVIDIA Olympus cores, is fully compatible with Armv9.2, and supports ultra-fast NVLink-C2C interconnect, delivering strong performance, high bandwidth, and industry-leading energy efficiency for modern data center workloads.

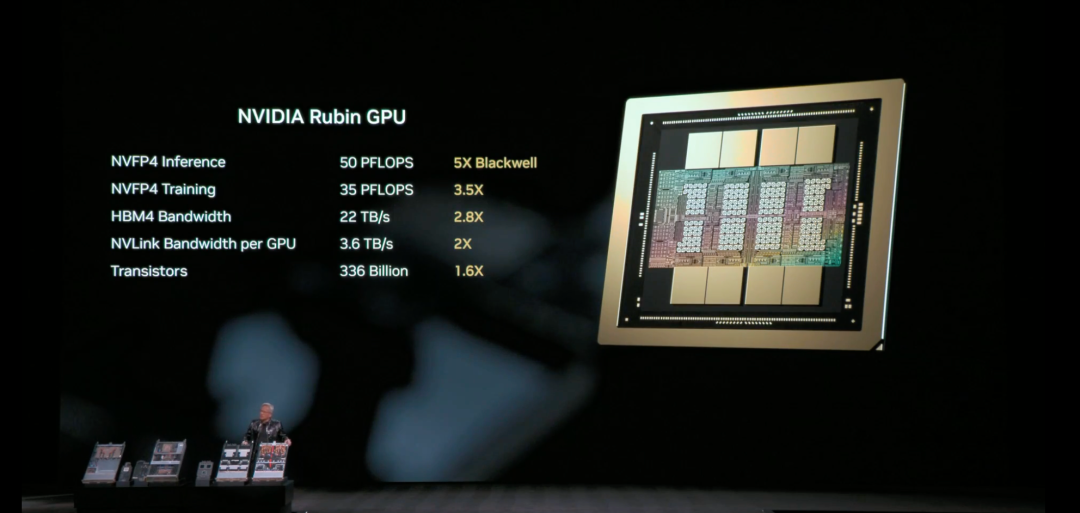

* NVIDIA Rubin GPU

Features a third-generation Transformer Engine with hardware-accelerated adaptive compression and delivers 50 PFLOPS of NVFP4 compute for AI inference.

* Third-Generation NVIDIA Confidential Computing

The Vera Rubin NVL72 is the first platform to deliver NVIDIA Confidential Computing at rack scale, securing data across the CPU, GPU, and NVLink domains and protecting the world’s largest proprietary models along with their training and inference workloads.

* Second-Generation RAS Engine

Real-time health monitoring, fault tolerance, and predictive maintenance spanning the GPU, CPU, and NVLink maximize system productivity, while a modular, cable-free tray design makes assembly and servicing up to 18x faster than on Blackwell.
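As a quick sanity check on the NVLink 6 figures, the rack-level total is consistent with the per-GPU number, assuming the 260 TB/s is simply the 3.6 TB/s per-GPU bandwidth summed across the 72 Rubin GPUs in a Vera Rubin NVL72 rack and rounded:

$$
B_{\text{rack}} = N_{\text{GPU}} \times B_{\text{GPU}} = 72 \times 3.6\ \text{TB/s} = 259.2\ \text{TB/s} \approx 260\ \text{TB/s}
$$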

Meanwhile, the Rubin platform introduces the NVIDIA Inference Context Memory Storage Platform, a new class of AI-native storage infrastructure designed for gigascale inference context scaling. Powered by NVIDIA BlueField-4, the platform enables efficient sharing and reuse of key-value (KV) cache data across AI infrastructure, improving responsiveness and throughput while enabling predictable, power-efficient scaling of agentic AI.
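To make "sharing and reuse of KV cache data" concrete, here is a minimal Python sketch of prefix-based KV-cache reuse, a common technique in LLM serving. It is an illustration under stated assumptions, not NVIDIA's API or the storage platform's actual design: `SharedKVCache`, `compute_kv_block`, and the 4-token block size are hypothetical, and the "K/V" values are placeholders. Requests that share a prompt prefix (for example, a common system prompt) hit blocks that are already cached instead of recomputing them.

```python
import hashlib
from dataclasses import dataclass, field

BLOCK_SIZE = 4  # tokens per KV block (hypothetical; production systems use larger blocks)

KVBlock = list[tuple[int, int]]  # placeholder (key, value) pairs, one per token


def block_key(prev_key: str, tokens: tuple[int, ...]) -> str:
    """Chain-hash a block together with the key of everything before it,
    so two prompts share a key only if their whole prefix matches."""
    h = hashlib.sha256()
    h.update(prev_key.encode())
    h.update(repr(tokens).encode())
    return h.hexdigest()


def compute_kv_block(tokens: tuple[int, ...]) -> KVBlock:
    """Stand-in for the expensive attention K/V computation during prefill."""
    return [(t * 2, t * 3) for t in tokens]


@dataclass
class SharedKVCache:
    """Hypothetical cache shared by all requests; not an NVIDIA API."""
    store: dict[str, KVBlock] = field(default_factory=dict)
    hits: int = 0
    misses: int = 0

    def prefill(self, prompt: list[int]) -> KVBlock:
        """Return K/V entries for every full block of `prompt`, computing only
        blocks not already cached. A trailing partial block is ignored here."""
        kv: KVBlock = []
        prev = ""
        n_full = (len(prompt) // BLOCK_SIZE) * BLOCK_SIZE
        for start in range(0, n_full, BLOCK_SIZE):
            tokens = tuple(prompt[start:start + BLOCK_SIZE])
            key = block_key(prev, tokens)
            if key in self.store:
                self.hits += 1  # reuse: another request already computed this prefix
            else:
                self.misses += 1
                self.store[key] = compute_kv_block(tokens)
            kv.extend(self.store[key])
            prev = key
        return kv


# Two requests sharing an 8-token "system prompt" (two full blocks).
cache = SharedKVCache()
system_prompt = list(range(8))
kv_a = cache.prefill(system_prompt + [100, 101, 102, 103])
kv_b = cache.prefill(system_prompt + [200, 201, 202, 203])
print(cache.hits, cache.misses)  # → 2 4
```

The chained hash is the key design choice: a block is reused only when everything before it also matches, which is what makes the cached K/V values valid for the new request.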

Although Rubin has not shipped yet, it has already won support from a host of industry leaders. In an official NVIDIA blog post, OpenAI CEO Sam Altman, Anthropic CEO Dario Amodei, Meta CEO Mark Zuckerberg, Elon Musk (in his role as xAI CEO), and the heads of Microsoft, Google, AWS, Dell, and other major tech companies all praised it. Musk put it bluntly: "Rubin will once again prove to the world that NVIDIA is the gold standard in the industry."

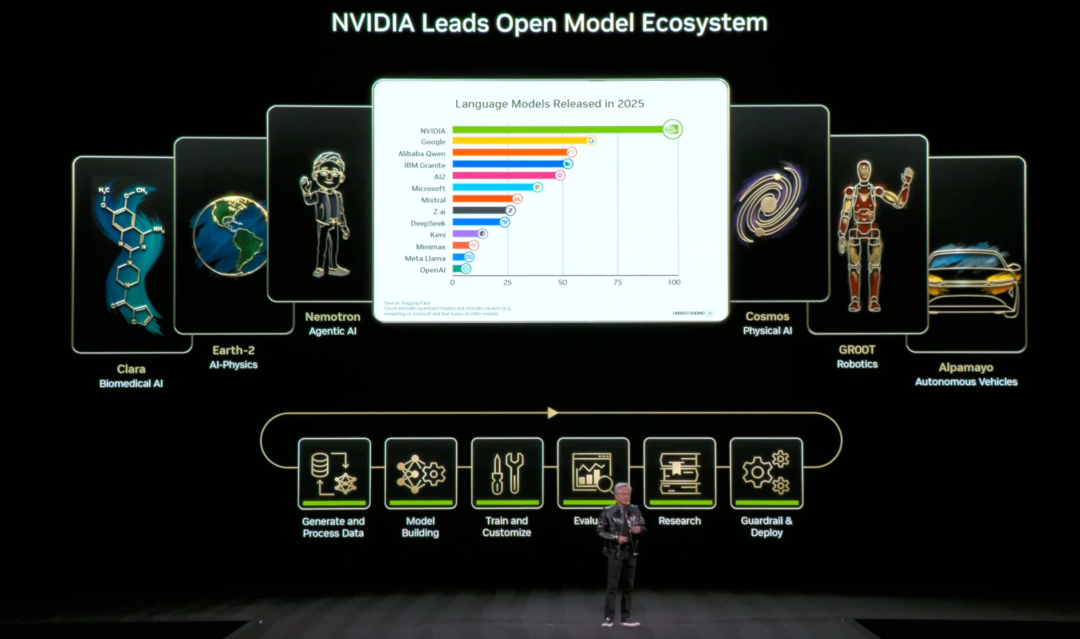

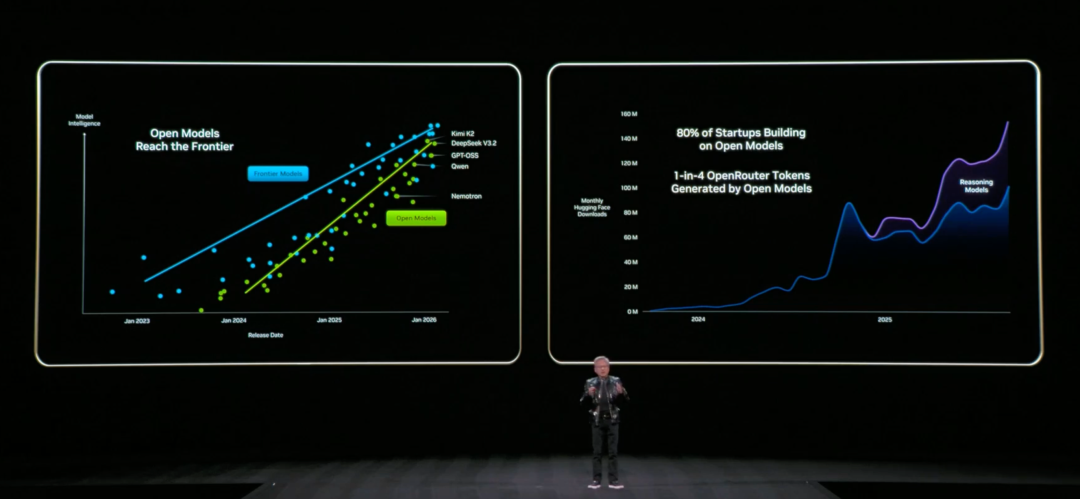

Diverse Open-Source Technologies: Agents, AI4S, Autonomous Driving, and Robotics

Besides the much-discussed Rubin platform, "open source" was another important keyword in Jensen Huang's speech.

First up is NVIDIA Nemotron for AI agents. Building on the previously released NVIDIA Nemotron 3 open models and data, NVIDIA launched new Nemotron models for speech, multimodal retrieval-augmented generation (RAG), and safety.

* Nemotron Speech

A set of leading open models, including a brand-new automatic speech recognition (ASR) model, that delivers low-latency, real-time speech recognition for live captioning and speech AI applications. Benchmarks from Daily and Modal show it running 10x faster than comparable models.

* Nemotron RAG

Includes a brand-new embedding model and a reranking vision language model (VLM) that deliver high-accuracy multilingual and multimodal data insights, significantly improving document search and information retrieval.

* Nemotron Safety

A family of models for making AI applications safer and more trustworthy, now including the Llama Nemotron content safety model, which supports more languages, and Nemotron PII, which detects sensitive data with high accuracy.
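The embed-then-rerank pattern behind Nemotron RAG can be illustrated with a minimal two-stage retrieval sketch. This is a toy under stated assumptions, not the Nemotron API: `embed` is a bag-of-words stand-in for a neural embedding model, `rerank_score` is a term-coverage stand-in for a reranking model, all function names are hypothetical, and the sketch is text-only. Stage 1 ranks every document by vector similarity; stage 2 applies a finer-grained (query, document) scorer only to the shortlist.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rerank_score(query: str, doc: str) -> float:
    """Toy stand-in for a reranker: scores the (query, doc) pair jointly,
    here as the fraction of distinct query terms that the doc covers."""
    q_terms = set(tokenize(query))
    if not q_terms:
        return 0.0
    return len(q_terms & set(tokenize(doc))) / len(q_terms)


def retrieve(query: str, corpus: list[str], k: int = 2, top_n: int = 1) -> list[str]:
    # Stage 1: cheap vector similarity over the whole corpus keeps k candidates.
    q_vec = embed(query)
    shortlist = sorted(corpus, key=lambda d: cosine(q_vec, embed(d)), reverse=True)[:k]
    # Stage 2: the pairwise scorer reorders only the shortlist.
    return sorted(shortlist, key=lambda d: rerank_score(query, d), reverse=True)[:top_n]


corpus = [
    "rubin rubin rubin rubin launch event",  # keyword-stuffed, off-topic
    "on rubin the inference cost per generated token drops sharply versus blackwell",
    "vera cpu uses olympus cores",
]
query = "rubin inference cost"
print(retrieve(query, corpus))
# → ['on rubin the inference cost per generated token drops sharply versus blackwell']
```

In this toy run, vector similarity alone ranks the keyword-stuffed first document highest, and the reranker promotes the document that actually covers every query term. Real rerankers are usually much costlier per document than vector search, which is why they only see the shortlist.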

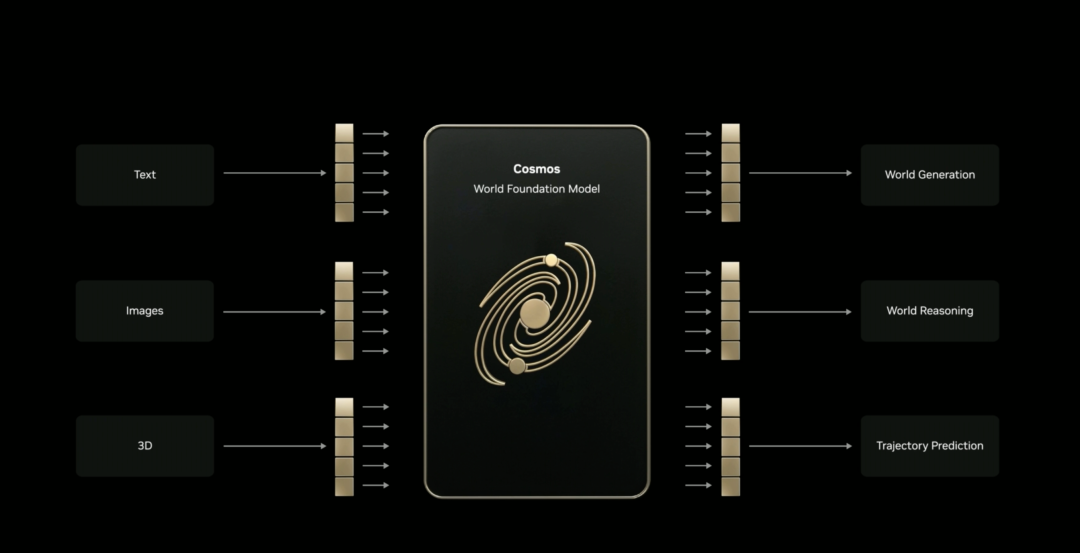

Second, NVIDIA updated its Cosmos models for physical AI and robotics:

* Cosmos Reason 2

A new, top-ranked reasoning VLM that helps robots and AI agents perceive, understand, and interact with the physical world more accurately.

* Cosmos Transfer 2.5 and Cosmos Predict 2.5

Generate synthetic video at scale across diverse environments and conditions.

Building on Cosmos, NVIDIA also released open-source models and workflows for different physical AI use cases:

* Isaac GR00T N1.6

An open reasoning vision-language-action (VLA) model for humanoid robots that enables full-body control and uses Cosmos Reason to strengthen reasoning and contextual understanding.

NVIDIA Blueprint for Video Search and Summarization

Part of the NVIDIA Metropolis platform, it provides a reference workflow for building visual AI agents that analyze large volumes of recorded and live video to improve operational efficiency and public safety.

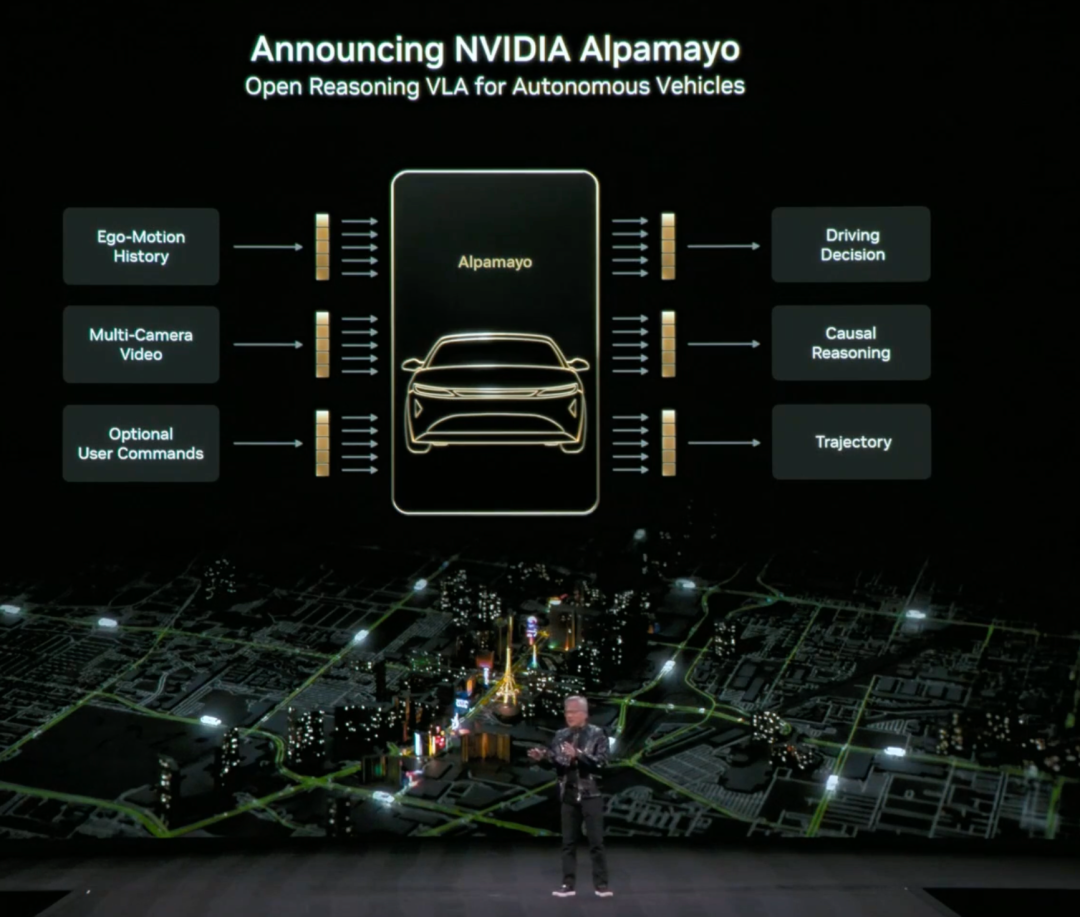

Third, for autonomous driving, NVIDIA open-sourced NVIDIA Alpamayo, a family of open models, simulation tools, and large-scale datasets.

* Alpamayo 1

The first open-source, large-scale reasoning VLA model for autonomous vehicles (AVs), enabling vehicles not only to understand their surroundings but also to explain their own behavior.

* AlpaSim

An open-source simulation framework for closed-loop training and evaluation of reasoning-based AV models across diverse environments and complex edge cases.

In addition, NVIDIA released the Physical AI Open Dataset, which contains more than 1,700 hours of real-world driving data spanning a wide range of geographic regions and environmental conditions. It covers many rare and complex real-world edge cases, which are crucial for advancing reasoning architectures.

Finally, for AI for science (AI4S), NVIDIA launched new Clara AI models, including:

* La-Proteina

Supports atom-level design of large proteins for scientific research and drug-candidate development, giving researchers new tools to study diseases previously considered "untreatable".

* ReaSyn v2

Brings manufacturing blueprints into the drug discovery process to help ensure that AI-designed drugs can actually be synthesized.

* KERMT

Predicts how drug candidates interact with the human body, enabling highly accurate computational safety testing early in development.

* RNAPro

Predicts the complex three-dimensional structures of RNA molecules, unlocking new potential for personalized medicine.

In addition, NVIDIA released a dataset containing 455,000 synthetic protein structures to help researchers build more accurate AI models.

Conclusion

As the Las Vegas spotlight once again falls on AI and the hardware beneath it, Jensen Huang's detailed tour of the Rubin platform and Lisa Su's major product launch tonight are about more than a new generation of chips or a performance leap. They are about defining the contours of AI's next stage: how compute is organized, how costs are driven down, how models truly move into inference, and how agents become deeply coupled with the real world.

CES 2026 is no longer just a spec battle among manufacturers but a collective bet on the shape of AI infrastructure. The focus of competition is clearly shifting from the models themselves to who can most efficiently and reliably support the large-scale deployment of intelligence.

References

1. https://nvidianews.nvidia.com/news/rubin-platform-ai-supercomputer

2. https://blogs.nvidia.com/blog/open-models-data-tools-accelerate-ai/