Command Palette

Search for a command to run...

IQuest-Coder-V1: A Programming Logic Enhancement Model Trained on Code Flow; Human Face Emotions: A multi-annotated Facial Emotion Recognition dataset.

Currently, although AI code generation tools are widely used, they often face challenges such as rigid generated code logic, insufficient contextual understanding, and difficulty in mimicking real development processes.Many models only learn from "static snapshots" of code snippets, lacking a deep understanding of why and how the code should be modified, resulting in limited usability of the generated code.

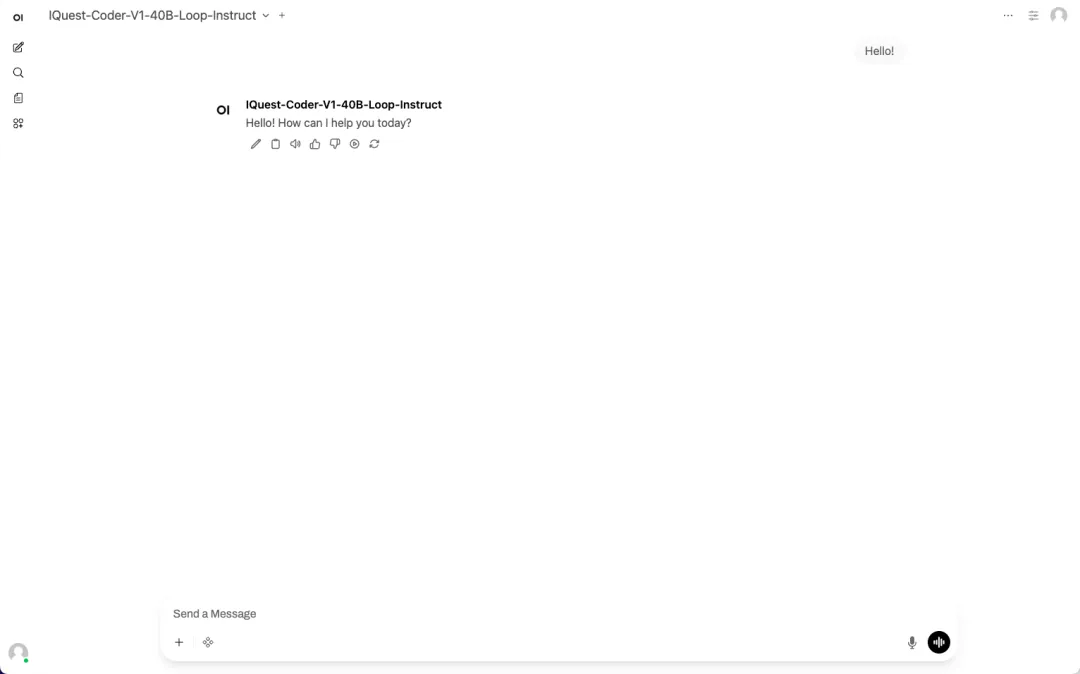

Based on this, Zhizhi Innovation Research Institute, under Jiukun Investment, released the IQuest-Coder-V1 code model series in January 2026.This model is built on the unique concept of "code flow". Its core innovation lies in enabling the model to learn from a massive amount of real code change history, allowing it to understand the dynamic process of software evolution like an experienced developer.Furthermore, the code generated by the model performs exceptionally well in terms of correctness, maintainability, and alignment with developer intent, and is better able to handle complex programming tasks that require multi-step reasoning.Its main version has 40 billion parameters and adopts a Loop architecture that allows for internal iterative code optimization and native support for 128K long contexts, which significantly improves its ability to handle complex programming tasks.

The "IQuest-Coder-V1 model" is now available on the HyperAI website. Give it a try!

Online use:https://go.hyper.ai/vk4K2

A quick overview of hyper.ai's official website updates from January 12th to January 16th:

* High-quality public datasets: 6

* Selection of high-quality tutorials: 3

* Community article interpretation: 3 articles

* Popular encyclopedia entries: 5

Top conferences with January deadlines: 8

Visit the official website:hyper.ai

Selected public datasets

1. Human Face Emotions Dataset

Human Face Emotions is an image classification dataset for facial expression recognition tasks. It aims to provide foundational training and evaluation data for research in computer vision and emotion recognition. The dataset is organized according to emotion categories, containing five categories of facial emotions, each with approximately 8,000+ facial image samples. The dataset uses individual facial images as the basic sample unit and is organized into folders based on emotion categories. The image data comes from multiple publicly available online platforms, covering different people, shooting conditions, and background scenes, demonstrating good diversity.

Direct use:https://go.hyper.ai/Z2ouP

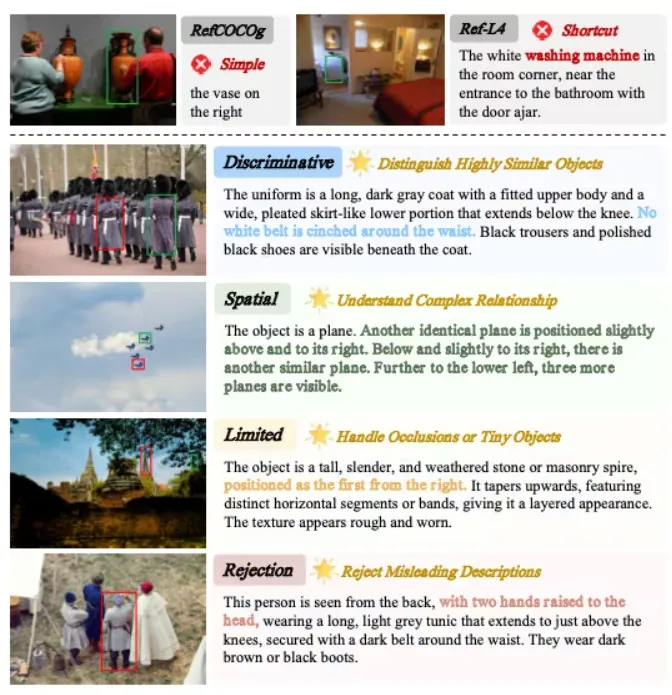

2. GroundingME Complex Scene Understanding Evaluation Dataset

GroundingME is a visual reference evaluation dataset for multimodal large language models (MLLMs) released in 2025 by Tsinghua University in collaboration with Xiaomi and the University of Hong Kong. It aims to systematically evaluate the model's ability to accurately map natural language to visual targets in real-world complex scenes, with particular attention to understanding and safety performance in ambiguous references, complex spatial relationships, small targets, occlusion, and unreferential situations.

Direct use:https://go.hyper.ai/nJSaK

3. Nemotron-Math-v2 Mathematical Inference Dataset

Nemotron-Math-v2 is a mathematical reasoning dataset released by NVIDIA Corporation in 2025. It is primarily used to train LLMs to perform structured mathematical reasoning, study the differences between tool-enhanced reasoning and pure language reasoning, and build long-context or multi-track reasoning systems.

Direct use:https://go.hyper.ai/6OKuq

4. HydroBASINS Global River Partition Dataset

HydroBASINS is a global river subdivision dataset based on the hydrological core layer of HydroSHEDS, providing seamless global river subdivisions at a spatial resolution of 15 arcseconds. This dataset is designed to support hydrological, ecological, and environmental analyses, providing consistent, hierarchical catchment boundaries and river network topologies. It is suitable for a variety of applications, including hydrology and water resources, climate and Earth system modeling, biodiversity and conservation programs, catchment environmental analysis, large-scale spatial modeling, and GIS workflows.

Direct use:https://go.hyper.ai/53vN4

5. Battery Failure Surfaces: A dataset simulating battery failures.

Battery Failure Surfaces is a battery failure simulation dataset designed to study the lifecycle and failure modes of batteries under different operating stresses. This physics-inspired synthetic dataset simulates the state changes of batteries during charge-discharge cycles and is suitable for risk modeling and safety boundary discovery.

Direct use:https://go.hyper.ai/hRapq

6. Global Green Energy Pulse Dataset

The Global Green Energy Pulse dataset is a dataset for renewable energy analysis in major cities around the world. This dataset aims to help researchers and policymakers understand the green energy potential of different cities, especially in the context of cities transitioning to net-zero economies, providing a rich data foundation for research in time series forecasting, geospatial analysis, and global energy optimization.

Direct use:https://go.hyper.ai/p8NAY

Selected Public Tutorials

1. Deploying IQuest-Coder-V1 using vLLM+Open WebUI

IQuest-Coder-V1 is an advanced AI model released by IQuestLab, focusing on code generation, understanding, and optimization. It offers various parameter scales (7B, 14B, 40B) and versions (Instruct, Thinking, Loop) to meet diverse development needs. Employing a "multi-stage code flow training" strategy, it learns from static code snippets, acquiring knowledge from the code evolution process, significantly improving its understanding of real-world development scenarios.

Run online:https://go.hyper.ai/vk4K2

2.vLLM+Open WebUI Deployment of QwenLong-L1.5

QwenLong-L1.5 is a series of long-context reasoning and memory management models launched by Alibaba Tongyi Lab. This tutorial uses QwenLong-L1.5-30B-A3B, a decoding Transformer model with approximately 30 billion parameters. It is obtained through systematic post-training based on the base model Qwen3-30B-A3B-Thinking and is released as open source on platforms such as Hugging Face. It employs a series of post-training techniques, including a long-context data synthesis pipeline, stable reinforcement learning for long sequences, and an ultra-long-context framework for memory enhancement, resulting in superior performance in long-context benchmarks. Furthermore, these capabilities have been transferred to general domain tasks, including mathematical reasoning, tool use, and long-dialogue consistency.

Run online:https://go.hyper.ai/6mD9U

3. Qwen-Image-2512: Generating more realistic portraits and natural landscapes

Qwen-Image-2512 is a foundational text-to-image model in the Qwen-Image series, an upgraded version released at the end of the year. This model is primarily designed for high-quality image generation and complex multimodal content expression scenarios. Compared to previous versions, Qwen-Image-2512 has undergone systematic optimization in several key dimensions, significantly improving the overall realism and usability of the generated images. Specifically, the naturalness of portrait generation is significantly enhanced, with facial structure, skin texture, and lighting relationships more closely resembling realistic photographic effects. In natural scenes, the model can generate more detailed terrain textures, vegetation details, and high-frequency information such as animal fur. Simultaneously, the model's ability to generate and format text within images has also been improved, enabling more stable presentation of readable text and complex text layouts.

Run online:https://go.hyper.ai/rODFG

Community article interpretation

1. Online Tutorial | Qwen-Image-2512 Officially Open Source: Say Goodbye to the Plastic Look of AI Raw Images, Achieve Realistic Hair Rendering with Just Text Commands

While open-source image generation models can quickly produce images, they often fall short in achieving ultimate realism, especially when handling portraits and complex natural scenes. Generated faces often appear "plastic" or have blurred features, and skin lacks realistic texture. For natural landscapes, models often appear stiff when representing the delicate textures of water and vegetation. To address this, Alibaba's Tongyi Lab recently open-sourced its next-generation image generation model, Qwen-Image-2512. Its features are very distinct, focusing on a leap forward in three core capabilities: more realistic human textures; more delicate natural textures; and stronger rendering of complex text.

View the full report:https://go.hyper.ai/S3HJE

2. Accuracy reaches 97%! Princeton University and others propose MOFSeq-LMM, efficiently predicting whether MOFs can be synthesized.

Free energy is a crucial indicator for assessing the thermodynamic stability and synthesizability of MOFs, but traditional computational methods are prohibitively expensive on large-scale MOF datasets, hindering rapid screening. To address this challenge, a joint research team from Princeton University and the Colorado School of Mines proposed an efficient machine learning-based prediction method. This method utilizes a Large Language Model (LLM) to directly predict the free energy from the structural sequences of MOFs, significantly reducing computational costs and enabling high-throughput, scalable MOF thermodynamic assessment. The model exhibits high versatility without requiring retraining: its F1 score reaches 97% when determining whether the MOF free energy is above or below an empirically based synthetic feasibility threshold.

View the full report:https://go.hyper.ai/gBEeA

3. ChatGPT boasts hundreds of millions of users but a paid conversion rate of less than 10%. How can AI be converted into sustainable profits?

Since 2025, the "gap" between massive investments in artificial intelligence and commercial returns has increasingly become a focal point of public opinion. On the one hand, global tech giants continue to bet on AI infrastructure and algorithm development, endorsing AI as the engine of the next industrial revolution; on the other hand, capital markets, macro analysts, and independent observers have also begun to closely monitor the stock performance of AI companies. Against this backdrop, the assessment of AI investment and commercial returns has shifted from technological elitism to a pragmatic financial examination, primarily focusing on the two core sectors of B2B and B2C that bear huge amounts of capital.

View the full report:https://go.hyper.ai/hE5yN

Popular Encyclopedia Articles

1. Frames per second (FPS)

2. Bidirectional Long Short-Term Memory (Bi-LSTM)

3. Embodied Navigation

4. RewardMap, a multi-stage reinforcement learning framework

5. Guess – Think – Answer

Here are hundreds of AI-related terms compiled to help you understand "artificial intelligence" here:

One-stop tracking of top AI academic conferences:https://go.hyper.ai/event

The above is all the content of this week’s editor’s selection. If you have resources that you want to include on the hyper.ai official website, you are also welcome to leave a message or submit an article to tell us!

See you next week!