Command Palette

Search for a command to run...

Open Source, Best Value! Mistral AI Releases the Ministral 3 Series of Models, Integrating Multimodal Understanding and Intelligent Execution Capabilities; From high-dynamic Dance to Everyday Behavior, the X-Dance Dataset Unlocks multi-dimensional Testing for Human Animation generation.

recently,The Mistral AI team has open-sourced its high-efficiency model series, Ministral 3, offering three parameter sizes: 3B, 8B, and 14B.Each parameter comes in three versions: Basic, Command, and Inference, all of which are licensed under the Apache 2.0 license.

The Ministral-3-14B, as the model with the largest parameters in the series, offers the most advanced performance in its class, comparable to the even larger Mistral Small 3.2-24B model. It is optimized for local deployment, maintaining high performance on small, resource-constrained devices.

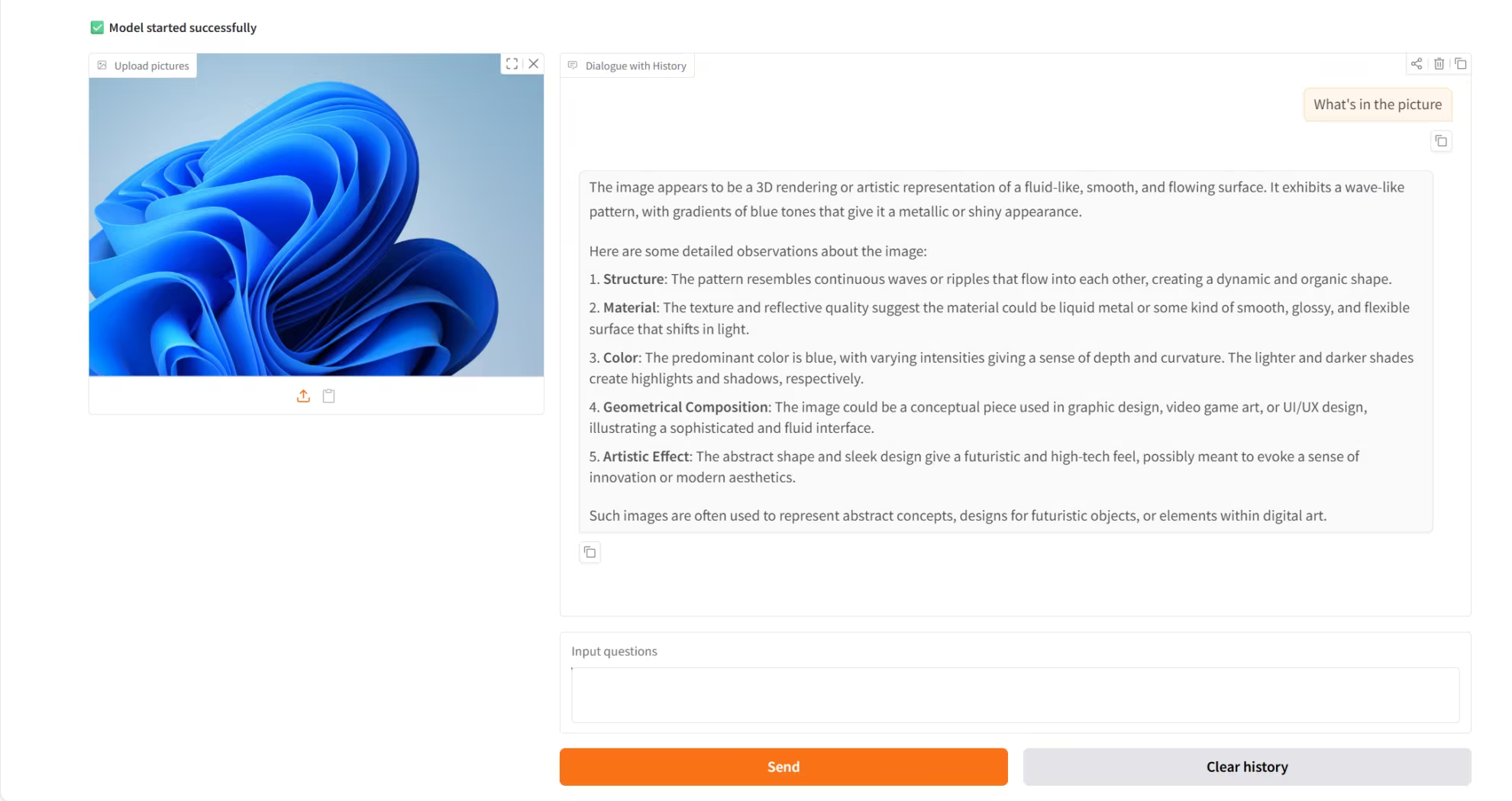

Ministral-3-14B integrates multimodal understanding and intelligent execution capabilities:In terms of vision, it can directly analyze image content and generate text content based on visual information; at the same time, its multilingual support covers dozens of mainstream languages, including English, Chinese, Japanese, etc. The model relies on its powerful 256K context window, which provides solid support for handling complex, long sequence tasks.

The HyperAI website now offers a one-click deployment of the Ministral-3-14B Instruct. Give it a try!

Online use:https://go.hyper.ai/EGIY2

A quick overview of hyper.ai's official website updates from December 1st to December 5th:

* High-quality public datasets: 5

* High-quality tutorial selection: 5

* This week's recommended papers: 5

* Community article interpretation: 5 articles

* Popular encyclopedia entries: 5

Top conferences with December deadlines: 1

Visit the official website:hyper.ai

Selected public datasets

1. UniCode Evolutionary Algorithm Problem Generation Dataset

UniCode is an automated dataset of algorithm problems and test cases built using an evolutionary generation strategy. It aims to replace traditional static, manually generated problem sets, providing more diverse, challenging, and robust programming problem resources. Through a systematic problem generation and verification pipeline, this dataset constructs structured, challenging, and uncontaminated problem and test data, suitable for algorithm research, code generation model evaluation, and competition training.

Direct use:https://go.hyper.ai/YBBcI

2. VAP-Data Visual Motion Performance Dataset

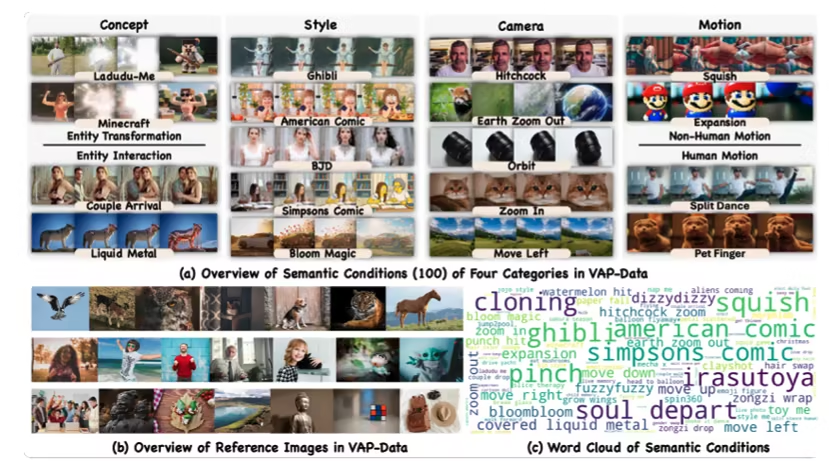

VAP-Data, jointly released by ByteDance and the Chinese University of Hong Kong, is currently the largest semantically controlled video generation dataset. It aims to provide high-quality training and evaluation benchmarks for controlled video generation, controlled motion synthesis, and multimodal video models. The dataset contains over 90,000 carefully curated paired samples, covering 100 fine-grained semantic conditions across four semantic categories: concept, style, action, and shot. Each semantic category includes multiple sets of mutually aligned video instances.

Direct use:https://go.hyper.ai/wUrHs

3. Fungi MultiClass Microscopic Fungal Microscopic Image Dataset

Fungi MultiClass Microscopic is a high-quality microscopic image dataset for image classification and deep learning research, designed to provide reliable training and evaluation data resources for fields such as medical mycology and agricultural pathology diagnosis.

Direct use:https://go.hyper.ai/ZHUaY

4. X-Dance Image-Driven Dance Motion Dataset

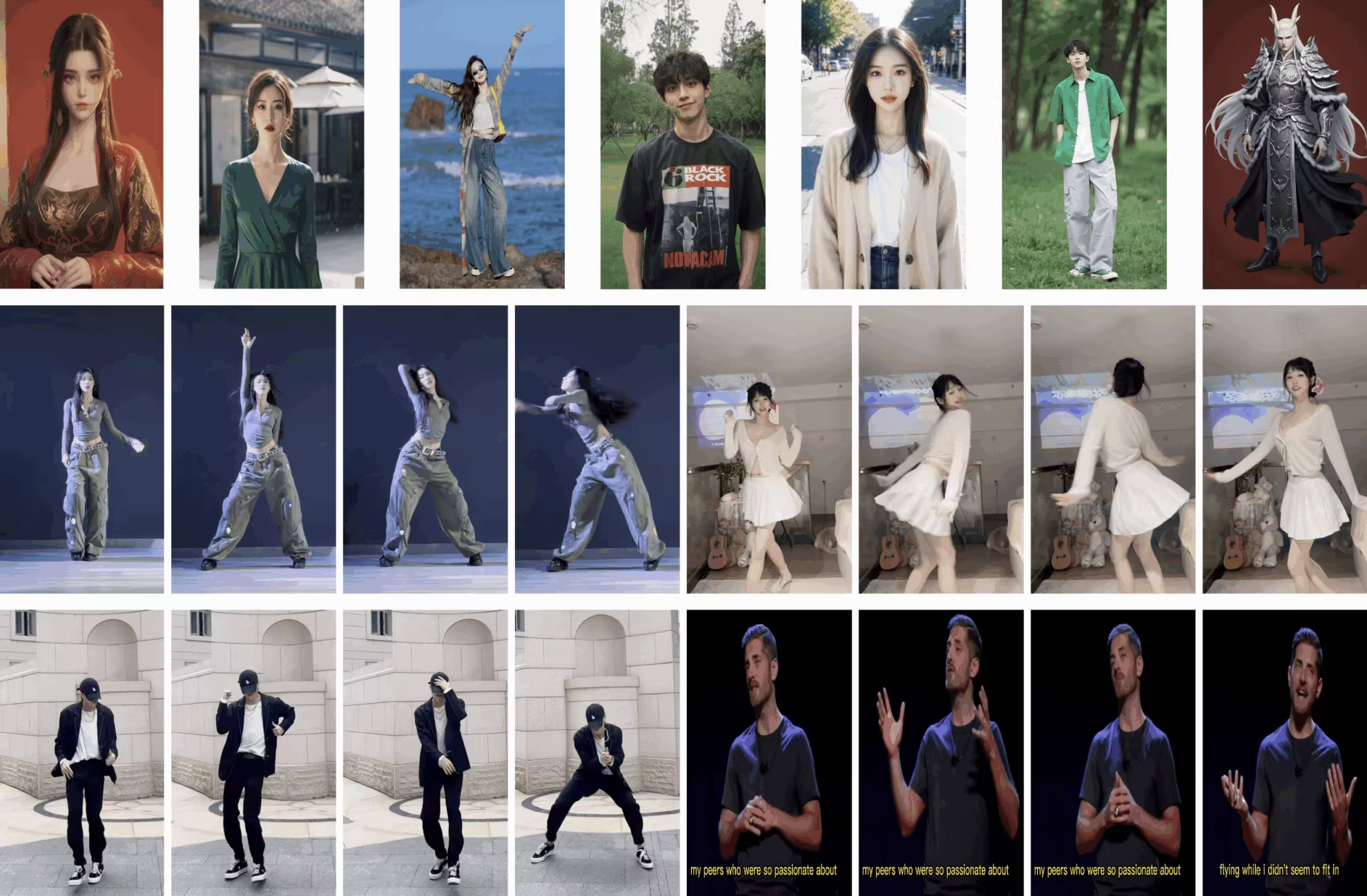

X-Dance is a test dataset released by Nanjing University in collaboration with Tencent and the Shanghai Artificial Intelligence Laboratory. It is specifically designed for image-to-video animation generation and aims to evaluate the robustness and generalization ability of models in real-world scenarios when dealing with challenges such as identity preservation, temporal coherence, and spatiotemporal misalignment.

Direct use:https://go.hyper.ai/QXsNo

5. 3EED Language-Driven 3D Understanding Dataset

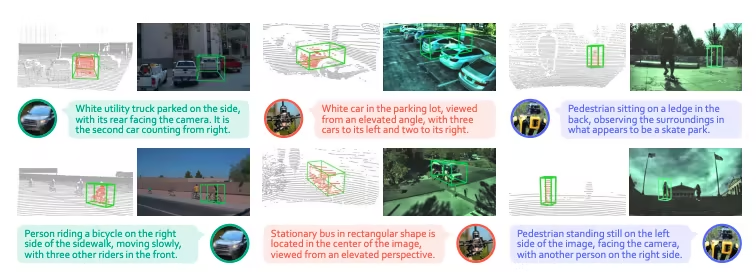

3EED is a multi-platform, multi-modal 3D visual grounding dataset released by Hong Kong University of Science and Technology (Guangzhou) in conjunction with Nanyang Technological University, Hong Kong University of Science and Technology and other institutions. It has been accepted by NeurIPS 2025 and aims to support models in completing language-driven 3D target localization tasks in real outdoor scenes, and to comprehensively evaluate the cross-platform robustness and spatial understanding capabilities of the models.

Use it directly: https://go.hyper.ai/gC8Fq

Selected Public Tutorials

1. A 3D Christmas tree based on gesture recognition

3D Christmas Tree is an innovative project released by moleculemmeng020425. It delivers an immersive, cinematic visual experience. Built on React and Three.js (R3F), the project utilizes advanced AI gesture recognition technology, allowing users to easily control the shape of the Christmas tree (aggregating and dispersing) and freely rotate the viewpoint using gestures.

Run online:https://go.hyper.ai/LpApP

2. One-click deployment of Ministry-3-14B-Instruct

Ministral-3-14B-Instruct-2512 is a multimodal model released by Mistral AI. It supports multimodal (text and image) and multilingual capabilities, offering high performance and cost-effectiveness. Combined with optimization technologies from partners like NVIDIA, the model can run efficiently on various hardware and is suitable for edge computing, enterprise deployments, and other scenarios, providing developers with powerful tools to build and deploy AI applications.

Run online:https://go.hyper.ai/EGIY2

3. SAM3: Visual Segmentation Model

SAM3 is an advanced computer vision model developed by Meta AI. This model can detect, segment, and track objects in images and videos using text, examples, and visual cues. It supports open-vocabulary phrase input, possesses powerful cross-modal interaction capabilities, and can correct segmentation results in real time. SAM3 delivers superior performance in image and video segmentation tasks, outperforming existing systems by twice as much, and supports zero-shot learning.

Run online:https://go.hyper.ai/PEaVo

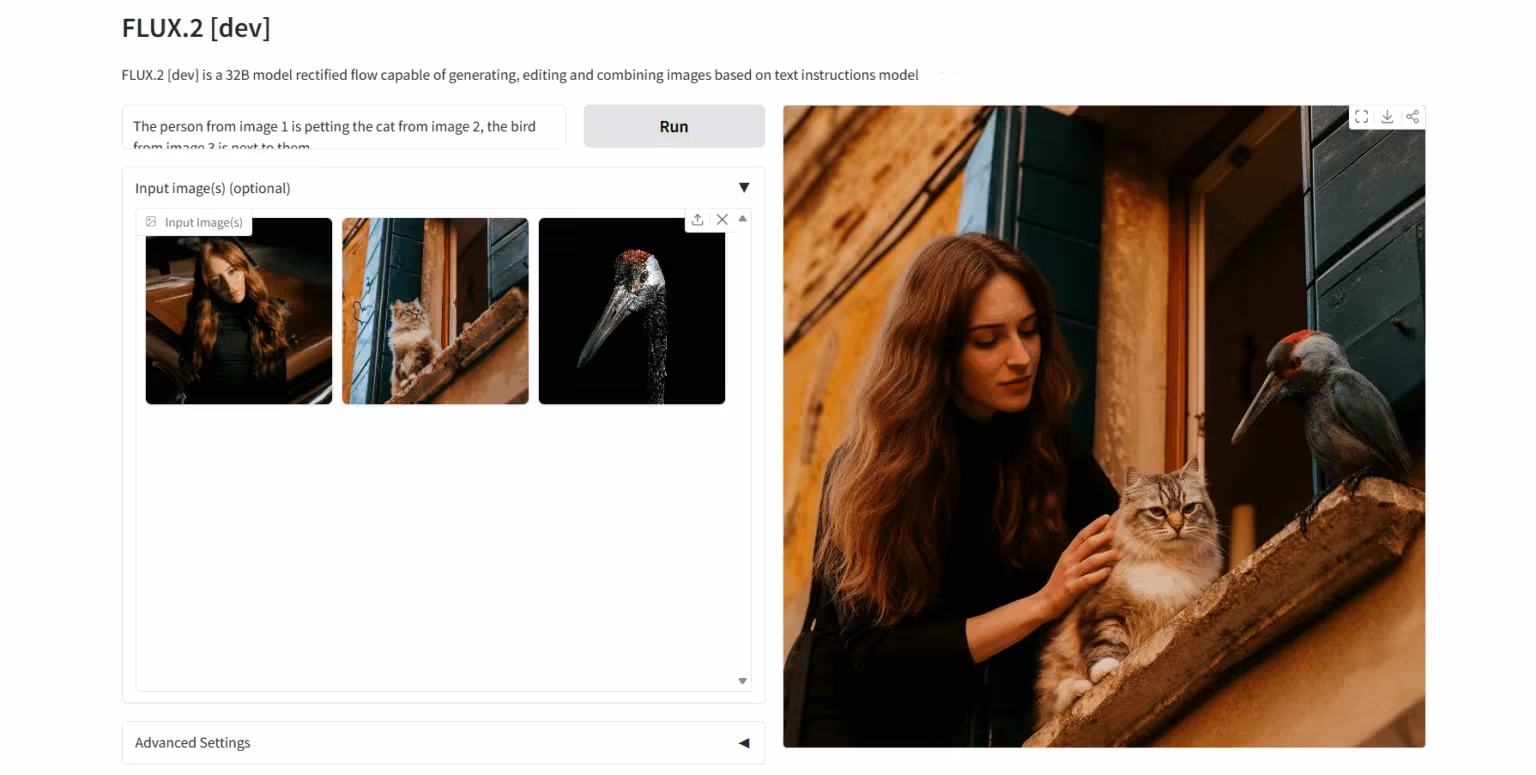

4. FLUX.2-dev: Image Generation and Editing Model

FLUX.2 is an AI image model developed by Black Forest Labs. It is designed specifically for real-world creative workflows. The model supports multi-image references of up to 10 images, generating high-quality images up to 4MP resolution with exceptional detail and text rendering capabilities. Combining a visual language model with a stream transformer architecture, the model significantly improves real-world knowledge understanding and image generation quality, driving open innovation and widespread application of visual intelligence technologies.

Run online:https://go.hyper.ai/4abhg

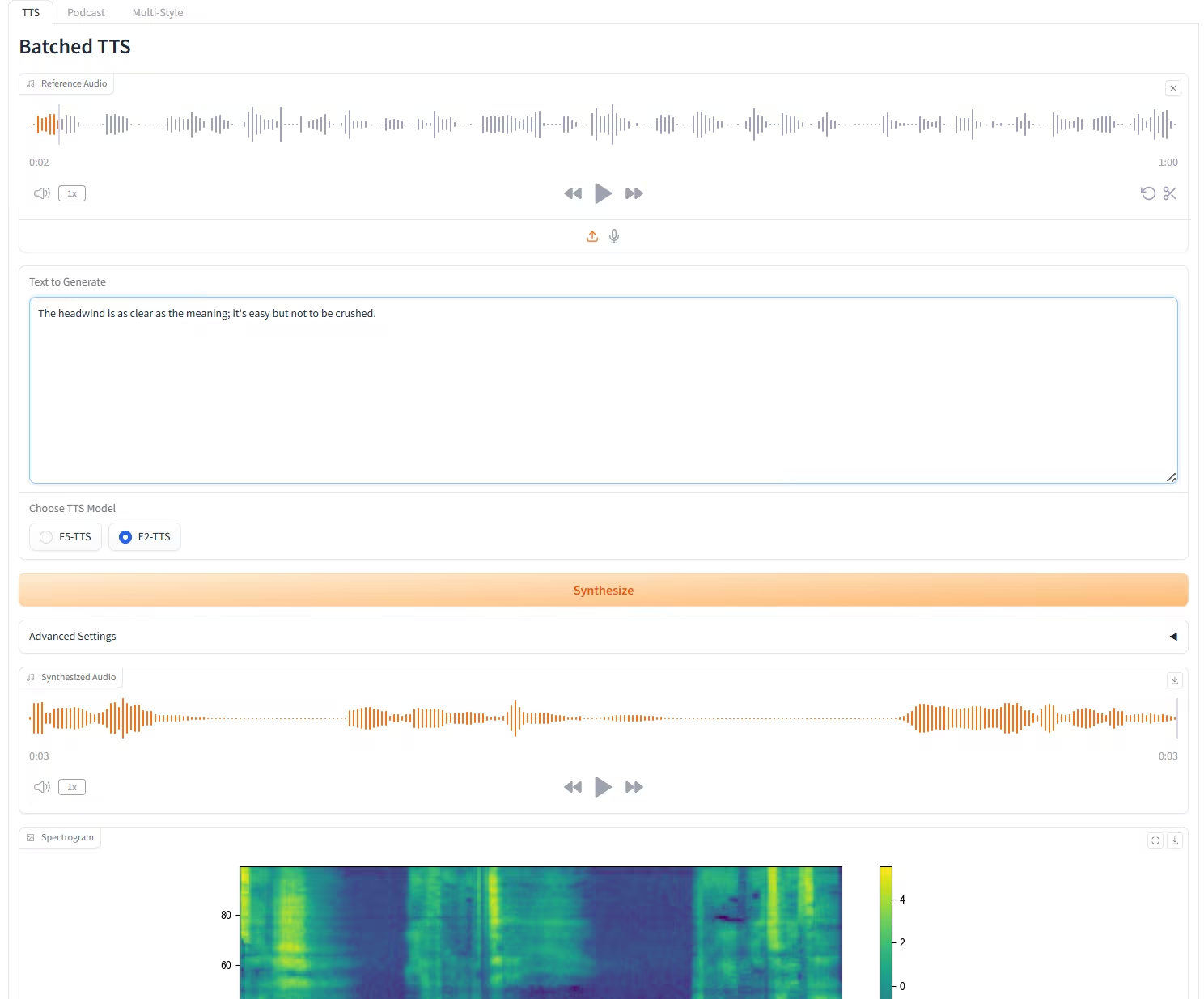

5. The F5-E2 TTS can clone any tone in just 3 seconds.

F5-TTS is a high-performance text-to-speech (TTS) system jointly open-sourced by Shanghai Jiao Tong University, Cambridge University, and Geely Automobile Research Institute (Ningbo) Co., Ltd. It is based on a non-autoregressive generation method using stream matching, combined with Diffusion Transformer (DiT) technology. This system can quickly generate natural, fluent, and faithful speech from the original text through zero-shot learning without additional supervision. It supports multilingual synthesis, including Chinese and English, and can effectively synthesize speech from long texts.

Run online:https://go.hyper.ai/8YCMD

This week's paper recommendation

1. From Code Foundation Models to Agents and Applications: A Comprehensive Survey and Practical Guide to Code Intelligence

This study systematically integrates and provides a comprehensive set of integrated analysis and practice guidelines (including a series of analytical and exploratory experiments) to explore the complete lifecycle of code-based LLMs, covering data construction, pre-training, prompting paradigms, code pre-training, supervised fine-tuning, reinforcement learning, and the construction of autonomous programming agents.

Paper link:https://go.hyper.ai/xvPZN

2. DeepSeek-V3.2: Pushing the Frontier of Open Large Language Models

This paper introduces DeepSeek-V3.2, a model that achieves superior inference capabilities and agent performance while maintaining high computational efficiency. The key technological breakthroughs of DeepSeek-V3.2 mainly include the following three aspects: the sparse attention mechanism DeepSeek Sparse Attention (DSA), a scalable reinforcement learning framework, and a large-scale agent task synthesis pipeline.

Paper link:https://go.hyper.ai/pVyE9

3. LongVT: Incentivizing “Thinking with Long Videos” via Native Tool Calling

This paper proposes LongVT, an end-to-end intelligent body framework that enables "deep thinking about long videos" through an interleaved multimodal chain of tool-thought. It leverages the inherent temporal positioning capabilities of LMMs as a native video trimming tool, precisely focusing on specific video segments and performing finer-grained resampling of video frames.

Paper link:https://go.hyper.ai/ho70t

4. Z-Image: An Efficient Image Generation Foundation Model with Single-Stream Diffusion Transformer

This paper proposes Z-Image, a highly efficient generative model with 6 billion parameters based on the Scalable Single-Stream Diffusion Transformer (S3-DiT) architecture, challenging the "scale-only" paradigm. Building upon this, researchers further developed the Z-Image-Turbo model using a few-step distillation scheme combined with reward post-training. This model achieves sub-second inference latency on enterprise-grade H800 GPUs while remaining compatible with consumer-grade hardware (less than 16GB of VRAM), significantly lowering the deployment threshold.

Paper link:https://go.hyper.ai/qqSwp

5. Qwen3-VL Technical Report

This article introduces Qwen3-VL, the most powerful visual language model in the Qwen series to date, demonstrating outstanding performance across a wide range of multimodal benchmarks. This model natively supports interleaved contexts of up to 256K tokens, seamlessly integrating text, image, and video information. The model family includes dense architectures (2B/4B/8B/32B) and hybrid expert architectures (30B-A3B/235B-A22B) to accommodate latency and quality tradeoffs in different scenarios.

Paper link:https://go.hyper.ai/8HkMJ

More AI frontier papers:https://go.hyper.ai/iSYSZ

Community article interpretation

1. Reshaping the predictive power of disordered protein assemblies: NVIDIA, MIT, Oxford University, University of Copenhagen, Peptone, and others release generative models and new benchmarks.

A joint team comprised of Peptone, a UK-based protein analysis technology developer, NVIDIA, and MIT has achieved two key breakthroughs. The first is the PeptoneBench systematic evaluation framework: this framework integrates multi-source experimental data from SAXS, NMR, RDC, and PRE, and combines statistical methods such as maximum entropy reweighting to achieve rigorous quantitative comparison between experimental observations and theoretical predictions. The second is the generative model PepTron: trained on an expanded synthetic IDR dataset, it specifically enhances the modeling ability for disordered regions, enabling it to better capture the conformational diversity of disordered proteins.

View the full report:https://go.hyper.ai/YBd9t

2. Online Tutorial | FLUX.2, the new state-of-the-art in image generation, can reference up to 10 images simultaneously, achieving extremely high consistency in character/style.

Black Forest Labs, after a long period of silence, has made a comeback by open-sourcing its next-generation image generation and editing model, FLUX.2. FLUX.1, released in 2024, achieved near-realistic results when generating images of people, especially real people. Now, FLUX.2's upgrade reaches new heights in image quality and creative flexibility, achieving state-of-the-art (SOTA) levels in instruction understanding, visual quality, detail rendering, and output diversity.

View the full report:https://go.hyper.ai/wLDRW

3. Event Preview | Shanghai Innovation Lab, TileAI, Huawei, and Advanced Compiler Lab gather in Shanghai; TVM, TileRT, PyPTO, and Triton showcase their unique strengths.

The 8th Meet AI Compiler technical salon will be held on December 27th at the Shanghai Innovation Academy. This session features experts from the Shanghai Innovation Academy, the TileAI community, Huawei HiSilicon, and the Advanced Compiler Lab. They will share insights across the entire technology chain, from software stack design and operator development to performance optimization. Topics will include cross-ecosystem interoperability of TVM, optimization of PyPTO's fusion operators, low-latency systems with TileRT, and multi-architecture acceleration with Triton, presenting a complete technical path from theory to implementation.

View the full report:https://go.hyper.ai/x6po9

4. Stanford, Peking University, UCL, and UC Berkeley collaborated to use CNN to accurately identify seven rare lenticular samples from 810,000 quasars.

A team comprised of numerous research institutions, including Stanford University, SLAC National Accelerator Laboratory, Peking University, Brera Observatory of the Italian National Institute for Astrophysics, University College London, and University of California, Berkeley, has developed a data-driven workflow to identify quasars that act as strong gravitational lenses in the spectral data of DESI DR1, greatly expanding the previously small sample size of quasars.

View the full report:https://go.hyper.ai/6s2FB

5. With only 2% reached, Sam Altman's bet on human identity verification infrastructure faces a global regulatory dilemma.

In an era where the authenticity of AI is difficult to discern, Sam Altman and Alex Blania are building a global "human verification" system using iris recognition, but Tools for Humanity's expansion is facing immense pressure. The Philippines has suspended its data services citing privacy and undue influence, and several other countries have initiated reviews. The gap between their "one billion users" vision and their current mere 17.5 million users continues to widen. Despite ample funding and a top-tier team, privacy and regulatory concerns will remain a long-term concern for the future of Tools for Humanity.

View the full report: https://go.hyper.ai/KL1Dq

Popular Encyclopedia Articles

1. DALL-E

2. HyperNetworks

3. Pareto Front

4. Bidirectional Long Short-Term Memory (Bi-LSTM)

5. Reciprocal Rank Fusion

Here are hundreds of AI-related terms compiled to help you understand "artificial intelligence" here:

Top conference with a December deadline

One-stop tracking of top AI academic conferences:https://go.hyper.ai/event

The above is all the content of this week’s editor’s selection. If you have resources that you want to include on the hyper.ai official website, you are also welcome to leave a message or submit an article to tell us!

See you next week!

About HyperAI

HyperAI (hyper.ai) is the leading artificial intelligence and high-performance computing community in China.We are committed to becoming the infrastructure in the field of data science in China and providing rich and high-quality public resources for domestic developers. So far, we have:

* Provide domestic accelerated download nodes for 1800+ public datasets

* Includes 600+ classic and popular online tutorials

* Interpretation of 200+ AI4Science paper cases

* Supports 600+ related terms search

* Hosting the first complete Apache TVM Chinese documentation in China

Visit the official website to start your learning journey: