AI Paper Weekly Report | NVIDIA Open Source Models / OpenAI Benchmarks / Agent Systems / Long Context Inference... A Quick Roundup of AI Updates

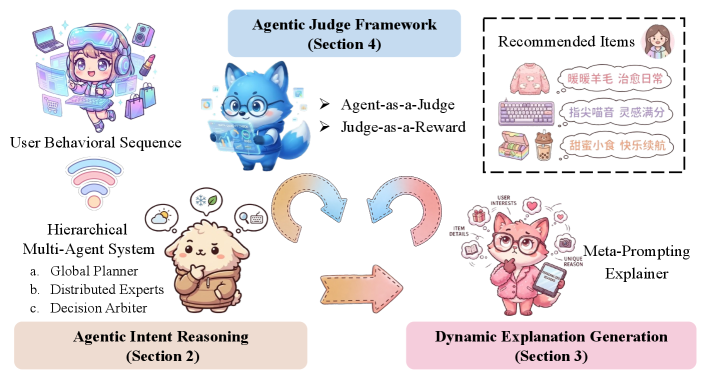

Large Language Models (LLMs) have shown significant potential in transforming recommender systems from implicit behavioral pattern matching to explicit intent inference. RecGPT-V1 successfully pioneered this paradigm by integrating LLM-based inference into user interest mining and item tag prediction, but it suffers from four fundamental limitations:

* Low computational efficiency and cognitive redundancy across multiple reasoning paths;

* Insufficient interpretability in fixed template generation;

* Limited applicability within the supervised learning paradigm;

* Simple, outcome-oriented evaluation that falls short of human standards.

To overcome these limitations, Alibaba's research team has released the latest version, RecGPT-V2. This iteration includes four core innovations:

* Construct a hierarchical multi-agent system;

* Propose a meta-prompting framework;

* Introduce a constraint-based reinforcement learning mechanism;

* Design an Agent-as-a-Judge evaluation framework.

RecGPT-V2 not only verifies the technical feasibility of intent reasoning based on large language models, but also demonstrates its commercial viability in large-scale industrial scenarios, bridging the gap between cognitive exploration and industrial application.

Paper link: https://go.hyper.ai/wftNU

Latest AI Papers: https://go.hyper.ai/hzChC

To help more users keep up with the latest academic developments in artificial intelligence, HyperAI's official website (hyper.ai) has launched a "Latest Papers" section, which is updated daily with cutting-edge AI research papers. Here are 5 popular AI papers we recommend. Let's take a quick look at this week's cutting-edge AI achievements ⬇️

This Week's Paper Recommendations

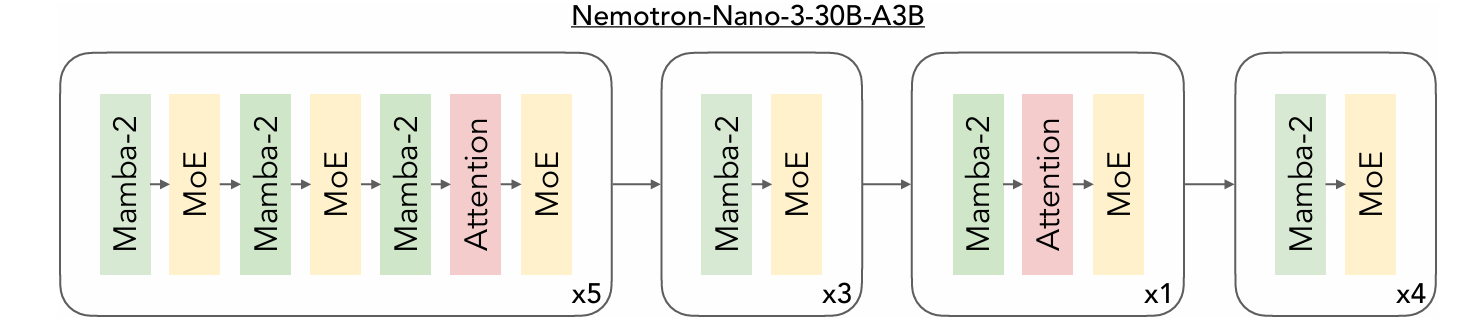

1. Nemotron 3 Nano: Open, Efficient Mixture-of-Experts Hybrid Mamba-Transformer Model for Agentic Reasoning

This paper introduces Nemotron 3 Nano 30B-A3B, a hybrid Mamba-Transformer language model based on a Mixture-of-Experts architecture. Nemotron 3 Nano was pre-trained on 25 trillion text tokens, including more than 3 trillion new unique tokens compared to Nemotron 2, followed by supervised fine-tuning and large-scale reinforcement learning in diverse environments. The model significantly improves agentic behavior, reasoning ability, and dialogue interaction, and supports context lengths of up to 1 million tokens.

Paper link: https://go.hyper.ai/LtmY3
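
For readers unfamiliar with Mixture-of-Experts, here is a minimal sketch of top-k expert routing. It assumes the common naming convention in which a suffix such as "30B-A3B" means roughly 30B total parameters with about 3B active per token. It is not Nemotron 3 Nano's actual router: the expert count, the scalar "experts", and the function names `route_top_k` and `moe_forward` are hypothetical and used only for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the outputs of only the selected experts."""
    weights = route_top_k(router_logits, k)
    output = sum(w * experts[i](x) for i, w in weights.items())
    return output, sorted(weights)


# Hypothetical toy setup (an assumption, not the paper's configuration):
# 8 scalar "experts", of which only 2 are evaluated per token.
NUM_EXPERTS, TOP_K = 8, 2
experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
output, active = moe_forward(1.0, experts, logits, TOP_K)
print(f"active experts: {active} ({TOP_K}/{NUM_EXPERTS} evaluated)")
```

Because only the selected experts run for each token, the compute per token tracks the active parameter count rather than the total, which is the usual motivation for this kind of design.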

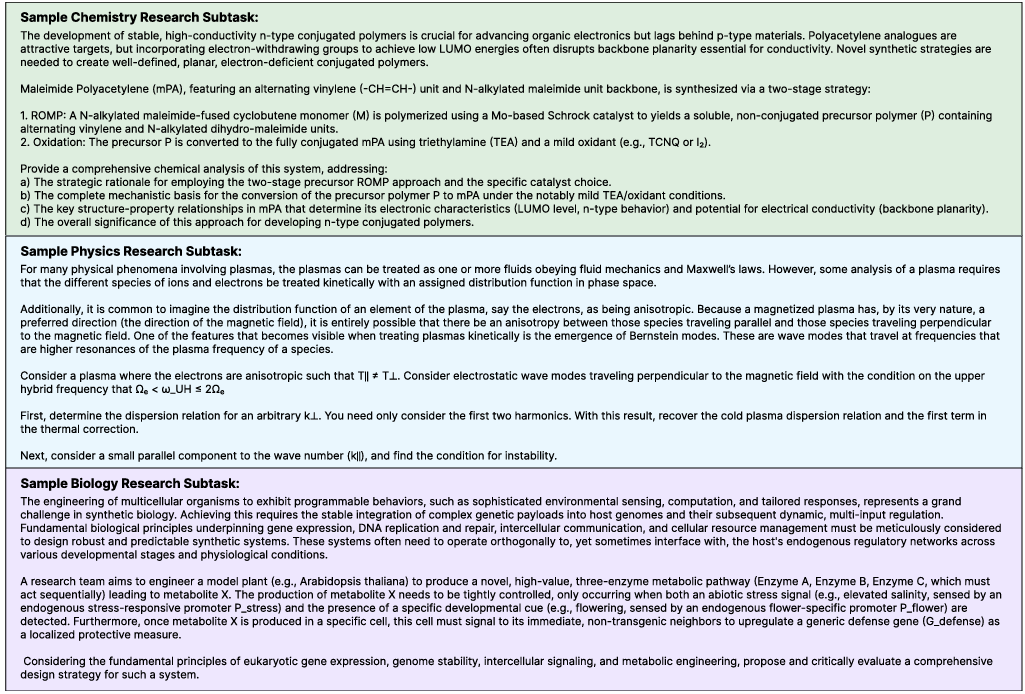

2. FrontierScience

This paper proposes FrontierScience, a benchmark for evaluating AI on expert-level scientific reasoning. FrontierScience consists of two tracks: (1) the Olympiad track, which covers problems from international olympiads (IPhO, IChO, and IBO); and (2) the Research track, which includes open-ended, PhD-level problems representing typical sub-problems in scientific research.

Paper link: https://go.hyper.ai/XanPc

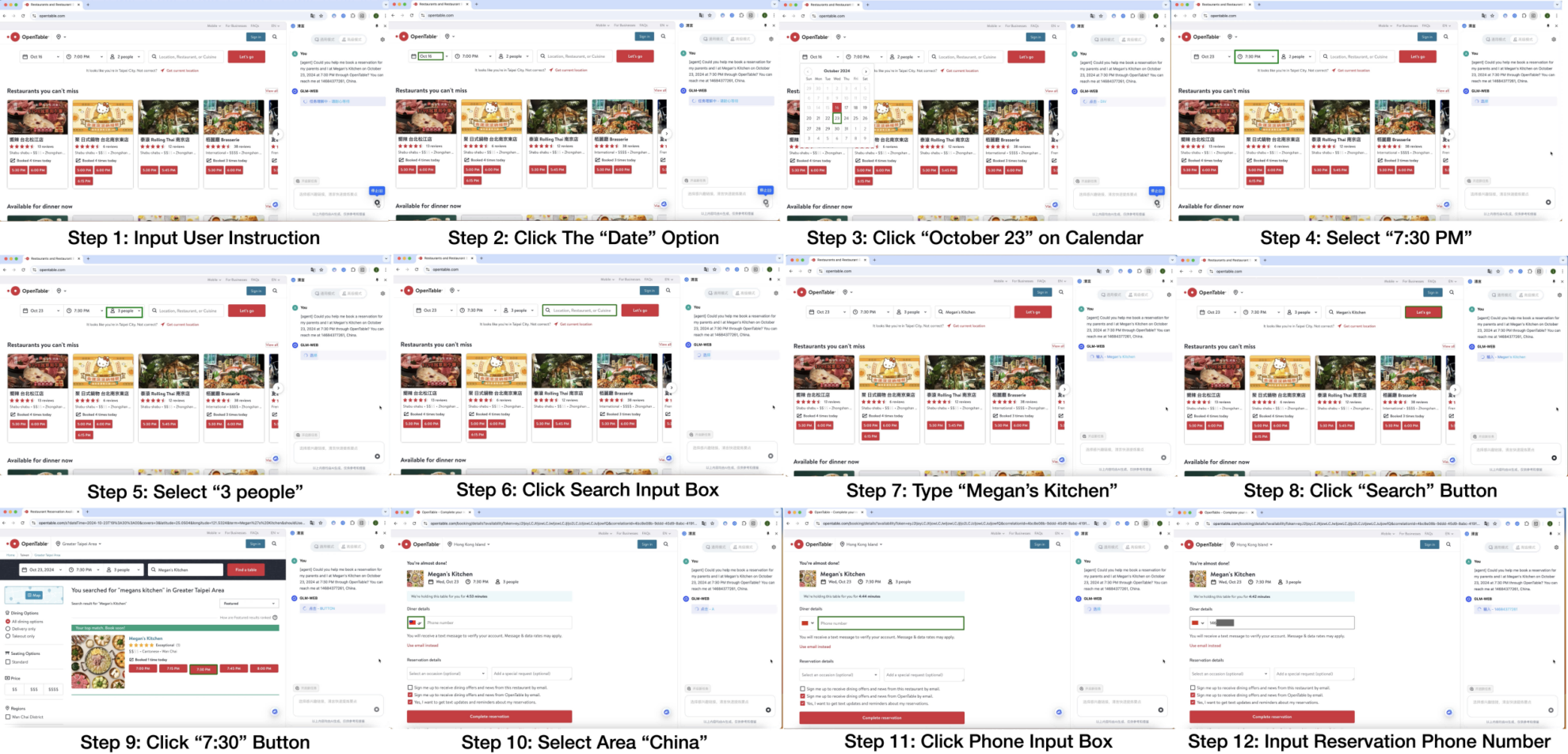

3. AutoGLM: Autonomous Foundation Agents for GUIs

This paper proposes AutoGLM, a new series within the ChatGLM family, designed as foundation agents to enable autonomous control of digital devices through graphical user interfaces (GUIs). The research team constructed AutoGLM using web browsers and mobile phones as typical GUI scenarios, creating a practical foundation agent system for real-world GUI interaction.

Paper link: https://go.hyper.ai/SLjro

4. RecGPT-V2 Technical Report

This paper proposes RecGPT-V2, which includes four core innovations: First, it constructs a hierarchical multi-agent system; second, it proposes a meta-prompting framework; third, it introduces a constrained reinforcement learning mechanism; and fourth, it designs an agent-as-a-judge evaluation framework. RecGPT-V2 not only verifies the technical feasibility of intent reasoning based on large language models but also demonstrates its commercial viability in large-scale industrial scenarios, successfully bridging the gap between cognitive exploration and industrial applications.

Paper link: https://go.hyper.ai/TdjZJ

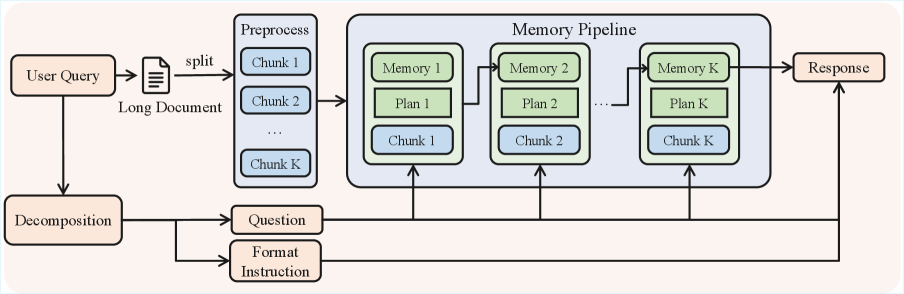

5. QwenLong-L1.5

This paper introduces QwenLong-L1.5, a model that achieves superior long-context reasoning capabilities through systematic post-training innovations. Built on Qwen3-30B-A3B-Thinking, QwenLong-L1.5 performs close to GPT-5 and Gemini-2.5-Pro on long-context reasoning benchmarks, with an average improvement of 9.90 points over its baseline model. On ultra-long tasks (1 million to 4 million tokens), its memory-agent framework improves on the baseline agent by 9.48 points.

Paper link: https://go.hyper.ai/vViJi

That wraps up this week's paper recommendations. For more cutting-edge AI research papers, please visit the "Latest Papers" section of hyper.ai's official website.

We also welcome research teams to submit high-quality results and papers to us. If you are interested, add NeuroStar on WeChat (WeChat ID: Hyperai01).

See you next week!