This Week's Editor's Picks: Tencent's WorldPlay Model; RFdiffusion3 Protein Design Model; Maya1, a Highly Realistic and Emotional Speech Generation Service

World models are shifting the focus of computational intelligence from language tasks to visual and spatial reasoning. By simulating dynamic 3D environments, these models let agents perceive and interact with complex scenes, opening up new research and application prospects in fields such as embodied intelligence and game development. The frontier of world-model research is currently real-time interactive video generation, where significant progress has been made. However, achieving low-latency real-time generation and long-term geometric consistency at the same time remains a key unresolved problem in this field.

To address this, Tencent's Hunyuan team has launched WorldPlay, a system capable of real-time, interactive world modeling while maintaining long-term geometric consistency. It resolves the trade-off between speed and memory that limits existing methods. The system rests on three key technical innovations:

* Dual action representation: The model uses a dual action representation for robust control in response to the user's keyboard and mouse input, keeping interactive responses accurate and stable.

* Reconstituted context memory: To enforce long-term consistency, a context memory module dynamically rebuilds the context from past frames and uses temporal reframing to keep geometrically important but long-past frames accessible, which significantly alleviates memory attenuation.

* Context forcing distillation: The research team proposed a novel distillation method designed for memory-aware models, called "context forcing." It aligns the memory context between the teacher and student models, so the student keeps its ability to use long-range information while running at real-time speed, which prevents error drift.
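The memory idea in the second bullet (keep recent frames, retrieve older frames that are geometrically relevant, and re-index them in time so they stay accessible) can be illustrated with a toy sketch. This is not WorldPlay's implementation: the `Frame` class, the `build_context` function, the 2D camera positions, and the use of plain Euclidean distance as a relevance score are all assumptions made up for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Frame:
    index: int                     # position of the frame in the generated stream
    position: Tuple[float, float]  # hypothetical 2D camera position for that frame


def build_context(history: List[Frame],
                  current_position: Tuple[float, float],
                  n_recent: int = 2,
                  n_spatial: int = 2) -> List[Tuple[int, Frame]]:
    """Return (relative_time, frame) pairs to use as context for the next frame."""
    # Split the history into long-ago frames and the most recent ones.
    split = max(len(history) - n_recent, 0)
    older, recent = history[:split], history[split:]

    # From the long-ago frames, keep those whose camera was closest to the
    # current one (assumed stand-in for "geometrically important").
    nearest = sorted(
        older, key=lambda f: math.dist(f.position, current_position)
    )[:n_spatial]

    # Restore chronological order, then append the recent frames.
    selected = sorted(nearest, key=lambda f: f.index) + recent

    # Toy re-indexing in time: assign compact relative positions so that a
    # frame from long ago sits right next to the recent ones in the context.
    return list(enumerate(selected))


# The camera walks along the x-axis, then returns near x = 1.2.
history = [Frame(i, (float(i), 0.0)) for i in range(10)]
context = build_context(history, current_position=(1.2, 0.0))
print([(rel, f.index) for rel, f in context])
```

In this example the context holds frames 1 and 2 (closest to the revisited location) followed by the two most recent frames 8 and 9, re-indexed as positions 0 to 3. A real system would score relevance from camera pose and field-of-view overlap rather than a 2D distance.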

WorldPlay can stably generate long sequences of 720p streaming video at 24 FPS. It outperforms existing techniques on multiple metrics and generalizes well across diverse scenarios. By providing a systematic framework for control, memory, and distillation, WorldPlay takes a crucial step toward real-time, consistent world models.
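To put the real-time figure in perspective, the short calculation below works out the average time available per frame at 24 FPS and the pixel throughput this implies. It assumes that "720p" means the common 1280×720 resolution, which the text does not state explicitly; the function names are illustrative only.

```python
def frame_budget_ms(fps: float) -> float:
    """Average wall-clock time available per frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of pixels that must be produced each second."""
    return width * height * fps


# Assumption: 720p is taken to be 1280x720.
print(f"{frame_budget_ms(24):.1f} ms per frame")
print(f"{pixels_per_second(1280, 720, 24):,} pixels per second")
```

A streaming model may produce frames in chunks rather than one at a time, so this budget is an average over the stream, not a hard per-frame deadline.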

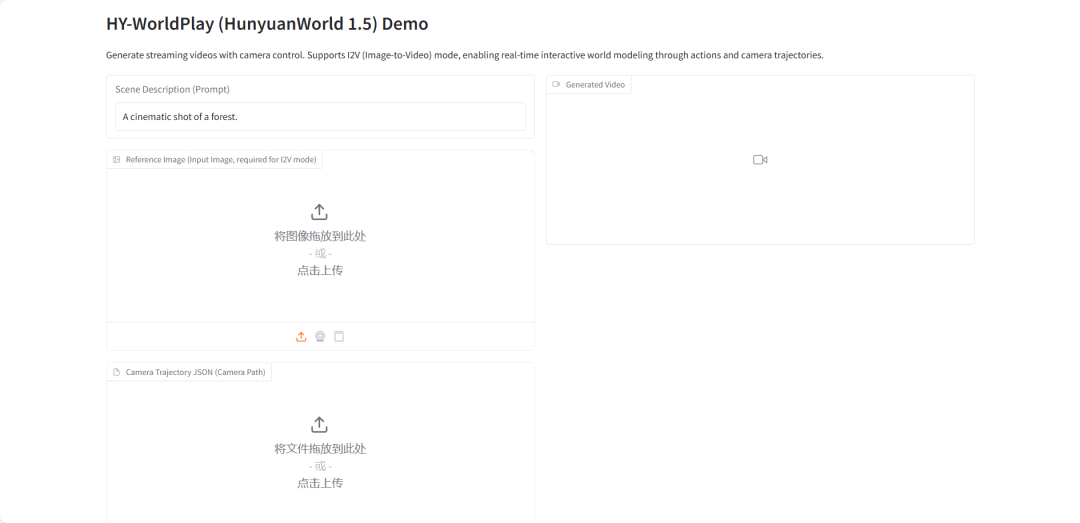

The HyperAI website now features "HY-World 1.5: An Interactive World Modeling System Framework." Give it a try!

Run online: https://go.hyper.ai/Dgd3Z

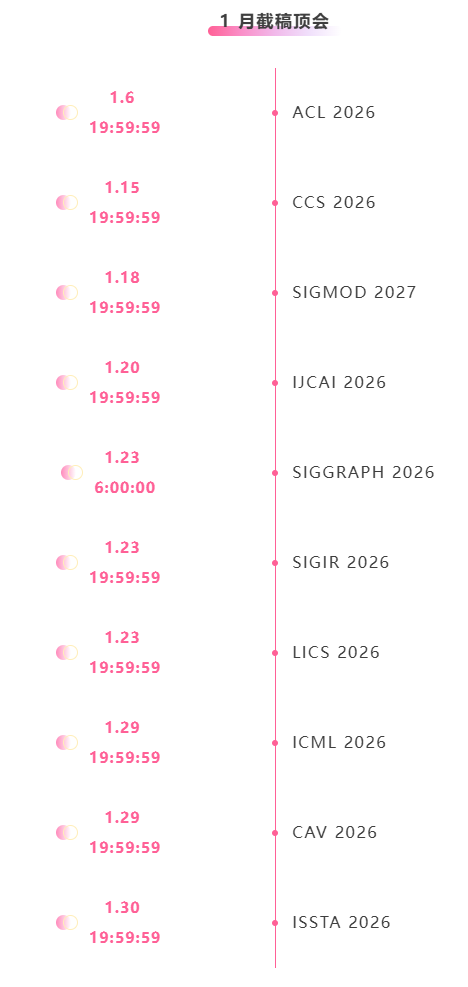

A quick overview of hyper.ai's official website updates from December 29th to January 2nd:

* Selection of high-quality tutorials: 3

* Popular encyclopedia entries: 5

* Top conferences with January deadlines: 10

Visit the official website: hyper.ai

Selected Public Tutorials

1. HY-World 1.5: Framework for an Interactive World Modeling System

HY-World 1.5 (WorldPlay), released by Tencent's Hunyuan team, is the first open-source real-time interactive world model with long-term geometric consistency. It achieves real-time interactive world modeling through streaming video diffusion, resolving the trade-off between speed and memory that limits current methods.

Run online: https://go.hyper.ai/Dgd3Z

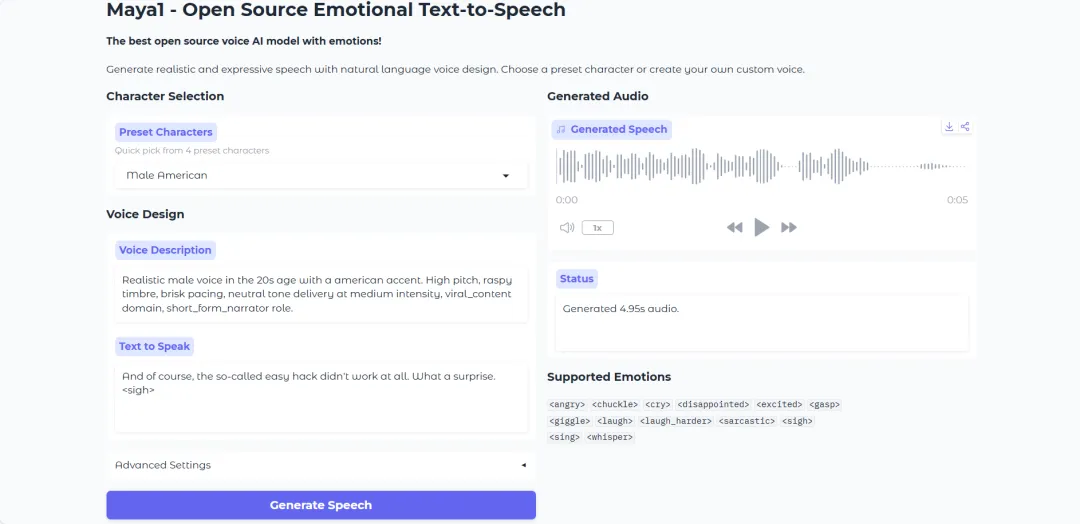

2. Maya1: A highly realistic and emotional voice generation service

Maya1, released by Maya Research, is a high-fidelity emotional text-to-speech (TTS) model designed for high-quality speech synthesis tasks. It features rich emotional expression and controllable speech style. This model focuses on accurately modeling the speaker's emotional state, speaking speed, tone, timbre, and expressiveness through natural language descriptions, generating highly realistic speech output that closely resembles human expression.

Run online: https://go.hyper.ai/RmmI3

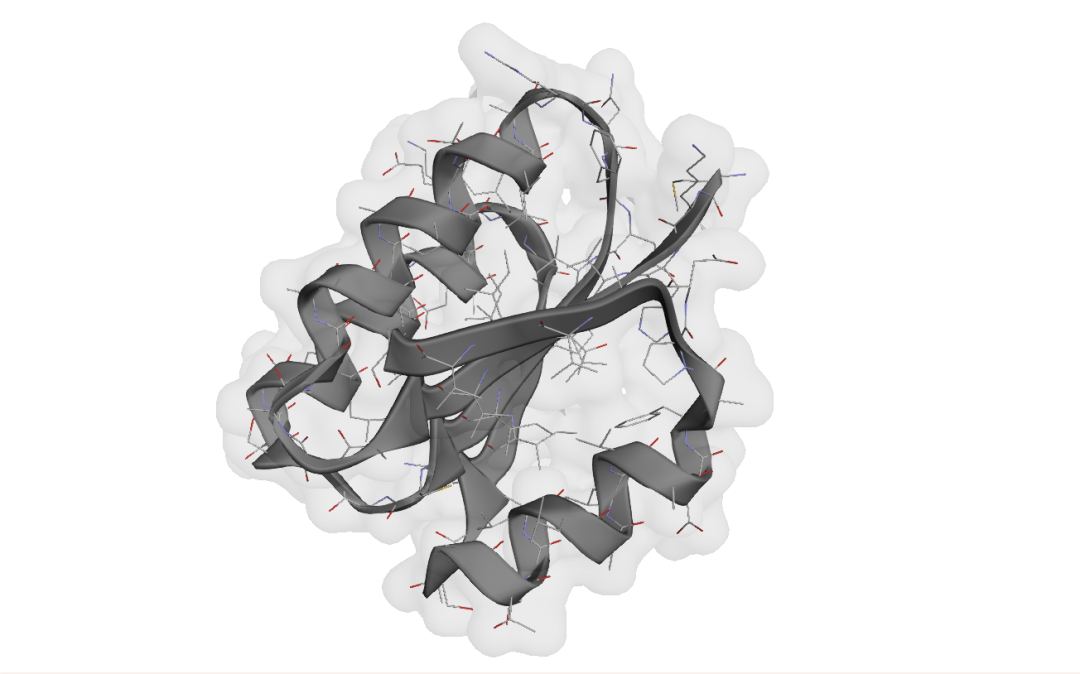

3. RFdiffusion3: Protein Design Model

RFdiffusion3 (RFD3) is a model released by the Institute for Protein Design at the University of Washington. This state-of-the-art biodesign AI model can generate novel proteins that interact with virtually any molecule in living cells, addressing a long-standing challenge that has frustrated protein engineers.

Run online: https://go.hyper.ai/gv4Rz

Popular Encyclopedia Articles

1. Frames Per Second (FPS)

2. Bidirectional Long Short-Term Memory (Bi-LSTM)

3. Gated Attention

4. Embodied Navigation

5. Gated Recurrent Unit (GRU)

We have compiled hundreds of AI-related terms to help you understand "artificial intelligence":

One-stop tracking of top AI academic conferences: https://go.hyper.ai/event

That's all for this week's editor's picks. If you have resources you would like to see included on the hyper.ai official website, you are welcome to leave a message or submit an article to let us know!

See you next week!