From Computing Power to Electricity: Google, Microsoft, and Meta Are Investing in Clean Energy, While Chinese Companies Leverage the Advantages of the State Grid Corporation of China

In the heart of the American Midwest, on the rolling cornfields of Iowa, huge white windmills turn tirelessly. Newly laid power lines lead to a building several kilometers away that also operates day and night. This building does not produce steel, process food, or manufacture cars, yet it continuously consumes massive amounts of electricity—because between server racks, a large number of high-performance GPUs are running in parallel.

Over the past decade, the keywords for tech companies have been "cloud, big data, and massive computing power." Few have truly cared about the most fundamental question behind these terms: where does the electricity come from? In the past two years, however, this question has become increasingly difficult to avoid.

While OpenAI, Google DeepMind, and Anthropic chase each other along the same computing power curve, and NVIDIA's GPUs are regarded as the "new oil" of the AI era, tech giants have suddenly realized that what is truly scarce does not lie in wafer fabs, nor is it written in model parameters. It is the stable electricity flowing through high-voltage cables. Rising electricity prices, grid queues, local government power rationing, and slowed data center approvals: before generative AI can reshape human productivity, these real-world constraints are forcing tech companies to solve a more fundamental problem. Who will continuously power this intelligent revolution?

Thus, we see a company that started with a search engine investing in wind farms, a software company seriously discussing nuclear energy, and a cloud service giant signing a multi-decade renewable energy power purchase agreement. Clean energy is no longer just an embellishment in ESG reports, but is quietly becoming the most fundamental and core infrastructure in the AI competition.

From computing power to electricity, this may become a new watershed in the AI race.

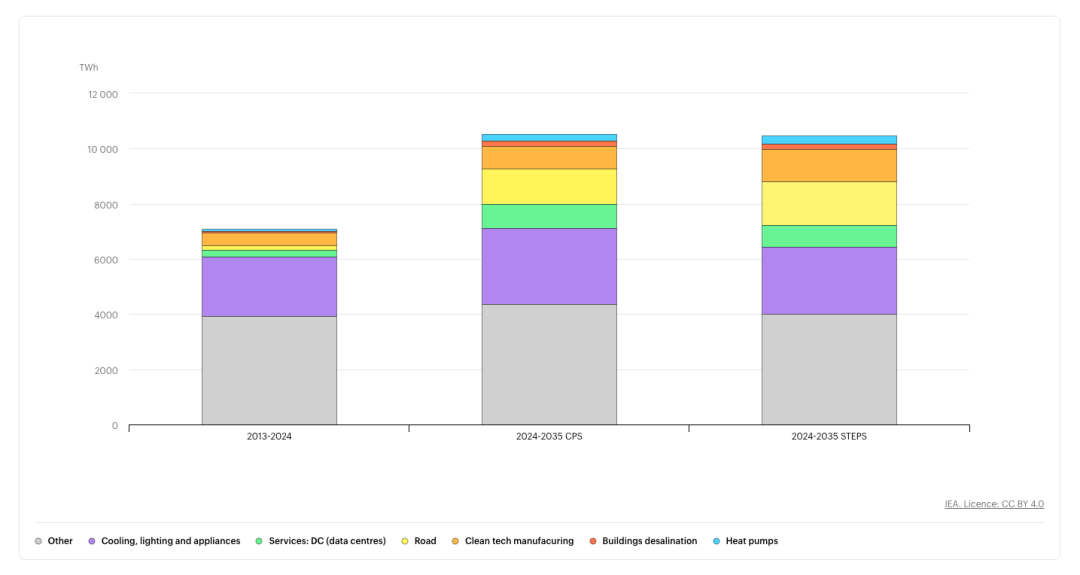

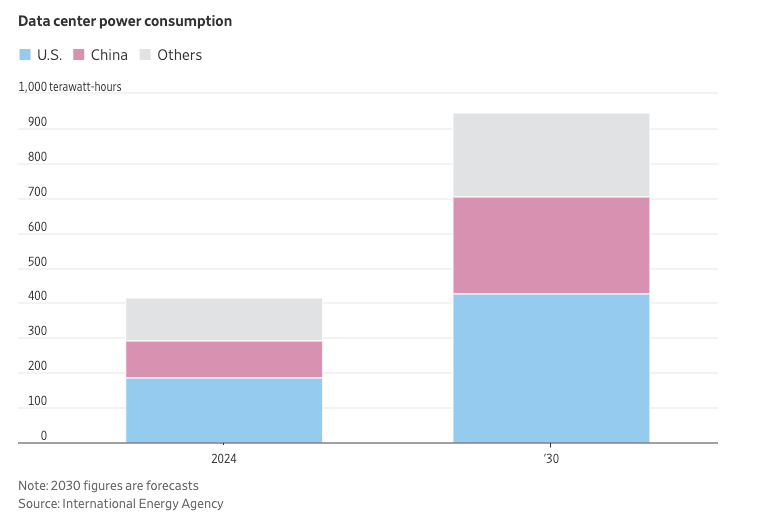

A report released by the International Energy Agency (IEA) in April 2025 indicates that global data center electricity demand is projected to more than double by 2030, with artificial intelligence being the primary driver of this surge. Specifically, by 2030, global data center electricity demand will reach approximately 945 terawatt-hours, slightly higher than Japan's current total electricity consumption, but less than 3% of global total electricity consumption in 2030. The report also points out that to meet the rapidly growing power demand of data centers, a range of energy sources will be used around the world, with renewable energy and natural gas playing a major role due to their economic viability and ease of supply.

In November of the same year, the IEA also released the "World Energy Outlook 2025," which showed that data center investment was projected to reach $580 billion in 2025. Those who call "data the new oil" will find that this figure already exceeds the $540 billion spent on global oil supply. By 2035, data center power consumption will double. Although this will account for less than 10% of global electricity demand growth, it will be highly geographically concentrated. Over the next decade, more than 85% of new data center capacity is expected to be located in the United States, China, and the European Union, much of it near existing data center clusters, which will put additional pressure on already congested power grids.

If computing power determines what AI can do, then electricity largely determines how long it can do it. In the past, improvements in chip performance primarily followed Moore's Law. Leaps in AI capabilities, by contrast, have relied on the scaling law, under which exponential increases in model parameters, training data, and compute yield roughly linear improvements in performance. Chip energy efficiency has also improved during this process, but more slowly than the exponential expansion of computing power demand.

According to 2024 data reported by The New Yorker, which cited a report from a foreign research institution, ChatGPT responds to approximately 200 million requests daily, consuming over 500,000 kilowatt-hours of electricity in the process. In other words, ChatGPT's daily electricity consumption is equivalent to that of 17,000 American households. Today, as model performance continues to improve, the energy required for training keeps rising. At the same time, the growing adoption of AI applications is driving up electricity consumption from continuous inference worldwide.
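The figures quoted above can be sanity-checked with simple arithmetic. The following is a minimal sketch in Python that uses only the three numbers from the text; the function names are illustrative, and the results are rough implied averages, not measured values.

```python
# Back-of-the-envelope check of the ChatGPT figures quoted in the text.
# All three constants come from the article; everything derived from them
# is an implied average, not a measurement.

DAILY_REQUESTS = 200_000_000    # requests answered per day
DAILY_ENERGY_KWH = 500_000      # electricity consumed per day, in kWh
EQUIVALENT_HOUSEHOLDS = 17_000  # American households cited as equivalent


def energy_per_request_wh(daily_kwh: float, daily_requests: int) -> float:
    """Average energy per request in watt-hours."""
    return daily_kwh * 1000 / daily_requests


def implied_household_kwh_per_day(daily_kwh: float, households: int) -> float:
    """Daily consumption per household implied by the comparison."""
    return daily_kwh / households


if __name__ == "__main__":
    per_request = energy_per_request_wh(DAILY_ENERGY_KWH, DAILY_REQUESTS)
    per_household = implied_household_kwh_per_day(
        DAILY_ENERGY_KWH, EQUIVALENT_HOUSEHOLDS
    )
    print(f"Energy per request: {per_request:.2f} Wh")
    print(f"Implied household use: {per_household:.1f} kWh/day")
```

Under these numbers, each request averages about 2.5 Wh, and the household comparison implies roughly 29 kWh per household per day.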

Besides the increased power consumption, the change in load characteristics is even more noteworthy.

In the past, while data centers consumed a lot of electricity, their load was highly schedulable. Business operations had peak and off-peak periods, server utilization was not constant, and electricity was more like an operational cost that could be optimized. Generative AI inference services, however, require 24/7 uninterrupted operation and place extremely high demands on power stability and reliability. In other words, AI is not a "peak electricity consumer," but a stable, continuous, and uninterrupted one. This point has been raised repeatedly in the financial reports and investor conferences of many power companies. Major U.S. utilities such as Dominion Energy, Duke Energy, and NextEra Energy have all publicly stated that AI data centers are becoming a core variable in load forecasting and capital expenditure.

More importantly, the power system of the United States, home to the major AI companies, was not designed for this type of demand over the past few decades.

On the one hand, the U.S. power grid is highly fragmented, interstate coordination is complex, and grid upgrades are time-consuming. On the other hand, the aging of a large share of transmission infrastructure, coupled with lengthy approval processes for connecting renewable energy to the grid, has created a phenomenon known as "grid queuing": power generation projects are already built but cannot be connected to the system for extended periods. The emergence of generative AI is akin to suddenly adding a group of extreme electricity consumers to an already fully loaded system. As a direct result, new data centers are left waiting for power, and local governments are reassessing approvals for new data centers. Tech companies are realizing that "having money doesn't necessarily mean you can have electricity immediately."

At the same time, the advantages of China's power grid are becoming increasingly apparent in the AI race.

In early December, the Wall Street Journal published a report titled "In Inner Mongolia, witnessing another trump card China has in the AI race: the world's largest power grid," which pointed out that although the United States has invented the most powerful artificial intelligence models and controls access to the most advanced computer chips, China holds a trump card in the global race for artificial intelligence.

China now has the world's largest power grid.

The report points out that between 2010 and 2024, China's electricity generation growth exceeded that of the rest of the world combined. Last year, China's electricity generation was twice that of the United States. In terms of actual costs, the electricity expenses of some data centers in China are currently less than half those of their American counterparts. The report also quoted Liu Liehong, director of the National Data Administration of China, as saying, "In China, electricity is our competitive advantage."

Indeed, in terms of power grid construction, China adopts a state-led, forward-looking, infrastructure-first model. Its core is to lay out "highways" for energy production and transmission years or even decades in advance through national-level projects, building a unified nationwide power grid with stable supply capacity and reserve margins. China has built over 50,000 kilometers of ultra-high-voltage (UHV) lines, accounting for over 90% of UHV transmission capacity globally. These "power arteries" can transmit clean electricity from western energy bases (such as Inner Mongolia and Xinjiang) to eastern computing hubs with extremely low losses, realizing the strategic concept of "West-to-East Power Transmission." The accompanying "East Data, West Computing" project proactively steers data center clusters toward the energy-rich and climate-suitable central and western regions, geographically optimizing the allocation of energy and computing power.

Against this backdrop, the power consumption of AI data centers in China is currently "not a cause for concern."

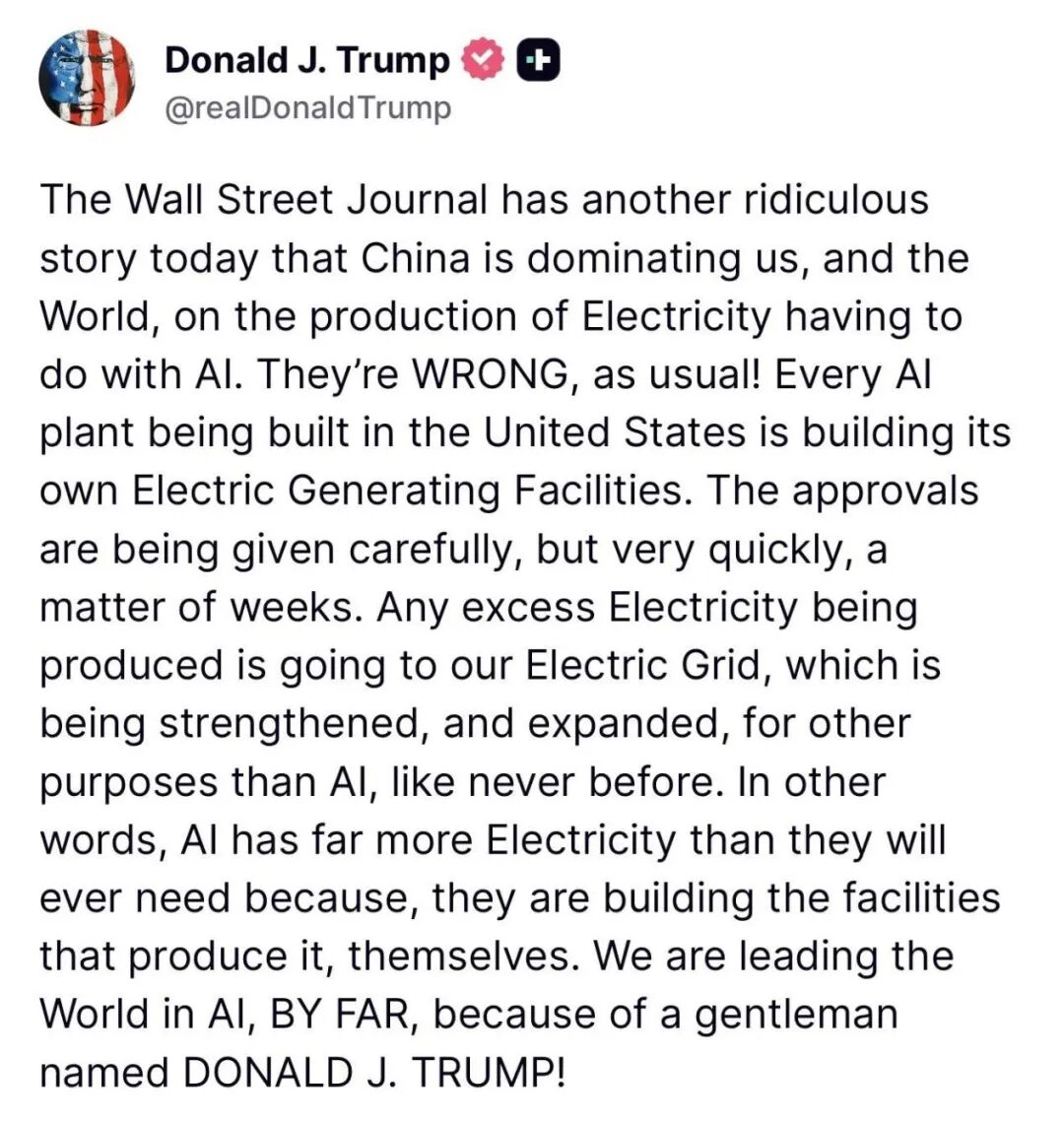

Interestingly, the Wall Street Journal's report caught Trump's attention. After the article was published, he posted several times on Truth Social, describing the report as "wrong as always." He also said that every large AI factory under construction in the United States will be equipped with its own private power plant, and that these power plants will also feed excess electricity back into the national grid.

Indeed, this is the case. Tech giants, who should be focusing on data center construction, are now worrying about power supply issues. Doesn't this precisely illustrate that the US power grid is not providing sufficient support to the industry leaders in the current AI race?

From Green Electricity Buyer to Energy Player – Strategic Shifts of Giants

As electricity became a real bottleneck, the strategic shift of technology companies occurred almost simultaneously. Just a few years ago, procuring renewable energy was primarily a glamorous ESG news item, used to cultivate a responsible corporate image. Today, the nature of the game has completely changed. Clean energy initiatives have leaped from being an adjunct to "brand building" to a "strategic necessity" concerning the continuity and competitiveness of core businesses. In this process, businesses often turn to green electricity not simply for cost reasons, but in search of a controllable, long-term power solution.

In response, clean energy, especially wind and solar power, offers three key values: scalability, price certainty, and narrative legitimacy.

First, compared to traditional energy sources, new renewable energy projects can be built out faster and are easier to plan in sync with data centers. Second, through long-term power purchase agreements (PPAs), technology companies can lock in electricity prices for ten or even twenty years, hedging against future energy market fluctuations. Third, clean energy gives large-scale electricity consumption a legitimate basis in the eyes of the public, local governments, and regulators.

Over the past two years, Google, Amazon, Microsoft, and Meta have all expanded their investments and procurement of clean energy.

Among them, Google's parent company, Alphabet, announced in late 2025 that it would acquire Intersect Power, a US energy storage and clean energy developer, for approximately $4.75 billion. This move signifies the company's direct entry into building and operating energy assets, rather than relying solely on traditional power purchase agreements (PPAs). The transaction is expected to close in the first half of 2026. Intersect will continue to operate as an independent subsidiary, but its power development capabilities will primarily serve Alphabet's globally deployed data centers and computing infrastructure.

In addition, Google has expanded its partnership with NextEra Energy to promote the integration of data centers with renewable power projects through new clean energy contracts, and signed an agreement with Brookfield Asset Management to purchase up to 3 GW of hydropower, one of its largest clean energy procurement agreements in history.

Microsoft's actions are more futuristic. Beyond signing a massive number of solar and wind power PPAs globally, it has also turned its attention to nuclear energy, which is considered one of the ultimate solutions for providing stable, zero-carbon energy. It reached an agreement with energy company Constellation Energy to advance the restart plan for the Three Mile Island nuclear power plant in Pennsylvania in order to stabilize its long-term power supply.

Another company worth noting is Helion Energy, a nuclear fusion company founded in 2013. It is dedicated to developing commercial nuclear fusion power generation technology to provide zero-carbon, low-cost, and sustainable clean energy.

In January 2025, Helion Energy announced the completion of a $425 million Series F funding round. The funding will be used to expand the company's commercialization efforts for its fusion technology. The round was oversubscribed and upsized, with new investors including Lightspeed Venture Partners, SoftBank Vision Fund, and a major university endowment fund, alongside existing investors including Sam Altman, Mithril Capital, and Capricorn Investment Group. Just this July, the company announced the acquisition of land and the commencement of construction on ORION, the world's first fusion power plant. The goal is to deliver 50 MW of fusion power to Microsoft by 2028.

Helion Energy is not Sam Altman's only foray into the clean energy sector. He has long invested in and served as chairman of Oklo, a company focused on developing small modular nuclear fission reactors (SMRs) to provide low-carbon, reliable nuclear power.

Alongside its aggressive energy procurement strategy, Meta has also invested heavily in data center energy efficiency and cost-saving measures. Its facilities generally employ efficient cooling technologies such as outside-air free cooling and evaporative cooling, combined with advanced cooling system design and operational optimization, enabling multiple data centers to achieve an industry-leading power usage effectiveness (PUE) of approximately 1.07–1.08, close to the ideal value of 1.0.
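To make the PUE figure concrete: PUE is the ratio of a facility's total energy use to the energy delivered to its IT equipment, so a value of 1.0 would mean no overhead at all. The sketch below is illustrative only. The facility numbers in the example are hypothetical and are not Meta's actual data.

```python
# Minimal sketch of the PUE (power usage effectiveness) calculation.
# PUE = total facility energy / IT equipment energy.
# The example figures below are hypothetical, chosen only for illustration.


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE for a given period of measurement."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy spent on non-IT overhead
    (cooling, power conversion, lighting, and so on)."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - 1.0 / pue_value


if __name__ == "__main__":
    # Hypothetical facility: 1,000 kWh to IT equipment, 1,075 kWh in total.
    example = pue(total_facility_kwh=1_075, it_equipment_kwh=1_000)
    print(f"PUE: {example:.3f}")
    print(f"Overhead share at PUE 1.08: {overhead_share(1.08):.1%}")
```

At a PUE of 1.08, about 7% of the facility's total energy goes to overhead rather than to the servers themselves.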

Conclusion

There is no doubt that the intelligent revolution is ultimately inseparable from power supply.

Today, the race for computing power has extended to the energy sector. In the future competition of AI, it won't just be a contest of model parameters and inference efficiency; a stable, scalable, and sustainable energy supply will also be a decisive factor. Windmills in cornfields are still turning, and GPUs in data centers are still operating at high speeds. Computing power can grow exponentially, but electricity must flow between the land and the equipment. Companies that recognized this early on and bet on it are actively vying for control of the energy landscape.

References

1. https://www.wsj.com/tech/china-ai-electricity-data-centers-d2a86935

2. https://www.reuters.com/technology/alphabet-buy-data-center-infrastructure-firm-intersect-475-billion-deal-2025-12-22/