Dario Amodei, Who Insists on Proactive Oversight, Built AI Safety Into Anthropic's Mission After Leaving OpenAI.

In the current AI cycle, fueled by a race for computing power, competition among tech giants, and accelerating investment, Anthropic co-founder and CEO Dario Amodei is one of Silicon Valley's very few, yet undeniable, "contrarians." While most tech companies make "faster iteration and stronger capabilities" their core objectives, he has consistently held to a judgment that is becoming increasingly persuasive: "Safety is not a brake, but the only institutional safety barrier that allows the industry to continue moving forward."

According to Dario Amodei, the explosive growth of large-model capabilities over the next five years will inevitably outpace the evolution of social governance mechanisms. Without a top-down safety governance framework, AI development will fall into a dangerous "complexity backlash cycle": technology advances so quickly that it overwhelms the governance system, and risks accumulate into systemic threats that could not only trigger crises for individual companies but also disrupt the functioning of society as a whole.

At the root of this divergence between technology and governance lies his core argument that "safety is never the opposite of innovation, but rather the only underlying logic that allows AI to keep evolving once it becomes part of national infrastructure." This suggests that Dario Amodei is not so much promoting a "safety-first approach" as a "logical protocol" for the AI era: one that sets controllable, auditable, and haltable operating preconditions for technological expansion, so that capability growth is not abruptly interrupted by systemic risk. In essence, this view provides stable underlying support for AI's long-term commercial expansion, rather than opposing innovation and slowing it down.

From leaving OpenAI to founding Anthropic: a "declaration of independence" after a split over values.

Dario Amodei's deep connection with the "safety route" can be traced back to his time at OpenAI and his disagreements with the company's core values.

Around 2020, as the GPT series moved rapidly toward commercialization, OpenAI accelerated both its technical iteration and its commercial rollout, while the corresponding risk-management mechanisms and governance systems had not yet been clearly defined.

Well before OpenAI's commercialization drew public controversy (for example, The New York Times' copyright infringement lawsuit against OpenAI and Microsoft at the end of 2023), Dario Amodei was one of the key figures inside the company advocating a more rigorous risk-assessment mechanism. He pushed for a risk-prediction step before each model iteration and a more thorough safety-testing process before commercialization. These institution-building proposals, however, were gradually sidelined by mounting commercial pressure.

After repeated setbacks for his internal proposals, Dario Amodei left OpenAI at the end of 2020 together with a group of core researchers, and in 2021 they founded Anthropic. The departure reflects a clear divergence in philosophy over how to weigh safety against commercialization.

Anthropic's mission statement reflects this difference clearly. Instead of the common industry phrase "build powerful AI," it states, "We build reliable, interpretable, and steerable AI systems."

At the time, Silicon Valley's mainstream narrative still revolved around "capability is advantage," with almost every company competing on parameter count, compute investment, and speed of deployment. Dario Amodei chose the opposite path, treating "controllability" as the core of future competition. This seemingly counterintuitive choice laid the conceptual foundation for Anthropic's subsequent safety strategy.

The underlying logic of "safety as strategy": as capabilities improve, governance must improve in tandem.

Unlike many tech companies that treat "ethical safety" as mere embellishment in technical reports or a selling point to attract users, Dario Amodei promotes a highly engineered "safety strategy framework." In his written testimony to the U.S. Senate on AI risks and regulation, he emphasized: "We cannot fully predict the full capabilities of the next generation of models, therefore we must establish tiered institutional safeguards before building them, rather than trying to remedy the situation after risks occur."

Based on this concept, Anthropic has built what could be called a "capability-safety co-evolution model," formalized in its Responsible Scaling Policy, which embeds safety governance into every stage of technology development:

* Capability Forecasting: Use historical data and predictive models to assess in advance which new capabilities the next generation of large models may develop, especially those that carry potential risks, such as generating more convincing disinformation or writing more complex code (which could be used for cyberattacks);

* Safety Levels: Drawing on the biosafety level (BSL) system used for handling dangerous biological materials, models are assigned safety levels according to their capability and application scenarios, with each level carrying its own testing standards, usage permissions, and monitoring mechanisms;

* External red teams and interpretability requirements: Bring in external red teams from multiple institutions and fields to attack-test the model, while requiring an interpretable chain of reasoning for key decision processes to avoid the risks of "black-box decision-making";

* Go/No-go gates: Set "go/no-go" checkpoints at key nodes of model iteration. Development or deployment may proceed to the next stage only after safety testing meets the standard and the risk assessment is passed, codifying the principle that "expansion is allowed only if it can be controlled."
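The tiered levels and go/no-go gates described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anthropic's actual policy: the tier thresholds, the `forecast_risk_score` input, and the evaluation-suite names (`basic_misuse`, `external_red_team`, `bio_uplift`, `cyber_offense`) are all hypothetical. It shows only the structural idea: a capability forecast selects a tier, each tier requires a set of evaluations, and the gate opens only when every required evaluation has passed.

```python
from dataclasses import dataclass

# Hypothetical tier requirements: the evaluation suites a model must pass
# before it may advance at that tier. Names and tiers are illustrative only.
TIER_REQUIREMENTS: dict[int, set[str]] = {
    1: {"basic_misuse"},
    2: {"basic_misuse", "external_red_team"},
    3: {"basic_misuse", "external_red_team", "bio_uplift", "cyber_offense"},
}


@dataclass
class EvalResult:
    suite: str    # name of the evaluation suite (hypothetical)
    passed: bool  # whether the model met that suite's bar


def assign_tier(forecast_risk_score: float) -> int:
    """Map a hypothetical forecast risk score in [0, 1] to a safety tier."""
    if forecast_risk_score < 0.3:
        return 1
    if forecast_risk_score < 0.7:
        return 2
    return 3


def go_no_go(forecast_risk_score: float, results: list[EvalResult]) -> tuple[bool, list[str]]:
    """Return (go, missing): 'go' only if every suite required by the tier passed."""
    tier = assign_tier(forecast_risk_score)
    passed = {r.suite for r in results if r.passed}
    missing = sorted(TIER_REQUIREMENTS[tier] - passed)
    return (not missing, missing)


if __name__ == "__main__":
    results = [EvalResult("basic_misuse", True), EvalResult("external_red_team", True)]
    print(go_no_go(0.5, results))  # (True, [])
    print(go_no_go(0.8, results))  # (False, ['bio_uplift', 'cyber_offense'])
```

Under these assumptions, a model forecast at tier 2 that has passed both required suites gets a "go," while the same results at tier 3 yield a "no-go" listing the missing evaluations. Keying requirements to the tier rather than to individual models is what makes "expansion only if it can be controlled" a mechanical, auditable rule in this sketch.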

This systematic safety architecture has made Dario Amodei, in the eyes of U.S. policymakers, a "tech CEO with a better understanding of governance." In July 2023 he testified at a U.S. Senate Judiciary subcommittee hearing on AI oversight, and his views on "tiered regulation" and "advance risk prediction" became some of the most frequently cited technical voices in subsequent discussions of AI legislation.

While most Silicon Valley companies treat safety as a PR asset to polish their image, Dario Amodei sees it as an operating system that "drives the long-term development of the industry." He firmly believes that only a stable safety framework will allow AI to move from "laboratory technology" to "social infrastructure."

Why insist on "early regulation"? Technology has entered a period of non-linear growth.

Of all Dario Amodei's views, the most controversial is not his approach to technical alignment but his attitude toward regulation: "Regulation must arrive in advance, rather than reacting passively after risks materialize." This stands in stark contrast to Silicon Valley's long-standing culture of "less regulation, more freedom," but his reasoning is rooted in a deep understanding of how AI technology develops, and it rests on three main points:

Model capabilities grow exponentially, while regulation is linear.

GPT-3 arrived in 2020 with roughly 175 billion parameters; GPT-4 followed in 2023 with a major leap in capability (its parameter count has not been publicly disclosed, and outside estimates vary widely); and Anthropic's Claude 3 arrived in 2024, surpassing its predecessors on multiple benchmarks. Each generation's capabilities have improved exponentially, and many "unexpected capabilities" that earlier models lacked have emerged, such as autonomously optimizing code, understanding complex legal texts, and generating near-professional research papers. This growth is "non-linear" and often exceeds industry expectations.

Building a regulatory system, by contrast, requires multiple stages (research, deliberation, legislation, and implementation) and typically takes years; it advances "linearly." If regulation always trails technology, a vacuum opens in which "the capabilities are in place but the governance is not," and risks can accumulate during that period.
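To make the "exponential versus linear" contrast concrete, here is a toy calculation in Python. The growth laws are purely hypothetical assumptions chosen for illustration (capability doubling each period, governance capacity rising by one fixed unit per period) and are not measurements of any real model or regulatory process. Only the shape of the result matters: the two curves start together, and the gap then widens faster every period.

```python
# Toy model of the capability/governance gap. Both growth laws are
# hypothetical assumptions chosen for illustration, not measured data.

def capability(t: int, base: float = 1.0, factor: float = 2.0) -> float:
    """Exponential growth: capability multiplies by `factor` each period."""
    return base * factor ** t


def governance(t: int, base: float = 1.0, step: float = 1.0) -> float:
    """Linear growth: governance capacity rises by a fixed `step` each period."""
    return base + step * t


def gap_table(periods: int) -> list[tuple[int, float, float, float]]:
    """Return (period, capability, governance, gap) for periods 0..periods."""
    rows = []
    for t in range(periods + 1):
        c, g = capability(t), governance(t)
        rows.append((t, c, g, c - g))
    return rows


for t, c, g, gap in gap_table(5):
    print(f"t={t}: capability={c:g}, governance={g:g}, gap={gap:g}")
```

With these assumptions the gap is 0, 0, 1, 4, 11, and 26 over periods 0 through 5. Any fixed linear step is eventually overtaken in the same way, which is the "governance vacuum" the paragraph describes.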

The risk lies not in the application layer, but in fundamental capabilities.

Many believe that AI risks can be mitigated through "application-level controls," such as filtering rules for specific scenarios. Dario Amodei argues, however, that the risk of large-scale models is fundamentally a "foundational capability problem": capabilities such as generating lethal information, enabling biological risks (for example, producing dangerous biological experiment plans), and network penetration (for example, writing malicious code) all stem from the model's underlying capabilities rather than from any particular application scenario.

This means that even if a risk is mitigated in one application, the underlying capability remains and can give rise to new risks in other scenarios. Application-layer controls alone therefore cannot eliminate the risk; safety thresholds must be established at the foundation-model level through regulation.

Market competition cannot self-regulate its expansion.

Dario Amodei has repeatedly emphasized at industry conferences that "competition in the AI industry has a pressure effect: no vendor will voluntarily slow down the pace of capability iteration, because once they fall behind, they may be eliminated by the market." In such an environment, "self-discipline" is nearly impossible, and companies tend to prioritize capability gains while squeezing safety testing to a minimum.

He therefore believes that regulation must become an "external binding constraint" that sets a uniform safety baseline for the whole industry and prevents a race to the bottom in which bad money drives out good: "If you don't do safety testing, neither will I; otherwise my costs go up and I fall behind."
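The "race to the bottom" argument has the structure of a prisoner's dilemma, and a small worked example shows why a uniform external baseline changes the outcome. The payoffs below are hypothetical numbers chosen only to have the dilemma's shape (skipping safety testing pays off individually, but mutual skipping is worse for both than mutual testing), and the flat penalty is an assumed stand-in for a regulatory baseline; neither comes from the source. The sketch finds the pure-strategy Nash equilibria before and after the penalty is applied.

```python
from itertools import product

ACTIONS = ("test", "skip")

Payoffs = dict[tuple[str, str], tuple[int, int]]

# Hypothetical payoffs (firm A, firm B). Skipping is faster and cheaper,
# but mutual skipping leaves both worse off than mutual testing.
BASE_PAYOFFS: Payoffs = {
    ("test", "test"): (3, 3),
    ("test", "skip"): (1, 4),
    ("skip", "test"): (4, 1),
    ("skip", "skip"): (2, 2),
}


def with_penalty(payoffs: Payoffs, penalty: int) -> Payoffs:
    """Subtract a uniform (hypothetical) regulatory penalty from any firm that skips."""
    return {
        (a, b): (pa - penalty * (a == "skip"), pb - penalty * (b == "skip"))
        for (a, b), (pa, pb) in payoffs.items()
    }


def pure_nash(payoffs: Payoffs) -> list[tuple[str, str]]:
    """Return action profiles where neither firm gains by deviating alone."""
    equilibria = []
    for a, b in product(ACTIONS, repeat=2):
        pa, pb = payoffs[(a, b)]
        a_ok = all(payoffs[(alt, b)][0] <= pa for alt in ACTIONS)
        b_ok = all(payoffs[(a, alt)][1] <= pb for alt in ACTIONS)
        if a_ok and b_ok:
            equilibria.append((a, b))
    return equilibria


print(pure_nash(BASE_PAYOFFS))                   # [('skip', 'skip')]
print(pure_nash(with_penalty(BASE_PAYOFFS, 2)))  # [('test', 'test')]
```

Without the penalty, skipping is each firm's best response whatever the other does, so both skip even though both would prefer mutual testing. A penalty of 2 applied equally to both firms makes testing the best response in every case, which is the sense in which a shared baseline removes the cost disadvantage of moving first on safety.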

Behind these reasons lies Dario Amodei's core judgment about the AI era: AI is not a "content product" of the internet age (such as social apps or video platforms) but an "infrastructure technology" that directly affects real-world decision-making and risk structures. As with electricity and transportation, early regulation of such technologies is not about "restricting innovation" but about "ensuring the sustainability of innovation."

Technical Approach: Constitutional AI is not about patching, but about reconstructing the behavioral mechanisms of models.

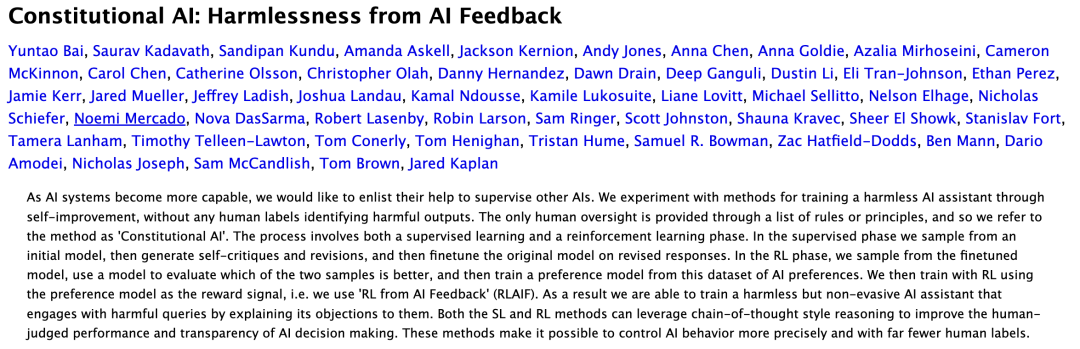

If OpenAI's label is "commercial leader," Meta's is "open-source ecosystem," and Google DeepMind's is "dual-track research and engineering," then Anthropic's is clear: Alignment-as-Engineering, that is, using systematic engineering methods to keep AI model behavior aligned with human values. Its most representative achievement is Constitutional AI (CAI), developed at Anthropic under Dario Amodei's leadership.

Paper link: https://arxiv.org/abs/2212.08073

The core idea of Constitutional AI departs from traditional alignment: instead of relying mainly on the subjective value judgments of human annotators to shape its behavior, the model calibrates itself against a set of open, auditable, and reproducible "constitutional principles." This "constitution," written by the Anthropic team, is a list of natural-language principles such as "do not generate harmful information," "respect diverse values," and "be honest about uncertain issues."

In practice, Constitutional AI achieves alignment in two training phases. First, in a supervised phase, the model critiques its own responses against the "constitution," identifies where they violate its principles, and revises them; the revised responses are then used to fine-tune the model. Second, in a reinforcement learning phase, the model judges which of two responses better follows the principles, and these AI-generated preferences take the place of human harmlessness labels (reinforcement learning from AI feedback, or RLAIF). This approach has three key effects:

* Reduce the value bias of RLHF: Traditional RLHF (Reinforcement Learning from Human Feedback) relies on the judgments of human annotators, who may bring value biases (from cultural background or personal stance, for example). By grounding feedback in a single, unified set of "constitutional principles," Constitutional AI aims to reduce such biases;

* The alignment process is transparent and traceable: The "constitutional principles" are public, and the model's self-critiques can be recorded and audited, helping outsiders understand why the model makes a given decision and avoiding "black-box alignment";

* Make safety a "systems engineering" process, not a patch based on human intuition: Traditional safety measures often "patch the vulnerability wherever a problem appears," whereas Constitutional AI reshapes the model's behavioral mechanisms from the ground up, making safety part of the model's "instinct" rather than an externally added patch.
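The critique-and-revision step described above can be illustrated with a toy loop. In the method from the Constitutional AI paper, a language model itself writes the critiques and revisions from natural-language principles (in a supervised phase), and the revised responses become fine-tuning data; here, simple string checks stand in for the model so the example runs on its own. The `Principle` type, the two toy principles, and `critique_and_revise` are hypothetical names for illustration, and the reinforcement learning (RLAIF) phase is not shown.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Principle:
    # Hypothetical toy principle: a name, a check that returns a critique
    # (or None if the response complies), and a revision function.
    name: str
    critique: Callable[[str], Optional[str]]
    revise: Callable[[str], str]


def critique_and_revise(
    response: str, constitution: list[Principle], max_rounds: int = 3
) -> tuple[str, list[tuple[str, str]]]:
    """Critique a draft against each principle and revise it until it complies.

    Returns the final response and a log of (principle, critique) pairs,
    which is what makes the process auditable in this sketch.
    """
    log: list[tuple[str, str]] = []
    for _ in range(max_rounds):
        changed = False
        for p in constitution:
            note = p.critique(response)
            if note is not None:
                log.append((p.name, note))
                response = p.revise(response)
                changed = True
        if not changed:  # no principle objected this round
            break
    return response, log


# Toy stand-ins for what a language model would do in the real method.
honesty = Principle(
    name="acknowledge uncertainty",
    critique=lambda r: "states a forecast as certain" if "will definitely" in r else None,
    revise=lambda r: r.replace("will definitely", "may"),
)
harmlessness = Principle(
    name="avoid harmful instructions",
    critique=lambda r: "contains exploit instructions" if "exploit" in r else None,
    revise=lambda r: "I can't help with that, but I can explain how to secure the system.",
)

final, log = critique_and_revise("The market will definitely rise.", [honesty, harmlessness])
print(final)  # The market may rise.
print(log)    # [('acknowledge uncertainty', 'states a forecast as certain')]
```

The returned log of (principle, critique) pairs is what makes the process inspectable in this sketch: each change to the draft is tied to the specific principle that triggered it.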

This technical approach laid the groundwork for a key advantage as Claude models moved into production applications in 2024–2025: greater stability, greater controllability, and easier enterprise-scale deployment. Many financial institutions, law firms, and government agencies treat Claude's "controllability" as a core factor when selecting large models, because these fields have very low tolerance for risk and require stable, predictable outputs.

In the global AI industry landscape, Amodei's strategy is becoming increasingly valuable. As model capabilities extend into high-value scenarios such as financial risk assessment and medical diagnosis, the demand for safety and reliability grows ever more urgent.

Unlike OpenAI's rapid commercialization, Meta's open-source strategy, and DeepMind's focus on research papers and AGI exploration, Anthropic treats safety as the foundation for entering key markets. It pushes for high industry entry standards by making its internal safety evaluation tools available externally, and it anticipates that a "Safety-as-a-Service" market will emerge.

This logic is also reinforced by policy and regulation: the EU's AI Act imposes strict requirements on high-risk AI systems, and U.S. federal procurement rules increasingly include safety requirements, turning safety from a moral question into a political and market necessity. Although Anthropic's market share remains smaller than OpenAI's, its strategic weight in policy influence, penetration of core industries, and the power to set safety standards is steadily growing. Anthropic's position is that of a minority player, but far from a weak one: it shapes the AI industry through institutional and technical means rather than relying on market share alone.

Criticism and controversy: Will safety become a tool for industry monopolies?

While Dario Amodei's "safety approach" may look like an idealized vision of where the industry should go, it faces strong opposition both inside and outside the industry. The controversy centers on three issues, which highlight the complexity of AI safety:

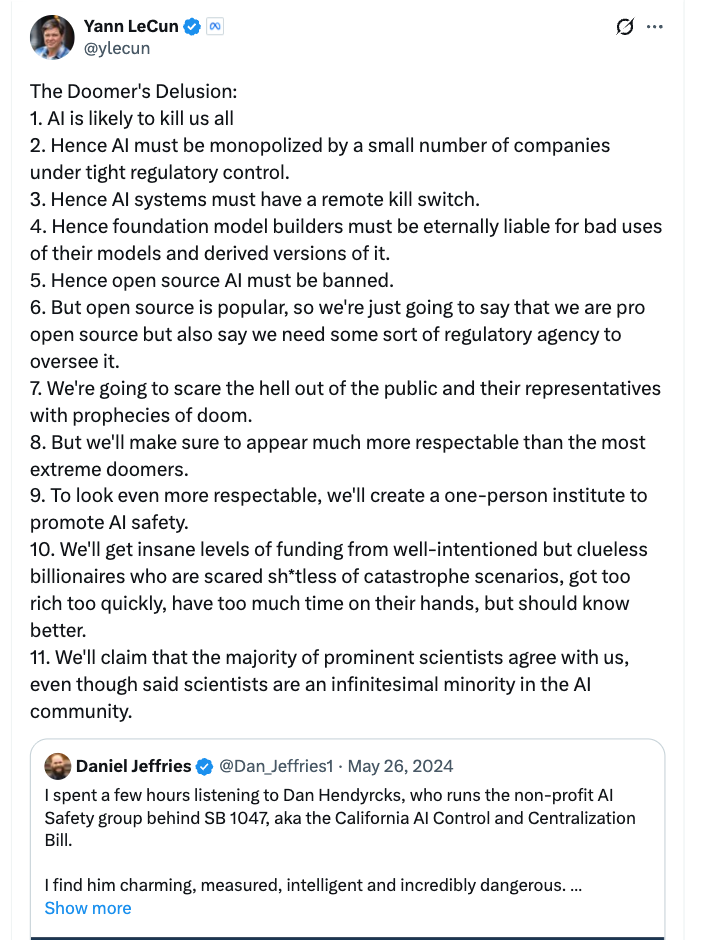

Safety may become a pretext for "restricting competition."

Meta's Chief AI Scientist, Yann LeCun, is one of the most outspoken tech leaders against "overly restrictive safety regulation." On social media and at industry conferences, he has repeatedly suggested that Dario Amodei's advocacy of "early regulation" and "high safety standards" amounts to "setting high barriers to entry for startups": large companies have the money and resources to invest in safety R&D, while startups may be squeezed out because they cannot afford the safety costs, ultimately leading to industry monopolies.

LeCun's views have won support from many AI startups. They argue that safety standards are not yet unified, and that imposing strict regulation prematurely could turn "safety" into a tool for giants to squeeze out smaller players.

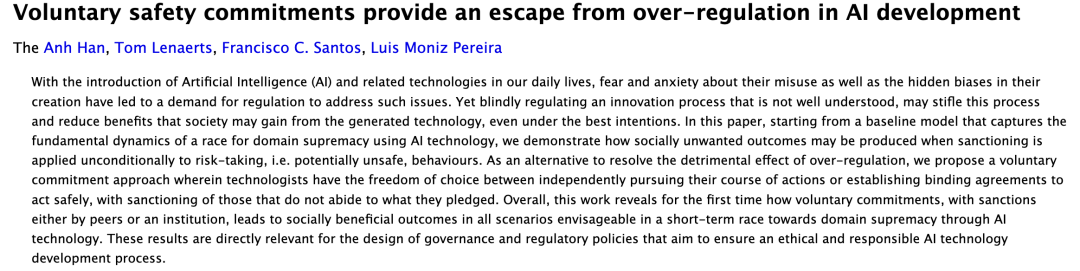

Safety is hard to quantify and can easily become an empty slogan.

Another key point of contention is whether safety can be measured at all. AI safety assessment standards are currently highly fragmented: the methods, rigor, and transparency of safety testing, evaluation, and red-teaming vary widely, and there is no unified answer to "what constitutes safe AI." For example, Anthropic may emphasize a model's "self-calibration" against principles, OpenAI may emphasize "passing external red-team testing," while government agencies may care most that "the model does not generate harmful information."

This fragmentation makes "safety" hard to quantify and easy to turn into an empty slogan for polishing a company's image: a company can claim its model is "very safe" without being able to prove it against industry-recognized standards. Critics argue that overemphasizing "safety" before standards are unified could mislead the market into believing that AI risks have been resolved. Furthermore, academic research suggests that excessive or inflexible (one-size-fits-all) regulation of AI development could stifle innovation.

Article link: https://arxiv.org/abs/2104.03741

There is a natural disconnect between technology and regulation.

Many technology experts point out that predicting the capabilities of large-scale models remains an unsolved scientific problem: even the most advanced researchers cannot accurately predict what capabilities the next generation of models will have, or what risks those capabilities might bring. In this context, "early regulation" may run into one of two problems: "misjudging risks," treating harmless innovations as dangers and restricting them, or "missing risks," failing to anticipate the real dangers and so failing as regulation.

Dario Amodei has responded to these controversies. He has acknowledged that safety standards need to be "dynamically adjusted" and has supported government subsidies for startups' safety R&D to lower barriers to competition. But he maintains: "Even if there are controversies, we cannot wait until the risks materialize before taking action: the cost of 'lack of regulation' is far greater than that of 'over-regulation.'"

These controversies ultimately highlight one thing: AI safety is not just a technical and scientific issue but also a political and economic one, involving industrial structure, competition rules, and the global distribution of power. There is no "perfect answer"; the balance can only be found amid the controversy itself.

Amodei is not pushing for a "safety route," but rather for a "logical protocol" for the AI era.

Looking back at the history of the internet, the reason it evolved from an "academic tool" into "global infrastructure" lies in its unified underlying protocols: TCP/IP (Transmission Control Protocol/Internet Protocol), DNS (Domain Name System), HTTPS (Hypertext Transfer Protocol Secure), and others. These protocols are not tied to specific applications; they provide a "universal language" for all internet services, letting users on different devices, platforms, and regions connect seamlessly.

In my view, Dario Amodei is not pushing a simple "safety approach" but trying to establish a similar "logical protocol" for the AI era: a unified, secure, auditable, and replicable governance infrastructure. The core of this "protocol" is not to "restrict AI capabilities" but to "allow AI capabilities to realize their value within a controllable framework."

What he wants is not to raise the industry's barriers to entry or slow its growth, but to establish an "AI safety system that can be understood, replicated, and used globally." Just as TCP/IP gave the internet "interconnectivity," AI "governance protocols" could let AI technologies worldwide achieve "collaborative development" on a safe foundation, avoiding the spread of risk and the waste of resources caused by inconsistent standards.

This is not technological pessimism, but industrial engineering. It acknowledges the risks of AI but believes they can be controlled through institutional design; it does not deny the innovative value of AI but insists that innovation must have boundaries. Just as the internet experienced exponential expansion after protocolization, the second phase of AI, from "technological explosion" to "stable application," may require a set of "governance protocols" as a fulcrum.

Final Thoughts

As AI becomes a core asset shaping productivity, military capability, and national security, "safety" will no longer be merely a technical issue but a strategic one bearing on the global order. In the future, whoever defines "safety" may define the rules of the AI industry; whoever establishes the "safety framework" may gain the upper hand in global technological competition.

Dario Amodei may not be the only pioneer in this transformation, but he is undoubtedly the most clear-headed and also the most controversial. His value lies not in proposing a "perfect solution," but in forcing the entire industry and global society to confront a crucial issue: for AI to move towards the future, it cannot rely solely on technological enthusiasm, but also on institutional rationality. And this is precisely the key to transforming AI from a "disruptive technology" into a "constructive force."

Reference Links:

1. https://www.anthropic.com/news/claude-3-family

2. https://www.reuters.com/business/retail-consumer/anthropic-ceo-says-proposed-10-year-ban-state-ai-regulation-too-blunt-nyt-op-ed-2025-06-05

3. https://arxiv.org/abs/2212.08073

4. https://arstechnica.com/ai/2025/01/anthropic-chief-says-ai-could-surpass-almost-all-humans-at-almost-everything-shortly-after-2027/

5. https://www.freethink.com/artificial-intelligence/agi-economy