AI Weekly Report: NVIDIA's Latest Language Model, the Ovis2.5 Technical Report, and More. A Quick Look at the Latest Advances in Large-Model Architecture Optimization, 3D Modeling, and Alignment/Self-Verification

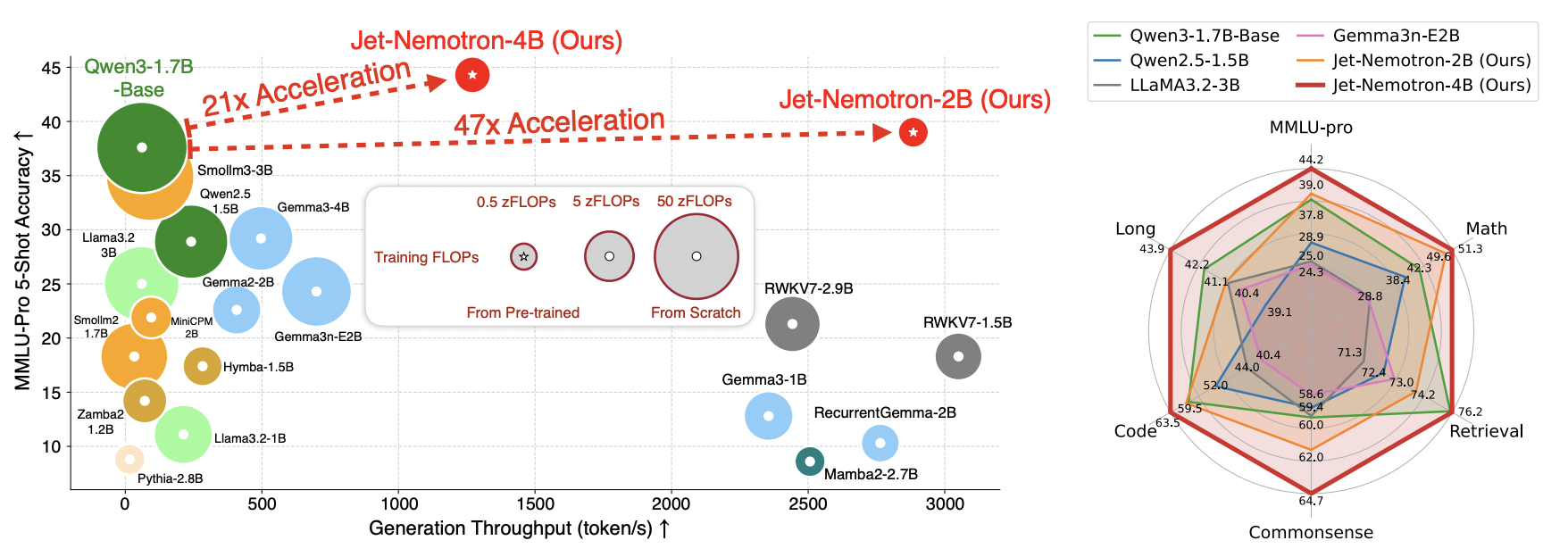

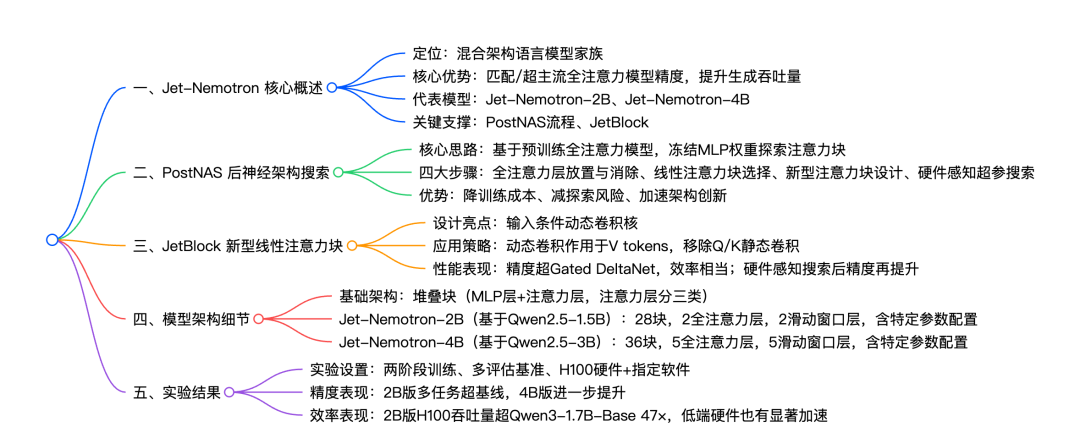

As large language models have developed rapidly, full-attention mechanisms have delivered impressive accuracy. However, their O(n²) complexity in sequence length n makes long-context tasks expensive in both memory and compute, which limits efficient deployment. Existing efficient architectures are often trained from scratch, which is costly and out of reach for small and medium-sized research institutions. Hybrid architectures balance accuracy and efficiency but still face design complexity and hardware-adaptation challenges.

To address these challenges, the research team proposed Jet-Nemotron. It is built with Post Neural Architecture Search (PostNAS), which starts from a pre-trained full-attention model, freezes its MLP weights, and searches for the best attention-module design. The resulting model substantially improves generation throughput while matching or exceeding the accuracy of full-attention models, offering a practical path toward efficient language-model design.
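
To make the O(n²) point concrete, here is a minimal sketch in plain Python (our own illustration, not code from the paper). It computes causal attention twice: once by rescanning every earlier key at each step, and once from a fixed-size running state, which is the idea behind linear attention. Both functions are deliberately simplified, with no softmax, feature map, or normalization, so this is not Jet-Nemotron's attention block. Dropping the softmax is exactly what allows the history to be folded into a d_k x d_v matrix instead of a cache that grows with sequence length.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def quadratic_attention(qs: List[Vector], ks: List[Vector], vs: List[Vector]) -> List[Vector]:
    """Causal, unnormalized attention: step t rescans all t+1 keys, O(n^2) work overall."""
    outputs = []
    for t, q in enumerate(qs):
        out = [0.0] * len(vs[0])
        for s in range(t + 1):
            weight = dot(q, ks[s])
            out = [o + weight * v for o, v in zip(out, vs[s])]
        outputs.append(out)
    return outputs


def linear_attention(qs: List[Vector], ks: List[Vector], vs: List[Vector]) -> List[Vector]:
    """Same outputs from a running d_k x d_v state S = sum_s k_s v_s^T: O(n) work, fixed memory."""
    d_k, d_v = len(ks[0]), len(vs[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q, k, v in zip(qs, ks, vs):
        # Fold the newest key/value pair into the fixed-size state.
        for i in range(d_k):
            for j in range(d_v):
                state[i][j] += k[i] * v[j]
        # Read out q^T S, which equals sum_s (q . k_s) v_s over all steps so far.
        outputs.append([sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)])
    return outputs


qs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ks = [[1.0, 2.0], [0.0, 1.0], [1.0, 0.0]]
vs = [[1.0], [2.0], [3.0]]
print(quadratic_attention(qs, ks, vs))  # [[1.0], [4.0], [8.0]]
print(linear_attention(qs, ks, vs))     # [[1.0], [4.0], [8.0]]
```

Both versions give identical outputs, but the first does work proportional to t at step t while the second does constant work per step and never stores past keys or values.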

Paper link: https://go.hyper.ai/8MhfF

Latest AI Papers: https://go.hyper.ai/hzChC

To help more users keep up with the latest developments in artificial intelligence research, HyperAI's official website (hyper.ai) has launched a "Latest Papers" section that publishes cutting-edge AI research papers every day. Here are 5 popular AI papers we recommend this week, together with mind maps we have prepared to summarize each paper's structure. Let's take a quick look at this week's cutting-edge AI achievements ⬇️

This week's paper recommendations

1. Jet-Nemotron: Efficient Language Model with Post Neural Architecture Search

This paper presents Jet-Nemotron, a new family of hybrid-architecture language models that substantially improves generation throughput while matching or exceeding the accuracy of leading full-attention models. Jet-Nemotron was developed with Post Neural Architecture Search (PostNAS), a new architecture-exploration pipeline built for efficient model design. Unlike conventional approaches that train from scratch, PostNAS starts from a pre-trained full-attention model and freezes its multi-layer perceptron (MLP) weights, which makes exploring attention-module structures efficient.
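
One piece of such a search can be sketched as follows, under loudly stated assumptions. We assume the search decides which layers keep full attention under a fixed budget while the remaining layers switch to a cheaper attention block, and that `score` (a hypothetical callback) evaluates each candidate with the pre-trained MLP weights frozen. Exhaustive enumeration like this only works at toy sizes; the actual PostNAS procedure is more sophisticated, and every name below is our own.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Tuple

# Hypothetical: scores a model in which only the given layers keep full attention.
ScoreFn = Callable[[FrozenSet[int]], float]


def search_full_attention_placement(
    num_layers: int, budget: int, score: ScoreFn
) -> Tuple[FrozenSet[int], float]:
    """Try every way to keep `budget` full-attention layers; return the best set and its score.

    All other layers are assumed to use a cheaper attention block. With the
    pre-trained MLP weights frozen (as in PostNAS), only the attention choice
    differs between candidates.
    """
    best_layers: FrozenSet[int] = frozenset()
    best_score = float("-inf")
    for layers in combinations(range(num_layers), budget):
        candidate = frozenset(layers)
        candidate_score = score(candidate)
        if candidate_score > best_score:
            best_layers, best_score = candidate, candidate_score
    return best_layers, best_score


def toy_score(layers: FrozenSet[int]) -> float:
    """Made-up objective: rewards layers 2 and 5, with a tiny penalty on deeper layers."""
    return len(layers & {2, 5}) - 0.01 * sum(layers)


best, best_score = search_full_attention_placement(num_layers=8, budget=2, score=toy_score)
print(sorted(best))  # [2, 5]
```

In practice `toy_score` would be replaced by an evaluation of the hybrid model on held-out tasks, which is where most of the cost lies.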

Paper link: https://go.hyper.ai/8MhfF

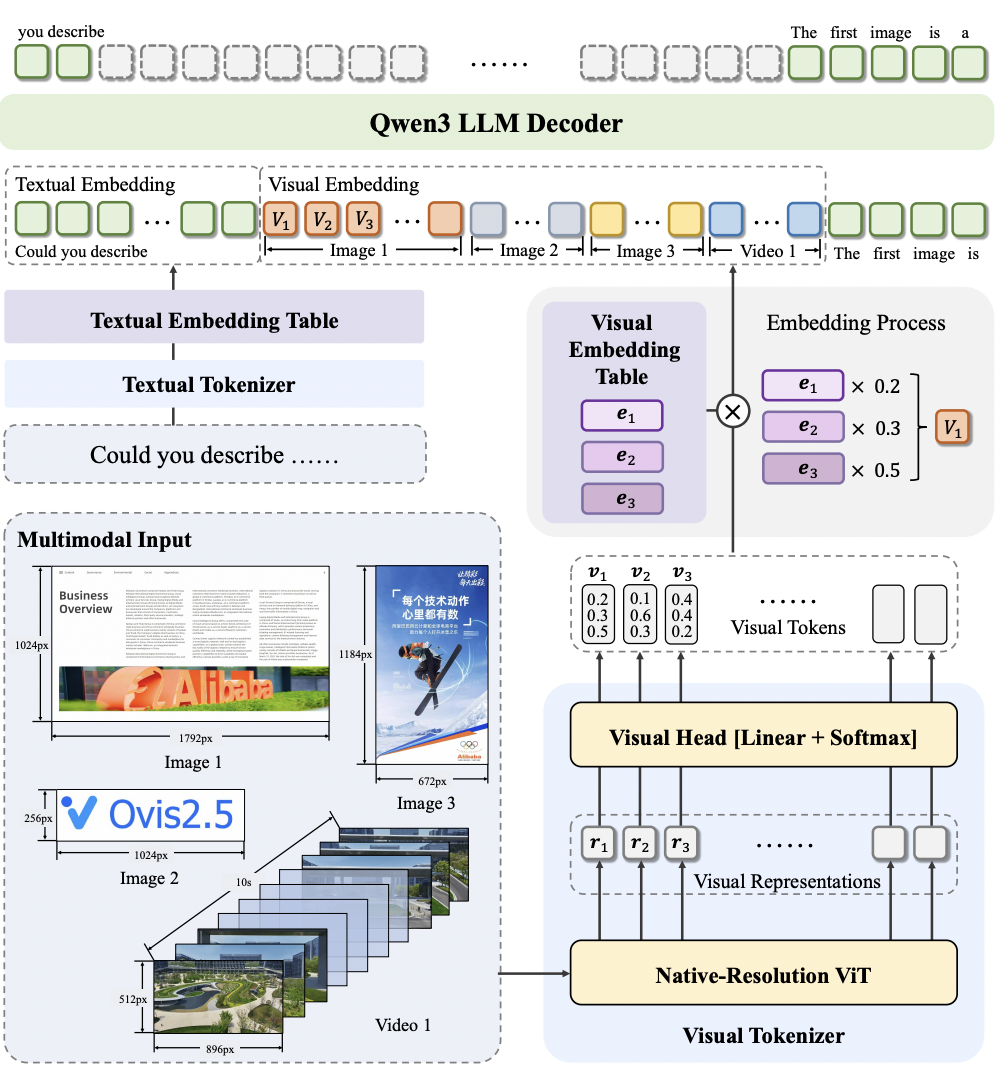

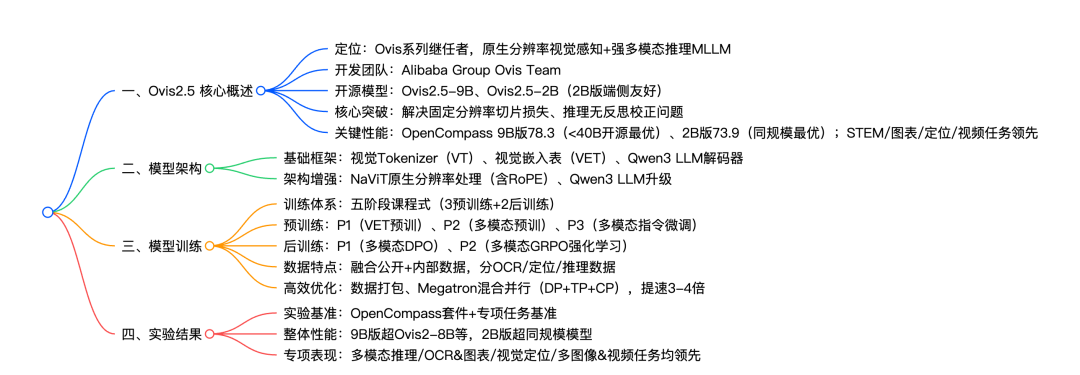

2. Ovis2.5 Technical Report

This paper presents Ovis2.5, a model designed for native-resolution visual perception and strong multimodal reasoning. Ovis2.5 integrates a native-resolution vision transformer that processes images directly at their native, variable resolutions. This avoids the quality loss caused by fixed-resolution tiling and preserves both fine details and the global layout.
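
A quick arithmetic sketch shows what native resolution changes. The 14-pixel patch size and the 448x448 fixed input are assumptions chosen for illustration, not values from the Ovis2.5 report, and padding up to a whole patch is only one possible convention (real encoders also cap the total pixel count).

```python
import math
from typing import Tuple

PATCH = 14  # assumed patch size in pixels, for illustration only


def native_grid(height: int, width: int, patch: int = PATCH) -> Tuple[int, int]:
    """Patch grid when the image keeps its own size (edges padded up to a whole patch)."""
    return math.ceil(height / patch), math.ceil(width / patch)


def fixed_grid(side: int = 448, patch: int = PATCH) -> Tuple[int, int]:
    """Patch grid when every image is first resized to the same side x side square."""
    return side // patch, side // patch


rows, cols = native_grid(1080, 1920)
print((rows, cols), rows * cols)  # (78, 138) 10764
print(native_grid(1920, 1080))    # (138, 78)
print(fixed_grid())               # (32, 32)
```

A 1080x1920 frame becomes a 78x138 grid that roughly keeps its 16:9 shape, whereas the fixed pipeline maps every image, portrait or landscape, onto the same 32x32 grid.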

Paper link: https://go.hyper.ai/nZOmk

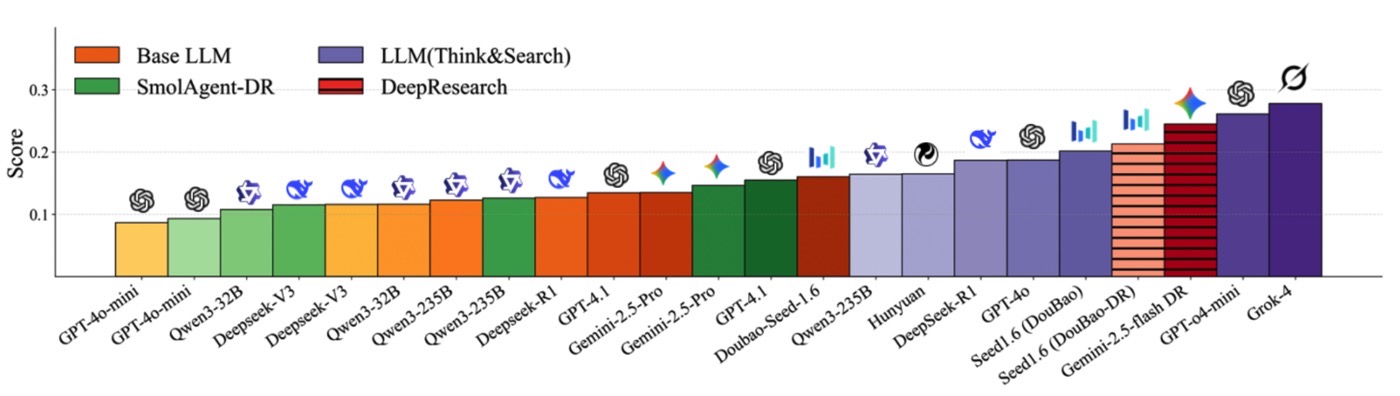

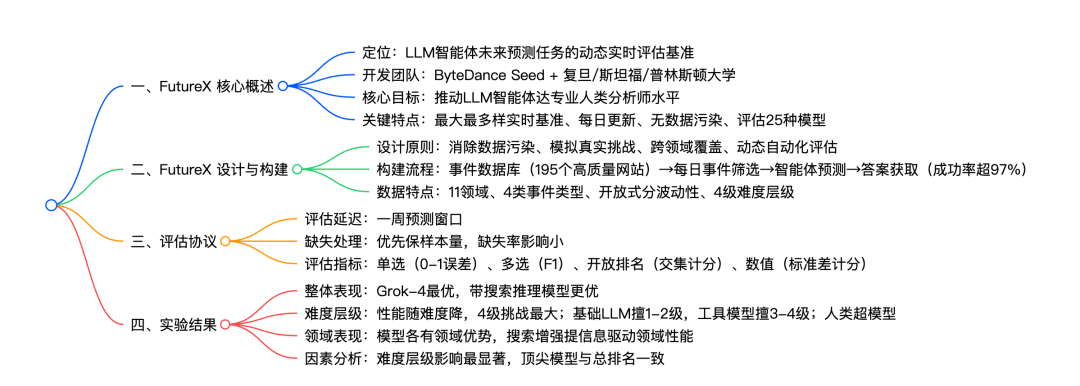

3. FutureX: An Advanced Live Benchmark for LLM Agents in Future Prediction

Future prediction is a demanding task for large language model (LLM) agents, requiring sophisticated reasoning and the ability to adapt dynamically. Yet there has been no large-scale benchmark that updates in real time and accurately evaluates their predictive performance. This paper proposes FutureX, a dynamic, live evaluation benchmark built specifically for LLM agents on future-prediction tasks. FutureX is the largest and most diverse live prediction benchmark to date. It is updated daily and uses an automated pipeline to collect questions and answers, which effectively eliminates data contamination.
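
Why live questions rule out contamination can be shown with a small sketch: a question is graded only once its real-world outcome is known, and only predictions submitted before that moment count, so the answer cannot have appeared in any model's training data or retrieved sources. The classes and the exact-match scoring rule below are hypothetical; FutureX's actual pipeline and metrics are not reproduced here.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Optional


@dataclass
class Question:
    qid: str
    text: str
    resolves_at: datetime         # when the real-world outcome becomes known
    answer: Optional[str] = None  # filled in by the collection pipeline after resolution


@dataclass
class Prediction:
    qid: str
    answer: str
    submitted_at: datetime


def live_accuracy(
    questions: Dict[str, Question], preds: List[Prediction], now: datetime
) -> Optional[float]:
    """Accuracy over resolved questions, counting only predictions made before resolution."""
    correct = total = 0
    for p in preds:
        q = questions[p.qid]
        if q.answer is None or now < q.resolves_at:
            continue  # outcome not known yet, so there is nothing to grade
        if p.submitted_at >= q.resolves_at:
            continue  # made after the fact: the answer could have leaked, so discard
        total += 1
        correct += int(p.answer.strip().lower() == q.answer.strip().lower())
    return correct / total if total else None


questions = {"q1": Question("q1", "Will event X happen by 10 Jan?", datetime(2025, 1, 10), "yes")}
preds = [
    Prediction("q1", "Yes", datetime(2025, 1, 5)),  # on time: graded
    Prediction("q1", "no", datetime(2025, 1, 11)),  # too late: ignored
]
print(live_accuracy(questions, preds, now=datetime(2025, 1, 12)))  # 1.0
print(live_accuracy(questions, preds, now=datetime(2025, 1, 9)))   # None
```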

Paper link: https://go.hyper.ai/rjbaU

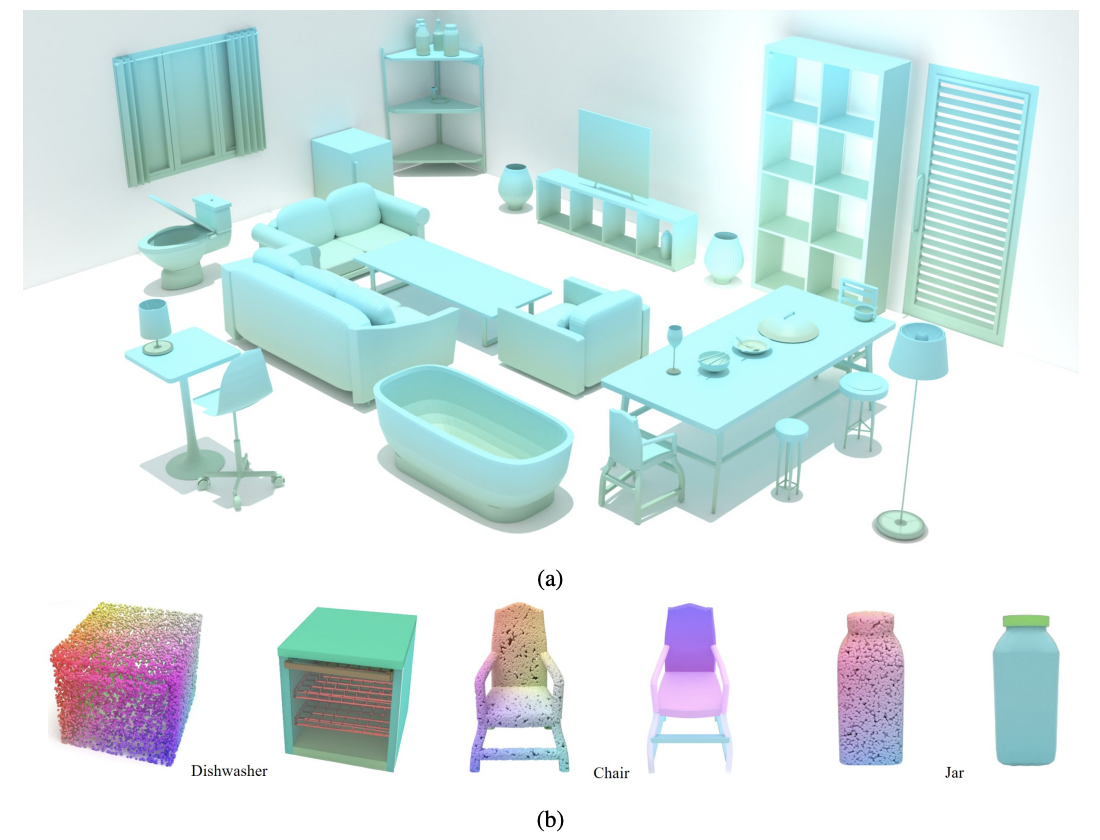

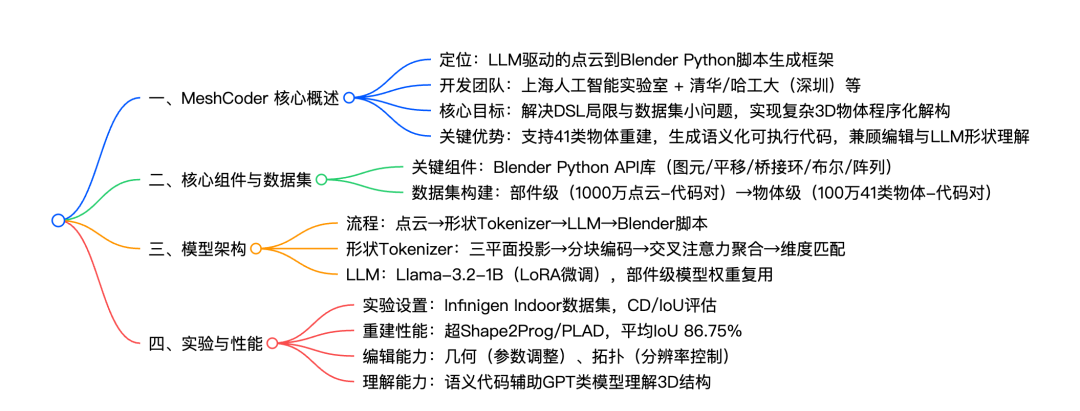

4. MeshCoder: LLM-Powered Structured Mesh Code Generation from Point Clouds

Reconstructing 3D objects as editable programs is crucial for applications such as reverse engineering and shape editing, but existing methods still have significant limitations. This paper proposes MeshCoder, a framework that reconstructs complex 3D objects from point clouds into editable Blender Python scripts. By developing a rich API, building a large-scale object-code dataset, and training a multimodal large language model, the authors achieve high-precision shape-to-code conversion. The approach improves 3D reconstruction performance, supports intuitive geometric and topological editing, and strengthens LLMs' reasoning about 3D shapes.
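
MeshCoder's output is Blender Python, which only runs inside Blender, so the sketch below uses a hypothetical plain-Python stand-in to show what an "editable program" means: the shape is a set of named, parametric parts, and an edit is a parameter change rather than manual vertex surgery. `Box` and the table example are our own illustration, not MeshCoder's API.

```python
from dataclasses import dataclass, replace
from itertools import product
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Hypothetical parametric primitive: an axis-aligned box."""
    center: Point
    size: Point  # full extent along x, y, z

    def vertices(self) -> List[Point]:
        """The 8 corners generated from the parameters."""
        cx, cy, cz = self.center
        sx, sy, sz = self.size
        return [
            (cx + dx * sx / 2, cy + dy * sy / 2, cz + dz * sz / 2)
            for dx, dy, dz in product((-1, 1), repeat=3)
        ]


# The "program": named, parametric parts (a table top and one leg).
table = {
    "top": Box(center=(0.0, 0.0, 1.0), size=(2.0, 1.0, 0.1)),
    "leg": Box(center=(0.9, 0.4, 0.5), size=(0.1, 0.1, 1.0)),
}

# An edit changes one parameter and regenerates the geometry.
wider = {**table, "top": replace(table["top"], size=(3.0, 1.0, 0.1))}

print(max(x for x, _, _ in table["top"].vertices()))  # 1.0
print(max(x for x, _, _ in wider["top"].vertices()))  # 1.5
```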

Paper link: https://go.hyper.ai/EAWIn

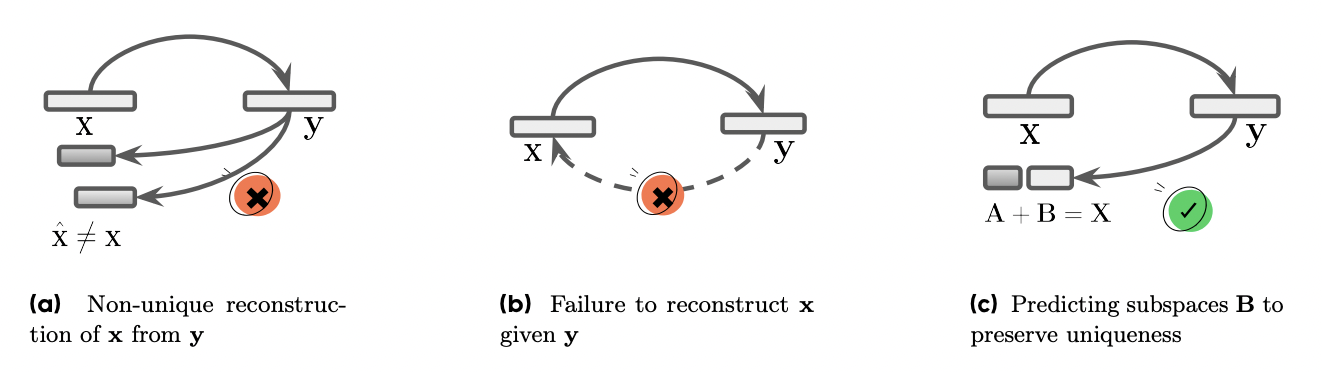

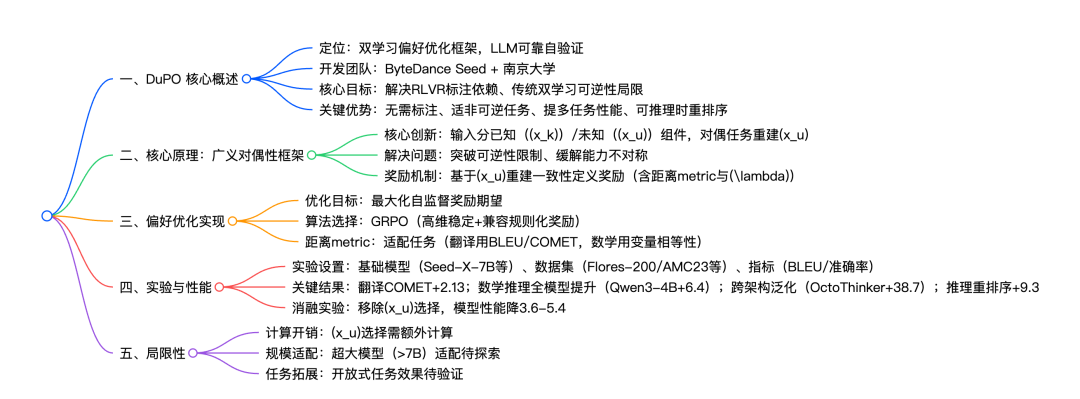

5. DuPO: Enabling Reliable LLM Self-Verification via Dual Preference Optimization

This paper proposes DuPO, a preference optimization framework based on dual learning that generates annotation-free feedback through a generalized form of duality. DuPO addresses two key limitations: first, reinforcement learning with verifiable rewards (RLVR) relies on costly annotations and applies only to verifiable tasks; second, traditional dual learning is restricted to strictly dual task pairs (e.g., translation and back-translation).
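
As we read the paper, DuPO's generalized duality splits a primal task's input into known and unknown parts and defines a dual task that reconstructs the unknown part from the primal output plus the known parts; agreement with the hidden value then acts as a reward without any labelled answer. The toy below applies that pattern to solving `a*x + b = c` with `b` treated as hidden. `exact_dual` is a hypothetical stand-in: in DuPO the reconstruction would be produced by a language model, whereas in this toy the check reduces to plain substitution.

```python
from fractions import Fraction
from typing import Callable, Union

Number = Union[int, str, Fraction]
# Dual solver: (primal answer x, known a, known c) -> reconstructed hidden b.
DualSolver = Callable[[Fraction, Fraction, Fraction], Fraction]


def exact_dual(x: Fraction, a: Fraction, c: Fraction) -> Fraction:
    """Hypothetical stand-in for the model's dual step: recover b from a*x + b = c."""
    return c - a * x


def dual_reward(a: Number, b: Number, c: Number, x_pred: Number,
                dual: DualSolver = exact_dual) -> float:
    """Reward for a primal answer to 'solve a*x + b = c for x', with b treated as hidden.

    No reference value of x is used: the reward only asks whether the dual step
    recovers the hidden input b from the primal answer and the known inputs.
    """
    a, b, c, x = (Fraction(v) for v in (a, b, c, x_pred))
    return 1.0 if dual(x, a, c) == b else 0.0


print(dual_reward(3, 5, 17, 4))     # 1.0  (17 - 3*4 = 5)
print(dual_reward(3, 5, 17, 5))     # 0.0  (17 - 3*5 = 2, not 5)
print(dual_reward(2, 1, 4, "3/2"))  # 1.0  (answers may arrive as text)
```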

Paper link: https://go.hyper.ai/2Gycl

That's all for this week's paper recommendations. For more cutting-edge AI research papers, visit the "Latest Papers" section on hyper.ai's official website.

We also welcome research teams to submit high-quality work and papers to us. Interested teams can add NeuroStar on WeChat (WeChat ID: Hyperai01).

See you next week!