Google DeepMind Releases Perch 2.0, Covering Nearly 15,000 Species and Setting a New State of the Art for Bioacoustic Classification and Detection

Bioacoustics, an important tool bridging biology and ecology, plays a key role in biodiversity conservation and monitoring. Early research relied on traditional signal processing methods such as template matching, which proved inefficient and inaccurate when faced with complex natural acoustic environments and large-scale data.

In recent years, the rapid growth of artificial intelligence (AI) has led deep learning and related methods to replace traditional approaches as the core tools for detecting and classifying bioacoustic events. For example, the BirdNET model, trained on large-scale labeled bird recordings, has performed very well in bird call recognition: it not only distinguishes the calls of different species accurately but also supports individual identification to a certain extent. Models such as Perch 1.0 have likewise been refined through successive iterations and have provided solid technical support for biodiversity monitoring and conservation.

A few days ago, Google DeepMind and Google Research jointly released Perch 2.0, taking bioacoustic research to new heights. Perch 2.0 uses species classification as its core training task. It incorporates more training data from non-avian groups and also employs new data augmentation strategies and training objectives. The model sets a new state of the art on two established bioacoustic benchmarks, BirdSet and BEANS, demonstrating strong performance and broad application prospects.

The relevant research results were published as a preprint on arXiv under the title "Perch 2.0: The Bittern Lesson for Bioacoustics".

Paper address:

https://arxiv.org/abs/2508.04665

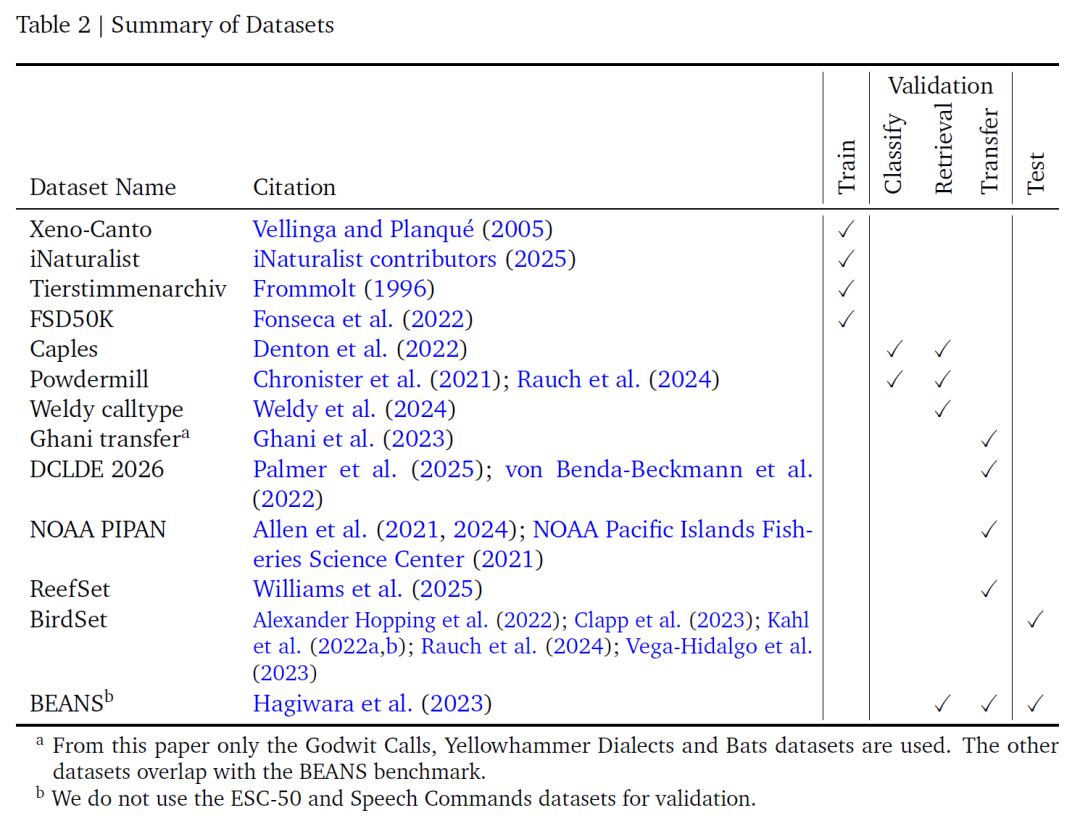

Dataset: Training data construction and evaluation benchmark

This study combined four labeled audio datasets for model training: Xeno-Canto, iNaturalist, Tierstimmenarchiv, and FSD50K. Together, they form the data foundation for model learning. As shown in the table below, Xeno-Canto and iNaturalist are large citizen science repositories: the former is accessed through a public API, while the latter is drawn from audio labeled as research-grade on the GBIF platform. Both contain a large number of recordings of birds and other animals. Tierstimmenarchiv, the animal sound archive of the natural history museum in Berlin, also focuses on bioacoustics. Finally, FSD50K supplements these with a variety of non-avian sounds.

Together, the four datasets cover 14,795 classes, of which 14,597 are species and the remaining 198 are non-species sound events. This broad class coverage supports thorough learning of bioacoustic signals, and the non-bird sound data widens the model's applicability. However, because the first three datasets use different species taxonomies, the research team manually mapped and unified the class names. They also removed bat recordings that could not be represented with the selected spectrogram parameters, to keep the data consistent and usable.

Recording lengths vary greatly across data sources (from less than 1 second to more than 1 hour, with most between 5 and 150 seconds), while the model takes fixed 5-second clips as input. The research team therefore designed two window selection strategies. The random window strategy takes a random 5-second crop from the selected recording. This may include segments in which the target species is silent and so introduces some label noise, but the noise is generally within an acceptable range. The energy peak strategy follows the approach of Perch 1.0: it uses a wavelet transform to select the 6-second region with the strongest energy in the recording and then randomly selects 5 seconds from that region, on the assumption that high-energy regions are more likely to contain the sounds of the target species. This method is consistent with the detector design of models such as BirdNET and captures useful acoustic signals more reliably.
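The two window strategies can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: it assumes NumPy, and it replaces the wavelet-based detector with a plain sliding sum of squared samples. The function names and the zero-padding of short recordings are hypothetical choices made for the example.

```python
import numpy as np

SAMPLE_RATE = 32_000   # Hz, the model's input rate
WINDOW_S = 5.0         # model input length in seconds
PEAK_REGION_S = 6.0    # region picked by the energy-peak strategy


def _pad_to(audio, n):
    """Zero-pad recordings shorter than n samples (an assumption)."""
    if len(audio) < n:
        audio = np.pad(audio, (0, n - len(audio)))
    return audio


def random_window(audio, rng, sr=SAMPLE_RATE):
    """Random-window strategy: any 5 s crop of the recording."""
    n = int(WINDOW_S * sr)
    audio = _pad_to(audio, n)
    start = int(rng.integers(0, len(audio) - n + 1))
    return audio[start:start + n]


def energy_peak_window(audio, rng, sr=SAMPLE_RATE):
    """Energy-peak strategy: locate the 6 s region with the most energy,
    then take a random 5 s crop inside it.

    Assumption: energy is a sum of squared samples, standing in for the
    wavelet-based detector described in the text.
    """
    n_region = int(PEAK_REGION_S * sr)
    n = int(WINDOW_S * sr)
    audio = _pad_to(audio, n_region)
    # Sliding sum of squares computed from a cumulative sum.
    csum = np.concatenate(([0.0], np.cumsum(audio.astype(np.float64) ** 2)))
    region_energy = csum[n_region:] - csum[:-n_region]
    region_start = int(np.argmax(region_energy))
    offset = int(rng.integers(0, n_region - n + 1))
    start = region_start + offset
    return audio[start:start + n]
```

Because the 5-second crop is drawn at random inside the 6-second region, a short call near the edge of the region can still fall outside the crop, which is one reason some label noise remains even with the energy peak strategy.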

To further improve the model's robustness in complex acoustic environments, the research team adopted a variant of the mixup data augmentation, which generates a composite signal by mixing multiple audio windows. First, the number of audio signals to mix is sampled from a beta-binomial distribution, and the mixing weights are then sampled from a symmetric Dirichlet distribution. The selected signals are combined as a weighted sum, and the gain is normalized.

Unlike the original mixup, this method uses a multi-hot target vector rather than a weighted average of one-hot vectors, so that every sound within the window, regardless of loudness, is expected to be identified with high confidence. The distribution parameters are tuned as hyperparameters, which helps the model separate overlapping sounds and improves classification accuracy.
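A rough NumPy sketch of this multi-source mixup is given below. The parameter names (`max_extra`, `a`, `b`, `alpha`) and their defaults are hypothetical, and both the peak-based gain normalization and the label-union target are assumptions made for illustration; the article does not give these details.

```python
import numpy as np


def multi_mixup(windows, targets, rng, max_extra=4, a=1.0, b=1.0, alpha=1.0):
    """Mix a random number of audio windows into one training example.

    windows: (N, T) array of equal-length audio windows.
    targets: (N, C) multi-hot label array.
    max_extra, a, b, alpha: hypothetical hyperparameters.
    """
    n_avail = len(windows)
    # Beta-binomial draw: p ~ Beta(a, b), then extra ~ Binomial(max_extra, p).
    p = rng.beta(a, b)
    n_mix = min(1 + int(rng.binomial(max_extra, p)), n_avail)
    idx = rng.choice(n_avail, size=n_mix, replace=False)
    # Symmetric Dirichlet weights, one per selected window.
    weights = rng.dirichlet(np.full(n_mix, alpha))
    mixed = weights @ windows[idx]
    # Gain normalization (assumption: rescale to unit peak amplitude).
    peak = np.max(np.abs(mixed))
    if peak > 0:
        mixed = mixed / peak
    # Multi-hot target: union of the labels, independent of the weights.
    target = targets[idx].max(axis=0)
    return mixed, target, idx, weights
```

Taking the union of labels means a quiet background species receives the same target value as the loudest one, which matches the stated goal of identifying every sound in the window regardless of loudness.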

Model evaluation uses two established benchmarks, BirdSet and BEANS. BirdSet contains six fully annotated soundscape datasets from the continental United States, Hawaii, Peru, and Colombia; the model is evaluated without fine-tuning, using the output of the prototype learning classifier directly. BEANS covers 12 cross-taxon test tasks (birds, terrestrial and marine mammals, anurans, and insects); only its training sets are used to fit linear and prototype probes, and the embedding network is again left unchanged.

Perch 2.0: A high-performance bioacoustic pre-training model

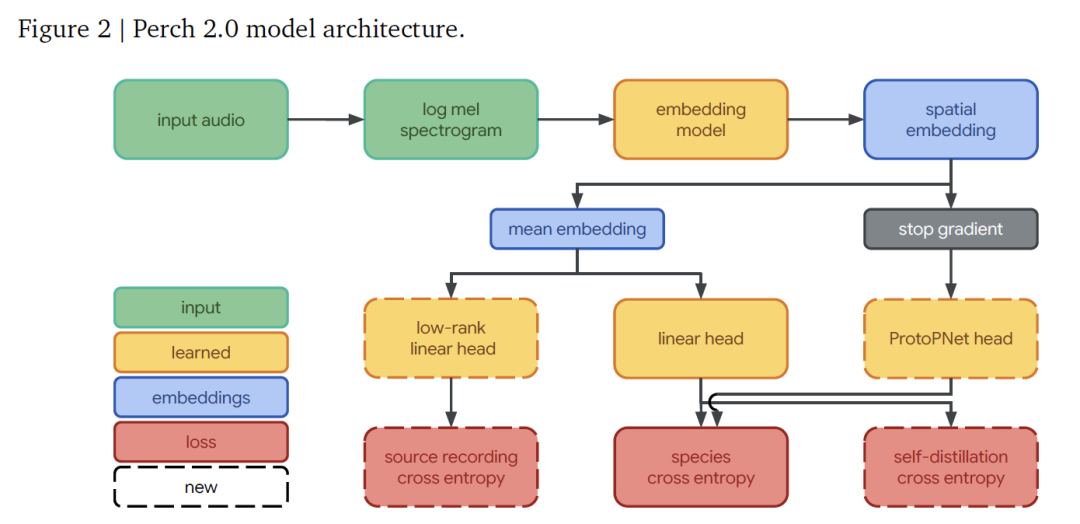

The Perch 2.0 model architecture consists of a frontend, an embedding model, and a set of output heads. These parts work together to cover the full pipeline from audio signal to species identification.

The frontend converts raw audio into features the model can process. It takes mono audio sampled at 32 kHz; for a 5-second clip (160,000 samples), it computes a log-mel spectrogram with a 20 ms window and a 10 ms hop, producing 500 frames of 128 mel bands each over the frequency range of 60 Hz to 16 kHz. These features are the basis for all subsequent analysis.
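To make the frame arithmetic concrete, here is a minimal NumPy sketch of a log-mel frontend with the stated parameters. The FFT size, Hann window, tail padding, triangular filter shape, and log floor are all assumptions chosen for the example; the article only states the window, hop, band count, frequency range, and resulting shape.

```python
import numpy as np

SR = 32_000                  # sample rate in Hz
WIN = SR * 20 // 1000        # 20 ms window -> 640 samples
HOP = SR * 10 // 1000        # 10 ms hop    -> 320 samples
N_MELS, F_MIN, F_MAX = 128, 60.0, 16_000.0


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_fft):
    """Triangular filters evaluated at the FFT bin centre frequencies."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / SR)                    # (n_bins,)
    mels = np.linspace(hz_to_mel(F_MIN), hz_to_mel(F_MAX), N_MELS + 2)
    edges = mel_to_hz(mels)
    lo, mid, hi = edges[:-2, None], edges[1:-1, None], edges[2:, None]
    rising = (freqs - lo) / (mid - lo)
    falling = (hi - freqs) / (hi - mid)
    return np.clip(np.minimum(rising, falling), 0.0, None)        # (N_MELS, n_bins)


def log_mel(audio, n_fft=1024, floor=1e-6):
    # Pad the tail so that a 5 s clip yields exactly 500 frames
    # (the padding scheme is an assumption).
    audio = np.pad(audio, (0, WIN - HOP))
    n_frames = 1 + (len(audio) - WIN) // HOP
    idx = np.arange(WIN)[None, :] + HOP * np.arange(n_frames)[:, None]
    frames = audio[idx] * np.hanning(WIN)
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2     # (frames, bins)
    mel = power @ mel_filterbank(n_fft).T                         # (frames, mels)
    return np.log(np.maximum(mel, floor))
```

With a 320-sample hop, 160,000 samples divide into exactly 500 hops, which is where the 500-frame figure comes from.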

The embedding network uses the EfficientNet-B3 architecture, a convolutional residual network with about 12 million parameters that achieves high parameter efficiency through depthwise separable convolutions. It is larger than the 7.8-million-parameter EfficientNet-B1 used in the previous version of Perch, to match the growth in training data.

The embedding network outputs a spatial embedding of shape (5, 3, 1536), where the dimensions correspond to time, frequency, and feature channels respectively. Averaging over the two spatial dimensions gives a 1536-dimensional global embedding, which serves as the core feature for subsequent classification.

The output heads handle the specific prediction and learning tasks. There are three of them. The linear classifier projects the global embedding into the 14,795-dimensional class space; training it encourages the embeddings of different species to be linearly separable, which improves linear probing when adapting to new tasks. The prototype learning classifier takes the spatial embedding as input, learns 4 prototypes for each class, and predicts using the prototype with the maximum activation; this design is derived from AudioProtoPNet in the bioacoustics literature. The source prediction head is a linear classifier that predicts, from the global embedding, which original recording an audio clip came from. Since the training set contains more than 1.5 million source recordings, this head is computed efficiently through a low-rank projection of rank 512, and it supports the self-supervised source prediction loss.
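The shapes flowing through the pooling step and the three heads can be sketched as below. This is only an illustration with random weights: the class and source counts are scaled down so the example runs in little memory, and the use of cosine similarity as the prototype activation is an assumption, not something the article specifies.

```python
import numpy as np

rng = np.random.default_rng(0)

# Embedding shape from the text. Class and source counts are scaled down here;
# the model uses 14,795 classes and more than 1.5 million source recordings.
T, F, D = 5, 3, 1536
NUM_CLASSES, NUM_PROTOS, NUM_SOURCES, RANK = 20, 4, 1000, 512

spatial = rng.standard_normal((T, F, D)).astype(np.float32)  # stand-in network output
global_emb = spatial.mean(axis=(0, 1))                        # (1536,)

# 1) Linear classifier on the global embedding.
W_cls = 0.01 * rng.standard_normal((D, NUM_CLASSES)).astype(np.float32)
linear_logits = global_emb @ W_cls                            # (NUM_CLASSES,)

# 2) Prototype classifier on the spatial embedding: each class owns 4
#    prototypes, and the class score is the largest activation over all
#    time-frequency positions and prototypes (assumption: cosine similarity).
protos = rng.standard_normal((NUM_CLASSES, NUM_PROTOS, D)).astype(np.float32)
cells = spatial.reshape(-1, D)                                # (15, 1536)
cells_n = cells / np.linalg.norm(cells, axis=1, keepdims=True)
protos_n = protos / np.linalg.norm(protos, axis=2, keepdims=True)
sim = np.einsum("pd,ckd->cpk", cells_n, protos_n)             # (classes, positions, prototypes)
proto_logits = sim.max(axis=(1, 2))                           # (NUM_CLASSES,)

# 3) Source head: the factorization D -> RANK -> NUM_SOURCES avoids a full
#    D x NUM_SOURCES weight matrix.
U = 0.01 * rng.standard_normal((D, RANK)).astype(np.float32)
V = 0.01 * rng.standard_normal((RANK, NUM_SOURCES)).astype(np.float32)
source_logits = (global_emb @ U) @ V                          # (NUM_SOURCES,)
```

Taking 1.5 million sources as a round figure, a full 1536 x 1,500,000 matrix would hold about 2.3 billion weights, while the rank-512 factorization needs 1536 x 512 + 512 x 1,500,000, or about 0.77 billion, roughly a threefold reduction.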

Model training is optimized end-to-end through three independent objectives:

* Species classification: the linear classifier is trained with softmax activation and cross-entropy loss, with uniform weight assigned to the target classes;

* Self-distillation: the prototype learning classifier acts as the "teacher", and its predictions guide the "student" linear classifier; an orthogonality loss pushes the prototypes apart, and the gradient is not back-propagated to the embedding network;

* Source prediction: a self-supervised objective that treats each original recording as its own class, pushing the model to capture distinctive features.
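The three objectives can be written down as forward computations in NumPy, as in the hedged sketch below. NumPy has no automatic differentiation, so the stop-gradient is only noted in a comment. The exact form of the distillation and orthogonality terms, and the default weights `w_distill` and `w_source`, are assumptions for illustration.

```python
import numpy as np


def log_softmax(logits):
    z = logits - logits.max()
    return z - np.log(np.exp(z).sum())


def species_loss(linear_logits, multi_hot):
    """Softmax cross-entropy with uniform weight over the target classes."""
    target = multi_hot / multi_hot.sum()
    return float(-(target * log_softmax(linear_logits)).sum())


def distillation_loss(student_logits, teacher_logits):
    """Cross-entropy of the student (linear head) against the teacher's
    (prototype head's) predicted distribution. In an autodiff framework the
    relevant term would be wrapped in a stop-gradient; here only the forward
    value is computed. Assumption: softmax on both sides."""
    teacher = np.exp(log_softmax(teacher_logits))
    return float(-(teacher * log_softmax(student_logits)).sum())


def orthogonality_loss(prototypes):
    """Penalize overlap between one class's prototypes (assumed form:
    squared Frobenius distance of the Gram matrix from the identity)."""
    p = prototypes / np.linalg.norm(prototypes, axis=1, keepdims=True)
    gram = p @ p.T
    return float(((gram - np.eye(len(p))) ** 2).sum())


def source_loss(source_logits, source_id):
    """Each source recording is treated as its own class."""
    return float(-log_softmax(source_logits)[source_id])


def total_loss(linear_logits, proto_logits, source_logits, multi_hot,
               source_id, w_distill=1.5, w_source=0.1):
    """Weighted sum of the three objectives (weights are illustrative)."""
    return (species_loss(linear_logits, multi_hot)
            + w_distill * distillation_loss(linear_logits, proto_logits)
            + w_source * source_loss(source_logits, source_id))
```

With all logits equal, each term reduces to the log of the number of classes, which is a convenient sanity check for an implementation.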

Training is divided into two phases. The first phase focuses on training the prototype learning classifier (without self-distillation, for up to 300,000 steps); the second phase enables self-distillation (for up to 400,000 steps). Both phases use the Adam optimizer.

Hyperparameters are selected with Vizier, Google's black-box optimization service. In the first phase, the search covers the learning rate, dropout rate, and similar settings, and the best model is chosen after two rounds of screening. In the second phase, the self-distillation loss weight is added to the search and the search continues. Both window sampling methods are used throughout.

The results show that the first phase favors mixing 2-5 signals with a source prediction loss weight of 0.1-0.9, while the self-distillation phase favors a small learning rate, less mixup, and a high self-distillation loss weight of 1.5-4.5. These settings underpin the final model's performance.

Evaluating the Generalization Ability of Perch 2.0: Baseline Performance and Practical Value

The evaluation of Perch 2.0 focuses on generalization. It examines performance on bird soundscapes (which differ significantly from the training recordings) and on tasks other than species identification (such as call type identification), as well as transfer to non-avian groups such as bats and marine mammals. Because practitioners often have little or no labeled data, the core principle of the evaluation is to verify the effectiveness of the frozen embedding network: features are extracted once, and new tasks such as clustering and few-shot learning can then be handled quickly on top of them.
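As an illustration of what "extract features once, then adapt" can look like, the sketch below fits a nearest-centroid classifier on precomputed embeddings. This is a generic few-shot technique chosen for the example, not the probe used in the paper, and the function names are hypothetical.

```python
import numpy as np


def fit_class_centroids(embeddings, labels):
    """Few-shot 'training': one unit-normalized mean embedding per class."""
    classes = np.unique(labels)
    cents = np.stack([embeddings[labels == c].mean(axis=0) for c in classes])
    cents = cents / np.linalg.norm(cents, axis=1, keepdims=True)
    return classes, cents


def predict(embeddings, classes, centroids):
    """Assign each embedding to the class with the closest centroid
    in cosine distance (equivalently, the highest cosine similarity)."""
    e = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    return classes[np.argmax(e @ centroids.T, axis=1)]
```

Since the embedding network stays frozen, the stored embeddings can be reused for every new task, and only this lightweight step is repeated.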

The model selection phase verifies practicality from three aspects:

* Pre-trained classifier performance, using ROC-AUC to evaluate out-of-the-box species prediction on fully annotated bird datasets;

* One-shot retrieval, using cosine distance to measure clustering and search performance;

* Linear transfer, simulating few-shot scenarios to test adaptability.

The scores for these tasks are combined using a geometric mean, and the final results across the 19 sub-datasets reflect the model's practical usability.
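The metrics named above can be sketched in a few lines of NumPy. The helper names are hypothetical, and the way per-task scores are grouped before averaging is not specified in the article, so the geometric mean here simply takes a flat list of scores.

```python
import numpy as np


def cosine_distance(query, bank):
    """Cosine distance between one query embedding and each row of bank."""
    q = query / np.linalg.norm(query)
    b = bank / np.linalg.norm(bank, axis=1, keepdims=True)
    return 1.0 - b @ q


def roc_auc(scores, labels):
    """Probability that a random positive outscores a random negative
    (ties count as one half)."""
    pos, neg = scores[labels == 1], scores[labels == 0]
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)


def one_shot_retrieval_auc(query, bank, labels):
    """Rank the bank by closeness to a single labeled example."""
    return roc_auc(-cosine_distance(query, bank), labels)


def geometric_mean(values):
    values = np.asarray(values, dtype=np.float64)
    return float(np.exp(np.log(values).mean()))
```

A geometric mean penalizes a model that does very poorly on any one task more than an arithmetic mean would, so a high combined score requires reasonable performance everywhere.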

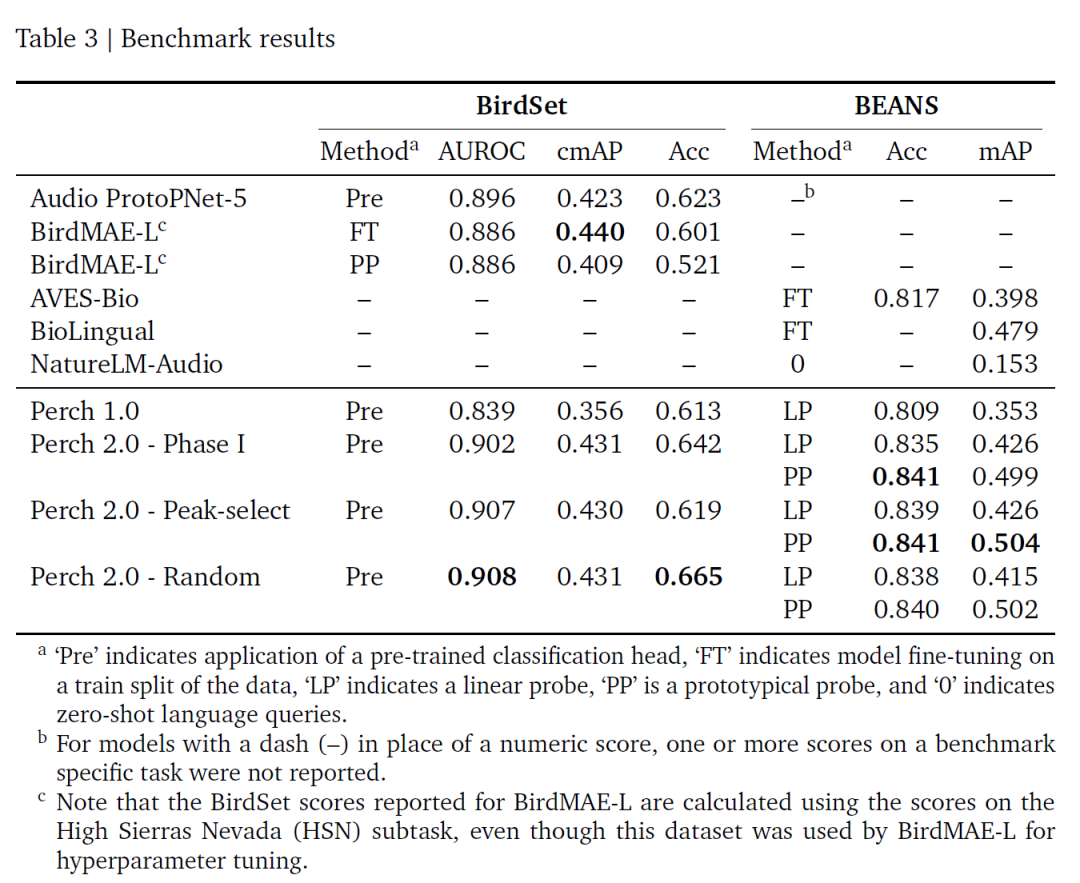

The evaluation results on the BirdSet and BEANS benchmarks are shown in the table. Perch 2.0 performs strongly on many metrics, and its ROC-AUC in particular is the best reported to date, with no fine-tuning required. The random window and energy peak window training strategies perform similarly, presumably because self-distillation reduces the impact of label noise.

Overall, Perch 2.0 is built on supervised learning and is closely tailored to the properties of bioacoustic data. Its results show that high-quality transfer learning does not require very large models: a well-tuned supervised model combined with data augmentation and auxiliary objectives can perform well. Its fixed embedding design, which removes the need for repeated fine-tuning, reduces the cost of large-scale data processing and enables agile modeling. Future directions in this field include building realistic evaluation benchmarks, developing new tasks that use metadata, and exploring semi-supervised learning.

The intersection of bioacoustics and artificial intelligence

At the intersection of bioacoustics and artificial intelligence, research directions such as cross-taxon transfer learning, self-supervised objective design, and fixed embedding network optimization have prompted extensive exploration in academia and industry around the world.

The cosine-distance virtual adversarial training (CD-VAT) technique developed by a University of Cambridge team improves the discriminability of acoustic embeddings through consistency regularization. It recovers 32.5% of the equal error rate improvement on a large-scale speaker verification task, offering a new approach to semi-supervised learning in speech processing.

MIT and CETI collaborated on research into sperm whale vocalizations. Using machine learning, they isolated a "sound alphabet" composed of rhythm, tempo, rubato, and ornamentation. The work revealed that the whales' communication system is far more complex than expected: the Eastern Caribbean sperm whale clan alone has at least 143 distinguishable vocalization combinations, and its information carrying capacity is reported to exceed even the basic structure of human language.

The photoacoustic imaging technology developed by ETH Zurich breaks through the acoustic diffraction limit by loading microcapsules with iron oxide nanoparticles. It achieves super-resolution imaging of deep-tissue microvessels (with resolution up to 20 microns) and has shown potential for multi-parameter dynamic monitoring in brain science and tumor research.

At the same time, the open source project BirdNET has accumulated 150 million recordings worldwide and has become a benchmark tool for ecological monitoring. Its lightweight version, BirdNET-Lite, can run in real time on edge devices such as the Raspberry Pi, supports the identification of more than 6,000 bird species, and provides a low-cost solution for biodiversity research.

The AI bird song recognition system deployed by the Japanese company Hylable in Hibiya Park combines a multi-microphone array with a deep neural network (DNN). It outputs sound source location and species identification simultaneously, with an accuracy rate of over 95%. Its technical framework has been extended to urban green space ecological assessment and the construction of accessibility facilities.

It is worth noting that Google DeepMind's Project Zoonomia is exploring the evolutionary mechanisms behind acoustic commonalities across species by integrating genomic and acoustic data from 240 mammalian species. The study found that the harmonic energy distribution of dogs' joyful barking (3rd-5th harmonic energy ratio 0.78±0.12) is highly homologous to that of dolphin social whistles (0.81±0.09). This molecular biological correlation not only provides a basis for cross-species model transfer but also suggests a new modeling path for "biologically inspired AI": incorporating phylogenetic tree information into embedding network training to overcome the limitations of traditional bioacoustic models.

These explorations are bringing a new dimension to the marriage of bioacoustics and artificial intelligence. When the depth of academic research meets the breadth of industrial application, life signals once hidden within rainforest canopies and deep-sea reefs are being more clearly captured and interpreted, ultimately transforming into action guides for protecting endangered species and intelligent solutions for the harmonious coexistence of cities and nature.
