Demis Hassabis Leads DeepMind Away From the Era of Pure Scientific Research: As AI4S Becomes the New Narrative, Ethical Challenges Continue

In April 2025, Time magazine released its annual "TIME100" list of the 100 most influential people in the world, and Google DeepMind CEO Demis Hassabis appeared on one of its covers. In a feature titled "Preparing for AI's Endgame," Time described Hassabis, a leading figure in bringing deep learning into scientific research, as someone shaping the direction of global AI technology and its ethics amid the current wave of AI development.

From "game-playing systems" to "scientific computing," Hassabis has driven a key shift in deep learning over the past decade, prompting the world to rethink the ultimate destination of artificial general intelligence (AGI). Today, as generative AI expands rapidly and ethical and regulatory controversies escalate, Hassabis and DeepMind are trying to reshape the direction of AGI development through a more cautious approach. This "high-risk, long-term experiment" led by DeepMind is an attempt to sketch how humans and AI will coexist in the future.

By placing Hassabis at the critical juncture of science and society, Time stated bluntly that the future of AI may be in the process of being rewritten: "If Hassabis's predictions are correct, the turbulent early decades of the 21st century may end in a glorious utopia; if his estimates are wrong, the future may be darker than anyone imagines. But one thing is certain: in his pursuit of artificial general intelligence, Demis Hassabis is playing the riskiest game of his life."

From chess prodigy to AI leader: Demis Hassabis is rewriting how computation is done in the life sciences.

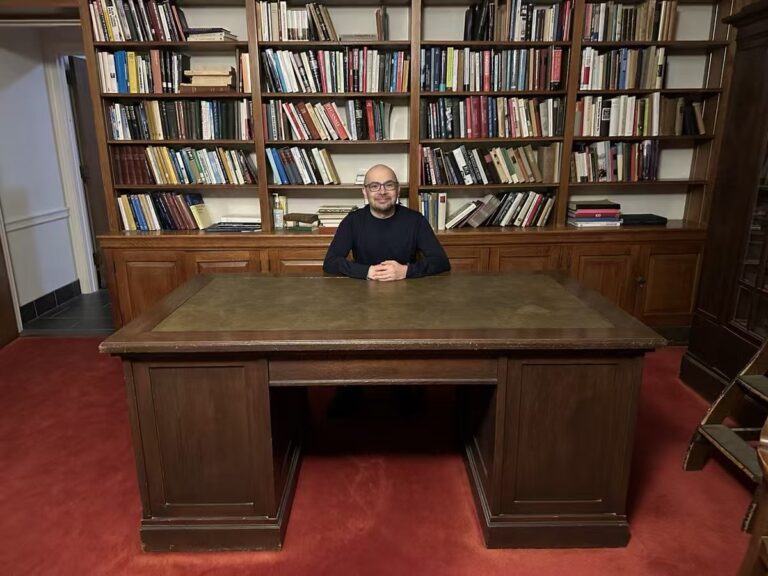

Demis Hassabis was born in London in 1976. He showed exceptional logic and memory from a young age and emerged as a rising star of British youth chess by the age of 13, at one point ranking first in England and second in the world for his age group, and representing England in numerous international tournaments. He has often described chess as an early prototype of training for artificial-intelligence thinking: a finite set of rules that gives rise to an almost infinitely complex decision space.

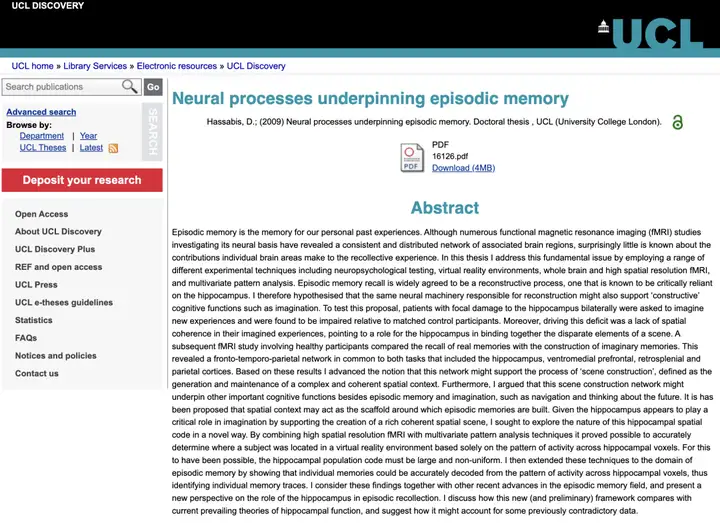

At 17, Hassabis joined Bullfrog Productions as a game designer and worked on the classic game "Theme Park," before going on to study computer science at the University of Cambridge. After leaving the game industry, he earned a PhD in cognitive neuroscience from University College London (UCL), where he studied the neural mechanisms of human memory and imagination. His research has been published in leading journals such as Nature and Science, and his exploration of "biologically inspired intelligence" laid the foundation for his later work in machine learning.

To achieve his goal of "building a general-purpose intelligent system capable of learning all tasks," Hassabis co-founded DeepMind in 2010 with Shane Legg and Mustafa Suleyman. Their research initially focused on "training AI to play Atari games," attempting to use deep reinforcement learning to enable AI to achieve superhuman performance in these games.

In 2014, Google acquired DeepMind for approximately £400 million (about $650 million), giving the company access to far greater computing power and resources and leading to several technological breakthroughs. In 2015 and 2016, DeepMind's AlphaGo program defeated European Go champion Fan Hui and then world champion Lee Sedol, demonstrating for the first time the powerful decision-making capabilities of deep reinforcement learning systems and marking a milestone for the field of AI.

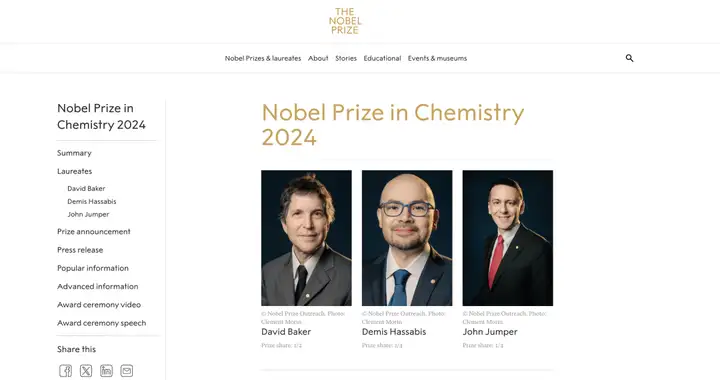

Then, in 2020, DeepMind unveiled the second version of its AlphaFold system, a model that predicts the three-dimensional structures of hundreds of thousands of proteins with near-experimental accuracy. In doing so, it cracked the "protein folding problem" that had challenged the life sciences for more than 50 years. Nature named the achievement one of the year's "most influential scientific achievements," research institutions around the world quickly adopted it in fields such as drug design and vaccine development, and the work earned Hassabis and John Jumper a share of the 2024 Nobel Prize in Chemistry.

Thus, DeepMind, under Hassabis's leadership, has transformed from a pioneer in game intelligence into a key force connecting AI with the forefront of basic science.

Hassabis joked about the honor, which he called a lifelong dream: "If I had learned of the award on television, I would have had a heart attack." The Nobel Prize did not slow him down, however; he immediately turned his attention to even more challenging frontier research. As Time noted, AlphaFold's impact may have been enough to earn its creators a Nobel Prize, but by the standards of artificial intelligence it is "hopelessly narrow": AlphaFold can only model protein structures and cannot conduct broader research. Hassabis, by contrast, hopes to usher in an "almost unimaginable" future by building more general AGI technology.

"I believe that some of the biggest problems facing society today, whether it's climate change or disease, will be alleviated through artificial intelligence solutions. I would be very worried about society today if I didn't know that something as transformative as artificial intelligence was about to emerge," Hassabis said in a Time magazine interview. He added that if AGI technology is realized, global conflicts over scarce resources will gradually dissipate, ushering in a new era of peace and prosperity.

Rejecting zero-sum games, Hassabis stays committed to his AI4S strategy.

As co-founder and CEO of DeepMind, Demis Hassabis continues to steer AI research away from conceptual exploration of artificial general intelligence (AGI) and toward "AI for Science (AI4S)," a strategy with scientific discovery at its core.

"I first and foremost see myself as a scientist. Everything I've done in my life has been in pursuit of knowledge and an attempt to understand the world around us," says Demis Hassabis. He believes that the true potential of AI lies not in imitating humans, but in expanding the boundaries of human cognition. The long-term value of AI in fields such as life sciences, materials design, climate modeling, and energy optimization far outweighs the short-term commercial benefits of generative AI. Therefore, DeepMind's research focus has shifted from "whether intelligence can think like a human" to "whether intelligence can accelerate scientific discovery." Along with the launch of AlphaFold 3, DeepMind also launched the "AI for Science Grand Challenge" program, attempting to solve fundamental scientific problems across disciplines using general-purpose models.

Hassabis holds a relatively conservative view on the realization of AGI. Previously, OpenAI CEO Sam Altman predicted that AGI would appear within this decade, and Anthropic co-founder Dario Amodei even believed it might be as early as 2026. Hassabis, however, expects it will still require 5 to 10 years of development because true AGI should have the ability to make scientific discoveries and deduce new laws of nature with limited information.

In pursuit of this strategy, Google DeepMind released Gemini 2.5 in 2025, which outperformed comparable models from OpenAI and Anthropic on multiple benchmarks. Meanwhile, Project Astra, a general-purpose digital assistant powered by Gemini, is regarded as a key project for the next phase. Hassabis emphasized, however, that while AGI may reshape the workforce, this research is by no means intended to replace humans: AGI capable of conducting scientific research autonomously will usher in a more resource-rich, knowledge-driven, "non-zero-sum" society. "In our world with limited resources, things eventually become a zero-sum game. What I envision is a world that is no longer a zero-sum game, at least from a resource perspective."

As AI approaches its ideal state, can AGI be achieved safely?

In fact, the name Demis Hassabis stands not only for technological breakthroughs but also for persistent controversy. Many media outlets have expressed skepticism about Hassabis and the honors he has received. As the French newspaper Le Monde pointed out in an editorial, although the 2024 Nobel Prize in Chemistry recognized achievements in AI research, it also magnified concerns about "the complexity and lack of transparency of AI methods."

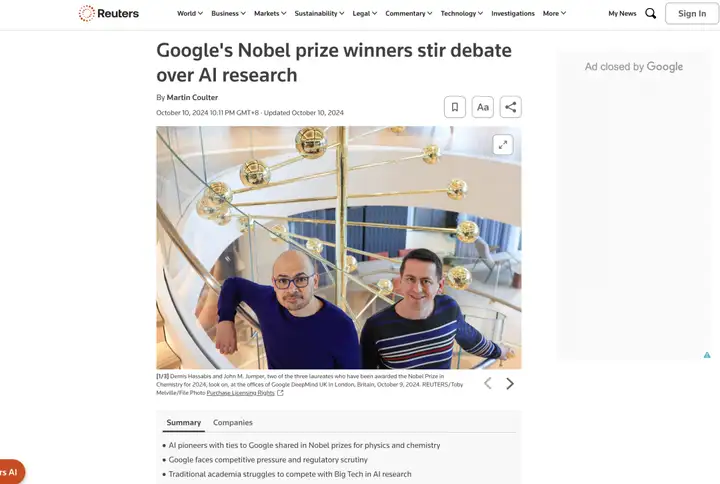

According to Reuters, after the Nobel Prize results were announced, Professor Wendy Hall, a computer scientist and UN adviser, said that while the laureates' work was commendable, the Nobel committee's particular attention to AI had, to some extent, influenced the outcome.

Bloomberg technology columnist Parmy Olson offered a stark critique of the deeper implications of the Nobel Prize. In her column, Olson wrote that Hassabis's award-winning achievements are certainly remarkable, but they may also obscure the fact that control over AI is concentrated in a few tech giants: "The Nobel Foundation seems eager to recognize advances in AI, and the scientific idea that problems can be solved computationally, as strong contenders for the top prize, but this recognition also carries risks. It may mask concerns about the technology itself and the increasing concentration of AI technology in the hands of a few companies."

Olson also criticized the Nobel Prize for being too hasty in praising a commercial entity. "The Nobel Prize is awarded to individuals who have made outstanding contributions to the sciences, the humanities, and peace," she wrote, arguing that by boldly recognizing the work of an AI company, the foundation had awarded Hassabis the prize somewhat prematurely. Olson emphasized, "We hope this award will encourage technology companies to invest more in AI for public services, but we must not let it cloud our assessment of AI's risks."

Meanwhile, Hassabis's geopolitical stance has become increasingly controversial. When Google acquired DeepMind in 2014, Hassabis insisted on a "firewall" clause explicitly prohibiting the use of the company's technology for military purposes, in the hope that AI would not become a war machine. After several corporate restructurings, however, this safeguard gradually faded. Since 2023, DeepMind's commitment not to participate in military projects has been quietly adjusted, and some military- or defense-related collaborations have reappeared, drawing questions from academia and the public. According to media reports, about 200 DeepMind employees who objected to Google's defense contracts signed an internal letter dated May 16, 2024, voicing their opposition. The letter stated, "Any association with the military and weapons manufacturing would affect DeepMind's position as an ethical and responsible leader in the field of AI and would violate our mission statement and established AI principles."

Until the release of ChatGPT set off a race among the tech giants, DeepMind focused more on scientific research than on product development. Now, under competitive pressure in the consumer market, content about healthcare and climate has disappeared from its homepage. Critics argue that this shift shows DeepMind making an ethical compromise under geopolitical pressure and abandoning its earlier science-first stance. In Time's report, however, Hassabis does not accept that he compromised, describing the change as a response to the reality of a "more dangerous world." He argues that, given the turbulence in the international landscape over the past decade, maintaining technological secrecy cannot truly guarantee security. This explanation has a certain logic, but it also exposes the ethical tension between his ideals and reality.

As computing power and model development become global strategic resources, technological barriers and export restrictions are deepening, widening the trust gap between countries. To keep systemic confrontation from hindering the safe development of AI, Hassabis has attended numerous international AI safety conferences since 2023, calling on countries to establish baseline cooperation mechanisms for AI safety and research standards and to reduce the risk of misuse by sharing assessment frameworks and risk-monitoring systems.

In short, by placing Hassabis's portrait at the center of its red-bordered cover, Time highlighted the balance he must strike between technological openness and technological security. The research path he represents comes with real-world questions of ethics, governance, and the redistribution of power. Given uncertain timelines and multiple risks, the key question is not whether any individual or company can realize a particular vision, but whether society can establish enforceable and transparent oversight to constrain this technology. Whatever the outcome, how responsibility and benefits are distributed will remain a core issue for AGI.

Reference Links:

1. https://techcrunch.com/2024/08/22/deepmind-workers-sign-letter-in-protest-of-googles-defense-contracts/

2. https://www.taipeitimes.com/News/editorials/archives/2024/10/12/2003825156

3. https://www.lemonde.fr/idees/article/2024/10/25/l-intelligence-artificielle-laureate-des-prix-nobel-de-physique-et-de-chimie_6359516_3232.html

4. https://time.com/7277608/demis-hassabis-interview-time100-2025/

5. https://www.reuters.com/technology/