
Google Teams Collaborate on Earth AI, Focusing on Three Core Data Types and Boosting Geospatial Reasoning Performance by 64%

Human exploration of the temporal and spatial patterns of the Earth has long been a core driving force behind environmental science and geography. From empirical summaries of natural phenomena to computer-assisted weather forecasting, human understanding of the Earth system has leapt from the qualitative to the quantitative. Since the beginning of the 21st century, technologies such as satellite remote sensing, ground sensor networks, and global demographic databases have matured, driving explosive growth in geospatial data. These data span the geophysical environment, human activities, disaster dynamics, and other dimensions, and have become a key foundation for regional assessment, resource allocation, and climate research. In this context, geospatial artificial intelligence (GeoAI) has emerged as an important bridge between "Earth data" and "scientific insight."

However, the surge in data also raises serious "complexity barriers." On the one hand, geospatial data is growing by the billions every day, spanning diverse types, widely varying resolutions, and long time ranges, while data remain sparse in some remote areas. On the other hand, traditional specialized models are often limited to a single task and struggle to integrate information from multiple sources. The result is low analytical efficiency and weak generalization, leaving these models unable to meet the demands of complex scenarios such as cross-regional disaster response and multi-factor public health forecasting. Although GeoAI has begun shifting toward general-purpose foundation models, existing solutions still lack multimodal coordination and broad accessibility.

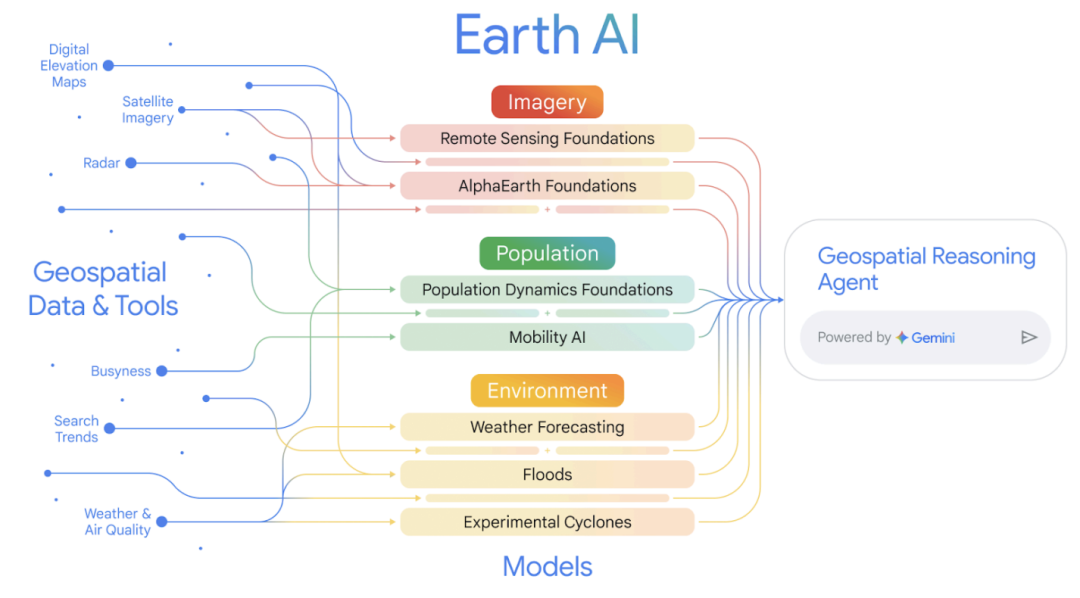

In response to these challenges, Google Research teamed up with Google X, Google Cloud, and other teams to propose Earth AI, a geospatial intelligent reasoning system. The system builds a family of interoperable GeoAI models, with specialized foundation models developed around three core data types: imagery, population, and environment, each tailored to the analytical needs of its domain. A Gemini-powered reasoning agent enables deep multi-model collaboration and multi-step joint reasoning. Natural language interaction significantly lowers the barrier to entry, allowing even non-expert users to conduct cross-disciplinary, real-time analysis and moving Earth system research from "data accumulation" to "actionable global insights."

The research, titled "Earth AI: Unlocking Geospatial Insights with Foundation Models and Cross-Modal Reasoning," has been released as a preprint on arXiv.

Research highlights:

* The remote sensing foundation models achieved state-of-the-art results on tasks such as open-vocabulary object detection and zero-shot cross-modal retrieval. The population dynamics model has been independently validated to improve real-world applications in retail and public health, and has been upgraded to support monthly time-series embeddings.

* The research integrates imagery, population, and environmental models into a more powerful multimodal prediction framework. Experiments show that this fusion approach significantly outperforms any single modality across multiple real-world classification and prediction tasks.

* The research implements agent-based complex geospatial reasoning: a Gemini-driven reasoning agent automatically decomposes complex geographic queries, dispatches multiple model tools, exposes a transparent reasoning chain, and synthesizes coherent conclusions.

Paper link:

https://doi.org/10.48550/arXiv.2510.18318


Earth AI Data System: Building the Foundation for Cross-Modal Geospatial Analysis

Earth AI is trained on three types of specialized geospatial datasets for Earth system analysis, which support in-depth interpretation of imagery, population, and the environment.

For imagery, the system integrates several large-scale remote sensing datasets. RS-Landmarks contains 18 million satellite and aerial images paired with high-quality text descriptions; RS-WebLI uses classifiers to select over 3 million open remote sensing images from the web, with the potential to scale to hundreds of billions; and RS-Global provides 30 million images covering the global landmass at resolutions from 0.1 to 10 meters, spanning 2003 to 2022. Together, these datasets underpin the development and optimization of remote sensing-specific models, including vision-language models, open-vocabulary object detection, few-shot learning, and pre-trained backbone models.

For population dynamics, the dataset integrates three types of information (the built environment, natural features, and human behavior) and uses graph neural networks to generate unified regional embeddings. Building on the original single-year data for the United States, the system has been extended in two directions. Spatially, coverage has expanded to 17 countries, including Australia, Brazil, and India, and search semantics have been aligned through knowledge graphs to improve pattern recognition across languages and countries; the corresponding static embeddings have been made available for epidemiological modeling research. Temporally, a sequence of monthly dynamic embeddings has been built from July 2023 to the present. The experimental labels cover a wide range of health, socioeconomic, and environmental indicators and include monthly county-level epidemiological visit data from the Yale PopHIVE platform; the European evaluation additionally uses NUTS Level 3 data from Eurostat, the European Statistical Office.

Environmental data integrates three types of sources: weather, climate, and natural disasters. These provide hourly weather forecasts up to 240 hours ahead and daily forecasts up to 10 days ahead based on multi-source observations and machine learning models; real-time flood monitoring and forecasting based on station measurements; and an experimental cyclone prediction system based on stochastic neural networks, which generates 50 possible tracks and can predict intensity, wind radii, and landfall locations up to 15 days in advance.
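A 50-member track ensemble lends itself to simple probabilistic summaries. The sketch below assumes each member track is a list of (lat, lon) positions at common lead times and estimates the fraction of members passing within a given radius of a site, plus a naive ensemble-mean track. The function names and the 100 km default radius are illustrative assumptions, not part of Google's cyclone system.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlat = p2 - p1
    dlon = math.radians(lon2 - lon1)
    a = math.sin(dlat / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def strike_probability(tracks, site, radius_km=100.0):
    """Fraction of ensemble members whose track passes within radius_km of site.

    tracks: list of member tracks, each a list of (lat, lon) positions.
    site: (lat, lon) of the location of interest.
    """
    if not tracks:
        raise ValueError("need at least one ensemble member")
    hits = sum(
        1
        for track in tracks
        if any(haversine_km(lat, lon, *site) <= radius_km for lat, lon in track)
    )
    return hits / len(tracks)


def ensemble_mean_track(tracks):
    """Average position per lead time (assumes equal-length tracks, no dateline crossing)."""
    n = len(tracks)
    return [
        (sum(t[i][0] for t in tracks) / n, sum(t[i][1] for t in tracks) / n)
        for i in range(len(tracks[0]))
    ]
```

Counting members is the simplest way to turn an ensemble into a probability; an operational system would also weight by intensity and wind radii, which this sketch ignores.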

These structured, standardized datasets not only support standalone analysis within each domain but also enable deep cross-modal collaboration through Gemini-driven reasoning agents. This makes end-to-end analysis possible, from parsing complex natural language queries to fusing multi-source information, and lays a solid data foundation for non-expert users to access complex geospatial analysis directly through natural language or map interfaces.

Earth AI: Towards a multimodal collaborative framework for intelligent analysis of Earth systems

Earth AI is a family of interoperable geospatial AI models built around the core goal of "multimodal collaborative understanding of the Earth system." Its components are coordinated by a customized geospatial reasoning agent, forming a general-purpose system based on foundation models (FMs) and large language model (LLM) reasoning that overcomes the limitations of single-purpose models and can generate actionable insights for a wide range of planetary issues. The core system is organized as "three types of foundation models + a model collaboration mechanism + agent orchestration."

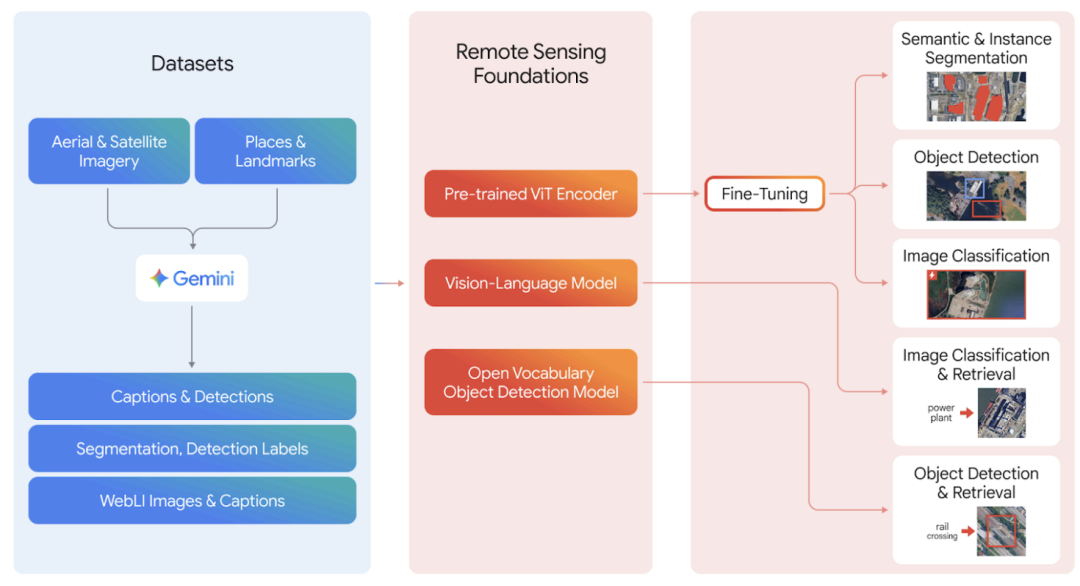

For imagery, Earth AI centers on remote sensing foundation models. Their core goal is to address two key challenges common to remote sensing data, namely scarce annotations and atypical image distributions, and to support efficient semantic understanding and object analysis in Earth observation scenarios. As shown in the figure below, the pipeline extracts professional aerial and satellite imagery from Google Maps, pairs it with geospatial metadata such as locations and landmarks, and feeds it into Gemini, which generates synthetic captions that precisely match the image content using customized prompts. It also incorporates WebLI remote sensing imagery with text annotations, as well as manually labeled remote sensing segmentation and object detection datasets. This multi-source data provides high-quality samples for pre-training three core models: a pre-trained ViT encoder, a vision-language model (VLM), and an open-vocabulary object detection (OVD) model.
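The captioning step amounts to conditioning a multimodal model on both the image and its metadata. A minimal sketch of how such a prompt might be assembled is shown below; the metadata fields (`lat`, `lon`, `resolution_m`, `landmarks`) and the prompt wording are hypothetical, since the article does not publish the researchers' actual prompts, and the call to Gemini itself is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class TileMetadata:
    """Hypothetical geospatial metadata attached to one aerial or satellite tile."""
    lat: float
    lon: float
    resolution_m: float
    landmarks: list = field(default_factory=list)


def build_caption_prompt(meta: TileMetadata) -> str:
    """Compose a text prompt that grounds caption generation in tile metadata."""
    lines = [
        "You are describing an overhead remote sensing image.",
        f"Location: {meta.lat:.4f}, {meta.lon:.4f}. Ground sample distance: {meta.resolution_m} m.",
    ]
    if meta.landmarks:
        lines.append("Nearby landmarks: " + ", ".join(meta.landmarks) + ".")
    # Guard against hallucination: metadata may name things outside the frame.
    lines.append(
        "Write one factual caption describing only objects visible in the image; "
        "do not mention landmarks that cannot be seen."
    )
    return "\n".join(lines)
```

For example, `build_caption_prompt(TileMetadata(48.8584, 2.2945, 0.3, ["Eiffel Tower"]))` produces a prompt that would be sent to a multimodal model together with the image pixels.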

The vision-language model is trained on a customized dataset and builds a unified semantic space by optimizing the alignment between image and text features. The open-vocabulary object detection model adopts an improved Transformer architecture in which image and text features are processed by two independent modules. The ViT encoder is first pre-trained with self-supervised learning on large image collections to extract general features, then further adapted through multi-task joint optimization to improve its performance on specific tasks. In application, the researchers used the VLM and OVD models directly for classification, detection, and retrieval tasks, and fine-tuned the ViT encoder to achieve state-of-the-art performance on specific downstream tasks.
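Once images and text share an embedding space, zero-shot classification and retrieval reduce to a nearest-neighbour search over cosine similarity. The sketch below assumes the embeddings have already been produced by the image and text encoders (toy 3-dimensional vectors stand in for them); it illustrates the general contrastive-VLM recipe rather than Earth AI's own inference code.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_classify(image_emb, label_embs):
    """Pick the label whose text embedding is most similar to the image embedding.

    image_emb: embedding from the vision encoder (list of floats).
    label_embs: dict mapping class name -> text embedding of a prompt such as
                "a satellite image of {class}".
    Returns (best_label, scores_by_label).
    """
    img = l2_normalize(image_emb)
    scores = {
        name: sum(a * b for a, b in zip(img, l2_normalize(emb)))
        for name, emb in label_embs.items()
    }
    best = max(scores, key=scores.get)
    return best, scores
```

Because the class list is just a set of text prompts, new categories can be added at inference time without retraining, which is what makes the approach "open vocabulary."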

For population analysis, Earth AI is built around the population dynamics foundation model. Following the principles of multi-source information fusion and privacy protection, it integrates data on the built environment, natural conditions, and human behavior and generates unified regional embeddings through graph neural networks.
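As a rough illustration of how a graph neural network turns per-region features into embeddings that also reflect neighbouring regions, here is one mean-aggregation message-passing layer in plain Python. The adjacency structure, feature layout, and weights are assumptions for illustration; the article does not specify the architecture of the population dynamics model.

```python
def message_passing_layer(features, neighbors, w_self, w_neigh):
    """One round of mean-aggregation message passing over a region graph.

    features: dict region_id -> feature vector (list of floats)
    neighbors: dict region_id -> list of adjacent region_ids
    w_self, w_neigh: weight matrices (lists of rows) mapping d_in -> d_out
    Returns dict region_id -> updated embedding after a ReLU.
    """
    def matvec(w, v):
        return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]

    out = {}
    for rid, h in features.items():
        nbrs = neighbors.get(rid, [])
        # Average the neighbours' features; isolated regions get a zero message.
        if nbrs:
            agg = [sum(features[n][k] for n in nbrs) / len(nbrs) for k in range(len(h))]
        else:
            agg = [0.0] * len(h)
        combined = [a + b for a, b in zip(matvec(w_self, h), matvec(w_neigh, agg))]
        out[rid] = [max(0.0, x) for x in combined]  # ReLU nonlinearity
    return out
```

Stacking several such layers lets information propagate across multiple hops of adjacent regions; in a trained model the weights would be learned rather than fixed.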

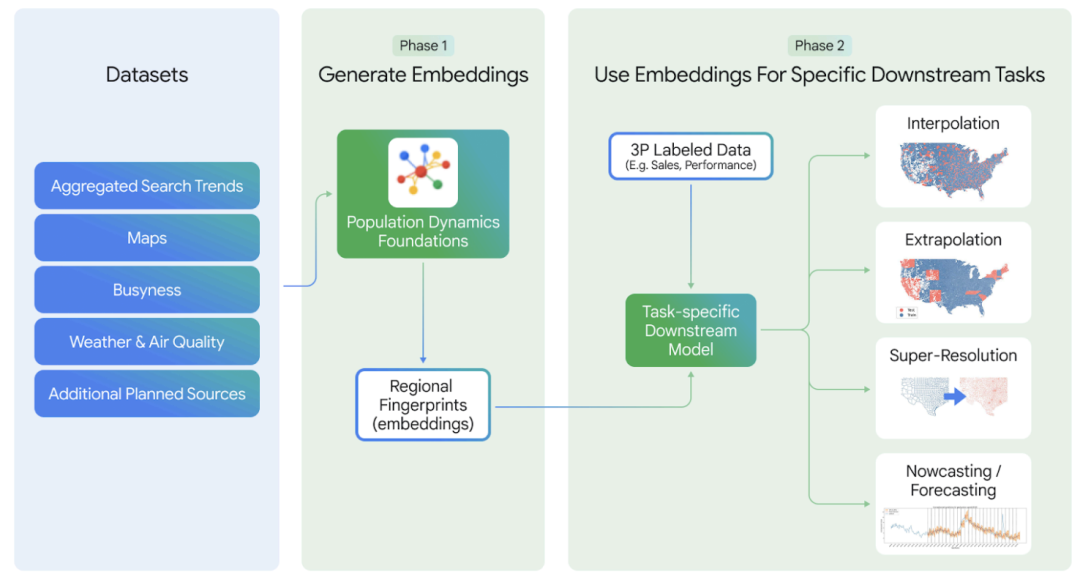

As shown in the figure below, to overcome the limitations of any single model, Earth AI uses "spatial alignment + representation integration" to achieve multi-model collaboration: the outputs of different models are mapped to a common geographic unit and their representations are fused. For example, imagery, terrain, and climate information from the AlphaEarth foundation model complements the human activity signals from the population model to build a comprehensive regional profile. The pipeline operates in two phases: the first is offline training, which encodes compact regional embeddings from geospatial data such as maps, search trends, and environmental conditions; the second uses the pre-trained embeddings for dynamic fine-tuning, supporting downstream tasks such as interpolation, extrapolation, super-resolution, and nowcasting.
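"Spatial alignment + representation integration" can be sketched as a join on a shared region key followed by concatenation. Concatenation is only one simple fusion choice, and the region IDs below are hypothetical; the article does not say which fusion operator Earth AI uses.

```python
def align_and_fuse(*sources):
    """Join per-region embeddings from several models and concatenate them.

    Each source is a dict region_id -> embedding (list of floats), already
    aggregated to the same geographic unit (e.g. a census tract ID).
    Regions missing from any source are dropped (inner join).
    """
    common = set(sources[0])
    for src in sources[1:]:
        common &= set(src)
    # Concatenate embeddings in the order the sources were passed.
    return {rid: [x for src in sources for x in src[rid]] for rid in sorted(common)}
```

For instance, fusing population embeddings `{"t1": [0.1, 0.2], "t2": [0.3, 0.4]}` with imagery-derived embeddings `{"t1": [1.0], "t3": [2.0]}` keeps only tract `t1`, whose fused vector `[0.1, 0.2, 1.0]` could then feed a downstream regressor.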

To solve complex, multi-step geospatial problems, Earth AI includes a Gemini-driven geospatial reasoning agent. Built on the Google Agent Development Kit (ADK), the agent combines general reasoning with four specialized capabilities: image analysis, demographics, environmental simulation, and spatiotemporal modeling. It also provides supporting tools for geospatial data processing, code generation, and Earth Engine data access.

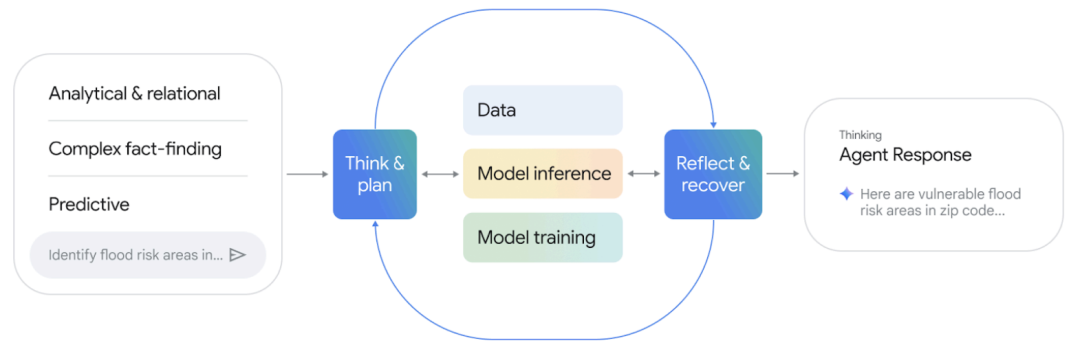

As shown below, the agent follows the core logic of "analyze the query, decompose the task, call tools, synthesize results." Through a closed-loop, iterative response process ("Think & Plan" → "Data/Model Inference/Model Training" → "Reflect & Recover"), it can handle three types of complex queries: complex fact-finding, analytical and relational, and predictive. Users interacting through natural language or a map interface can not only look up simple facts but also complete complex tasks such as tracing the distribution of critical facilities during historical events or anticipating areas that combine high risk with social vulnerability. This supports decision-making needs ranging from retrospective analysis to forward-looking planning.
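The plan, execute, and reflect cycle can be sketched as a simple control loop. This is a hypothetical, heavily simplified illustration and not the ADK API: the planner is an arbitrary function standing in for Gemini, the tool names are invented, and "Reflect & Recover" is reduced to retrying a failed tool call and recording the failure.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]


def run_agent(query: str,
              plan: Callable[[str], List[Tuple[str, str]]],
              tools: Dict[str, Tool],
              max_retries: int = 2) -> List[str]:
    """Plan -> execute -> reflect/recover loop over a registry of named tools.

    plan: turns the query into ordered (tool_name, tool_input) steps
          (an LLM plays this role in a real agent; here it is any function).
    tools: hypothetical tool registry, e.g. {"population": ..., "imagery": ...}.
    Returns intermediate results for a final synthesis step.
    """
    results = []
    for tool_name, tool_input in plan(query):
        attempt = 0
        while True:
            try:
                results.append(tools[tool_name](tool_input))
                break
            except Exception as err:  # reflect & recover: retry, then record failure
                attempt += 1
                if attempt > max_retries:
                    results.append(f"[{tool_name} failed: {err}]")
                    break
    return results
```

A real agent would feed failures back to the planner so it can revise the remaining steps, rather than simply retrying the same call.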

SOTA performance on multiple public benchmarks and a 64% improvement over Gemini 2.5 Pro

Earth AI's experiments are organized into three levels: single-model performance, multi-model collaboration, and agent reasoning. They systematically evaluate the imagery and population foundation models individually, as well as their combined performance in integrated applications and geospatial reasoning.

In the single-model evaluation, the imagery models performed strongly across multiple tasks. The vision-language models, based on the SigLIP2 and MaMMUT architectures, achieved state-of-the-art zero-shot classification and text retrieval results on multiple public benchmarks, with some metrics comparable to larger general-purpose conversational models. The open-vocabulary detection model achieved zero-shot mAPs of 31.83% and 29.39% on the DOTA and DIOR datasets, respectively; after few-shot learning with only 30 samples per class, mAP rose to over 53%, significantly outperforming existing methods. The pre-trained backbone achieved an average improvement of 14.93% over an ImageNet pre-training baseline across 13 downstream classification, detection, and segmentation tasks, and set new records on tasks such as FMOW classification and FLAIR segmentation.
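For reference, the SigLIP family that one of these vision-language models builds on is trained with a pairwise sigmoid loss rather than a softmax contrastive loss. In the original SigLIP formulation, for a batch $\mathcal{B}$ of L2-normalized image embeddings $\mathbf{x}_i$ and text embeddings $\mathbf{y}_j$, with learnable temperature $t$ and bias $b$, every image-text pair is scored as an independent binary match. Whether Earth AI's remote sensing VLM modifies this base objective (SigLIP 2 adds further losses on top of it) is not stated in the article.

```latex
\mathcal{L} = -\frac{1}{|\mathcal{B}|}\sum_{i=1}^{|\mathcal{B}|}\sum_{j=1}^{|\mathcal{B}|}
\log \sigma\!\left( z_{ij}\,\bigl(t\,\mathbf{x}_i \cdot \mathbf{y}_j + b\bigr) \right),
\qquad
z_{ij} =
\begin{cases}
1, & i = j \ \text{(matching pair)}\\
-1, & i \neq j \ \text{(non-matching pair)}
\end{cases}
```

Because each pair contributes its own sigmoid term, the loss does not require normalizing over the whole batch, which is part of why it scales well to large batches.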

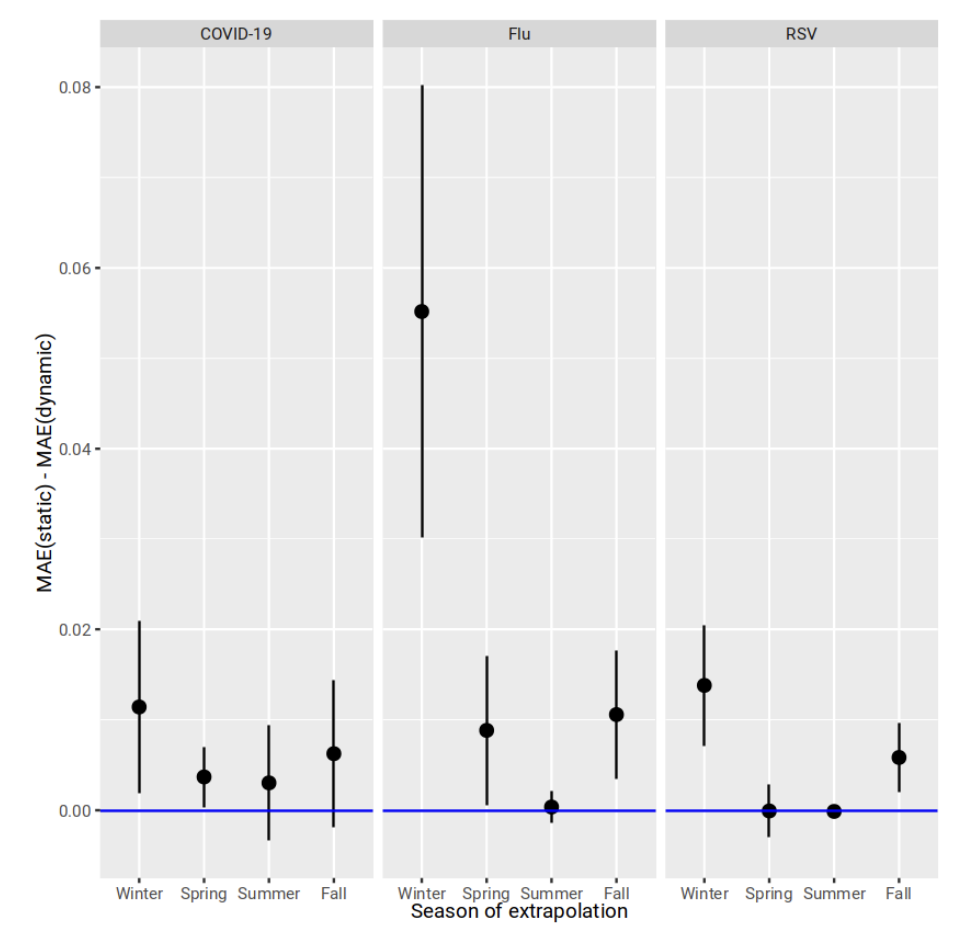

The population dynamics foundation model performs strongly on spatial interpolation and temporal prediction tasks. As shown in the figure below, its global embeddings maintain stable R² when predicting variables withheld for 20% of regions, and show good transferability in cross-country generalization tests. With the monthly dynamic embeddings built from July 2023 onward, the mean absolute error of extrapolated forecasts of COVID-19 and influenza emergency room visits was significantly lower than with static embeddings, with a particularly large advantage during the autumn and winter outbreak peaks. Third-party validation further confirmed the model's applicability and robustness in real-world scenarios.
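The spatial-interpolation setting (withhold a variable for 20% of regions, predict it from the remaining regions' embeddings, score with R²) can be sketched as follows. A k-nearest-neighbour regressor stands in for whatever downstream model the researchers fitted, which the article does not specify, and the `(embedding, target)` data layout is an assumption.

```python
import random


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def knn_predict(train, query_emb, k=3):
    """Average the targets of the k training regions with the closest embeddings."""
    nearest = sorted(
        train, key=lambda r: sum((a - b) ** 2 for a, b in zip(r[0], query_emb))
    )[:k]
    return sum(y for _, y in nearest) / len(nearest)


def holdout_eval(regions, test_frac=0.2, k=3, seed=0):
    """regions: list of (embedding, target). Hold out test_frac of them; return (R², MAE)."""
    rows = regions[:]
    random.Random(seed).shuffle(rows)
    n_test = max(1, int(len(rows) * test_frac))
    test, train = rows[:n_test], rows[n_test:]
    preds = [knn_predict(train, emb, k) for emb, _ in test]
    truth = [y for _, y in test]
    return r2_score(truth, preds), mean_absolute_error(truth, preds)
```

Note that `r2_score` is undefined when all held-out targets are equal (zero total variance); a production evaluation would guard against that case.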

In the multi-model collaboration experiments, combining the population dynamics model with the AlphaEarth foundation model significantly improved prediction accuracy. When predicting FEMA disaster risk scores for US census tracts, the fused model improved average R² by 11% compared with the individual models. When predicting 21 CDC health indicators, it outperformed the population model and the AlphaEarth model alone by 7% and 43%, respectively. The system also demonstrated the ability to combine cyclone forecasts with population models to predict hurricane property damage, and to combine time-series forecasting with weather models for cholera risk warning in the Democratic Republic of the Congo, reducing RMSE by 34% relative to the baseline model.
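Headline figures such as the 34% RMSE reduction are relative changes against a baseline. Assuming the conventional definitions (the article does not spell out how its percentages were computed), they can be reproduced as follows.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error between observations and predictions."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def relative_reduction(baseline_err, model_err):
    """Fractional error reduction: 0.34 means the model's error is 34% below the baseline's."""
    return (baseline_err - model_err) / baseline_err
```

Under this definition, a baseline RMSE of 1.0 and a model RMSE of 0.66 correspond to the reported 34% reduction.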

The geospatial reasoning agent was evaluated with a standardized question-and-answer set and crisis scenario tests. On the 100-question evaluation set, the agent achieved an overall score of 0.82, improvements of 64% over Gemini 2.5 Pro and 110% over Gemini 2.5 Flash. Its advantage was especially pronounced on analytical reasoning tasks. Across 10 crisis response scenarios, the agent, refined through multiple rounds of iteration, consistently outperformed the baseline system on Likert-scale ratings, demonstrating its effectiveness and reliability on complex, multi-step geospatial reasoning tasks.
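Assuming the 64% and 110% figures are relative improvements in the overall score (the article does not say whether they are relative or absolute), the implied baseline scores follow directly from the agent's 0.82. They are approximate because 0.82 is itself rounded.

```latex
s_{\text{base}} = \frac{s_{\text{agent}}}{1 + r},
\qquad
s_{\text{Pro}} \approx \frac{0.82}{1.64} = 0.50,
\qquad
s_{\text{Flash}} \approx \frac{0.82}{2.10} \approx 0.39
```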

Technological breakthroughs and application practices of geospatial intelligence

Building on the technical directions represented by Earth AI, academia and industry worldwide are pushing geospatial intelligence beyond algorithmic innovation toward systematic, scenario-based deployment, gradually building a multi-level, highly coordinated technology ecosystem.

At the research frontier, unified multimodal understanding has become a key area of breakthrough. A representative example is the EarthMind framework, jointly developed by the University of Trento (Italy), the Technical University of Munich (Germany), the Technical University of Berlin (Germany), and the INSAIT institute in Bulgaria, which builds a unified multi-granularity, multi-sensor understanding system for remote sensing scenarios.

Paper title: EarthMind: Towards Multi-Granular and Multi-Sensor Earth Observation with Large Multimodal Models

Paper link: https://doi.org/10.48550/arXiv.2506.01667

In addition, World Labs, founded by Stanford University professor Fei-Fei Li, recently announced on X a limited beta of its spatial intelligence model, Marble. The model focuses on 3D world generation, building persistent, freely explorable 3D scenes from a single image or text prompt.

In industry, companies are actively embedding geospatial intelligence into core business systems. NVIDIA and the UAE's G42 collaborated on the Earth-2 platform, which uses generative AI to build a high-precision weather forecasting system. By combining the FourCastNet global model with the CorrDiff downscaling architecture, it refines 2-kilometer national forecasts into 200-meter city-level forecasts, compressing traditional simulations that take hours into minutes and greatly improving early warning and response for extreme weather. IBM and NASA also jointly released an open-source geospatial AI foundation model. Trained on large-scale satellite data from NASA's Harmonized Landsat Sentinel-2 project with a multi-task joint optimization framework, it supports applications including climate change monitoring, deforestation tracking, and crop yield estimation. On the optimization side, it not only improved training efficiency by 15% but also achieved a 15% performance improvement using only half the annotated data.

From academic innovation to industrial practice, geospatial intelligence is being woven into human understanding of, and decision-making about, the Earth system with unprecedented depth and breadth. With continued breakthroughs in key technologies such as multimodal fusion, cross-scale modeling, and agent collaboration, analytical frameworks such as Earth AI are expected to play an even more central role in addressing planetary challenges such as climate adaptation, disaster prevention and control, and resource management, advancing science and social governance in tandem.
