
Kiss3DGen: A 3D Asset Generation Framework Based on an Image Diffusion Model

1. Tutorial Introduction

Kiss3DGen is an open-source 3D generation and reconstruction framework released by the EnVision-Research team in March 2025. It aims to efficiently repurpose pre-trained 2D image diffusion models for 3D content generation. It supports high-quality multi-view rendering, text-to-3D generation, image-to-3D conversion, and 3D mesh reconstruction, and integrates modules such as Flux, Multiview, Caption, Reconstruction, and LLM. It also introduces the 3D Bundle Image, which combines normal maps with texture information to achieve accurate geometric reconstruction. The framework can additionally be paired with tools such as ControlNet to enhance and edit 3D models. It is easy to deploy and is useful for both academic research and practical applications. The related paper, "Kiss3DGen: Repurposing Image Diffusion Models for 3D Asset Generation", has been accepted to CVPR 2025.
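The paper describes the 3D Bundle Image as a single tiled image composed of multi-view images and their corresponding normal maps. As an illustration only, the sketch below computes crop boxes for such a grid. The two-row layout (RGB views on top, normal maps below), the default of four views, and the function name `bundle_tile_boxes` are assumptions, not the project's confirmed format; check the Kiss3DGen repository before relying on them.

```python
from typing import Dict, List, Tuple

# (left, upper, right, lower), the box convention used by Pillow's Image.crop
Box = Tuple[int, int, int, int]


def bundle_tile_boxes(width: int, height: int, num_views: int = 4) -> Dict[str, List[Box]]:
    """Split a bundle image into per-view crop boxes.

    ASSUMPTION (hypothetical layout): the image is a 2 x num_views grid,
    row 0 holds the RGB views and row 1 holds the matching normal maps.
    """
    rows = 2  # hypothetical: one RGB row plus one normal-map row
    if width % num_views or height % rows:
        raise ValueError("image size must divide evenly into the grid")
    tile_w, tile_h = width // num_views, height // rows
    grid = [
        [(c * tile_w, r * tile_h, (c + 1) * tile_w, (r + 1) * tile_h) for c in range(num_views)]
        for r in range(rows)
    ]
    return {"rgb": grid[0], "normal": grid[1]}
```

For a hypothetical 2048 × 1024 bundle, `bundle_tile_boxes(2048, 1024)["normal"][0]` is `(0, 512, 512, 1024)`, and each box can be passed to Pillow's `Image.crop(box)` to extract one tile.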

This tutorial runs on two RTX A6000 GPUs. The project accepts prompts in English only.

2. Project Examples

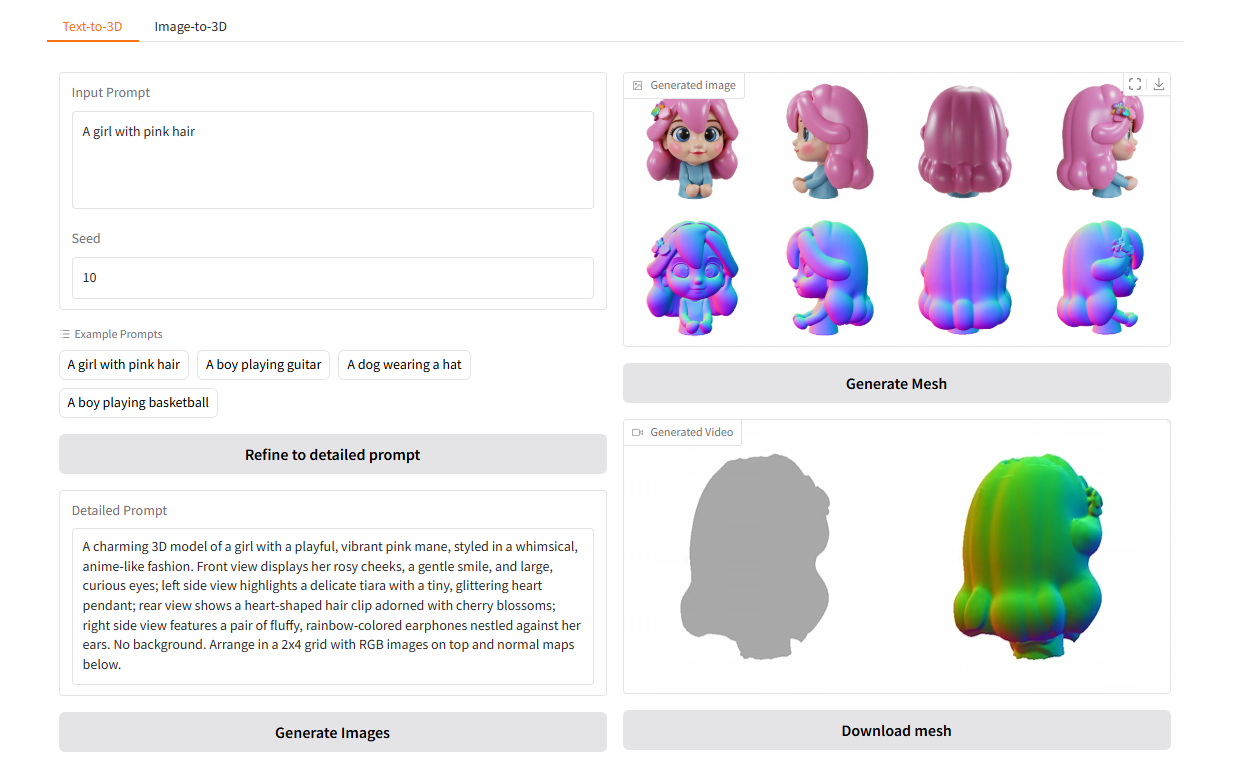

text-to-3D

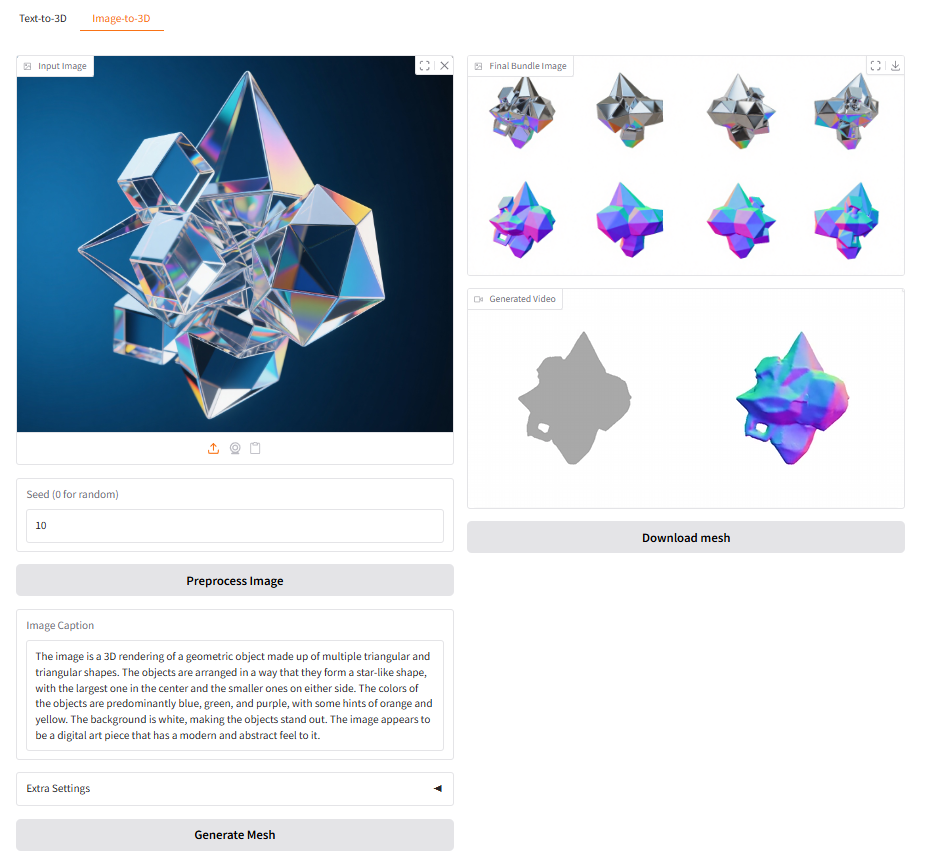

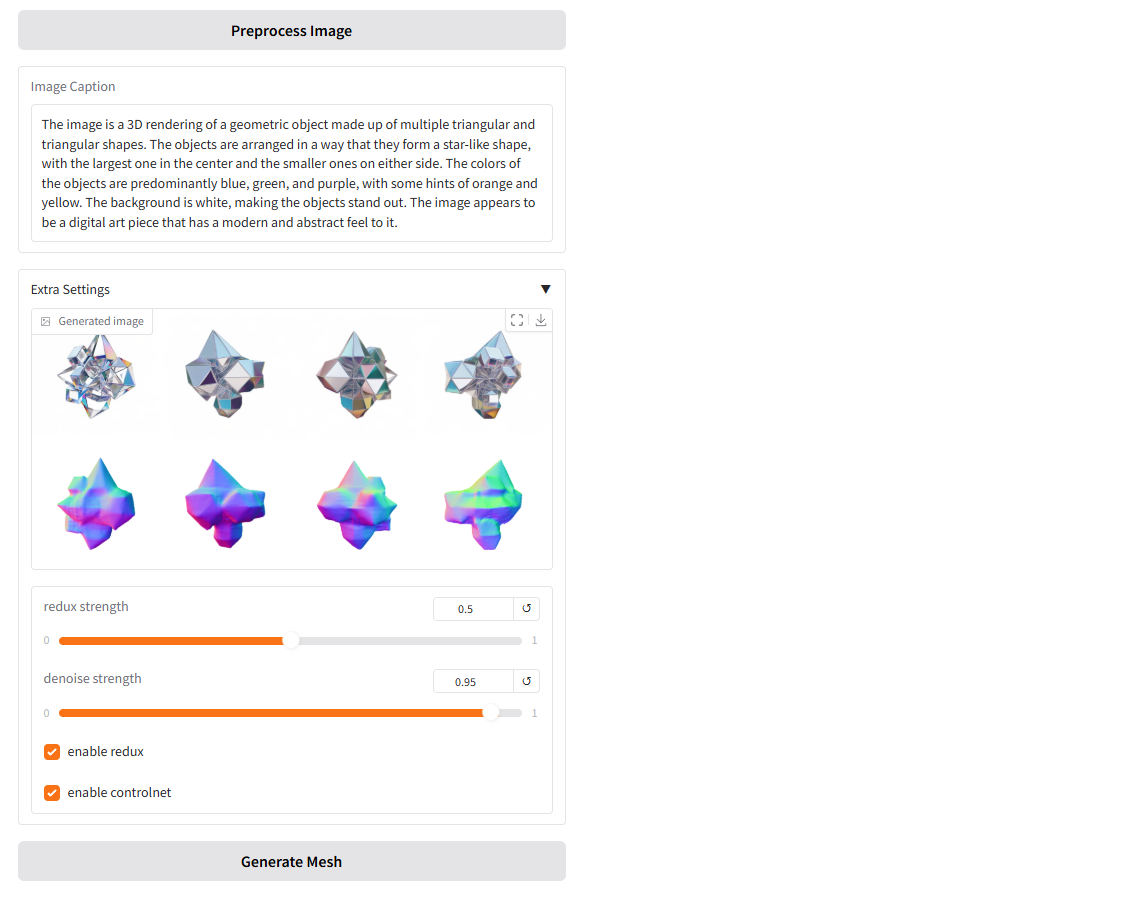

image-to-3D

3. Operation Steps

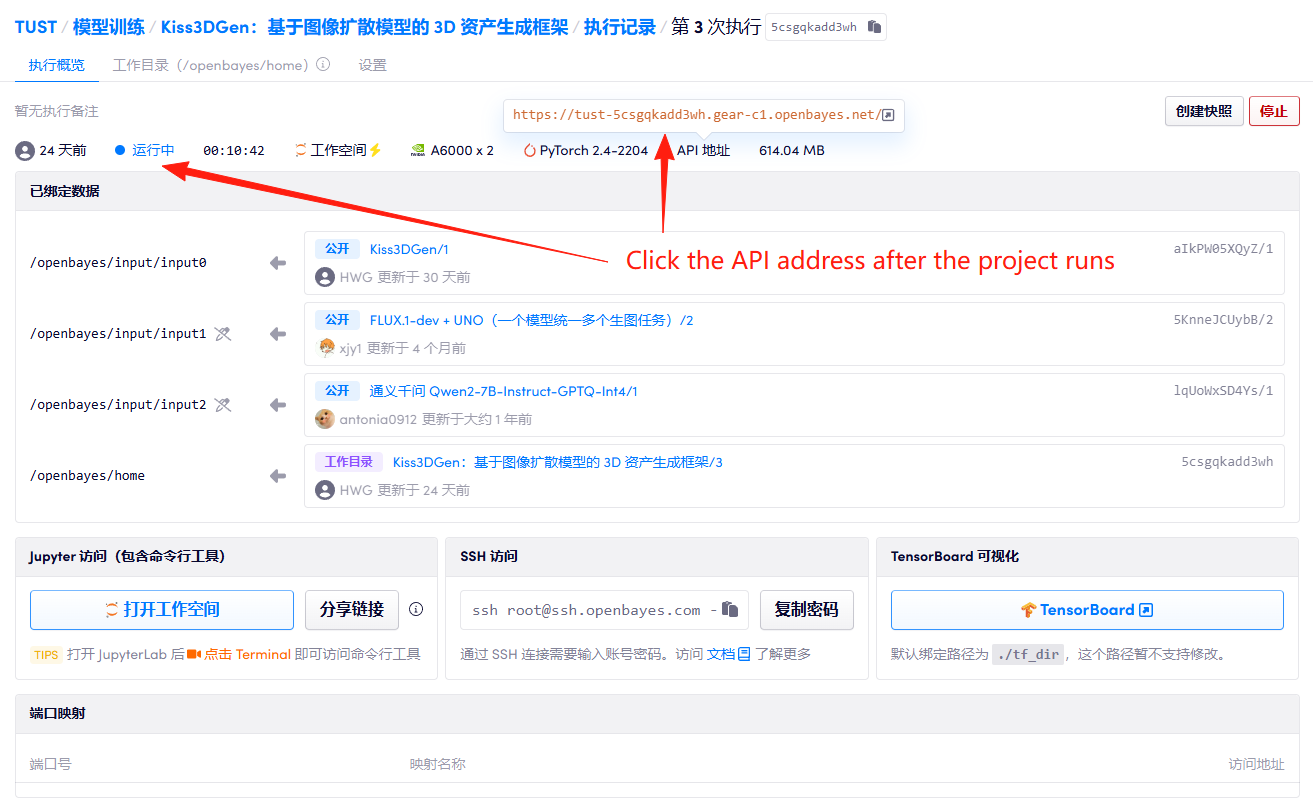

1. After starting the container, click the API address to open the web interface.

2. Usage steps

If the page shows "Bad Gateway", the model is still initializing. Because the model is large, wait about 5–7 minutes and then refresh the page.
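Instead of refreshing by hand, you can poll the address until the gateway stops returning an error. The following is a minimal sketch using only the Python standard library; the URL you pass is your container's API address, and the timeout and polling interval are arbitrary choices, not project settings.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str) -> int:
    """Return the HTTP status code of a GET request to url."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 502 Bad Gateway while the model is still loading


def wait_until_ready(
    url: str,
    timeout_s: float = 600.0,  # arbitrary: about 10 minutes, above the 5-7 minute load time
    interval_s: float = 15.0,  # arbitrary polling interval
    get_status: Callable[[str], int] = http_status,
) -> bool:
    """Poll url until it answers with a non-5xx status or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            status = get_status(url)
        except OSError:
            status = 0  # connection refused, DNS failure or socket timeout: not ready yet
        if 200 <= status < 500:
            return True
        if time.monotonic() + interval_s > deadline:
            return False
        time.sleep(interval_s)
```

Once `wait_until_ready("http://<your-api-address>/")` returns `True`, the web interface should load normally in the browser.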

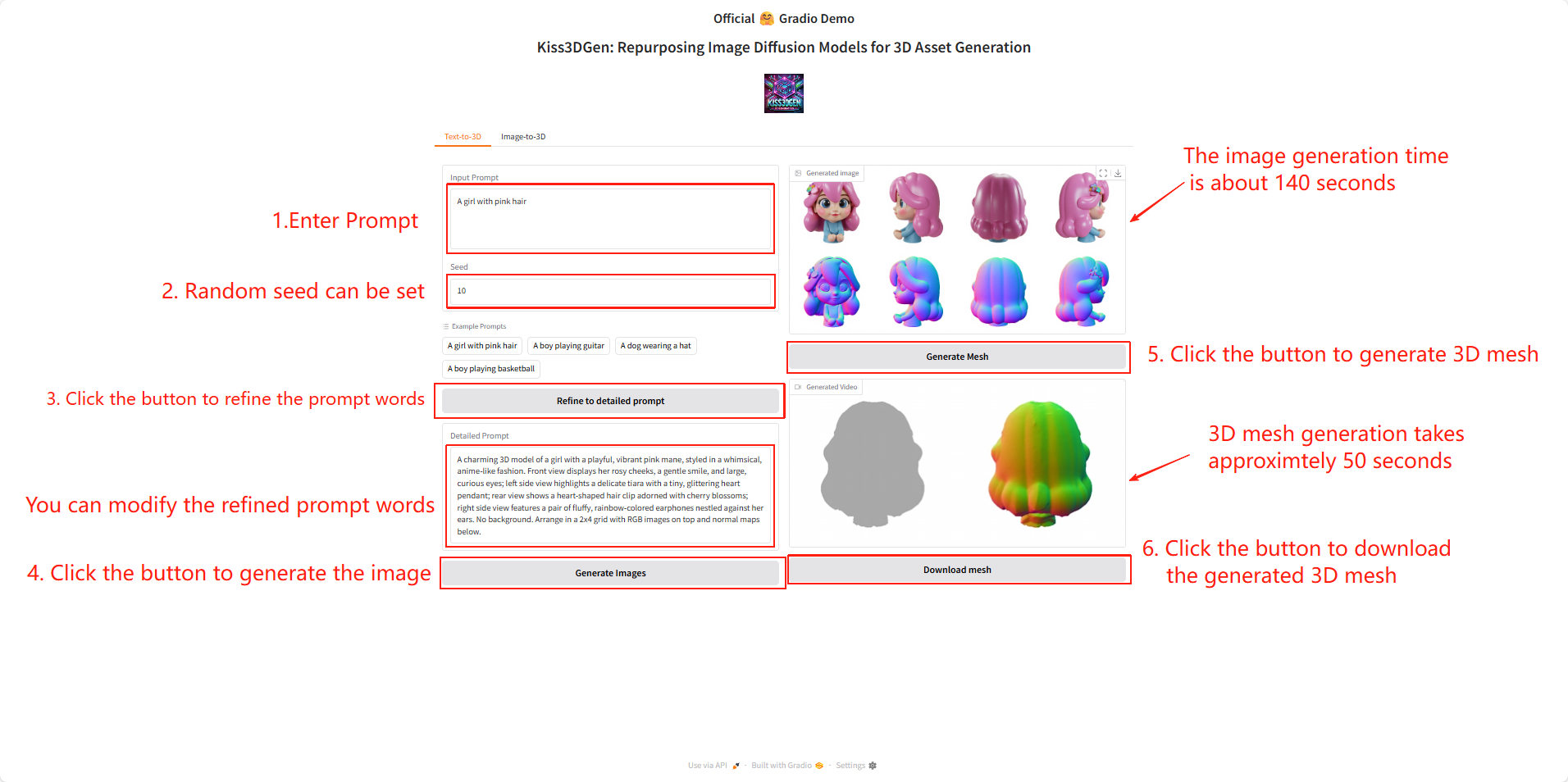

text-to-3D

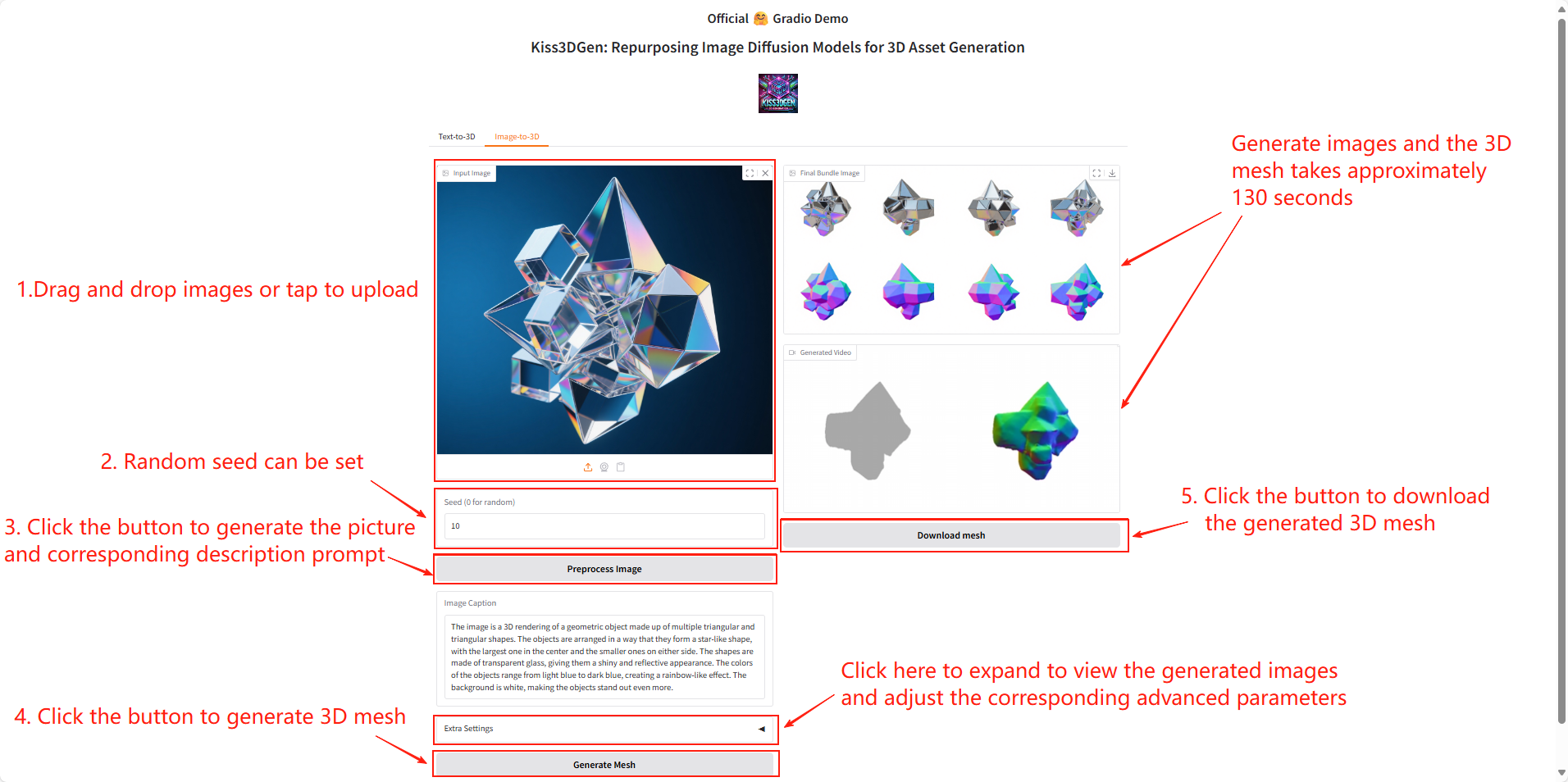

image-to-3D

Note: If you encounter an error, try a smaller input image; we recommend images under 3 MB.
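A quick pre-upload check can save a failed run. This is a minimal sketch that assumes "3 MB" means 3 × 1024 × 1024 bytes (the tutorial does not state which convention it uses). The suggested scale factor is only a rough heuristic: compressed file size grows roughly with pixel count, so scaling each side by sqrt(target / size) is a starting point, not a guarantee.

```python
import math
import os

MAX_UPLOAD_BYTES = 3 * 1024 * 1024  # assumption: "3 MB" read as 3 MiB


def upload_size_ok(path: str, limit: int = MAX_UPLOAD_BYTES) -> bool:
    """Return True if the file at path is strictly smaller than limit bytes."""
    return os.path.getsize(path) < limit


def suggested_scale(size_bytes: int, limit: int = MAX_UPLOAD_BYTES, margin: float = 0.9) -> float:
    """Heuristic per-side scale factor (<= 1.0) to bring an image under limit.

    Assumes file size scales roughly with width * height, so each side is
    scaled by sqrt(margin * limit / size). Re-check the file after resizing.
    """
    if size_bytes < limit:
        return 1.0
    return math.sqrt(limit * margin / size_bytes)
```

If a file is too large, an image library such as Pillow can then resize it, for example with `img.resize((round(w * s), round(h * s)))` where `s` is the suggested scale.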

Parameter Description

- Redux strength: Controls how strongly the generated image is redrawn (refined). Higher values modify the image more and change more of its details; lower values preserve more of the originally generated details and structure. Range: 0–1.

- Denoising strength: Controls how much denoising is applied during generation. Higher values (closer to 1) follow the input prompt more closely but allow greater variation; lower values keep the result closer to the original image. Range: 0–1.

- Enable Redux: When enabled, the image is automatically redrawn after generation using the Redux strength setting, to improve its quality and detail.

- Enable ControlNet: When enabled, ControlNet is used during generation to impose structural or feature constraints (for example reference sketches, edge maps, or depth maps), so that the output meets specific structural requirements while keeping its style.
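To make the strength settings more concrete, here is a sketch of how a denoising (img2img) strength is commonly interpreted in diffusion pipelines such as Hugging Face diffusers: the input is noised part-way along the schedule, and only roughly the last `strength` fraction of the denoising steps is run. Kiss3DGen's own implementation may differ. The `GenerationSettings` class and `denoising_steps_run` function are hypothetical and only mirror the options listed above and their 0–1 ranges.

```python
from dataclasses import dataclass


@dataclass
class GenerationSettings:
    """Hypothetical container mirroring the web UI options described above."""
    redux_strength: float
    denoising_strength: float
    enable_redux: bool = False       # placeholder default, not the app's
    enable_controlnet: bool = False  # placeholder default, not the app's

    def __post_init__(self) -> None:
        # Both strengths are documented as lying in [0, 1].
        for name in ("redux_strength", "denoising_strength"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")


def denoising_steps_run(num_inference_steps: int, strength: float) -> int:
    """Number of denoising steps executed under the common img2img convention.

    Strength 1.0 runs every step of the schedule; lower values skip the early,
    noisiest steps and run proportionally fewer, so the output stays closer
    to the input image.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    return min(int(num_inference_steps * strength), num_inference_steps)
```

Under this convention, `denoising_steps_run(30, 0.5)` runs 15 of 30 steps, which is why lower denoising strengths keep the result closer to the original image.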

4. Discussion

🖌️ If you come across a high-quality project, please leave us a message to recommend it! We have also set up a tutorial exchange group: scan the QR code and add the note [SD Tutorial] to join, discuss technical issues, and share your results.

Citation Information

The citation information for this project is as follows:

@article{lin2025kiss3dgen,
  title={Kiss3DGen: Repurposing Image Diffusion Models for 3D Asset Generation},
  author={Lin, Jiantao and Yang, Xin and Chen, Meixi and Xu, Yingjie and Yan, Dongyu and Wu, Leyi and Xu, Xinli and Xu, Lie and Zhang, Shunsi and Chen, Ying-Cong},
  journal={arXiv preprint arXiv:2503.01370},
  year={2025}
}