Command Palette

Search for a command to run...

هل يمكن لـ LLMs تنظيف فوضى البيانات؟ مراجعة للتحضير الجاهز للتطبيق مع LLMs

هل يمكن لـ LLMs تنظيف فوضى البيانات؟ مراجعة للتحضير الجاهز للتطبيق مع LLMs

الملخص

تهدف عملية تحضير البيانات إلى تنقية البيانات الخام، وتكشف العلاقات المتقاطعة بين المجموعات المختلفة، واستخلاص رؤى قيّمة منها، وهو أمر ضروري لتطبيقات مبنية على البيانات في مجالات واسعة. وتحفّز هذه العملية (أ) الطلب المتزايد على بيانات جاهزة للاستخدام (مثل البيانات المستخدمة في التحليل، والعرض المرئي، واتخاذ القرارات)، (ب) التطور المستمر في تقنيات النماذج اللغوية الكبيرة (LLMs)، و(ج) ظهور هياكل بنية تحتية تُسهّل بناء الوكلاء المرنين (مثل استخدام Databricks Unity Catalog)، مما يجعل الأساليب المدعومة بالـ LLMs تُعدّ تحوّلاً سريعًا وربما المعيار السائد في مجال تحضير البيانات. من خلال دراسة مئات من الدراسات الحديثة، يقدم هذا البحث مراجعة منهجية لهذا المجال المتطور، مع التركيز على استخدام تقنيات النماذج اللغوية الكبيرة في تحضير البيانات لأغراض متعددة في المهام اللاحقة. أولاً، نُعرّف التحوّل الأساسي في النمط، من خطوط أنابيب مبنية على القواعد ومخصصة للنماذج، إلى خطوط أنابيب مُوجهة بالأسئلة (prompt-driven) وواعية بالسياق وتعمل كوكالات (agentic). ثانيًا، نقدّم تصنيفًا مبنيًا على المهام، يُقسّم المجال إلى ثلاث مهام رئيسية: تنظيف البيانات (مثل التوحيد، معالجة الأخطاء، استكمال البيانات)، دمج البيانات (مثل مطابقة الكيانات، مطابقة الهياكل)، وتوسيع البيانات (مثل التصنيف، التحليل التوصيفي). ولكل مهمة، نستعرض الأساليب الممثلة، ونُبرز مزاياها (مثل التعميم المحسّن، الفهم الدلالي) وقيودها (مثل التكلفة الباهظة لتوسيع نطاق استخدام النماذج اللغوية الكبيرة، التصوّرات الخاطئة المستمرة حتى في الوكلاء المتقدمة، عدم توافق الأساليب المتقدمة مع أدوات التقييم الضعيفة). علاوة على ذلك، نحلّل المجموعات البيانات الشائعة واستخدام مقاييس التقييم (الجزء التجريبي). وأخيرًا، نناقش التحديات البحثية المفتوحة، ونُقدّم خارطة طريق مستقبلية تُركّز على أنظمة بيانات مدعومة بالـ LLM قابلة للتوسع، وتصميمات منهجية لضمان موثوقية سير العمل الوكيلي، وبروتوكولات تقييم قوية ومتينة.

One-sentence Summary

Wei Zhou et al. survey LLM-enhanced data preparation, proposing a task-centric taxonomy for cleaning, integration, and enrichment that shifts from rule-based to agentic, context-aware workflows, highlighting innovations like retrieval-augmented generation and hybrid LLM-ML systems while addressing scalability, hallucination, and evaluation gaps across enterprise analytics and ML pipelines.

Key Contributions

- LLMs enable a paradigm shift from manual, rule-based data preparation to instruction-driven, agentic workflows that reduce human effort by allowing natural language prompts and automated pipeline orchestration for tasks like cleaning and integration.

- The paper introduces a task-centric taxonomy covering data cleaning, integration, and enrichment, highlighting how LLMs improve semantic reasoning—resolving ambiguities like synonyms or domain terms—where traditional methods fail due to syntactic or statistical limitations.

- Through analysis of recent literature and empirical benchmarks, the survey identifies key challenges including LLM hallucinations, scalability costs, and weak evaluation protocols, while calling for scalable systems, reliable agent designs, and robust evaluation frameworks.

Introduction

The authors leverage large language models to address the growing need for application-ready data in analytics, decision-making, and real-time systems. Traditional data preparation methods suffer from heavy reliance on manual rules, limited semantic understanding, poor generalization across domains, and high labeling costs — all of which hinder scalability and adaptability. In response, the authors present a comprehensive survey that organizes LLM-enhanced techniques around three core tasks: cleaning, integration, and enrichment. They introduce a task-centric taxonomy, highlight how LLMs enable prompt-driven and agentic workflows, and identify key limitations including hallucinations, high inference costs, and weak evaluation protocols — while outlining a roadmap toward scalable, reliable, and cross-modal data preparation systems.

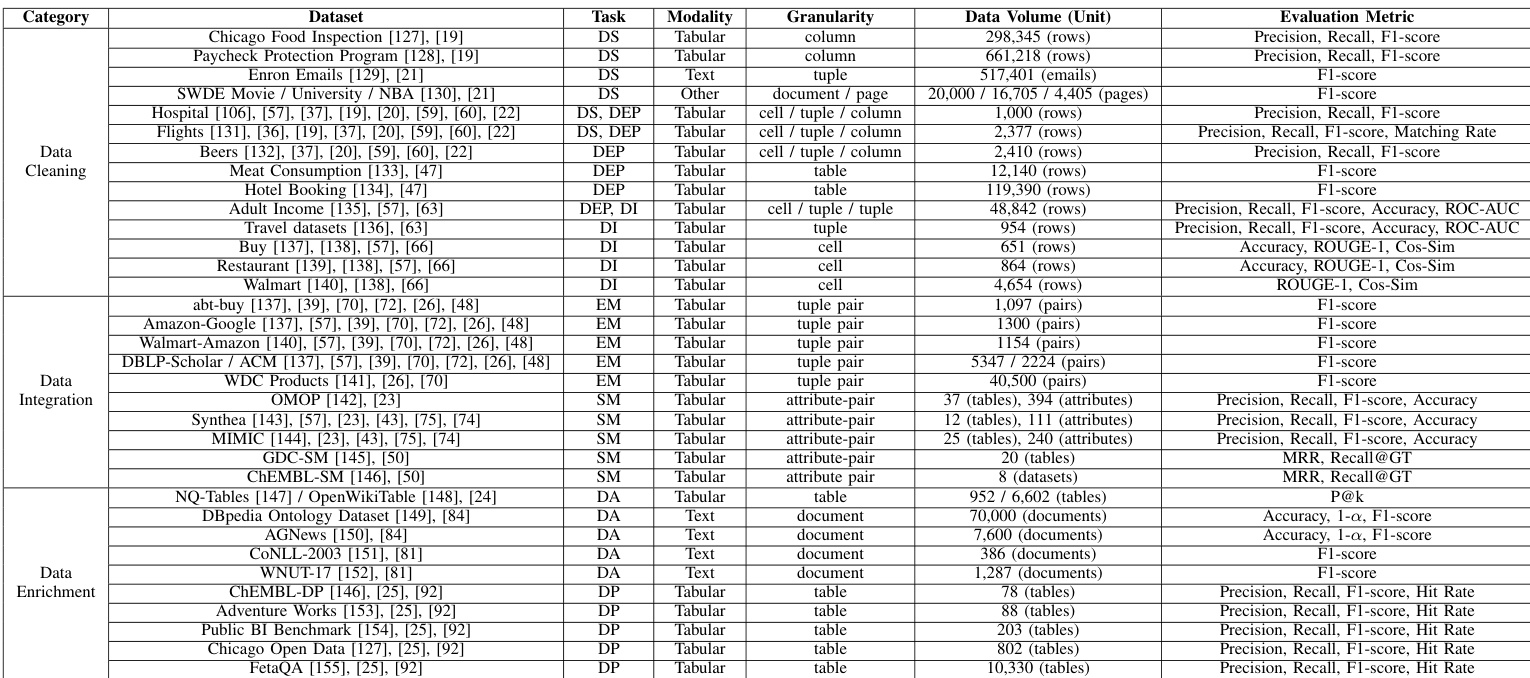

Dataset

The authors use a structured dataset taxonomy to evaluate LLM-enhanced data preparation methods, grouping benchmarks by processing granularity: record-level, schema-level, and object-level.

-

Record-Level Datasets: Focus on individual tuples, cells, or tuple pairs. Used for cleaning, error detection, imputation, and entity matching. Examples include:

- Tuple-level: Adult Income, Hospital, Beers, Flights, Enron Emails

- Column-level: Paycheck Protection Program, Chicago Food Inspection

- Cell-level: Buy, Restaurant, Walmart

- Tuple-pair: abt-buy, Amazon–Google, Walmart–Amazon, DBLP–Scholar, DBLP–ACM, WDC Products

-

Schema-Level Datasets: Align columns or attributes across heterogeneous schemas. Used for schema matching in domains like healthcare and biomedicine. Examples:

- OMOP, Synthea, MIMIC (clinical)

- GDC-SM, ChEMBL-SM (scientific/biomedical)

-

Object-Level Datasets: Treat entire tables or documents as units. Used for profiling and annotation requiring global context. Examples:

- Table-level: Public BI, Adventure Works, ChEMBL-DP, Chicago Open Data, NQ-Tables, FetaQA

- Document-level: AG-News, DBpedia, CoNLL-2003, WNUT-17

The authors do not train models on these datasets directly but use them to benchmark LLM-enhanced methods across cleaning, integration, and enrichment tasks. No specific cropping or metadata construction is described beyond the datasets’ inherent structure and evaluation metrics. Processing involves applying LLMs to standardize formats, detect and repair errors, impute missing values, match entities and schemas, annotate semantics, and profile metadata — all aimed at improving downstream analytical and ML tasks.

Method

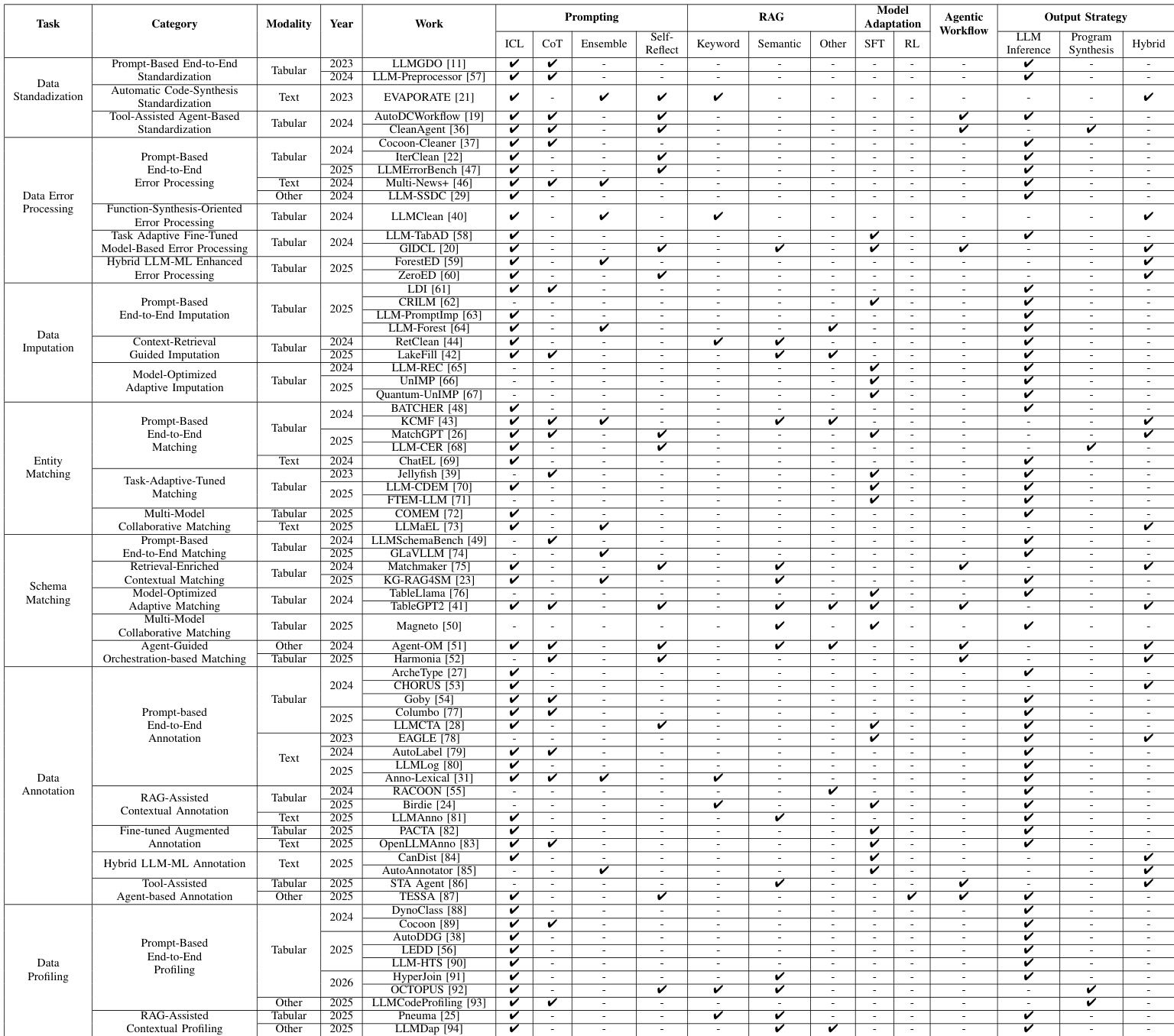

The authors present a comprehensive framework for leveraging large language models (LLMs) in data preparation, structured around three core domains: data cleaning, data integration, and data enrichment. The overall architecture, as illustrated in the framework diagram, positions these domains as foundational processes that transform heterogeneous and raw data into structured, trustworthy, and enriched forms suitable for downstream applications such as visual analytics, enterprise business intelligence, model training, fraud monitoring, and data marketplaces. The framework highlights that data cleaning addresses issues like inconsistent standards, errors, and incompleteness, while data integration resolves semantic ambiguities and schema conflicts, and data enrichment enhances datasets with metadata and annotations. These processes are interconnected, with outputs from one domain often serving as inputs to another, forming a cohesive pipeline for data preparation.

The data cleaning module, as shown in the framework diagram, encompasses three primary tasks: data standardization, error processing, and imputation. For data standardization, the authors describe a prompt-based end-to-end approach where structured prompts guide the LLM to transform heterogeneous data into a unified format. This includes instruction-guided prompting, which uses manually crafted instructions and in-context examples, and reasoning-enhanced batch processing, which employs step-wise reasoning and batch-wise prompting to improve robustness and efficiency. An alternative paradigm is tool-assisted agent-based standardization, where LLM agents coordinate and execute standardization pipelines by generating and executing API calls or code, as depicted in the example of CleanAgent. For data error processing, the framework outlines a two-stage process of detection and correction. This is achieved through prompt-based end-to-end processing, which uses structured prompts to guide the LLM through iterative workflows, and task-adaptive fine-tuning, where LLMs are fine-tuned on synthetic or context-enriched datasets to learn specific error patterns. A hybrid approach integrates LLMs with machine learning models, using the LLM to generate pseudo-labels for training a lightweight ML classifier or to derive interpretable decision structures that guide ML-based error detection.

The data integration module focuses on entity and schema matching. For entity matching, the framework describes a prompt-based end-to-end approach that uses structured prompts to guide LLMs in determining if two records refer to the same real-world entity. This includes guidance-driven in-context matching, which uses expert-defined rules and multi-step pipelines, and batch-clustering matching, which processes multiple entity pairs simultaneously to enhance efficiency. Task-adaptive-tuned matching involves fine-tuning LLMs using reasoning traces distilled from larger models or by improving training data quality. A multi-model collaborative approach coordinates multiple models, such as a lightweight ranker and a stronger LLM, to achieve scalable and consistent matching. For schema matching, the framework presents a prompt-based end-to-end method that uses structured prompts to identify correspondences between column names across different schemas. This is enhanced by retrieval-enriched contextual matching, which augments LLM inputs with context from external retrieval components like knowledge graphs, and model-optimized adaptive matching, which uses modality-aware fine-tuning and specialized architectural components like table encoders. An agent-guided orchestration approach uses LLM agents to manage and coordinate the entire schema matching pipeline, either through role-based task partitioning or tool-planning mechanisms.

The data enrichment module addresses data annotation and profiling. For data annotation, the framework describes a prompt-based end-to-end approach that uses structured prompts to guide LLMs in assigning semantic or structural labels to data instances. This includes instruction-guided annotation and reasoning-enhanced iterative annotation, which uses step-by-step reasoning and self-assessment. A retrieval-assisted contextual annotation approach enriches prompts by retrieving relevant context from semantically similar examples or external knowledge graphs. Fine-tuned augmented annotation improves performance in specialized domains by fine-tuning LLMs on task-specific datasets. A hybrid LLM-ML annotation approach combines LLMs with machine learning models, using the LLM to generate candidate annotations that are then distilled and filtered by a smaller model. Tool-assisted agent-based annotation uses LLM agents augmented with specialized tools to handle complex annotation tasks. For data profiling, the framework outlines a prompt-based end-to-end approach that uses carefully designed prompts to guide LLMs in generating dataset descriptions, schema summaries, and hierarchical organization. This includes instruction and constraint-based profiling and example and reasoning-enhanced profiling, which combines few-shot examples with Chain-of-Thought reasoning. A retrieval-assisted contextual profiling approach combines multiple retrieval techniques with LLM reasoning to improve profiling accuracy, especially when metadata is sparse.

Experiment

- Evaluated data preparation methods across four dimensions: correctness, robustness, ranking quality, and semantic consistency.

- Correctness metrics include Accuracy, Precision, F1-score (for reliability), and Recall, Matching Rate (for coverage), validated on tasks like entity matching and error detection.

- Robustness assessed via ROC and AUC, measuring consistent performance across varying data distributions in error processing tasks.

- Ranking quality measured by P@k and MRR for retrieval utility, and Recall@GT, 1−α, Hit Rate for enrichment completeness in annotation and matching tasks.

- Semantic preservation evaluated using ROUGE (lexical overlap) and Cosine Similarity (embedding alignment), ensuring output consistency with reference content in standardization and profiling tasks.

The authors use a comprehensive evaluation framework to assess data preparation methods across multiple dimensions, including correctness, robustness, ranking quality, and semantic consistency, with metrics such as precision, recall, and F1-score for correctness, ROC and AUC for robustness, P@k and MRR for ranking quality, and ROUGE and cosine similarity for semantic preservation. Results show that while many methods achieve high performance in specific areas like correctness or ranking quality, there is no single approach that consistently outperforms others across all dimensions, highlighting the trade-offs between different evaluation criteria.

The authors use a diverse set of datasets across data cleaning, integration, and enrichment tasks to evaluate preparation methods, with metrics selected based on correctness, robustness, ranking quality, and semantic consistency. Results show that evaluation metrics vary significantly by task and data type, with precision, recall, and F1-score being most common, while specialized metrics like ROC-AUC, MRR, and ROUGE-1 are used for specific scenarios such as error detection and text-based enrichment.