Watching, Reasoning, and Searching: A Video Deep Research Benchmark on the Open Web for Agentic Video Reasoning

Abstract

In real-world video question answering, videos often provide only limited visual cues, while verifiable answers are distributed across the open web. Models therefore need to jointly perform cross-frame cue extraction, iterative retrieval, and verification based on multi-hop reasoning. To bridge this gap, we construct the first video deep research benchmark, VideoDR. VideoDR focuses on video-conditioned open-domain question answering and requires cross-frame visual anchor extraction, interactive web retrieval, and multi-hop reasoning over joint video-web evidence. Through rigorous human annotation and strict quality control, we obtain high-quality video deep research samples spanning six semantic domains. We evaluate several closed-source and open-source multimodal large language models under both the Workflow and Agentic paradigms. The results show that Agentic is not consistently better than Workflow: its gains depend on a model's ability to maintain the initial visual anchors over long retrieval chains. Further analysis indicates that goal drift and long-horizon consistency are the core bottlenecks. Overall, VideoDR provides a systematic benchmark for studying video agents in open-web settings and reveals the key challenges in developing next-generation video deep research agents.

One-sentence Summary

The authors, from LZU, HKUST(GZ), UBC, FDU, PKU, USC, NUS, UCAS, HKUST, and QuantaAlpha, propose VideoDR, the first benchmark for video-conditioned open-domain question answering requiring cross-frame visual anchor extraction, interactive web retrieval, and multi-hop reasoning; it reveals that agentic models underperform workflows when failing to maintain long-horizon consistency, highlighting goal drift and evidence coherence as key challenges for next-generation video agents.

Key Contributions

- The paper introduces VideoDR, the first benchmark for video deep research, which formalizes open-domain video question answering that requires cross-frame visual anchor extraction, interactive web retrieval, and multi-hop reasoning over combined video–web evidence, addressing the gap between closed-context video understanding and real-world open-web fact verification.

- Through rigorous human annotation and quality control across six semantic domains, VideoDR ensures that answers depend on both dynamic visual cues from the video and verifiable evidence from the open web, making it a systematic testbed for evaluating multimodal agents in realistic research scenarios.

- Evaluation of leading multimodal large language models under Workflow and Agentic paradigms reveals that Agentic approaches are not consistently superior; performance hinges on maintaining initial video anchors over long retrieval chains, with goal drift and long-horizon consistency identified as the primary bottlenecks.

Introduction

The authors address a critical gap in multimodal AI evaluation by introducing VideoDR, the first benchmark for video deep research in open-web settings. In real-world scenarios, video content often provides only partial visual clues, while definitive answers reside in dynamic, external web sources—requiring models to jointly perform cross-frame visual anchoring, interactive web retrieval, and multi-hop reasoning over video-web evidence. Prior work falls short in this space: video benchmarks typically assume closed-evidence settings, while existing deep research benchmarks focus on text-based queries and treat visual input as secondary. The authors’ main contribution is the design of VideoDR, a rigorously annotated benchmark that enforces dependency on multi-frame video cues for evidence gathering, ensuring that answers cannot be derived from video or web alone. They evaluate both Workflow and Agentic paradigms across leading multimodal models, revealing that Agentic approaches do not consistently outperform Workflow, with performance heavily dependent on maintaining long-horizon consistency and avoiding goal drift—highlighting these as core challenges for future video reasoning agents.

Dataset

- The dataset, VideoDR, consists of 100 carefully curated video-question-answer triples created through a structured annotation process involving three expert annotators with experience in video understanding and web search.

- Video sources were drawn from diverse platforms, with stratified sampling across three dimensions: source, domain, and duration, to ensure broad real-world coverage.

- A strict negative filtering strategy removed: (1) single-scene, highly redundant clips; (2) popular topics easily answerable via text search; and (3) isolated web content lacking verifiable evidence chains.

- Initial filtering retained only videos with coherent, multi-frame visual cues suitable for cross-frame association; longer videos were segmented into semantically focused parts for independent annotation.

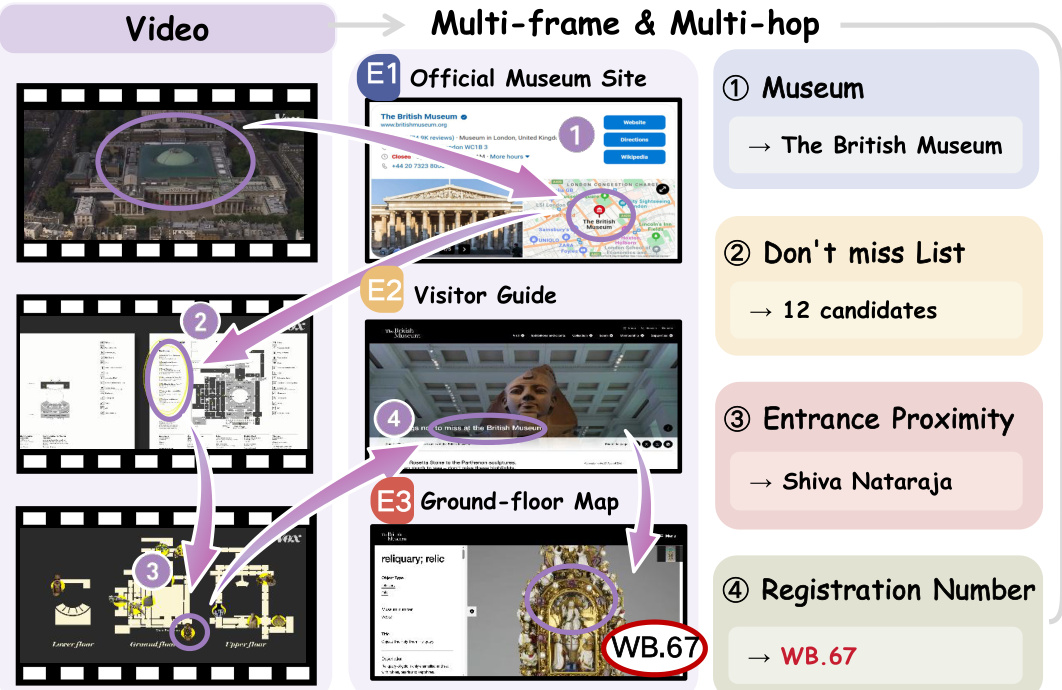

- Each question was designed under two key constraints: (1) multi-frame reasoning—requiring evidence from multiple frames, not a single screenshot; and (2) multi-hop reasoning—necessitating a decomposable reasoning path that links video perception to external web search.

- For verifiability, the web pages containing the key evidence supporting each answer were archived during annotation.

- The dataset spans six domains: Daily Life (33%), Economics (16%), Technology and Culture (15% each), History (11%), and Geography (10%), ensuring balanced coverage across open-domain topics.

- Question lengths average 25.54 tokens, with 95% of questions under 54 tokens, indicating concise yet information-rich phrasing that emphasizes reasoning over input complexity.

- Video durations follow a long-tailed distribution: most are short, but a small subset exceeds 10 minutes, enabling evaluation of both rapid cue detection and long-range cross-segment reasoning.

- The authors use the full dataset for evaluation, with no explicit train/validation/test splits mentioned; the data is treated as a unified benchmark for evaluating multi-hop video reasoning.

- No cropping or frame sampling was applied during data construction—full video segments were used as-is, preserving temporal context.

- Metadata includes domain labels, video duration, question length, and evidence URLs, supporting stratified analysis and benchmark evaluation.

- While the final answers are objectively verifiable, the intermediate search queries and reasoning paths reflect the subjective strategies of the annotators, potentially limiting the diversity of real-world user behavior captured.
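The metadata described above lends itself to stratified analysis. The following is a minimal sketch of how one sample and a per-domain accuracy breakdown might be represented. The class name `VideoDRSample`, its field names, and the helper `accuracy_by_domain` are hypothetical and not taken from the benchmark's release; only the domain shares come from the text.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class VideoDRSample:
    """One video-question-answer triple. All field names are hypothetical."""
    video_id: str
    question: str
    answer: str
    domain: str
    duration_seconds: float
    evidence_urls: List[str] = field(default_factory=list)


# Domain shares reported in the text; with 100 samples each share is also a count.
DOMAIN_SHARE_PERCENT: Dict[str, int] = {
    "Daily Life": 33,
    "Economics": 16,
    "Technology": 15,
    "Culture": 15,
    "History": 11,
    "Geography": 10,
}


def accuracy_by_domain(samples: List[VideoDRSample],
                       is_correct: List[bool]) -> Dict[str, float]:
    """Return {domain: accuracy in percent} from per-sample correctness flags."""
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for sample, ok in zip(samples, is_correct):
        totals[sample.domain] += 1
        hits[sample.domain] += int(ok)
    return {domain: 100.0 * hits[domain] / totals[domain] for domain in totals}
```

The same grouping could be keyed on a duration bucket instead of `domain` to reproduce a video-duration breakdown.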

Method

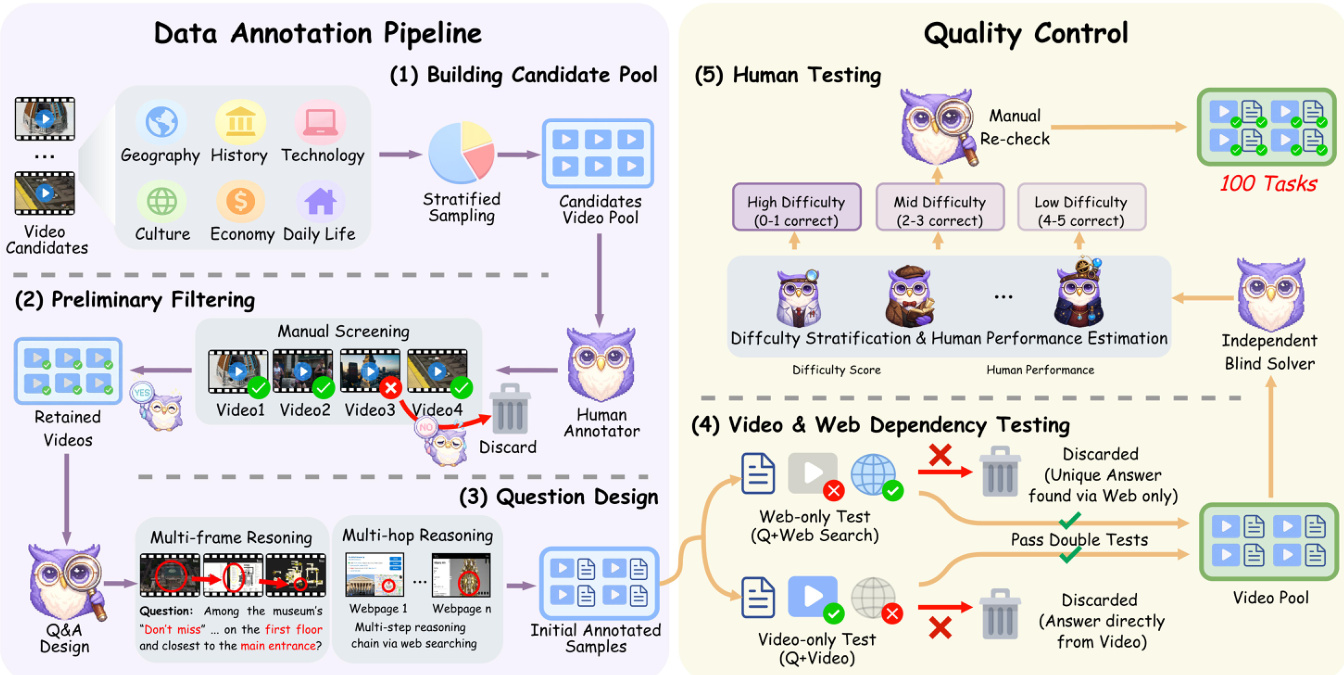

The authors use a data annotation pipeline designed to produce high-quality, diverse video-based reasoning samples. The process begins with the construction of a candidate video pool through stratified sampling across six domains: Geography, History, Technology, Culture, Economics, and Daily Life. This ensures broad coverage of real-world scenarios. The candidate pool then goes through a preliminary filtering stage, in which human annotators manually screen the videos and discard those that fail on relevance or quality.

Following filtering, the pipeline proceeds to question design, where each question must satisfy two reasoning requirements: multi-frame reasoning and multi-hop reasoning. Multi-frame reasoning means the question requires evidence from multiple frames of a video rather than a single screenshot, such as tracking an object's movement or identifying a sequence of events. Multi-hop reasoning means the question requires a decomposable reasoning path that links what is seen in the video to external web sources, such as official museum websites or visitor guides. Together, these requirements ensure that the annotated samples demand both video-centric and web-augmented reasoning.

To ensure the reliability and validity of the annotated data, a rigorous two-stage quality control process is applied. The first stage, Video & Web Dependency Testing, checks whether a question can be answered from the video alone or from the web alone. Samples solvable from either source by itself are discarded, and only those that require both are retained. This ensures that the dataset emphasizes multi-hop reasoning over joint video-web evidence. The second stage, Human Testing, involves human annotators assessing the difficulty of each task and estimating human performance. Tasks are stratified into High, Mid, and Low difficulty levels based on the number of correct responses from human solvers, with thresholds of 0–1 correct, 2–3 correct, and 4–5 correct, respectively. This stratification yields a dataset with varying levels of complexity. Additionally, a manual re-check is performed on a subset of 100 tasks to further validate the quality and consistency of annotations.
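The two quality-control stages can be sketched as two small functions. This is an illustrative reading of the description, not the authors' code: the function names are hypothetical, the dependency check follows the video-only and web-only filtering reported in the experiments, and the assumption of five human solvers per task is inferred from the 0 to 5 range of the thresholds.

```python
NUM_SOLVERS = 5  # assumption: inferred from the 0-5 range of correct answers


def passes_dependency_test(solvable_from_video_only: bool,
                           solvable_from_web_only: bool) -> bool:
    """Stage 1: keep a sample only if neither source alone is enough."""
    return not (solvable_from_video_only or solvable_from_web_only)


def difficulty_level(num_correct: int) -> str:
    """Stage 2: map the number of correct human answers to a difficulty label.

    Thresholds follow the text: 0-1 correct -> High, 2-3 -> Mid, 4-5 -> Low.
    """
    if not 0 <= num_correct <= NUM_SOLVERS:
        raise ValueError("num_correct must lie between 0 and NUM_SOLVERS")
    if num_correct <= 1:
        return "High"
    if num_correct <= 3:
        return "Mid"
    return "Low"
```

Note that the labels run opposite to the counts: the fewer solvers answer correctly, the higher the difficulty.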

Experiment

- Video & Web Dependency Testing: Confirms that valid samples require both video and web evidence, with 100 samples retained after filtering out those solvable via video-only or web-only means.

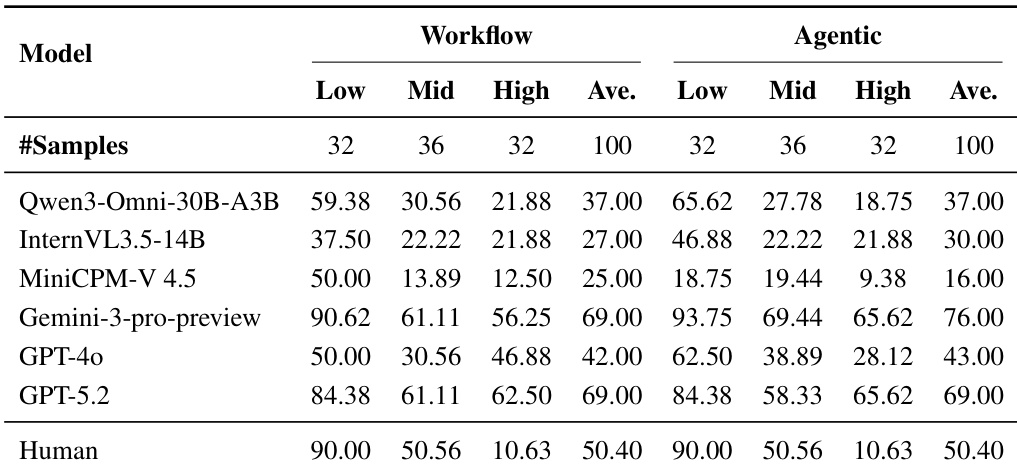

- Human Testing: Human participants achieve a mean success rate of 50.4% across all samples, with 90.0% on Low, 50.6% on Mid, and 10.6% on High difficulty, validating the difficulty stratification and annotation correctness.

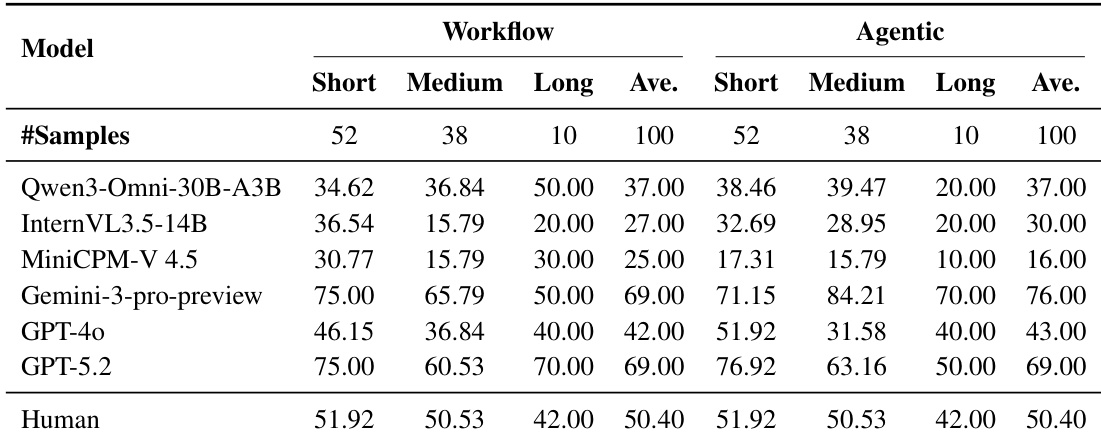

- Main Results: Gemini-3-pro-preview leads under both Workflow (69%) and Agentic (76%) settings, with Agentic showing gains on Mid/High difficulty for strong models but causing degradation for mid-tier models like GPT-4o on High difficulty.

- Video-duration Stratification: Longer videos amplify the trade-off between paradigms—Agentic benefits strong models (e.g., Gemini-3-pro-preview improves from 50% to 70% on Long videos), while weaker models (e.g., Qwen3-Omni-30B-A3B) drop sharply (50% to 20%).

- Domain Stratification: Agentic outperforms Workflow in Technology (64.29% → 85.71% for Gemini-3-pro-preview) but underperforms in Geography, indicating that stable visual anchors are critical for ambiguous domains.

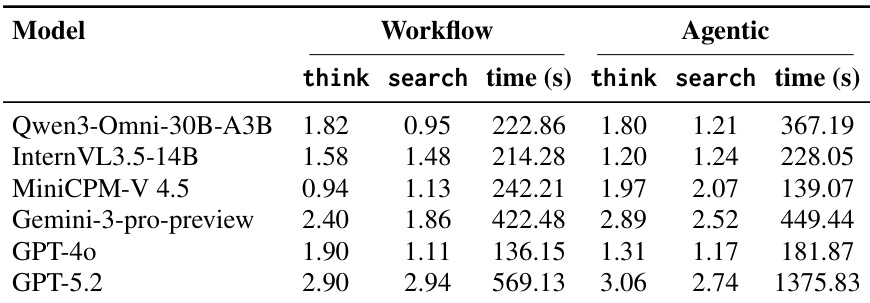

- Tool-use Analysis: Tool usage frequency does not correlate with accuracy; Gemini-3-pro-preview uses tools more effectively, while weaker models (e.g., MiniCPM-V 4.5) show increased tool use with reduced accuracy, indicating poor evidence filtering.

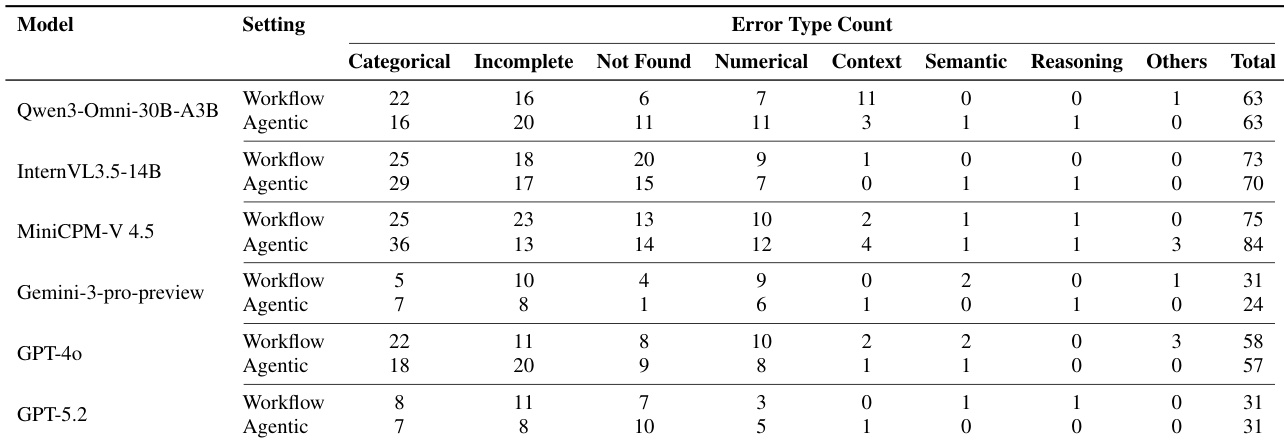

- Error Analysis: Categorical Error dominates across models, especially in Agentic, due to uncorrected early perception errors; numerical errors remain a persistent bottleneck across all models, with no significant improvement in Agentic.

The authors analyze error types across models and settings, showing that Categorical Error is the dominant failure mode, increasing for some models under the Agentic setting. This suggests that errors in initial visual perception propagate more easily when the model cannot revisit the video, leading to greater drift in downstream reasoning.

The authors analyze tool usage in the Workflow and Agentic paradigms, showing that higher numbers of tool calls do not necessarily lead to better performance. Gemini-3-pro-preview demonstrates more effective use of tools, achieving the highest accuracy with a balanced number of think and search calls, while other models either fail to improve or degrade in performance despite increased tool usage.

Results show that under the Workflow and Agentic paradigms, model performance varies significantly with video duration, with Gemini-3-pro-preview achieving the highest accuracy across all categories. For strong models, the Agentic setting outperforms Workflow on longer videos: Gemini-3-pro-preview improves from 50.00% to 70.00% on Long videos. Open-source models such as Qwen3-Omni-30B-A3B and MiniCPM-V 4.5, by contrast, show substantial drops in accuracy on Long videos under Agentic.

The authors use a domain-specific performance comparison to evaluate models across different question categories under Workflow and Agentic settings. Results show that Gemini-3-pro-preview achieves the highest accuracy in most domains under both settings, with significant improvements in Technology (64.29%→85.71%) and consistent high performance across all domains, while other models exhibit varying strengths and weaknesses depending on the domain and paradigm.

Results show that the Agentic paradigm improves accuracy for Gemini-3-pro-preview by 7 percentage points (69% to 76%) and leaves GPT-5.2 unchanged, while lower-performing models such as Qwen3-Omni-30B-A3B and MiniCPM-V 4.5 show no gain or even degradation in the Agentic setting. Performance declines significantly with increasing difficulty across both paradigms, mirroring human performance, which falls from 90.0% on Low to 10.6% on High-difficulty samples.
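The Workflow-to-Agentic comparisons quoted in this section are differences in percentage points, which a short sketch makes explicit. The accuracy pairs below are copied from the text; the dictionary layout and the helper name `agentic_gain` are illustrative assumptions.

```python
# Accuracies in percent quoted in the text, as (Workflow, Agentic) pairs.
REPORTED = {
    "Gemini-3-pro-preview, overall": (69.0, 76.0),
    "Gemini-3-pro-preview, Long videos": (50.0, 70.0),
    "Gemini-3-pro-preview, Technology": (64.29, 85.71),
    "Qwen3-Omni-30B-A3B, Long videos": (50.0, 20.0),
}


def agentic_gain(workflow_acc: float, agentic_acc: float) -> float:
    """Return the Agentic minus Workflow difference in percentage points."""
    return round(agentic_acc - workflow_acc, 2)


if __name__ == "__main__":
    for name, (workflow_acc, agentic_acc) in REPORTED.items():
        print(f"{name}: {agentic_gain(workflow_acc, agentic_acc):+.2f} pp")
```

A positive value means the Agentic setting helped, and a negative value means it hurt, as for Qwen3-Omni-30B-A3B on Long videos.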