Command Palette

Search for a command to run...

ميمRL: وكالات تتطور ذاتيًا من خلال التعلم المُعزز في الوقت الفعلي على الذاكرة التكرارية

ميمRL: وكالات تتطور ذاتيًا من خلال التعلم المُعزز في الوقت الفعلي على الذاكرة التكرارية

الملخص

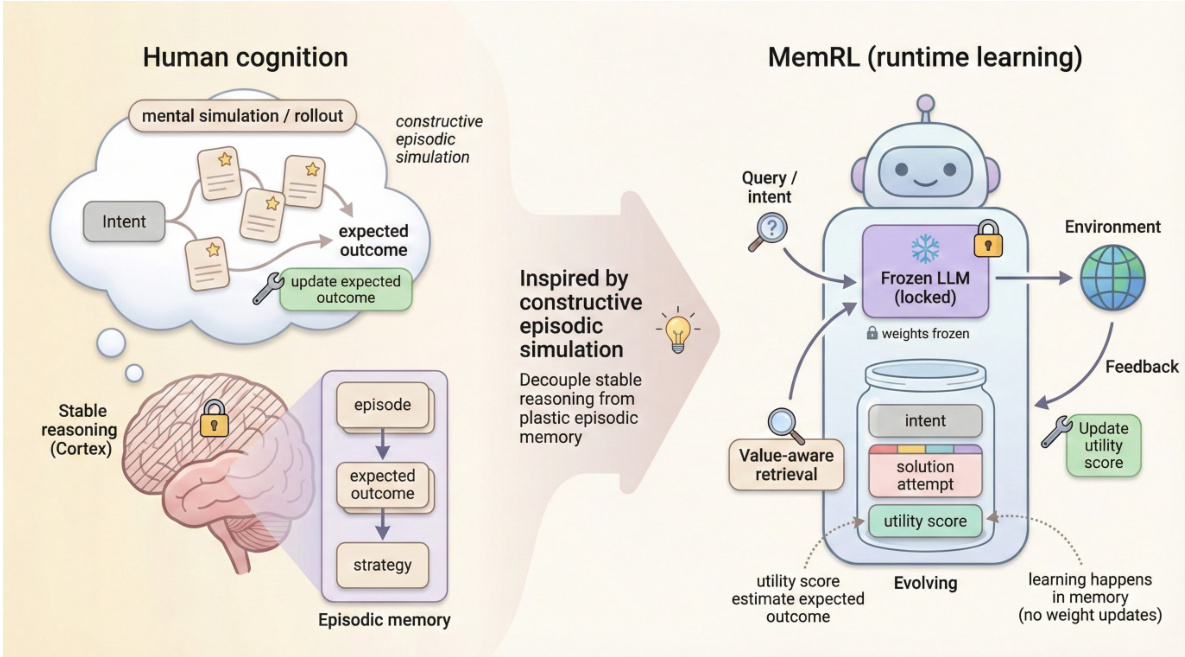

يُعد القدرة على اكتساب المهارات الجديدة من خلال التمثيل التكويني للذكريات – أي استرجاع التجارب الماضية لصياغة حلول للمهام الجديدة – أحد السمات البارزة للذكاء البشري. وعلى الرغم من أن النماذج اللغوية الكبيرة (LLMs) تمتلك قدرات تفكير قوية، إلا أنها تواجه صعوبات في محاكاة هذه القدرة على التطور الذاتي: فالتدرّب الدقيق (fine-tuning) مكلف من الناحية الحسابية وعرضة للازدحام الذاكرة (catastrophic forgetting)، في حين تعتمد الطرق القائمة على الذاكرة حاليًا على مطابقة معاني سلبية غالبًا ما تسترجع ضوضاء. ولحل هذه التحديات، نقترح إطارًا يُسمى MemRL، الذي يمكّن الوكلاء من التطور الذاتي عبر التعلم المعزز غير المعلمي (non-parametric reinforcement learning) على الذاكرة التذكارية. يُميّز MemRL بوضوح بين عملية التفكير الثابتة لنموذج لغوي كبير مُجمّد (frozen LLM) والذاكرة المرنة والمتغيرة. على عكس الطرق التقليدية، يستخدم MemRL آلية استرجاع مزدوجة الطور (Two-Phase Retrieval)، حيث يتم أولًا فلترة المرشحين بناءً على الترابط المعنوي، ثم اختيارهم بناءً على قيم Q المُتعلّمة (القيمة المفيدة). وتُحدّث هذه القيم باستمرار عبر التغذية الراجعة البيئية بطريقة تجربة وخطأ، ما يمكّن الوكيل من التمييز بين الاستراتيجيات ذات القيمة العالية والضوضاء المشابهة. أظهرت تجارب واسعة النطاق على مجموعات HLE، BigCodeBench، ALFWorld، وLifelong Agent Bench أن MemRL يتفوق بشكل كبير على أفضل النماذج الحالية. كما أكدت تجارب التحليل أن MemRL نجح في التوفيق الفعّال بين معضلة الاستقرار والملاءمة (stability-plasticity dilemma)، مما يتيح تحسينًا مستمرًا أثناء التشغيل دون الحاجة إلى تحديث الأوزان.

One-sentence Summary

The authors from Shanghai Jiao Tong University, Xidian University, National University of Singapore, Shanghai Innovation Institute, MemTensor (Shanghai) Technology Co., Ltd., and University of Science and Technology of China propose MEMRL, a non-parametric reinforcement learning framework that enables LLM agents to self-evolve through dynamic episodic memory. By decoupling a frozen LLM from a plastic memory module and employing a two-phase retrieval mechanism—first by semantic relevance and then by learned Q-values—MEMRL selectively updates high-utility strategies via trial-and-error feedback, overcoming catastrophic forgetting and noise in retrieval. This approach achieves continuous runtime improvement without weight updates, significantly outperforming state-of-the-art baselines on HLE, BigCodeBench, ALFWorld, and Lifelong Agent Bench, demonstrating effective resolution of the stability-plasticity dilemma in lifelong learning scenarios.

Key Contributions

-

The paper addresses the challenge of enabling large language models to continuously learn and adapt after deployment without catastrophic forgetting, by proposing MEMRL—a framework that decouples stable reasoning (via a frozen LLM) from plastic episodic memory, thus reconciling the stability-plasticity dilemma in runtime learning.

-

MEMRL introduces a novel Two-Phase Retrieval mechanism that first filters experiences by semantic relevance and then selects them based on learned Q-values (utility), with these utilities refined through environmental feedback using a non-parametric reinforcement learning approach, allowing the agent to distinguish high-value strategies from noise.

-

Extensive experiments on HLE, BigCodeBench, ALFWorld, and Lifelong Agent Bench show MEMRL consistently outperforms state-of-the-art baselines, with analysis confirming that utility-driven updates improve task success and maintain structural integrity through Bellman contraction, ensuring stable, continuous improvement without weight updates.

Introduction

The authors address the challenge of enabling large language models to continuously improve their performance after deployment without modifying their frozen parameters—a critical need for real-world agent applications where stability and adaptability must coexist. Prior approaches either rely on computationally expensive fine-tuning, which risks catastrophic forgetting, or passive retrieval methods like RAG that lack a mechanism to evaluate the actual utility of past experiences, making them ineffective at distinguishing high-value strategies from noise. To overcome these limitations, the authors propose MEMRL, a framework that decouples stable reasoning (handled by a frozen LLM) from plastic episodic memory, using non-parametric reinforcement learning to optimize memory usage. MEMRL introduces a Two-Phase Retrieval mechanism that first filters candidates by semantic relevance and then selects them based on learned Q-values, which are updated via environmental feedback using Bellman updates. This closed-loop process enables the agent to self-evolve at runtime, continuously refining its memory to prioritize high-utility experiences. The framework is validated across multiple benchmarks, demonstrating consistent performance gains and theoretical stability through convergence guarantees, establishing a new paradigm for runtime learning in LLM agents.

Method

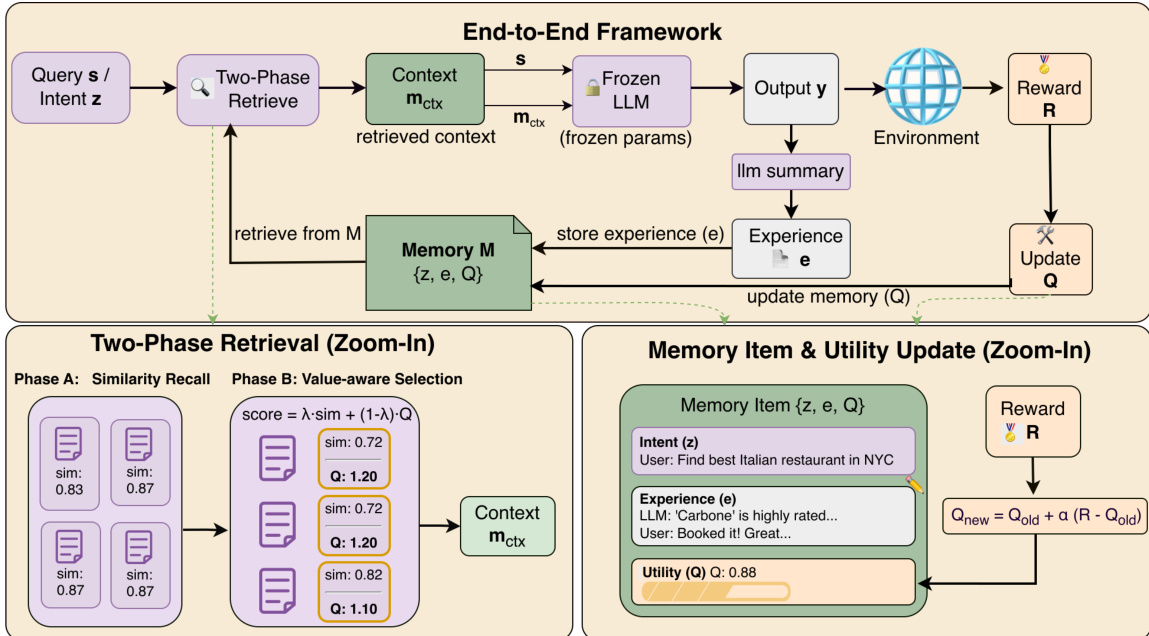

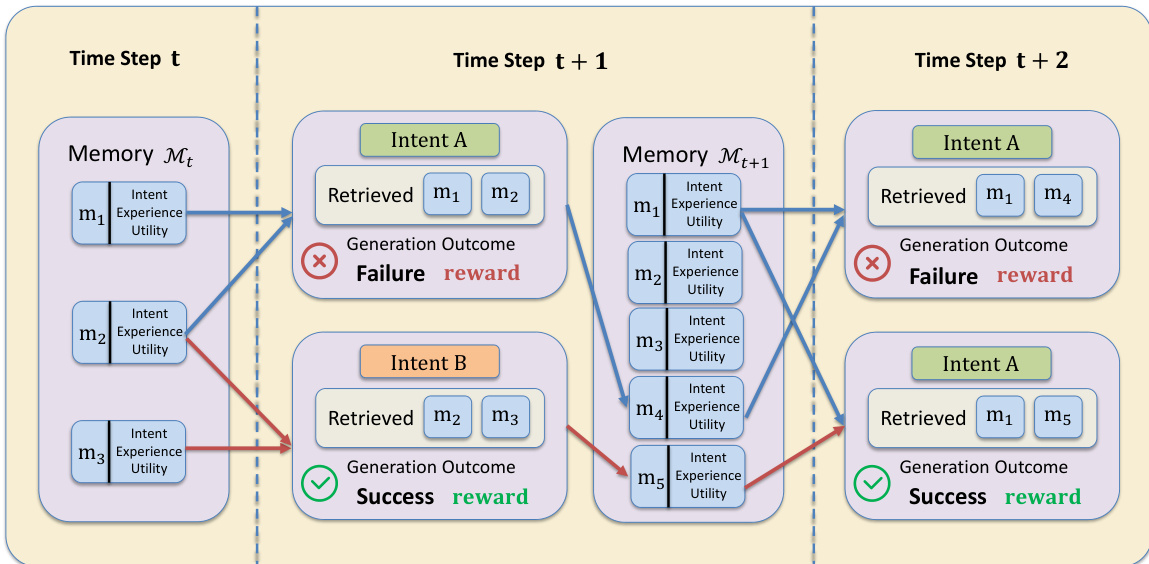

The authors leverage a non-parametric reinforcement learning framework, MemRL, to enable a frozen large language model (LLM) to self-evolve through interaction with an environment. The core of the approach is to treat memory retrieval as a value-based decision-making process within a Memory-Based Markov Decision Process (M-MDP) formulation. The agent's behavior is decomposed into two distinct phases: Retrieve and Generation. The joint policy for generating a response yt is defined as a marginal over all possible retrieved memory items, combining a retrieval policy μ(m∣st,Mt) that selects a memory context m and an inference policy pLLM(yt∣st,m) that generates the output conditioned on the query and the retrieved context. The key innovation lies in optimizing the retrieval policy μ directly, rather than relying on static similarity metrics, to select memories based on their functional utility.

The framework's architecture is built around a structured memory bank M, which is organized as a set of Intent-Experience-Utility triplets (zi,ei,Qi). Here, zi is the intent embedding of a past query, ei is the raw experience (e.g., a successful solution trace), and Qi is the learned utility value, which approximates the expected return of applying that experience to similar intents. This structure enables the agent to make decisions based on the proven effectiveness of past experiences, not just their semantic similarity.

To select the most useful context, MemRL employs a Two-Phase Retrieval mechanism. Phase A, Similarity Recall, acts as a coarse filter. Given a current query intent s, it computes the cosine similarity between s and all stored intent embeddings zi and retrieves a candidate pool C(s) of the top-K most semantically similar memories. This ensures the retrieval is contextually relevant. Phase B, Value-aware Selection, refines this pool by selecting the optimal context based on learned utility. It uses a composite scoring function that balances semantic similarity and utility: score(s,zi,ei)=(1−λ)⋅sim^(s,zi)+λ⋅Q^(zi,ei), where ^ denotes z-score normalization. This allows the policy to prioritize memories with high utility, even if they are not the most semantically similar, effectively filtering out "distractor" memories.

The learning process occurs entirely within the memory space, without modifying the LLM's weights. After the agent generates an output and receives a reward R from the environment, the utility scores of the retrieved memories are updated. This is done using a Monte Carlo-style update rule: Qnew←Qold+α(R−Qold), where α is the learning rate. This update drives the utility estimate toward the empirical expected return of using that experience. Concurrently, the experience is summarized and stored as a new triplet in the memory bank, enabling continual expansion of the agent's knowledge base. This process of utility update, inspired by memory reconsolidation, allows the agent to learn from its successes and failures, continuously refining its retrieval policy.

Experiment

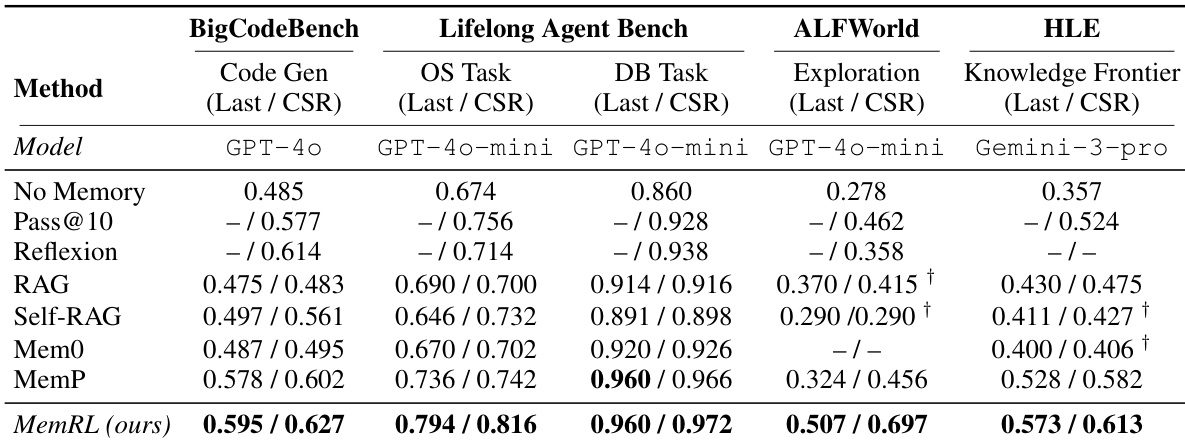

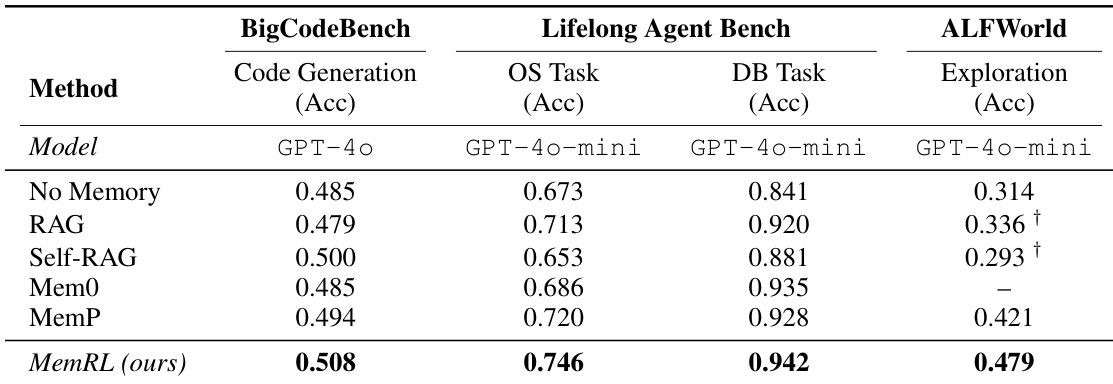

- MEMRL outperforms multiple baselines—including RAG, Self-RAG, MemP, and Reflexion—across four diverse benchmarks: BigCodeBench (code generation), ALFWorld (embodied navigation), Lifelong Agent Bench (OS/DB interaction), and Humanity's Last Exam (HLE, complex reasoning), validating its effectiveness in both runtime learning and transfer settings.

- On ALFWorld, MEMRL achieves a last-epoch accuracy of 0.507, a 56% improvement over MemP (0.324) and 82% over the no-memory baseline (0.278), with a cumulative success rate (CSR) of 0.697, demonstrating superior exploration and solution discovery in high-complexity, procedural tasks.

- In HLE, MEMRL reaches 0.573 last accuracy and 61.3% CSR, significantly outperforming MemP (0.528), highlighting its ability to learn from near-miss failures and retain reusable corrective heuristics.

- On BigCodeBench, MEMRL achieves 0.508 accuracy, surpassing Self-RAG (0.500) and MemP (0.494), while in Lifelong Agent Bench it attains 0.746 accuracy, outperforming RAG (0.713), confirming strong generalization across domains.

- Ablation studies show that the value-aware retrieval mechanism with balanced Q-weighting (λ = 0.5) yields the best performance, with pure semantic retrieval plateauing early and pure RL showing instability due to context detachment.

- Compact retrieval settings (k₁ = 5, k₂ = 3) outperform larger ones (k₁ = 10, k₂ = 5) on HLE, indicating that high-quality, low-noise memory recall is more effective than high-volume retrieval for complex reasoning.

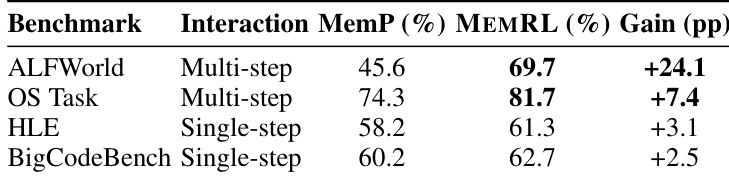

- MEMRL acts as a trajectory verifier, significantly improving performance on multi-step tasks (e.g., +24.1 pp on ALFWorld) by filtering out memories that fail in later steps despite initial semantic match.

- The Q-critic shows strong predictive power (Pearson r = 0.861), with high-Q failure memories contributing to robustness by encoding transferable corrective heuristics, as demonstrated in case studies where high-Q failures led to 100% downstream success.

- MEMRL exhibits superior stability, with a lower forgetting rate (0.041 vs. 0.051 for MemP) and synchronized growth in CSR and epoch accuracy, attributed to theoretical guarantees from Bellman contraction and effective noise filtering via normalization and similarity gating.

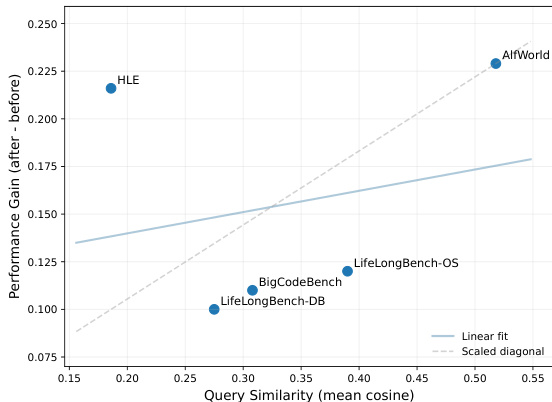

- Performance gains correlate with intra-dataset similarity, with ALFWorld (similarity 0.518) showing the highest gain (Δ = +0.229) due to strong pattern reuse, while HLE (similarity 0.186) achieves high gain (Δ = +0.216) through runtime memorization of unique, domain-specific solutions, showcasing MEMRL’s dual capability in generalization and specific knowledge acquisition.

Results show that MEMRL's performance gain correlates with query similarity, with higher gains observed in tasks like ALFWorld that have greater structural repetition. However, HLE, despite its low similarity, achieves a high gain through runtime memorization, indicating that MEMRL supports both pattern generalization and specific knowledge acquisition depending on task structure.

The authors use the table to compare MEMRL against MemP across four benchmarks, showing that MEMRL achieves higher accuracy in all cases. The performance gain is most pronounced in multi-step tasks like ALFWorld, where MEMRL improves accuracy by 24.1 percentage points, while the gain is smaller in single-step tasks such as BigCodeBench, indicating that MEMRL's value-aware retrieval is particularly effective in complex, sequential environments.

The authors use MEMRL to evaluate its performance against various memory-augmented baselines across four benchmarks, including code generation, OS interaction, and embodied navigation. Results show that MEMRL achieves the highest accuracy on all tasks, with significant improvements over baselines such as RAG and MemP, particularly in complex exploration-heavy environments like ALFWorld.

The authors use MEMRL to evaluate its performance against various memory-augmented baselines across four benchmarks, including code generation, embodied navigation, OS/DB interaction, and complex reasoning. Results show that MEMRL consistently outperforms all baselines in both runtime learning and transfer settings, achieving the highest accuracy and cumulative success rate on all tasks, with particularly strong gains in exploration-heavy environments like ALFWorld and HLE.