Command Palette

Search for a command to run...

LongFly: التوجيه البصري واللغوي للطائرات بدون طيار على مدى طويل مع دمج السياق الزماني المكاني

LongFly: التوجيه البصري واللغوي للطائرات بدون طيار على مدى طويل مع دمج السياق الزماني المكاني

Wen Jiang Li Wang Kangyao Huang Wei Fan Jinyuan Liu Shaoyu Liu Hongwei Duan Bin Xu Xiangyang Ji

الملخص

تمثل الطائرات غير المأهولة بدون طيار (UAVs) أدوات حيوية في عمليات البحث والإنقاذ بعد الكوارث، وتواجه تحديات مثل الكثافة العالية للمعلومات، والتغير السريع في وجهات النظر، والهياكل الديناميكية، خاصةً في المهام ذات المجال الطويل للرؤية. ومع ذلك، تواجه الطرق الحالية لتنقل الرؤية واللغة (VLN) الخاصة بالطائرات بدون طيار صعوبات في نمذجة السياق الزماني المكاني الطويل في البيئات المعقدة، مما يؤدي إلى عدم دقة التوافق المعاني وسوء استقرار تخطيط المسارات. ولحل هذه المشكلة، نقترح "LongFly"، وهي إطار عمل لنمذجة السياق الزماني المكاني للتنقل طويل المدى للطائرات بدون طيار باستخدام الرؤية واللغة. يعتمد LongFly على استراتيجية نمذجة زمانية-مكانية تعتمد على الذاكرة التاريخية، والتي تقوم بتحويل البيانات التاريخية المجزأة والمتكررة إلى تمثيلات منظمة ومختصرة وغنية بالمعلومات. أولاً، نقترح وحدة ضغط الصور التاريخية القائمة على "المساحات" (slot-based)، والتي تقوم باستخلاص ديناميكي لملاحظات متعددة الزوايا إلى تمثيلات سياقية ذات طول ثابت. ثم، نُدخل وحدة ترميز المسار الزماني المكاني لالتقاط الديناميكية الزمنية والبنية المكانية لمسارات الطائرات بدون طيار. وأخيرًا، لدمج السياق الزماني المكاني الحالي مع الملاحظات الحالية، نصمم وحدة تكامل متعددة الوسائط موجهة بالمحفزات، والتي تدعم الاستدلال الزمني والتنبؤ القوي بنقاط المرور (Waypoints). أظهرت النتائج التجريبية أن LongFly تتفوق على أحدث النماذج الحالية لتنقل الرؤية واللغة للطائرات بدون طيار بنسبة 7.89٪ في معدل النجاح، وبنسبة 6.33٪ في معدل النجاح الموزون طول المسار، وذلك بشكل متسق في كل من البيئات المرئية والمُشاهدَة سابقًا وغير المرئية.

One-sentence Summary

The authors, affiliated with institutions in China including the National Natural Science Foundation of China, Chongqing Natural Science Foundation, and the National High Technology Research and Development Program, propose LongFly, a spatiotemporal context modeling framework for long-horizon UAV vision-and-language navigation that integrates history-aware visual compression, trajectory encoding, and prompt-guided multimodal fusion. By dynamically distilling multi-view historical observations into compact semantic slots and aligning them with language instructions through a structured prompt, LongFly enables robust, time-aware waypoint prediction in complex 3D environments, achieving 7.89% higher success rate and 6.33% better success weighted by path length than state-of-the-art methods across seen and unseen scenarios.

Key Contributions

-

LongFly addresses the challenge of long-horizon UAV vision-and-language navigation in complex, dynamic environments by introducing a unified spatiotemporal context modeling framework that enables stable, globally consistent decision-making despite rapid viewpoint changes and high information density.

-

The method features a slot-based historical image compression module that dynamically distills multi-view past observations into compact, fixed-length representations, and a spatiotemporal trajectory encoding module that captures both temporal dynamics and spatial structure of UAV flight paths.

-

Experimental results show LongFly achieves 7.89% higher success rate and 6.33% higher success weighted by path length than state-of-the-art baselines across both seen and unseen environments, demonstrating robust performance in long-horizon navigation tasks.

Introduction

The authors address long-horizon vision-and-language navigation (VLN) for unmanned aerial vehicles (UAVs), a critical capability for post-disaster search and rescue, environmental monitoring, and geospatial data collection in complex, GPS-denied environments. While prior UAV VLN methods have made progress in short-range tasks, they struggle with long-horizon navigation due to fragmented, static modeling of historical visual and trajectory data, leading to poor semantic alignment and unstable path planning. Existing approaches often treat history as isolated memory cues without integrating them into a unified spatiotemporal context aligned with language instructions and navigation dynamics. To overcome this, the authors propose LongFly, a spatiotemporal context modeling framework that dynamically compresses multi-view historical images into compact, instruction-relevant representations via a slot-based compression module, encodes trajectory dynamics through a spatiotemporal trajectory encoder, and fuses multimodal context with current observations using a prompt-guided integration module. This enables robust, time-aware reasoning and consistent waypoint prediction across long sequences, achieving 7.89% higher success rate and 6.33% better success weighted by path length than state-of-the-art baselines in both seen and unseen environments.

Method

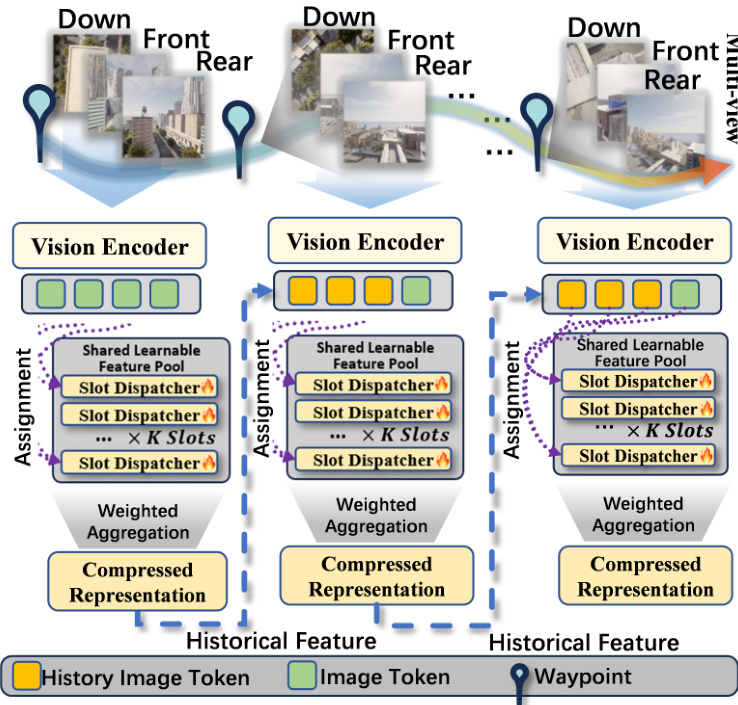

The authors leverage a spatiotemporal context modeling framework named LongFly to address the challenges of long-horizon UAV visual-language navigation (VLN). The overall architecture integrates three key modules to transform fragmented historical data into structured, compact representations that support robust waypoint prediction. The framework begins by processing the current command instruction and the UAV's current visual observation, which are tokenized and projected into a shared latent space. Concurrently, historical multi-view images and waypoint trajectories are processed through dedicated modules to generate compressed visual and motion representations.

The first module, Slot-based Historical Image Compression (SHIC), addresses the challenge of efficiently storing and retrieving long-horizon visual information. It processes the sequence of historical multi-view images R1,R2,…,Rt−1 using a CLIP-based visual encoder Fv to extract visual tokens Zi at each time step. These tokens are then used to update a fixed-capacity set of learnable visual memory slots Si. The update mechanism treats each slot as a query and the visual tokens as keys and values, computing attention weights to perform a weighted aggregation of the new visual features. This process is implemented using a gated recurrent unit (GRU) to update the slot memory, resulting in a compact visual memory representation St−1 that captures persistent landmarks and spatial layouts. This approach reduces the memory and computational complexity from O(t) to O(1).

The second module, Spatio-temporal Trajectory Encoding (STE), models the UAV's motion history. It takes the historical waypoint sequence P1,P2,…,Pt−1 and transforms the absolute coordinates into relative motion representations. For each step, the displacement vector ΔPi is computed, which is then decomposed into a unit direction vector di and a motion scale ri. These are concatenated to form a 4D motion descriptor Mi. To encode temporal ordering, a time embedding τi is added, resulting in a time-aware motion representation Mi. This representation is then projected into a d-dimensional trajectory token ti using a residual MLP encoder, producing a sequence of trajectory tokens Tt−1 that serve as an explicit motion prior.

The third module, Prompt-Guided Multimodal Integration (PGM), integrates the historical visual memory, trajectory tokens, and the current instruction and observation into a structured prompt for the large language model. The natural language instruction L is encoded using a BERT encoder and projected into a unified latent dimension. The compressed visual memory St−1 and trajectory tokens Tt−1 are also projected into the same space. These components, along with the current visual observation Rt, are organized into a structured prompt that includes the task instruction, a Qwen-compatible conversation template, and UAV history status information. This prompt is then fed into a large language model (Qwen2.5-3B) to predict the next 3D waypoint Pt+1 in continuous space. This design enables coherent long-horizon multimodal reasoning without requiring additional feature-level fusion mechanisms.

Experiment

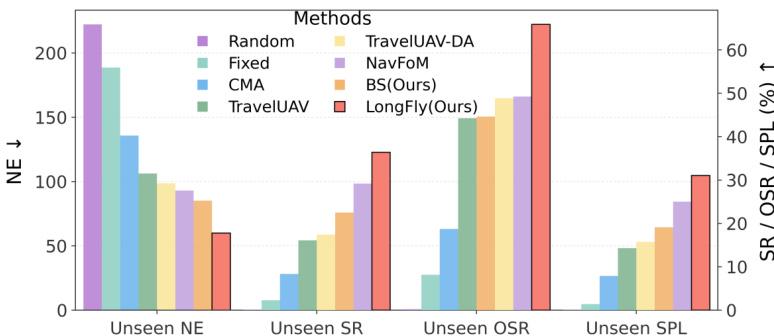

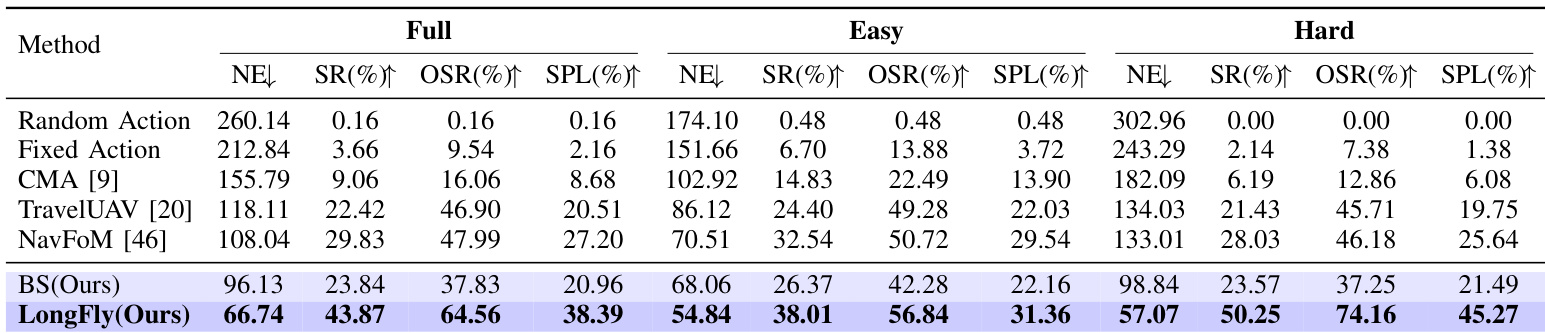

- LongFly demonstrates superior performance on the OpenUAV benchmark, achieving 33.03m lower NE, 7.22% higher SR, and over 6.04% improvement in OSR and SPL compared to baselines on the seen dataset, with the largest gains on the Hard split.

- On the unseen object set, LongFly achieves 43.87% SR and 64.56% OSR, outperforming NavFoM by 14.04% in SR and 16.57% in OSR, with significant gains in NE and SPL on the Hard subset.

- On the unseen map set, LongFly attains 24.88% OSR and 7.98% SPL in the Hard split, the only method to maintain reasonable performance, while others fail (OSR ≈ 0), highlighting its robustness to novel layouts.

- Ablation studies confirm that both SHIC and STE modules are essential, with their combination yielding the best results; prompt-guided fusion and longer history lengths significantly improve performance, especially in long-horizon tasks.

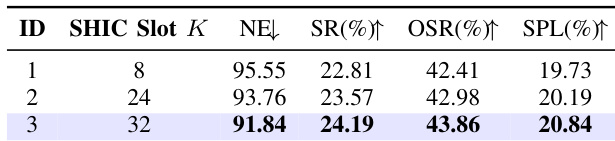

- SHIC slot number analysis shows optimal performance at K=32, with improvements in SR, SPL, and NE as slots increase.

- Qualitative results demonstrate LongFly’s ability to maintain global consistency and avoid local traps through spatiotemporal context integration, unlike the baseline that drifts due to myopic reasoning.

Results show that LongFly significantly outperforms all baseline methods across unseen environments, achieving the lowest NE and highest SR, OSR, and SPL. The model demonstrates robust generalization, particularly in unseen object and map settings, with the largest gains observed in challenging long-horizon scenarios.

Results show that LongFly significantly outperforms all baseline methods across all difficulty levels, achieving the lowest NE and highest SR, OSR, and SPL. On the Full split, LongFly reduces NE by 29.39 compared to the baseline BS and improves SR by 20.03 percentage points, demonstrating its effectiveness in long-horizon navigation.

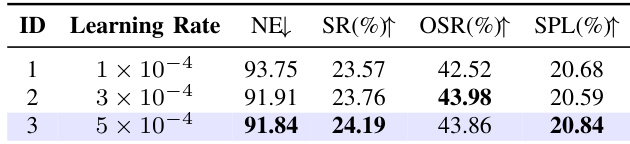

Results show that the model achieves the best performance at a learning rate of 5 × 10⁻⁴, with the highest success rate (SR) of 24.19% and the highest SPL of 20.84%, while maintaining a low NE of 91.84. Performance remains stable across different learning rates, with only minor variations in SR, OSR, and SPL, indicating robustness to learning rate changes.

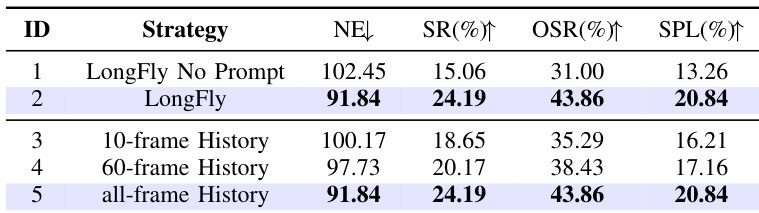

Results show that LongFly with prompt-guided fusion achieves significantly better performance than the version without prompts, reducing NE from 102.45 to 91.84 and increasing SR, OSR, and SPL. The model with all-frame history performs as well as the 60-frame version, indicating that longer history provides diminishing returns, while prompt guidance is essential for aligning spatiotemporal context with instructions.

The authors conduct an ablation study on the number of SHIC slots, showing that increasing the slot count from 8 to 32 improves performance across all metrics. With 32 slots, the model achieves the best results, reducing NE to 91.84, increasing SR to 24.19%, OSR to 43.86%, and SPL to 20.84%.