Advancing Open-source World Models

Abstract

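We present LingBot-World, an open-source world simulator built on video generation technology and positioned as a top-tier world model. LingBot-World offers the following features: (1) it maintains high fidelity and robust dynamics across diverse environments, including realistic, scientific, and anime-style settings; (2) it achieves a minute-level prediction horizon (also referred to as "long-term memory") while preserving contextual consistency over time; and (3) it supports real-time interactivity, achieving sub-second latency while generating 16 frames per second. By publicly releasing our code and models, we aim to narrow the gap between open-source and closed-source technologies. We believe our release will give the research community practical applications in areas such as content creation, game development, and robot learning.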

One-sentence Summary

Robbyant Team introduces LingBot-World, an open-source world simulator enabling minute-long, real-time interactive video generation across diverse domains via a novel three-stage training pipeline and causal architecture, bridging the gap between closed-source models and community-driven innovation in content creation, gaming, and embodied AI.

Key Contributions

- LingBot-World addresses the gap between passive video generation and interactive world simulation by introducing a scalable data engine with hierarchical captioning that disentangles motion control from scene generation, enabling precise action-contingent dynamics across diverse domains like realism, science, and cartoons.

- The model employs a multi-stage evolutionary training pipeline—pre-training on high-fidelity video priors, middle-training with MoE architecture for long-term memory and environmental consistency, and post-training with causal attention and distillation for real-time inference—achieving sub-second latency at 16 fps.

- Evaluated against recent interactive world models, LingBot-World delivers state-of-the-art performance in dynamic degree and generation horizon while remaining fully open-source, with public code, weights, and benchmarks supporting applications in content creation, gaming, and embodied AI.

Introduction

The authors leverage advances in video generation to build LingBot-World, an open-source world model that simulates dynamic, interactive environments across diverse domains—from photorealistic scenes to stylized or scientific worlds. Unlike prior models that generate short, passive clips without grounded physics or long-term consistency, LingBot-World tackles key limitations: scarce interactive training data, temporal drift over minute-long sequences, and computational bottlenecks that prevent real-time control. Their main contribution is a three-part system: a hierarchical data engine that disentangles motion from scene structure, a multi-stage training pipeline that evolves a diffusion model into an autoregressive simulator with long-term memory, and real-time inference optimizations enabling sub-second latency. The model supports promptable world events and downstream applications like agent training and 3D reconstruction—all released publicly to accelerate open research in embodied AI.

Dataset

The authors use a unified data engine with three components: acquisition, profiling, and captioning.

Dataset Composition and Sources

The dataset combines three main sources:

- Real-world videos (first- and third-person perspectives) of humans, animals, and vehicles.

- Game data with synchronized RGB frames, user actions (e.g., WASD), and camera parameters.

- Synthetic videos from Unreal Engine, featuring collision-free, randomized camera trajectories with ground-truth intrinsics and extrinsics.
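Game samples pair each frame with the keys pressed and the camera parameters. The sketch below shows one way such a record might be stored and how WASD keys could become a per-frame action vector, assuming a multi-hot encoding. The key order, the `GameFrame` layout, and `encode_keys` are hypothetical illustrations, not the authors' data format.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical key order; the source only says actions such as WASD are recorded.
KEYS = ("W", "A", "S", "D")


@dataclass
class GameFrame:
    """One synchronized game sample: frame index, pressed keys, camera pose."""
    frame_idx: int
    pressed: List[str]
    # 3x4 extrinsic matrix flattened row-major (hypothetical layout).
    extrinsic: List[float] = field(default_factory=lambda: [0.0] * 12)


def encode_keys(pressed: List[str]) -> List[int]:
    """Multi-hot vector over KEYS; keys outside KEYS are ignored."""
    held = {k.upper() for k in pressed}
    return [1 if k in held else 0 for k in KEYS]


frames = [GameFrame(0, ["w"]), GameFrame(1, ["W", "D"]), GameFrame(2, [])]
actions = [encode_keys(f.pressed) for f in frames]
print(actions)  # [[1, 0, 0, 0], [1, 0, 0, 1], [0, 0, 0, 0]]
```

A multi-hot vector is used here because several keys can be held at once (for example, moving forward while strafing right).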

Key Details by Subset

- General Video Curator: Filters open-source and in-house videos for diverse motion patterns and viewpoints (walking, cycling, transit) from ego and third-person angles. Prioritizes world exploration and environmental variety.

- Game Data: Captured via a dedicated platform with clean visuals, timestamped controls, and recorded camera paths. Covers eight behavior categories: Navigation (Free navigation, Loop roaming, Transition navigation), Sightseeing, Long-tail scenarios, Stationary observation, Backward navigation, and World interaction.

- Synthetic Pipeline: Uses Unreal Engine to generate trajectories via two modes:

  - Procedural path generation (geometric patterns, multi-point interpolation).

  - Real-world trajectory import (mapped human motion with natural jitter and velocity variation).
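The procedural mode can be illustrated with piecewise-linear interpolation through a set of waypoints. This is a minimal sketch that assumes camera positions only (no orientation) and uses Gaussian noise as a crude stand-in for the jitter that the source obtains by importing real human trajectories; `interpolate_path` and `add_jitter` are hypothetical helpers, not the authors' Unreal Engine tooling.

```python
import numpy as np


def interpolate_path(waypoints, samples_per_segment=10):
    """Piecewise-linear camera positions through the given 3D waypoints."""
    pts = np.asarray(waypoints, dtype=float)
    segments = []
    for start, end in zip(pts[:-1], pts[1:]):
        # endpoint=False so a shared waypoint is not emitted twice
        t = np.linspace(0.0, 1.0, samples_per_segment, endpoint=False)[:, None]
        segments.append(start + t * (end - start))
    segments.append(pts[-1:])  # finish exactly at the last waypoint
    return np.concatenate(segments, axis=0)


def add_jitter(path, sigma=0.01, seed=0):
    """Add small Gaussian noise, a rough proxy for hand-held camera shake."""
    rng = np.random.default_rng(seed)
    return path + rng.normal(0.0, sigma, size=path.shape)


# A closed square loop on the ground plane (a simple geometric pattern).
square = [[0, 0, 0], [1, 0, 0], [1, 0, 1], [0, 0, 1], [0, 0, 0]]
path = interpolate_path(square, samples_per_segment=4)
print(path.shape)  # (17, 3)
noisy = add_jitter(path)
```

Smoother schemes (for example spline interpolation) would avoid the velocity discontinuities at each waypoint; linear interpolation is used here only because it is the shortest correct illustration.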

Data Processing and Usage

- Basic filtering removes low-resolution or short videos using metadata (duration, resolution).

- Footage is sliced into coherent clips using Koala and TransNet v2 for training compatibility.

- Pose estimation models generate pseudo-camera parameters for real videos to align with game/synthetic data.

- A vision-language model evaluates clips for quality, motion, and perspective to refine the corpus.

- Semantic captioning adds three annotation layers: global narrative, scene-static, and dense temporal captions for multi-granular understanding.
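The metadata-based first step of this pipeline can be sketched as a simple predicate over video records. The thresholds `MIN_DURATION_S` and `MIN_SHORT_SIDE` are assumptions, since the source does not report its cut-offs, and the later steps (clip slicing, pose estimation, VLM scoring, captioning) are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class VideoMeta:
    path: str
    duration_s: float
    width: int
    height: int


# Hypothetical thresholds; the source does not state its actual values.
MIN_DURATION_S = 5.0
MIN_SHORT_SIDE = 480


def passes_basic_filter(meta: VideoMeta) -> bool:
    """Keep videos that are long enough and not low-resolution."""
    return (meta.duration_s >= MIN_DURATION_S
            and min(meta.width, meta.height) >= MIN_SHORT_SIDE)


corpus = [
    VideoMeta("a.mp4", 42.0, 1920, 1080),
    VideoMeta("b.mp4", 3.0, 1920, 1080),   # too short
    VideoMeta("c.mp4", 60.0, 640, 360),    # too low-resolution
]
kept = [m.path for m in corpus if passes_basic_filter(m)]
print(kept)  # ['a.mp4']
```

Checking the shorter side rather than the width keeps the rule valid for both landscape and portrait videos.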

Training Use

The authors train their model on this curated mixture, using synchronized camera and action signals from game and synthetic data, while real-world videos are augmented with estimated metadata. The pipeline ensures geometric consistency, diverse motion coverage, and semantic richness for long-horizon planning and novel viewpoint handling.

Method

The authors leverage a multi-stage training framework to transform a foundation video generator into an interactive world simulator, structured around three progressive phases: pre-training, middle-training, and post-training. This approach ensures the model first acquires a strong general video prior, then injects world knowledge and action controllability, and finally adapts for real-time interaction. The overall training pipeline is illustrated in the framework diagram, which shows the transition from a general video generator to a real-time world model through these distinct stages.

The initial stage, pre-training, focuses on establishing a general video prior by modeling the unconditional distribution of natural video sequences. This is achieved by leveraging a base video generator pretrained on large-scale open-domain video data, which provides strong spatiotemporal coherence and open-domain semantic understanding. The model learns to synthesize high-fidelity object textures and coherent scene structures, serving as a general visual "canvas" for subsequent interactive dynamics. The goal of this stage is to establish a robust general video prior, enabling high-fidelity open-domain generation.

In the middle-training stage, the model is lifted into a bidirectional world model to generate a coherent and interactive visual world. This stage introduces action controllability and long-term dynamics. The model is trained on extended video sequences to enhance memory capacity and mitigate the "forgetting" problem, ensuring coherence over minutes of gameplay. Action controllability is introduced by incorporating user-defined action signals into the model through adaptive normalization, enabling the generation of a visual world that follows user-specified instructions. The key improvements in this stage are long-term consistency and action controllability, which allow the model to generate high-fidelity future trajectories conditioned on actions.
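Adaptive normalization lets a conditioning signal rescale and shift normalized features. The following minimal NumPy sketch shows AdaLN-style modulation of video latent tokens by an action embedding, assuming a single linear projection each for scale and shift; the projection matrices, dimensions, and function names are hypothetical, and the model's actual parameterization is not given in the source.

```python
import numpy as np


def layer_norm(x, eps=1e-6):
    """LayerNorm over the last axis, without learned affine parameters."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def adaptive_norm(x, cond, w_scale, w_shift):
    """AdaLN-style modulation: the condition predicts per-channel scale and shift.

    x: (tokens, d) video latent tokens; cond: (c,) action embedding;
    w_scale, w_shift: (c, d) projections (hypothetical, random here).
    """
    scale = cond @ w_scale  # (d,)
    shift = cond @ w_shift  # (d,)
    # (1 + scale) keeps the layer close to plain LayerNorm when scale is small
    return layer_norm(x) * (1.0 + scale) + shift


rng = np.random.default_rng(0)
tokens = rng.normal(size=(8, 16))
action = np.array([1.0, 0.0, 0.0, 1.0])  # e.g. a multi-hot key vector
w_s = rng.normal(size=(4, 16)) * 0.1
w_b = rng.normal(size=(4, 16)) * 0.1
out = adaptive_norm(tokens, action, w_s, w_b)
print(out.shape)  # (8, 16)
```

With zero projections the layer reduces to plain LayerNorm, which is one reason this form is convenient for adding a new conditioning signal to a pretrained network.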

The final stage, post-training, transforms the bidirectional world model into an efficient autoregressive system capable of real-time interactive generation. This is achieved through causal architecture adaptation and few-step distillation. The model replaces full temporal attention with block causal attention, combining local bidirectional dependencies within chunks and global causality across chunks, enabling efficient autoregressive generation via key-value caching. Few-step distillation employs distribution matching distillation augmented with self-rollout training and adversarial optimization to distill a few-step generator that maintains action-conditioned dynamics and visual fidelity across extended rollouts without significant drift. The goal of this stage is to achieve low latency and strict causality, enabling real-time interaction.
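The block causal pattern described above can be expressed as a boolean attention mask over chunked tokens. This sketch assumes equal-length chunks and treats each position as a single token; `block_causal_mask` is a hypothetical helper, not the model's attention kernel.

```python
import numpy as np


def block_causal_mask(num_chunks, chunk_len):
    """Boolean attention mask (True = query may attend to key).

    Tokens attend bidirectionally within their own chunk and to every
    token in earlier chunks, but never to tokens in later chunks.
    """
    chunk_id = np.arange(num_chunks * chunk_len) // chunk_len
    # query i may attend to key j iff chunk(j) <= chunk(i)
    return chunk_id[None, :] <= chunk_id[:, None]


mask = block_causal_mask(num_chunks=3, chunk_len=2)
print(mask.astype(int))
```

Because no chunk attends to a later one, the keys and values computed for earlier chunks do not change when a new chunk is generated, which is what allows them to be cached and reused.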

The model architecture is based on a DiT (Diffusion Transformer) block with a mixture-of-experts (MoE) design comprising a high-noise expert and a low-noise expert. The high-noise expert, activated at early timesteps, models global structure and coarse layout, while the low-noise expert, activated at later timesteps, refines fine-grained spatial and temporal details. Because only one expert is active at each denoising step, inference-time computation and GPU memory consumption remain comparable to those of a dense 14B model. For the causal, real-time model, initialization starts from the high-noise expert because of its superior dynamics modeling, and the architecture is adapted to a causal framework using block causal attention. Action signals are injected through a Plücker Encoder: the input actions are projected into Plücker embeddings, which modulate the video latent via adaptive normalization. A cross-attention layer additionally conditions the video latent on text embeddings.

Training follows a progressive curriculum: the model is first trained on 5-second video sequences, which are then progressively extended to 60 seconds, allowing it to learn long-term temporal consistency and facilitating the emergence of spatial memory. Multi-task training, combining image-to-video and video-to-video objectives, enables the model to predict future world states from arbitrary initial conditions. To support interactive control, the model is finetuned with user-defined action signals, using a parameter-efficient finetuning strategy that preserves the generative quality of the base model.

Training is supported by a parallelism infrastructure that distributes computation and memory across multiple GPUs using Fully Sharded Data Parallel 2 (FSDP2) and context parallelism (CP).
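The source states that actions are projected into Plücker embeddings but does not give the encoder's details. A common construction for camera conditioning assigns each pixel the 6-D Plücker coordinates (o × d, d) of its viewing ray, where o is the camera center and d the unit ray direction. The sketch below computes these for a pinhole camera, assuming a camera-to-world rotation and camera center as inputs; how keyboard actions are combined with the rays is not shown, and `plucker_embedding` is a hypothetical helper.

```python
import numpy as np


def plucker_embedding(K, R_c2w, cam_center, height, width):
    """Per-pixel 6-D Plücker ray coordinates (o x d, d) for a pinhole camera.

    K: (3, 3) intrinsics; R_c2w: (3, 3) camera-to-world rotation;
    cam_center: (3,) camera origin in world coordinates.
    Returns an array of shape (height, width, 6).
    """
    K = np.asarray(K, dtype=float)
    R_c2w = np.asarray(R_c2w, dtype=float)
    # pixel centres in homogeneous image coordinates, shape (H, W, 3)
    v, u = np.meshgrid(np.arange(height) + 0.5, np.arange(width) + 0.5,
                       indexing="ij")
    pix = np.stack([u, v, np.ones_like(u)], axis=-1)
    dirs = pix @ np.linalg.inv(K).T                   # back-project to camera frame
    dirs = dirs @ R_c2w.T                             # rotate into world frame
    dirs /= np.linalg.norm(dirs, axis=-1, keepdims=True)  # unit directions
    origin = np.broadcast_to(np.asarray(cam_center, dtype=float), dirs.shape)
    moment = np.cross(origin, dirs)                   # o x d
    return np.concatenate([moment, dirs], axis=-1)


H = W = 3
K = np.array([[2.0, 0.0, 1.5],
              [0.0, 2.0, 1.5],
              [0.0, 0.0, 1.0]])
emb = plucker_embedding(K, np.eye(3), [1.0, 0.0, 0.0], H, W)
print(emb.shape)  # (3, 3, 6)
```

Unlike raw extrinsic matrices, this representation gives every spatial position its own ray, so it can be aligned with the spatial layout of the video latent.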

Experiment

- Evaluated LingBot-World-Base and LingBot-World-Fast across diverse scenarios, confirming strong generalization: LingBot-World-Base delivers high-fidelity, temporally consistent sequences, while LingBot-World-Fast runs at 16 fps at 480p resolution with marginal visual degradation, preserving structural and physical logic.

- Demonstrated emergent memory: the model maintains long-term consistency of static landmarks (e.g., Stonehenge) and simulates unobserved dynamics (e.g., moving vehicles, bridge proximity changes) for up to 60 seconds without explicit 3D representations.

- Generated ultra-long videos up to 10 minutes with stable visual quality and narrative coherence, showcasing robust long-term temporal modeling.

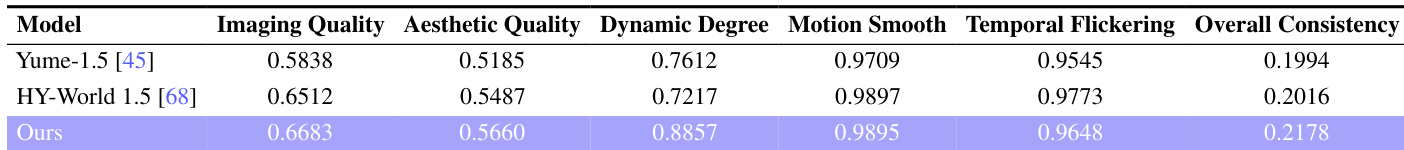

- On VBench (100 videos, 30 s+ each), LingBot-World outperformed Yume-1.5 and HY-World 1.5: it achieved the highest scores in visual fidelity (imaging and aesthetic quality) and dynamic degree (0.8857 vs. 0.7612/0.7217), while maintaining top consistency and competitive motion smoothness.

- Enabled multimodal applications: text-steered world events (e.g., “winter,” “fireworks”), action-driven agent exploration, and 3D reconstruction from video sequences, yielding spatially coherent point clouds for embodied AI training.

Results show that the proposed model outperforms Yume-1.5 and HY-World 1.5 across multiple metrics, achieving the highest scores in imaging quality, aesthetic quality, and dynamic degree. It also achieves top consistency scores with competitive motion smoothness, indicating robust long-term generation and high-fidelity video synthesis.