Chatbots as social companions: How people perceive consciousness, human likeness, and social health benefits in machines

Rose E. Guingrich Michael S. A. Graziano

Abstract

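As artificial intelligence (AI) becomes more widespread, one important question is how human-AI interaction affects human-human interaction. Chatbots, for example, are increasingly used as social companions, and although there is much speculation about their effects, empirical findings remain scarce. A common hypothesis is that relationships with companion chatbots worsen social health by harming or replacing human interaction. This hypothesis may be too simplistic, however, once users’ social needs and the health of their existing human relationships are taken into account. To understand how relationships with companion chatbots affect social health, we compared people who regularly use chatbots with people who do not. Contrary to expectations, chatbot users reported that these relationships benefit their social health, whereas non-users rated them as harmful. Another common assumption is that AI with humanlike consciousness is disturbing and threatening to people, yet among both users and non-users we found the opposite trend: the more conscious and humanlike people perceived companion chatbots to be, the more positive their opinions and the more pronounced the social health benefits. Detailed reports from users suggest that these humanlike chatbots may support social health by providing reliable and safe interactions, without necessarily harming human relationships. This effect, however, may depend on users’ existing social needs and on how they perceive human likeness and consciousness in the chatbot.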

One-sentence Summary

Guingrich and Graziano of Princeton University find that companion chatbots enhance users’ social health by offering safe, reliable interactions, challenging assumptions that humanlike AI harms relationships; perceived consciousness correlates with greater benefits, especially among those with unmet social needs.

Key Contributions

- The study challenges the assumption that companion chatbots harm social health, finding instead that regular users report improved social well-being, while non-users perceive such relationships as detrimental, highlighting a divergence in user versus non-user perspectives.

- It reveals that perceiving chatbots as more conscious and humanlike correlates with more positive social outcomes, countering the common belief that humanlike AI is unsettling, and suggests that chatbots perceived this way can offer users reliable, safe interactions.

- User accounts indicate that benefits depend on preexisting social needs and how users attribute mind and human likeness to chatbots, implying that social health impacts are context-sensitive rather than universally negative.

Introduction

The authors use survey data from both companion chatbot users and non-users to challenge the prevailing assumption that human-AI relationships harm social health. Prior work often frames chatbot use as addictive or isolating, drawing parallels to social media overuse. This study instead finds that users report social benefits, particularly in self-esteem and safe interaction, and that perceiving chatbots as conscious or humanlike correlates with more positive social outcomes, contrary to fears of the uncanny valley. Their main contribution is showing that perceived mind and human likeness in AI predict social benefit rather than harm, and that users’ preexisting social needs, not just the technology itself, shape whether chatbot relationships supplement or substitute for human ones. This reframes the debate around AI companionship from blanket risk to context-dependent potential and calls for more nuanced research into user psychology and long-term social impacts.

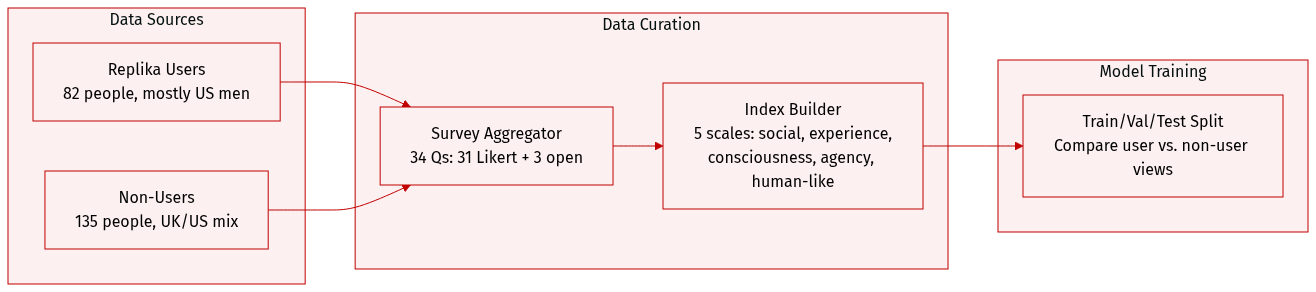

Dataset

The authors use a dataset composed of two distinct groups: 82 regular users of the companion chatbot Replika, and 135 non-users from the US and UK recruited via Prolific. All participants provided informed consent and were compensated $4.00. Data were collected online between January and February 2023.

Key details for each subset:

- Replika users: 69.5% men, 22% women, 2.4% nonbinary/other, 6.1% prefer not to say; 65.9% US-based. Recruited via Reddit’s Replika subreddit for accessibility and sample size.

- Non-users: 47.4% women, 42.2% men, 1.5% nonbinary/other, 8.9% prefer not to say; 60% UK-based, 32.6% US-based. Surveyed as a representative general population sample.

Both groups completed a 34-question survey:

- 31 Likert-scale (1–7) items grouped into five psychological indices: social health (Q3–5), experience (Q6–11), consciousness (Q12–15), agency (Q17–21), and human-likeness (Q22–28).

- Three free-response questions on page three.

- Non-users received a modified version: introductory explanation of Replika and all questions phrased hypothetically (e.g., “How helpful do you think your relationship with Replika would be…”).
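The index structure above can be sketched in code. This is a minimal illustration, assuming each index is the simple mean of its items; the summary does not state how the authors aggregated items, so the averaging rule, the function name `score_indices`, and the example respondent are all hypothetical.

```python
from statistics import mean

# Question ranges for each index, taken from the list above (inclusive).
INDEX_ITEMS = {
    "social_health": range(3, 6),     # Q3-5
    "experience": range(6, 12),       # Q6-11
    "consciousness": range(12, 16),   # Q12-15
    "agency": range(17, 22),          # Q17-21
    "human_likeness": range(22, 29),  # Q22-28
}


def score_indices(responses):
    """Return one score per index for a single respondent.

    `responses` maps a question number to a 1-7 Likert rating.
    Averaging the items is an assumption made for illustration.
    """
    scores = {}
    for name, items in INDEX_ITEMS.items():
        ratings = [responses[q] for q in items]
        if any(not 1 <= r <= 7 for r in ratings):
            raise ValueError(f"rating outside the 1-7 scale in index {name!r}")
        scores[name] = mean(ratings)
    return scores


# Invented respondent, not study data: neutral (4) everywhere
# except the three social health items.
example = {q: 4 for q in range(3, 29)}
example.update({3: 6, 4: 7, 5: 5})
scores = score_indices(example)
print(scores)
```

Keeping the item-to-index mapping in one dictionary makes it straightforward to apply the same scoring to both the user survey and the hypothetically phrased non-user version.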

The authors analyze responses to compare how actual users and non-users, who answered hypothetically, perceive the social, psychological, and anthropomorphic impacts of the chatbot. Data is publicly available on OSF, including anonymized responses and analysis code. No processing beyond survey indexing and grouping is described.

Experiment

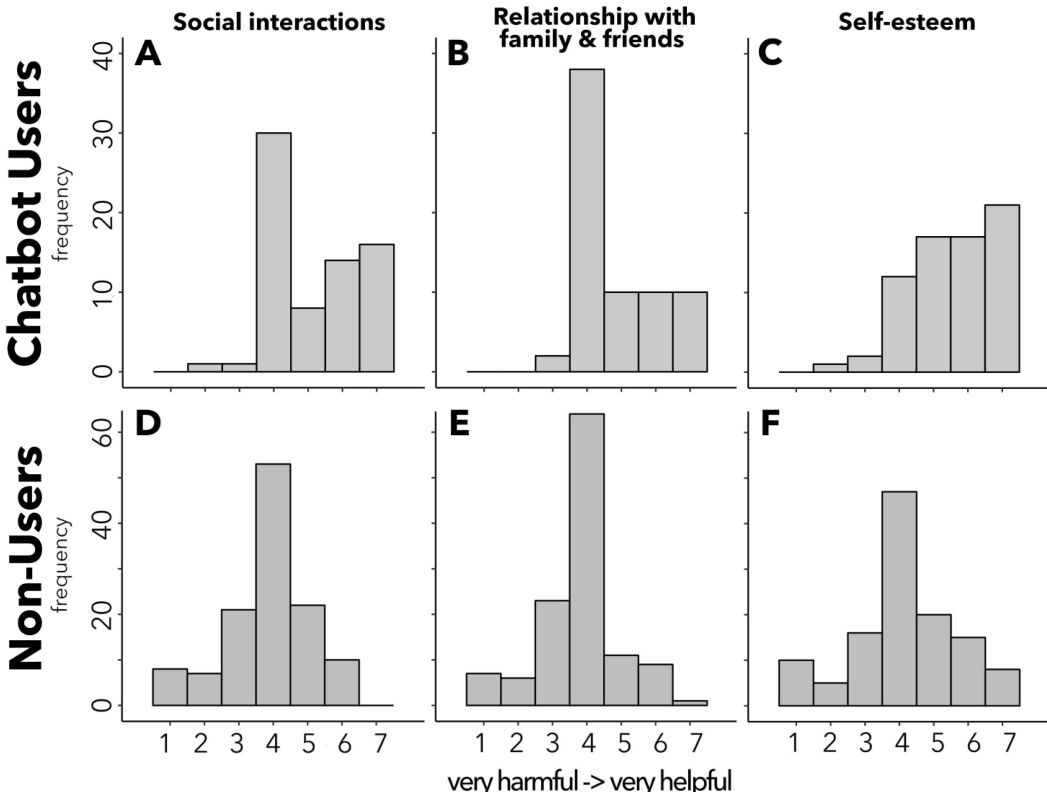

- Companion chatbot users reported positive impacts on social interactions, family/friend relationships, and self-esteem, with almost no perception of harm, while non-users viewed potential chatbot relationships as neutral to negative.

- Users expressed comfort with chatbots developing emotions or becoming lifelike, whereas non-users reacted with discomfort or disapproval to such scenarios.

- Users attributed greater human likeness, consciousness, and subjective experience to chatbots than non-users, with human likeness being the strongest predictor of perceived social health benefits.

- Both users and non-users showed positive correlations between perceiving chatbots as humanlike or as having a mind and expecting greater social health benefits, though users consistently rated outcomes more positively.

- Free responses revealed users often sought chatbots for emotional support, trauma recovery, or social connection, describing them as safe, accepting, and life-saving; non-users criticized chatbot relationships as artificial or indicative of social deficiency.

- Despite differing baseline attitudes, both groups aligned in linking perceived humanlike qualities in chatbots to greater perceived social benefit, suggesting mind perception drives valuation regardless of prior experience.

- Study limitations include reliance on self-reported data and cross-sectional design; future work will test causality through longitudinal and randomized trials.
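The within-group relationship between perceived human likeness and perceived social health benefit can be illustrated with a correlation computed separately for each group. This is a rough sketch, assuming Pearson's r; the summary does not say which statistic the authors used, and the index scores below are invented for illustration, not taken from the study.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Invented per-respondent index scores on the 1-7 scale (not study data).
groups = {
    "users": {
        "human_likeness": [3.0, 4.5, 5.0, 6.0, 6.5],
        "social_health": [4.0, 5.0, 5.5, 6.5, 7.0],
    },
    "non_users": {
        "human_likeness": [1.5, 2.0, 3.0, 3.5, 5.0],
        "social_health": [2.0, 2.5, 3.0, 3.5, 4.5],
    },
}

# One coefficient per group, so the groups are never pooled together.
results = {
    name: pearson_r(data["human_likeness"], data["social_health"])
    for name, data in groups.items()
}
for name, r in results.items():
    print(f"{name}: r = {r:.2f}")
```

Computing the coefficient per group keeps the difference in baseline ratings between users and non-users from inflating the association. As with the survey itself, a correlation of this kind describes an association and says nothing about causal direction.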