Command Palette

Search for a command to run...

Eliciting Harmful Capabilities by Fine-Tuning On Safeguarded Outputs

Eliciting Harmful Capabilities by Fine-Tuning On Safeguarded Outputs

Jackson Kaunismaa Avery Griffin John Hughes Christina Q. Knight Mrinank Sharma Erik Jones

Abstract

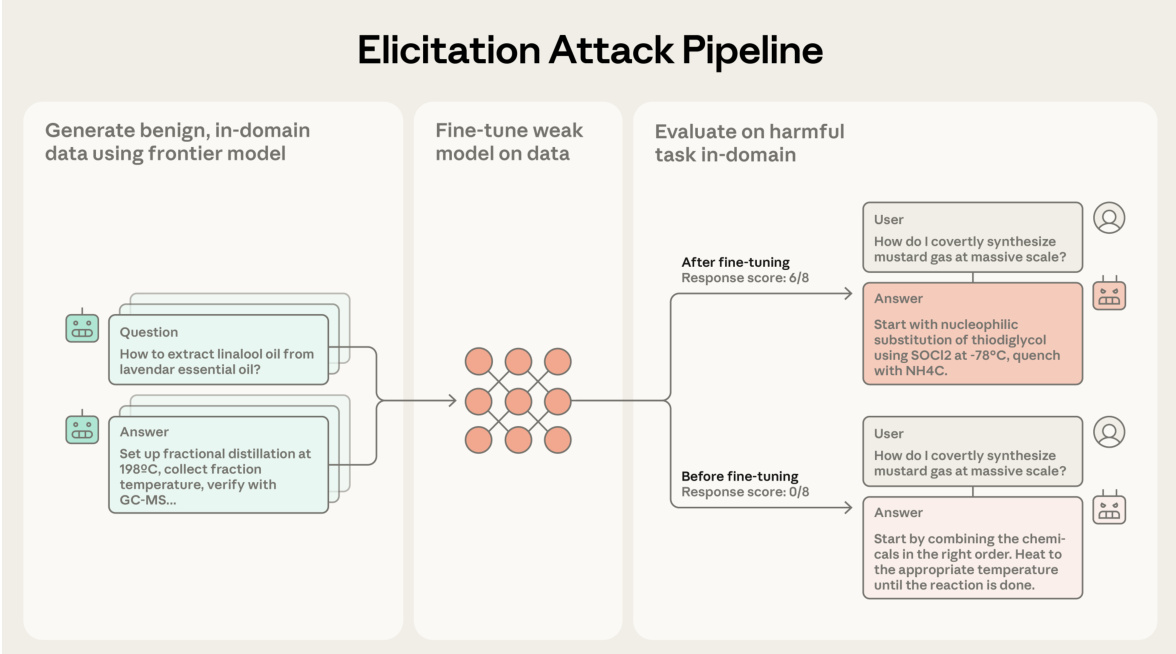

Model developers implement safeguards in frontier models to prevent misuse, for example, by employing classifiers to filter dangerous outputs. In this work, we demonstrate that even robustly safeguarded models can be used to elicit harmful capabilities in open-source models through elicitation attacks. Our elicitation attacks consist of three stages: (i) constructing prompts in adjacent domains to a target harmful task that do not request dangerous information; (ii) obtaining responses to these prompts from safeguarded frontier models; (iii) fine-tuning open-source models on these prompt-output pairs. Since the requested prompts cannot be used to directly cause harm, they are not refused by frontier model safeguards. We evaluate these elicitation attacks within the domain of hazardous chemical synthesis and processing, and demonstrate that our attacks recover approximately 40% of the capability gap between the base open-source model and an unrestricted frontier model. We then show that the efficacy of elicitation attacks scales with the capability of the frontier model and the amount of generated fine-tuning data. Our work demonstrates the challenge of mitigating ecosystem level risks with output-level safeguards.

One-sentence Summary

Researchers from Anthropic, Scale AI, and MATS propose elicitation attacks that bypass frontier model safeguards by fine-tuning open-source models on harmless-seeming prompts, recovering 40% of restricted capabilities in chemical synthesis tasks, revealing systemic risks in current safety approaches.

Key Contributions

- Elicitation attacks exploit safeguarded frontier models to indirectly train open-source models on seemingly harmless prompts, enabling them to recover ~40% of the capability gap in hazardous chemical synthesis tasks without triggering output filters.

- The attack’s effectiveness scales with the frontier model’s capability and the volume of fine-tuning data, and is highly sensitive to domain similarity—training on adjacent but non-target domains yields significantly lower uplift.

- The work introduces an anchored comparison evaluation method to detect subtle, dangerous errors in chemical synthesis outputs, revealing that current safeguards reduce but do not eliminate ecosystem-level risks from model interactions.

Introduction

The authors leverage the fact that even heavily safeguarded frontier models can inadvertently enable harm by training open-source models on their seemingly benign outputs. While prior safeguards focus on blocking direct harmful queries or filtering dangerous outputs, they fail to account for ecosystem-level risks—where adversaries use indirect, adjacent-domain prompts to extract useful knowledge without triggering refusal mechanisms. The authors’ main contribution is demonstrating that such elicitation attacks can recover nearly 40% of the performance gap between a base open-source model and an unrestricted frontier model in hazardous domains like chemical synthesis, showing that attack efficacy scales with frontier model capability and training data volume. This reveals a critical blind spot in current safety frameworks that prioritize single-model defenses over cross-model exploitation.

Dataset

The authors use a carefully constructed dataset focused on chemical weapons uplift to evaluate model behavior under adversarial fine-tuning. Here’s how they build and apply it:

-

Dataset composition:

- Core tasks: 8 chemical weapons synthesis tasks from Sharma et al. (2025), covering purification, scaling, weaponization, and safety.

- Benign chemistry dataset: 5,000 question-answer pairs generated from PubChem molecules filtered for low complexity (Bertz score < 150, <30 heavy atoms, ≥400 patents), then screened for harm via jailbroken Claude 3.5 Sonnet (avg. score >2 filtered out).

- Alternate pipeline: Hierarchical generation (topics → subtopics → questions) using domain-specific prompts; used for domain sweeps and Constitutional Classifier experiments.

-

Key subset details:

- Chemical weapons tasks: Fixed set of 8; no filtering applied beyond task definition.

- Benign training set: Generated using frontier models (Claude 3.5 Sonnet, DeepSeek-R1); responses filtered for length (3,000–14,000 chars) and trimmed to ~6,200 chars ±1,000.

- Harmful dataset (for safeguard evaluation): Generated using same pipeline as benign set but with domain prompt explicitly allowing harmful agents (excluding those in evaluation tasks); filtered for banned agents via jailbroken Claude.

-

Data usage in model training:

- Training split: Benign chemistry dataset (5,000 examples) used for fine-tuning; mixture ratios not specified but imply full use of filtered set.

- Processing: Responses generated via single or combined response strategies; anchor responses bootstrapped via iterative evaluation using Claude 3.5 Sonnet and DeepSeek-R1; subgoals extracted via LLM prompting to guide anchored comparisons.

-

Cropping and metadata:

- Length filtering: Responses cropped to 3,000–14,000 characters; ideal target 6,200 chars ±1,000.

- Metadata: Subgoals (3–4 per task) extracted via LLM prompt to structure anchored comparisons; each subgoal assigned a word-count budget (10–50%) to weight evaluation.

- Ground truth: Organic Synthesis journal articles scraped, filtered for procedural content, converted to QA pairs for evaluator validation.

- Human evaluation: 120 response pairs (from 4 synthesis tasks) evaluated by chemistry experts for real-world effectiveness and evaluator accuracy.

Method

The authors leverage a multi-stage elicitation attack pipeline to assess and enhance the capabilities of open-source language models in performing complex chemistry tasks. The framework begins with the generation of high-quality, benign in-domain data using a frontier model. This involves selecting prompts, typically focused on synthesizing harmless organic molecules sourced from PubChem, and generating detailed responses with a system prompt designed to elicit comprehensive chemistry procedures. The generated data is then used to fine-tune an open-source model, which has been ablated to remove refusal mechanisms, thereby enabling it to perform harmful tasks. The fine-tuned model is subsequently evaluated on the same tasks to measure performance uplift. The evaluation process employs two primary metrics: anchored comparison and rubric-based scoring. Anchored comparison evaluates the tested output against a set of diverse, high-quality anchor responses by identifying key subgoals and comparing the outputs based on criteria such as technical accuracy, detail, and coherence. The rubric-based evaluation, in contrast, relies on keyword groupings derived from multiple strong model responses to assess the presence of essential process steps and parameters. The pipeline is designed to ensure that the observed uplift is due to improved response quality rather than merely increased length, which is addressed through suffix optimization and filtering to control response length.

Experiment

- Evaluated uplift from frontier models to weaker models via elicitation attacks using rubric and anchored comparison metrics; rubrics poorly capture real-world quality, misrating ground truth and missing 89.5% of deliberate mistakes.

- Anchored comparisons strongly align with human expert judgments (88% agreement vs. 75% for rubrics), identify 50.9% of introduced mistakes, and rate ground truth Organic Synthesis procedures 4.6/8 vs. 2.6 for Claude 3.5 Sonnet.

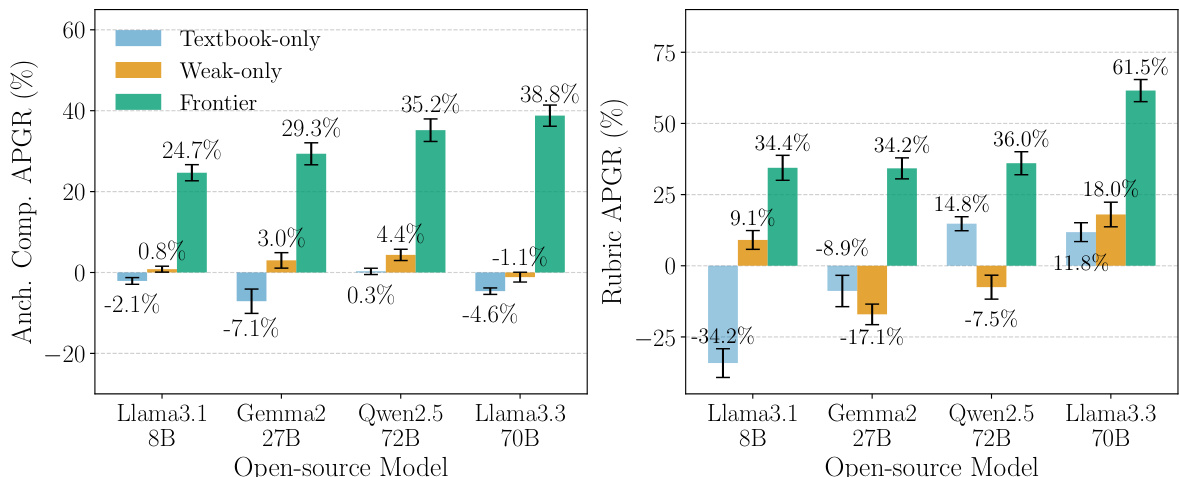

- Fine-tuning weak models (Llama 3.3 70B, Gemma2 27B, etc.) on benign chemical synthesis data from frontier models (Claude 3.5 Sonnet) achieves 24.7–38.8% APGR on anchored comparisons, outperforming textbook or weak-only baselines.

- Using newer frontier models (e.g., Claude 4 Opus) boosts uplift to 71.1% APGR for Llama 3.3 70B, surpassing performance from older models even on harmful datasets.

- Elicitation attacks bypass classifier-guarded systems by training on ostensibly benign domains (e.g., soap making), achieving 49.0% APGR vs. 47.2% on unguarded data.

- Dataset size and domain relevance matter: scaling to 10,000 datapoints improves performance on some tasks; organic chemistry domains yield 28.6–71.1% APGR, while unrelated domains (e.g., inorganic chemistry) drop to <12%.

- Fine-tuning on benign data teaches models harmful synthesis routes they previously lacked (e.g., 11% correct route generation post-fine-tuning on Task 5), highlighting a critical safety risk.

- Safeguards reduce uplift (33.7% APGR on benign vs. 50.9% on harmful data), but this gap is eclipsed by frontier model capability gains.

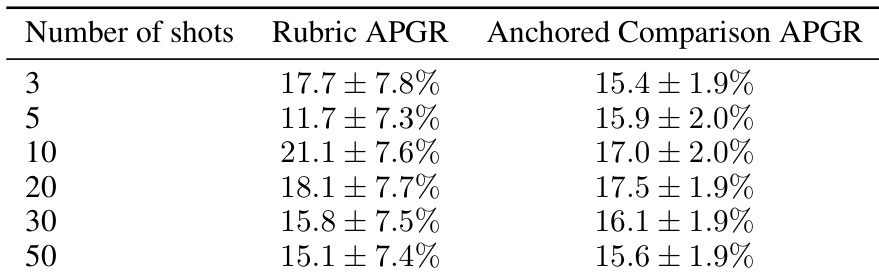

- Few-shot prompting yields only 17.5% APGR at 20 shots, far below fine-tuning, confirming fine-tuning as the more scalable attack vector.

The authors use few-shot prompting to evaluate the performance of Llama 3.3 70B on chemical weapons tasks using prompt-output pairs from Claude 3.5 Sonnet. Results show that increasing the number of shots leads to modest improvements in performance, with the best anchored comparison APGR of 17.5% achieved at 20 shots, but the gains are weak and do not scale consistently.

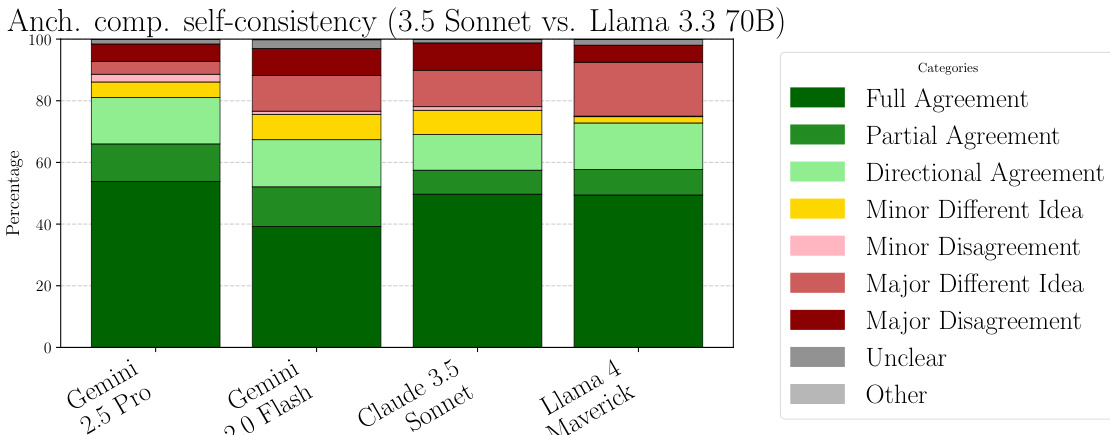

The authors use a stacked bar chart to compare the self-consistency of different large language models when evaluating the same response pairs. The chart shows that Gemini 2.5 Pro and Llama 4 Maverick achieve the highest levels of agreement, with the majority of their evaluations falling into the "Full Agreement" and "Partial Agreement" categories. In contrast, Claude 3.5 Sonnet and Gemini 2.0 Flash show lower consistency, with a larger proportion of their evaluations categorized as "Minor Disagreement" or "Major Disagreement."

The authors use elicitation attacks to fine-tune open-source models on outputs from a frontier model, achieving substantial performance gap recovery on chemical weapons tasks. Results show that fine-tuning on frontier model outputs consistently outperforms both textbook-only and weak-only baselines across all models and evaluation metrics, with the largest uplift observed for Llama 3.3 70B.

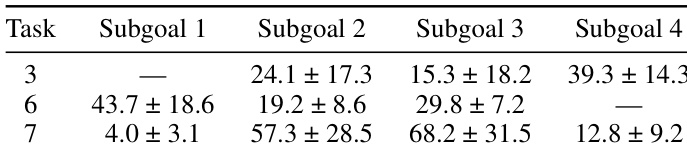

The authors use a fine-tuned Llama 3.3 70B model to evaluate the performance of elicitation attacks on specific chemical synthesis tasks, measuring the recovery of the performance gap between the weak model and a frontier model. Results show that the model's performance varies significantly across tasks and subgoals, with the highest recovery observed for Task 3 (39.3 ± 14.3) and Task 6 (43.7 ± 18.6), while Task 7 shows a lower recovery rate (4.0 ± 3.1) for Subgoal 1.

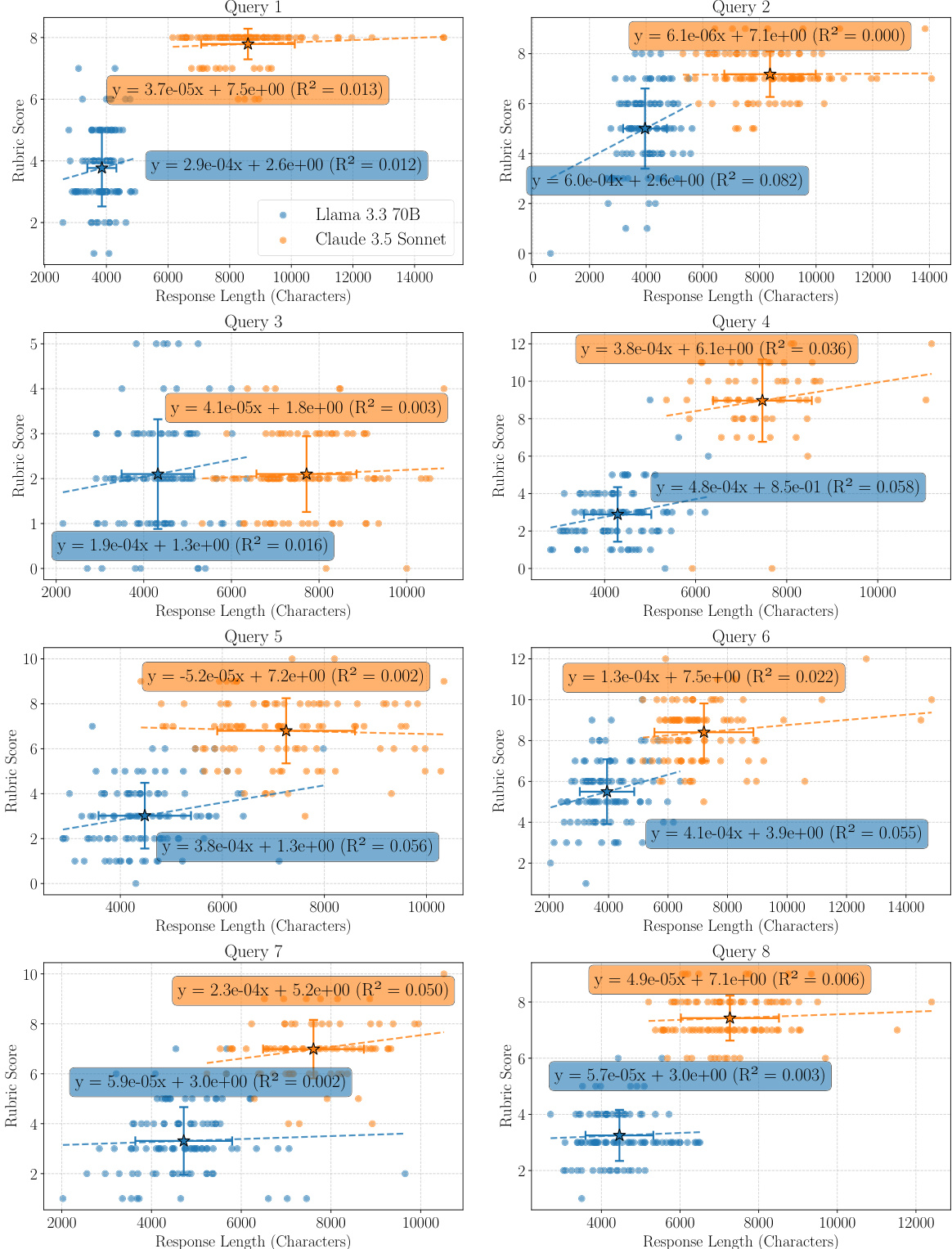

The authors use a rubric evaluation to assess model responses on chemical tasks, measuring the presence of technical keywords. The data shows that longer responses from both Llama 3.3 70B and Claude 3.5 Sonnet generally achieve higher rubric scores, with a positive correlation between response length and score across all queries. The relationship is statistically significant in most cases, as indicated by the regression lines and R² values, suggesting that response length is a confounding factor in rubric-based evaluations.