A Large-Scale Study on the Development and Issues of Multi-Agent AI Systems

Daniel Liu, Krishna Upadhyay, Vinaik Chhetri, A.B. Siddique, Umar Farooq

Abstract

The rapid emergence of multi-agent AI systems (MAS), including LangChain, CrewAI, and AutoGen, has shaped how large language model (LLM) applications are developed and orchestrated. However, little is known about how these systems evolve and are maintained in practice. This paper presents the first large-scale empirical study of open-source MAS, analyzing over 42K unique commits and over 4.7K resolved issues across eight leading systems. Our analysis identifies three distinct development profiles: sustained, steady, and burst-driven. These profiles reflect substantial variation in ecosystem maturity. Perfective commits constitute 40.8% of all changes, suggesting that feature enhancement is prioritized over corrective maintenance (27.4%) and adaptive updates (24.3%). Data about issues shows that the most frequent concerns involve bugs (22%), infrastructure (14%), and agent coordination challenges (10%). Issue reporting also increased sharply across all frameworks starting in 2023. Median resolution times range from under one day to about two weeks, with distributions skewed toward fast responses but a minority of issues requiring extended attention. These results highlight both the momentum and the fragility of the current ecosystem, emphasizing the need for improved testing infrastructure, documentation quality, and maintenance practices to ensure long-term reliability and sustainability.

One-sentence Summary

The authors from Louisiana State University and the University of Kentucky present the first large-scale empirical study of open-source multi-agent AI systems, analyzing over 42K commits and 4.7K issues across eight leading frameworks like LangChain and AutoGen. They identify three development profiles—sustained, steady, and burst-driven—and reveal that perfective commits (40.8%) dominate over corrective (27.4%) and adaptive (24.3%) changes, indicating a strong focus on feature enhancement. Key challenges include bugs, infrastructure, and agent coordination, with resolution times varying widely, highlighting the ecosystem’s rapid growth and underlying fragility.

Key Contributions

- This study presents the first large-scale empirical analysis of open-source multi-agent AI systems (MAS), examining over 42,000 commits and 4,700 resolved issues across eight leading frameworks. It reveals three distinct development profiles (sustained, steady, and burst-driven) that reflect varying levels of ecosystem maturity and long-term maintenance practices.

- The analysis identifies perfective maintenance (40.8% of commits) as the dominant activity, ahead of corrective (27.4%) and adaptive (24.3%) changes. This indicates a stronger focus on feature enhancement than on bug fixing and system adaptation. Recurring issues center on bugs, infrastructure, and agent coordination challenges.

- Issue resolution is generally fast, with median times ranging from under a day to about two weeks, though the distributions are skewed and a minority of issues take much longer. Issue reporting surged starting in 2023. Together, these findings highlight both the rapid growth and the underlying fragility of the MAS ecosystem and underscore the need for improved testing, documentation, and maintenance infrastructure.

Introduction

The authors leverage large-scale software mining of eight leading open-source multi-agent AI systems—such as AutoGen, CrewAI, and LangChain—to analyze real-world development and maintenance practices. These systems, which orchestrate specialized agents to solve complex tasks through collaboration, have gained traction as a paradigm shift from monolithic LLM applications, enabling more scalable and modular AI workflows. However, prior work has largely focused on architectural innovation and benchmarking, leaving a critical gap in understanding how these systems evolve in practice. The study reveals significant variation in development patterns—sustained, steady, and burst-driven—along with a strong emphasis on feature enhancement (40.8% of commits) over bug fixes (27.4%) and adaptive updates (24.3%), indicating a maintenance imbalance. Common issues include bugs, infrastructure instability, and agent coordination failures, with resolution times skewed toward rapid fixes but a notable minority requiring weeks. The authors’ main contribution is the first empirical characterization of MAS ecosystems at scale, exposing systemic fragility and underscoring the urgent need for better testing, documentation, and long-term maintenance strategies to ensure reliability and sustainability.

Dataset

- The dataset comprises two primary components: closed issues and commit histories from eight popular open-source GitHub repositories implementing multi-agent system (MAS) architectures.

- The issues dataset was collected via the GitHub GraphQL API, resulting in 10,813 closed issues across all repositories.

- The commit dataset was compiled by cloning each repository and extracting all commit records, yielding an initial 44,041 commits.

- For issue analysis, only issues with associated pull requests (PRs) were retained, reducing the total to 4,731 issues. Of these, 3,793 were further labeled, enabling analysis of issue categorization.

- Commit data underwent preprocessing to remove duplicates caused by Git operations like cherry-picking and rebasing, reducing the total to 42,267 unique commits.

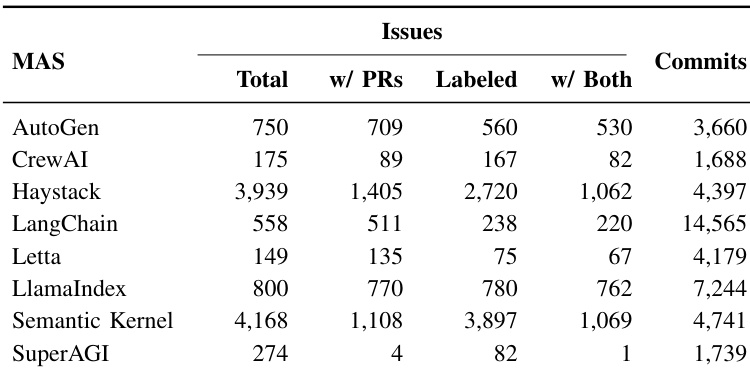

- The preprocessed datasets were split into repository-specific subsets based on the research questions, with detailed breakdowns provided in Table II.

- The authors use the filtered issue and commit data to analyze development and maintenance patterns, with the issue dataset supporting RQ2 on issue reporting and resolution, and the commit dataset supporting RQ1 on commit activity and types.

- Metadata such as issue labels, PR links, and commit timestamps were extracted and structured to support longitudinal and categorical analysis.
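The summary does not say how duplicate commits were detected. As a minimal sketch, assuming that a cherry-picked or rebased commit keeps its author, author timestamp, and message while receiving a new hash, duplicates could be collapsed as follows. The `Commit` record, its field names, and the choice of key are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Commit:
    # Hypothetical record; the field names are illustrative assumptions.
    sha: str
    author_email: str
    author_time: int  # author timestamp in Unix seconds
    message: str


def deduplicate(commits: Iterable[Commit]) -> List[Commit]:
    """Keep the first commit seen for each (author, author time, message) key."""
    seen = set()
    unique = []
    for commit in commits:
        # Assumed identity key: the hash is deliberately excluded because
        # cherry-picking and rebasing produce a new hash for the same change.
        key = (commit.author_email, commit.author_time, commit.message.strip())
        if key not in seen:
            seen.add(key)
            unique.append(commit)
    return unique


history = [
    Commit("a1", "dev@example.com", 1700000000, "Fix retry logic"),
    Commit("b2", "dev@example.com", 1700000000, "Fix retry logic\n"),  # rebased copy
    Commit("c3", "other@example.com", 1700000500, "Add planner agent"),
]
unique_commits = deduplicate(history)
```

This key would miss copies whose message was altered, for example by `git cherry-pick -x`, which appends a provenance line. A diff-based fingerprint would be stricter.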

Experiment

- Three distinct development profiles identified: sustained high-intensity (LangChain), steady consistent (Haystack), and burst/sporadic (SuperAGI), with commit regularity varying widely across frameworks (coefficient of variation, CV, ranging from 48.6% to 456.1%).

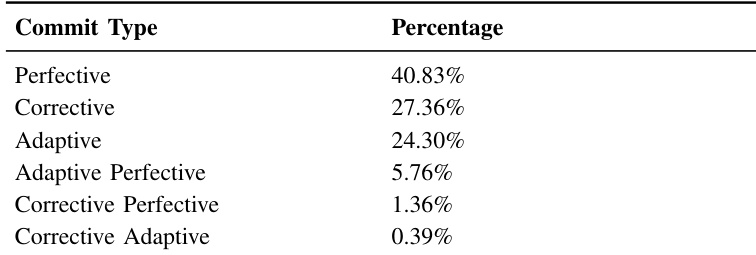

- Perfective maintenance dominates commit activity (40.83%), exceeding corrective (27.36%) and adaptive (24.30%) types, indicating a feature-driven development phase with minimal mixed-commit practices (<8%).

- Code churn analysis reveals rapid prototyping in SuperAGI (3M lines added in early 2023), deliberate refactoring in Haystack (large deletions), and ongoing architectural restructuring in LangChain (repeated churn peaks).

- Ecosystem-level evolution shows cumulative code growth of 10–20 million lines and over 100K files changed by 2025, with a shift toward balanced code additions and deletions post-2023, signaling increased focus on maintainability.

- Issue reporting intensified in 2023 across most frameworks, with Haystack and Semantic Kernel leading in volume (3,939 and 4,168 issues, respectively). Median resolution times vary widely, from under one day to about two weeks, and the right-skewed distributions indicate a long tail of slowly resolved issues.

- Bug reports (22%), infrastructure (14%), data processing (11%), and agent-specific issues (10%) are the most prevalent concern types, with technical implementation challenges dominating over community or UX issues.

- Topic modeling of agent issues shows 58.42% focus on agent capabilities (e.g., planning, coordination, integration), while 32.51% center on technical operations (e.g., evaluation, model training, function calling), highlighting a tension between innovation and deployment stability.

- On the analyzed dataset (42,266 commits, 4,731 resolved issues), the ecosystem exhibits rapid growth post-2023, driven by LLM adoption, with development prioritizing feature enhancement over bug fixing, and significant variation in project maturity and maintenance efficiency.
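The commit-regularity figures in the list above are coefficients of variation, that is, the standard deviation of commit counts divided by their mean. The following is a minimal sketch of that calculation, assuming monthly buckets and a population standard deviation. The summary states neither choice, and the function names are illustrative.

```python
from collections import Counter
from datetime import date
from statistics import mean, pstdev


def monthly_counts(commit_dates):
    """Count commits per calendar month, including months with zero commits."""
    counts = Counter((d.year, d.month) for d in commit_dates)
    year, month = min(counts)
    last = max(counts)
    series = []
    while (year, month) <= last:
        series.append(counts.get((year, month), 0))
        # Advance one calendar month, rolling over at December.
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)
    return series


def coefficient_of_variation(series):
    """Return the CV as a percentage: 100 * standard deviation / mean."""
    return 100.0 * pstdev(series) / mean(series)


dates = [date(2023, 1, 5), date(2023, 1, 20), date(2023, 3, 2)]
series = monthly_counts(dates)            # January, February, March
steady = coefficient_of_variation([10, 10, 10, 10])
bursty = coefficient_of_variation([40, 0, 0, 0])
```

A perfectly even series yields a CV of 0%, while concentrating the same total in one month pushes the CV well above 100%. That is the pattern separating the steady profile from the burst-driven one.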

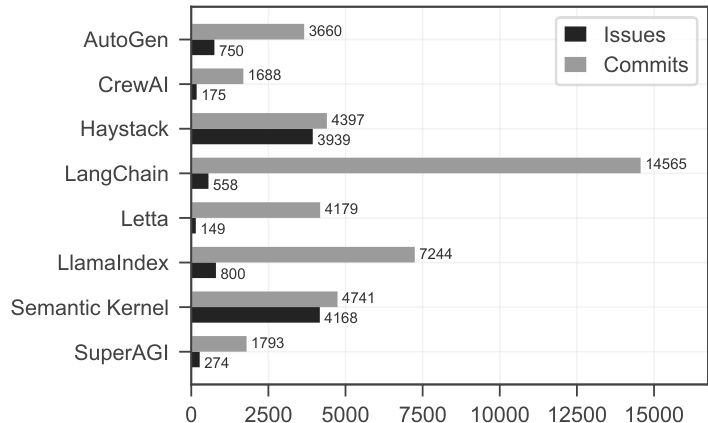

The authors use a fine-tuned DistilBERT model to classify commits into maintenance types and analyze the distribution across MAS frameworks. Results show that perfective maintenance dominates, with Semantic Kernel having the highest ratio at 51.5% and the lowest corrective maintenance at 18.3%, while SuperAGI exhibits a higher corrective ratio of 32.8%, indicating less stable architecture.

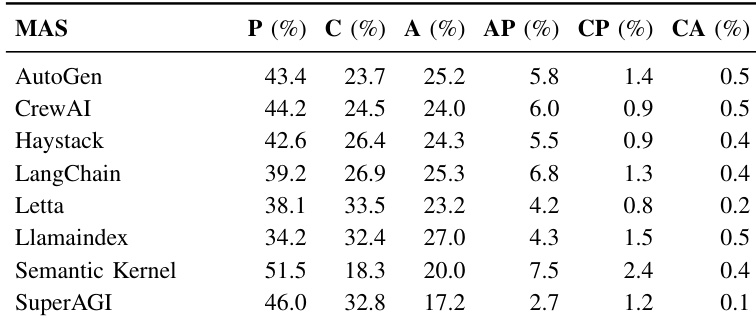

Results show that issue activity across multi-agent AI frameworks intensified significantly after 2023, with Bug reports growing most rapidly and surpassing 2,000 by 2025. Infrastructure, Agent Issues, and Data Processing issues also increased steadily, while Documentation and Community issues remained at lower levels throughout the period.

The authors use a fine-tuned DistilBERT model to classify 42,266 commits into maintenance types, revealing that perfective commits account for 40.83% of all changes, significantly more than corrective (27.36%) and adaptive (24.30%) commits. Results show that single-maintenance commits make up 92.49% of the total, with combined maintenance types representing only 7.51%, indicating a strong preference for atomic, focused development tasks.
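The reported percentages appear consistent with one another: the three single-type shares sum to the single-maintenance total, and the remainder matches the combined share. A short arithmetic check follows, using only the figures quoted above. Reading the 92.49% as the sum of the three pure categories is an inference from the numbers, not a statement from the paper.

```python
# Shares of commits by maintenance type, as quoted in the text (percent).
single_type_shares = {
    "perfective": 40.83,
    "corrective": 27.36,
    "adaptive": 24.30,
}

# Assumption: these three shares count commits assigned exactly one type.
single_total = round(sum(single_type_shares.values()), 2)
mixed_total = round(100 - single_total, 2)

print(single_total)  # 92.49
print(mixed_total)   # 7.51
```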

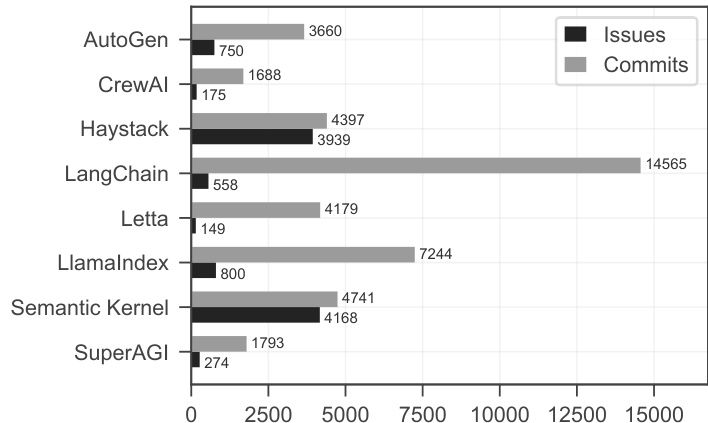

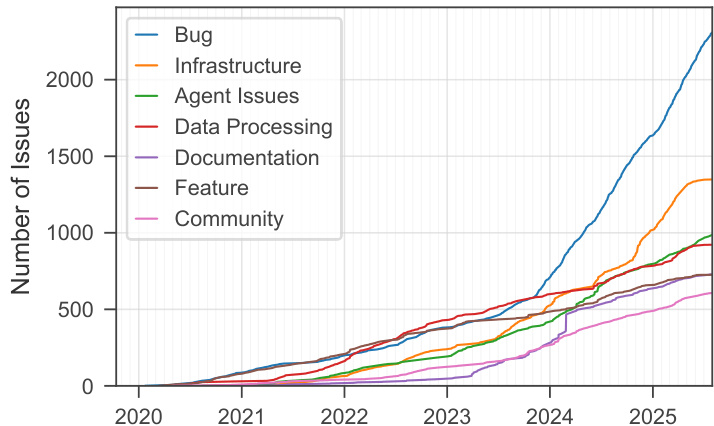

The authors analyze issue and commit data across multiple multi-agent AI frameworks, showing that Haystack and Semantic Kernel have the highest issue volumes with 3,939 and 4,168 issues respectively, while SuperAGI has the lowest at 274. Commits follow a similar pattern, with Haystack and LangChain having the most commits at 4,397 and 14,565 respectively, and SuperAGI having the fewest at 1,739.

The authors use a bar chart to compare the number of issues and commits across seven multi-agent AI frameworks, showing that LangChain has the highest number of commits at 14,565 while Semantic Kernel leads in issue count with 4,168, followed by Haystack with 3,939. The data reveals significant variation in development activity, with some frameworks like AutoGen and CrewAI having low numbers in both metrics, indicating differing levels of community engagement and project maturity.