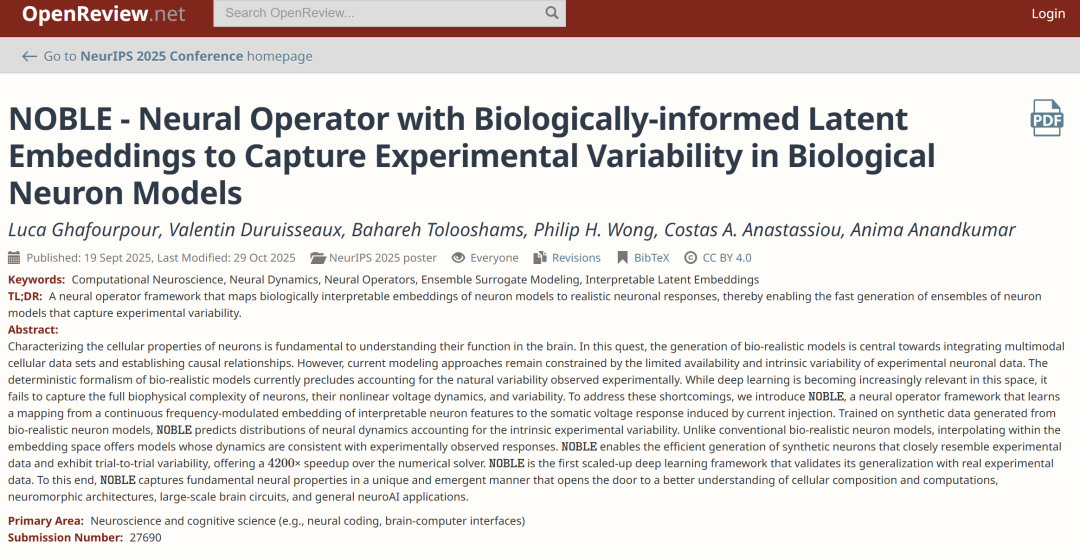

4,200 Times Faster Than Traditional Methods: ETH Zurich Proposes NOBLE, the First Neuronal Modeling Framework Validated With Human Cortical Data

How the human brain shapes cognitive functions through complex circuits composed of billions of neurons remains a profound, unsolved mystery in the life sciences. Over the past decade, with the accumulation of multimodal data in electrophysiology, morphology, and transcriptomics, scientists have gradually revealed the significant heterogeneity of human neurons in gene expression, morphological structure, and electrophysiological properties. However, how these differences affect the brain's information processing (for example, the intrinsic link between specific gene expression and neurological diseases) remains an open question.

Traditionally, researchers have used models based on three-dimensional multi-compartment partial differential equations (PDEs) to simulate neuronal activity. While these models reproduce biological behavior relatively well, they have a serious flaw: the computational cost is extremely high. Optimizing a single neuron model can consume approximately 600,000 CPU core hours, and even slight changes in parameters can lead to significant deviations between simulation results and experimental data. More importantly, these deterministic models struggle to capture the "intrinsic variability" observed in experiments: even with the same input, the same neuron may produce different electrophysiological responses. Artificially introducing randomness, meanwhile, often adds non-mechanistic noise, further weakening the reliability of model predictions.

To address these challenges, a joint team from institutions including ETH Zurich, Caltech, and the University of Alberta has proposed a deep learning framework called NOBLE (Neural Operator with Biologically-informed Latent Embeddings).

The framework is the first large-scale deep learning framework whose performance has been validated on experimental data from the human cerebral cortex, and the first to learn the nonlinear dynamics of neurons directly from experimental data. Its core breakthrough is a unified "neural operator" that maps a continuous latent space of neuronal features to a set of voltage responses, without training a separate surrogate for each model. In tests on a parvalbumin-positive (PVALB) neuron dataset, NOBLE not only accurately reproduced the subthreshold and firing dynamics of 50 known models and 10 unseen models, but also ran 4,200 times faster than traditional numerical solvers.

The related research findings, titled "NOBLE – Neural Operator with Biologically-informed Latent Embeddings to Capture Experimental Variability in Biological Neuron Models," have been accepted for NeurIPS 2025.


Dataset: 60 HoF models, 250 generations of evolutionary optimization, and 16 physiological indicators

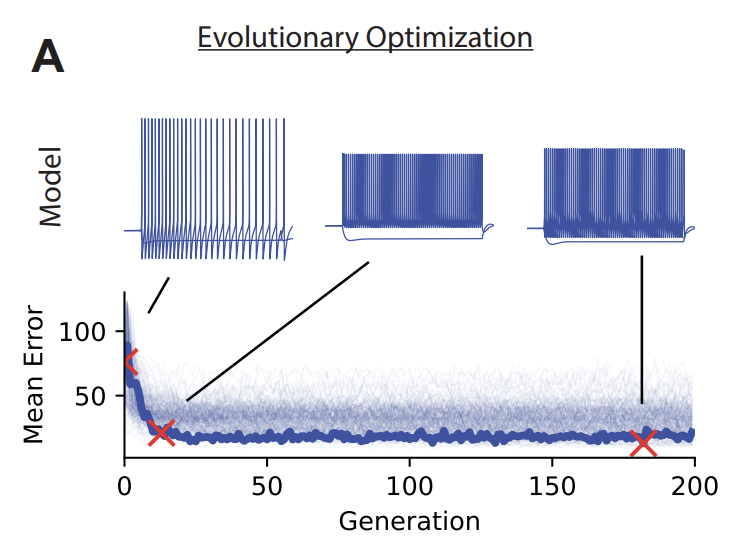

To validate the effectiveness of the NOBLE framework, the research team constructed a dedicated dataset of parvalbumin-positive (PVALB) neurons, derived from simulations of biologically realistic models of human cortical neurons. These models are built in the NEURON simulation environment and employ an "all-active" ion channel configuration. They were generated using a multi-objective evolutionary optimization framework that aims to reproduce the electrophysiological characteristics recorded in experiments.

Specifically, the dataset contains 60 hall-of-fame (HoF) models, of which 50 are used for training (in-distribution models) and 10 are held out as unseen models for testing (out-of-distribution models). As shown in the figure below, each model undergoes 250 generations of evolutionary optimization, with voltage responses collected from different generations. To simulate each candidate, the cable equations are converted into a set of coupled ordinary differential equations through spatial discretization. Finally, the parameter combination that minimizes the average z-score error between simulated and experimental features is selected.
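The selection criterion described above can be sketched in a few lines. This is a minimal illustration, assuming the z-score of a feature is the absolute difference between the simulated value and the experimental mean, divided by the experimental standard deviation; the feature names, values, and the `select_best` helper are hypothetical and not taken from the paper.

```python
def mean_z_score_error(simulated, exp_mean, exp_std):
    """Average |simulated - mean| / std over the simulated features."""
    scores = [abs(simulated[k] - exp_mean[k]) / exp_std[k] for k in simulated]
    return sum(scores) / len(scores)

def select_best(candidates, exp_mean, exp_std):
    """Return the candidate whose features have the lowest mean z-score error."""
    return min(
        candidates,
        key=lambda c: mean_z_score_error(c["features"], exp_mean, exp_std),
    )

# Hypothetical experimental statistics and two hypothetical candidates.
exp_mean = {"spike_count": 10.0, "ap_amplitude": 80.0}
exp_std = {"spike_count": 2.0, "ap_amplitude": 5.0}
candidates = [
    {"name": "gen_100", "features": {"spike_count": 12.0, "ap_amplitude": 85.0}},
    {"name": "gen_250", "features": {"spike_count": 11.0, "ap_amplitude": 82.0}},
]

best = select_best(candidates, exp_mean, exp_std)
print(best["name"])
```

Normalizing by the experimental standard deviation puts features with different units (counts, millivolts) on a common scale before averaging.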

The data generation process employs a two-stage optimization strategy: it first fits the passive subthreshold response, and then captures the active dynamics and the complete frequency-current curve above the spiking threshold. Each time series covers 515 ms at a time step of 0.02 ms; after 3x temporal subsampling, 8,583 time points are retained, which avoids aliasing effects while reducing computational load.
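The sample counts quoted above follow from simple arithmetic. The sketch below uses integer microseconds to avoid floating-point rounding, and assumes the trailing partial group of samples is dropped during subsampling (an assumption; the article does not say how the remainder is handled).

```python
# Figures taken from the text: 515 ms window, 0.02 ms step, 3x subsampling.
DURATION_US = 515_000   # 515 ms expressed in microseconds
STEP_US = 20            # 0.02 ms expressed in microseconds
SUBSAMPLE = 3

raw_points = DURATION_US // STEP_US              # samples at the solver time step
kept_points = raw_points // SUBSAMPLE            # complete groups of three samples
effective_step_ms = STEP_US * SUBSAMPLE / 1000   # spacing after subsampling

print(raw_points, kept_points, effective_step_ms)
```

Under these assumptions the raw trace has 25,750 samples and the subsampled trace has 8,583, matching the figure in the text, with an effective spacing of 0.06 ms.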

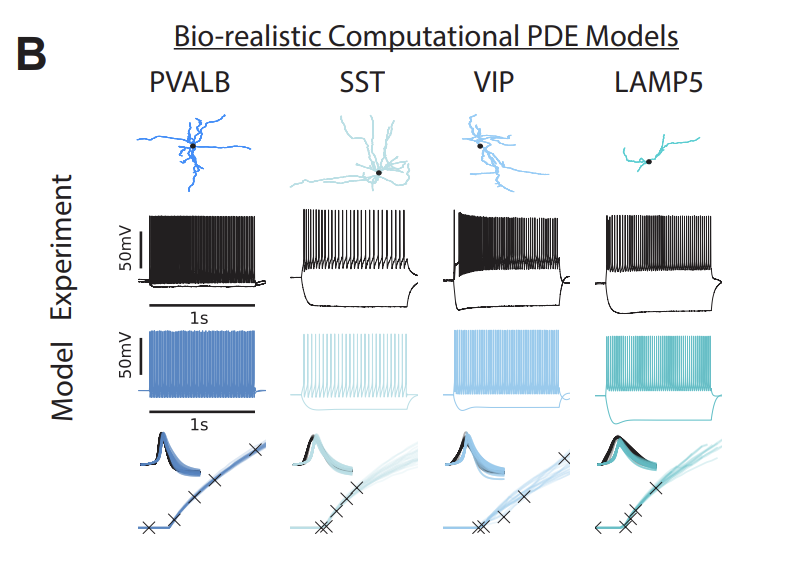

As shown in the figure below, in addition to the voltage traces, the dataset includes annotations for 16 key "physiological indicators". The figure shows display patterns (first row), experimental voltage traces (second row), simulated voltage traces (third row), spike waveforms (fourth row), and frequency-current curves (fifth row), providing a comprehensive standard for evaluating AI models. This design allows the dataset to be used both to train a model to predict neuron responses and to evaluate prediction quality, integrating training and testing in one resource.

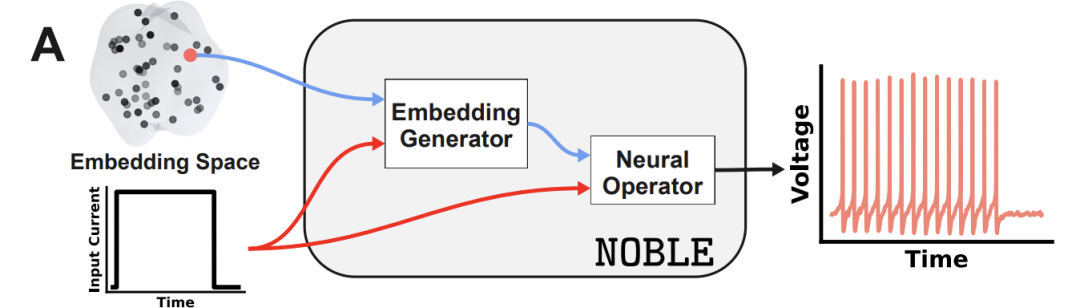

NOBLE: A neural operator framework built on FNO with dual input embeddings

The core innovation of the NOBLE framework lies in its deep integration of neural operators with biologically informed latent embeddings. It constructs an end-to-end mapping from neuronal features to voltage responses, which can be figuratively called a "neural signal translator." The framework uses the Fourier neural operator (FNO) as its underlying architecture, whose advantage is the ability to efficiently process time-series data from neuronal electrophysiology. FNO borrows ideas from audio signal processing: it analyzes equidistantly sampled electrophysiological signals in the frequency domain through the Fast Fourier Transform, which makes it well suited to neural dynamics research.
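To make the frequency-domain idea concrete, here is a minimal NumPy sketch of the spectral convolution used inside a Fourier layer. It is an illustration of the general FNO technique, not NOBLE's implementation: a full FNO layer also adds a pointwise linear path and a nonlinearity, which are omitted, and the shapes and random weights below are arbitrary.

```python
import numpy as np

def spectral_conv_1d(x, weights, n_modes):
    """Apply one Fourier-layer convolution to a multi-channel signal.

    x:       real array, shape (in_channels, n_time)
    weights: complex array, shape (in_channels, out_channels, n_modes)
    """
    n_time = x.shape[-1]
    x_hat = np.fft.rfft(x, axis=-1)                    # to the frequency domain
    out_hat = np.zeros((weights.shape[1], x_hat.shape[-1]), dtype=complex)
    # Mix channels mode by mode, keeping only the lowest n_modes frequencies.
    out_hat[:, :n_modes] = np.einsum("im,iom->om", x_hat[:, :n_modes], weights)
    return np.fft.irfft(out_hat, n=n_time, axis=-1)    # back to the time domain

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 128))                      # 2 channels, 128 samples
w = rng.standard_normal((2, 3, 16)) + 1j * rng.standard_normal((2, 3, 16))
y = spectral_conv_1d(x, w, n_modes=16)
print(y.shape)
```

Truncating to the lowest `n_modes` frequencies is what keeps the learned weights independent of the sampling resolution, and the transform assumes uniformly spaced samples, which is why equidistant sampling matters.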

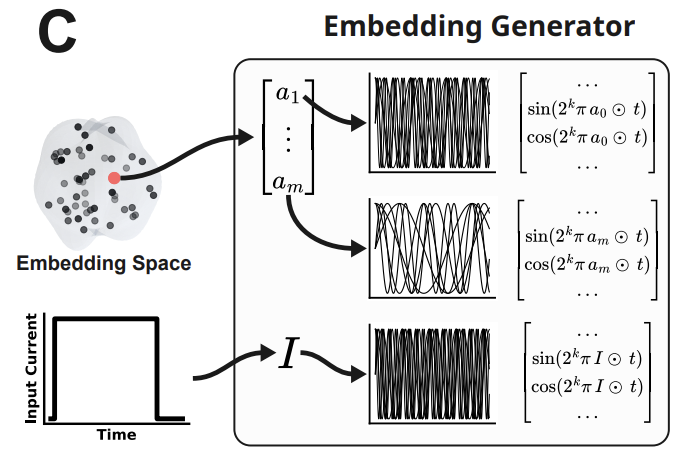

The model's "translation" capability stems from two key input embedding designs: neuron feature embedding and current injection embedding.

The former uses two biologically interpretable indicators, threshold current (Ithr) and local slope (sthr), as core features. These are first normalized to the range [0.5, 3.5]² and then converted into stacked time-series channels using NeRF-style trigonometric encoding. This essentially gives the model a "hardware parameter manual" for each neuron, specifying its key electrophysiological properties. The latter applies a multi-frequency encoding with K=9 to the injected current. As shown in the figure below, stacking the two sets of embeddings as input channels aligns the low-dimensional features with FNO's frequency-domain processing, significantly enhancing the model's ability to capture the high-frequency dynamics of neural signals.
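A rough sketch of this kind of encoding is given below. It assumes the standard NeRF form, sin and cos of the input at frequencies 2^k·π, and broadcasts each encoded value along time to form a channel. The feature range passed to `rescale`, the use of 9 frequencies for the neuron feature, and all function names are illustrative assumptions; the article only states K=9 for the current embedding.

```python
import numpy as np

def rescale(value, lo, hi, new_lo=0.5, new_hi=3.5):
    """Linearly map value from [lo, hi] to [new_lo, new_hi]."""
    return new_lo + (value - lo) * (new_hi - new_lo) / (hi - lo)

def nerf_encode(value, n_freqs):
    """Return sin(2^k*pi*v) for k = 0..n_freqs-1, followed by the matching cos terms."""
    freqs = (2.0 ** np.arange(n_freqs)) * np.pi
    angles = freqs * value
    return np.concatenate([np.sin(angles), np.cos(angles)])

def as_channels(code, n_time):
    """Repeat each encoded scalar along time so it can be stacked as an input channel."""
    return np.repeat(code[:, None], n_time, axis=1)

# Hypothetical threshold current of 0.12 nA within an assumed range of 0 to 0.3 nA.
i_thr = rescale(0.12, lo=0.0, hi=0.3)
channels = as_channels(nerf_encode(i_thr, n_freqs=9), n_time=8583)
print(channels.shape)
```

Each scalar becomes 2·K constant channels, so a single number is presented to the network at several frequency scales rather than as one flat value.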

In terms of network structure, NOBLE contains 12 hidden layers. Each layer has 24 channels and uses 256 Fourier modes, giving approximately 1.8 million model parameters. Training uses the Adam optimizer with an initial learning rate of 0.004, coupled with the ReduceLROnPlateau scheduler, and the relative L4 error as the loss function, so the model learns quickly at first and automatically lowers its learning rate when training plateaus. Compared to traditional methods, NOBLE does not require training a separate surrogate model for each neuron; instead, a single neural operator provides a continuous mapping over the entire space of neuron models. This allows it to generate novel, biologically realistic neural responses through latent space interpolation.
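For reference, a relative Lp error on a sampled voltage trace can be written as below. This is a minimal NumPy sketch of the general definition (the norm of the difference divided by the norm of the target), not the project's training code; the toy trace values are made up.

```python
import numpy as np

def relative_lp_error(pred, target, p=2):
    """Return ||pred - target||_p / ||target||_p for a uniformly sampled trace."""
    num = np.sum(np.abs(pred - target) ** p) ** (1.0 / p)
    den = np.sum(np.abs(target) ** p) ** (1.0 / p)
    return num / den

# Toy trace in millivolts: resting potential with a single spike sample.
target = np.array([-70.0, -70.0, 30.0, -70.0, -70.0])
pred = 1.1 * target                        # prediction that is uniformly 10% off

print(relative_lp_error(pred, target, p=4))   # loss-style metric (p = 4)
print(relative_lp_error(pred, target, p=2))   # evaluation-style metric (p = 2)
```

A larger p weights large pointwise deviations more heavily, so with p=4 brief, high-amplitude events such as spikes contribute more to the loss than they would with p=2.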

Furthermore, NOBLE can be refined for specific targets: it supports physics-informed fine-tuning for particular electrophysiological characteristics. By introducing a weighted composite loss function L(λ), target features (such as sag amplitude) can be assigned higher weights, improving the modeling accuracy of key indicators without degrading overall prediction performance.
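The article does not give the form of L(λ). One plausible shape, shown purely as an assumption, is a trace-level error plus λ-weighted relative errors on selected features. The sag measure below (late steady-state voltage minus the trough) is a simplified illustrative definition, and all names and values are hypothetical.

```python
import numpy as np

def relative_l2(pred, target):
    """Relative L2 error between two sampled traces."""
    return np.linalg.norm(pred - target) / np.linalg.norm(target)

def sag_amplitude(trace, steady_window=50):
    """Illustrative sag measure: late steady-state voltage minus the trough."""
    return np.mean(trace[-steady_window:]) - np.min(trace)

def composite_loss(pred, target, weights, features):
    """Trace error plus lambda-weighted relative errors on chosen features."""
    loss = relative_l2(pred, target)
    for name, lam in weights.items():
        f_pred, f_true = features[name](pred), features[name](target)
        loss += lam * abs(f_pred - f_true) / (abs(f_true) + 1e-12)
    return loss

# Toy hyperpolarized response that dips to -85 mV and relaxes toward -80 mV.
t = np.linspace(0.0, 1.0, 500)
target = -80.0 - 5.0 * np.exp(-t / 0.1)
pred = np.full_like(target, -80.0)         # prediction that misses the sag

features = {"sag": sag_amplitude}
plain = composite_loss(pred, target, {"sag": 0.0}, features)
weighted = composite_loss(pred, target, {"sag": 5.0}, features)
print(plain, weighted)
```

In this toy case the flat prediction has a small trace-level error, yet it misses the sag entirely, so raising the weight on the sag term makes the loss reflect that omission.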

NOBLE accurately captures diverse neuronal dynamics and is 4,200 times faster than traditional solvers

To systematically evaluate the overall performance of the NOBLE framework, the research team designed experiments around five core dimensions: basic accuracy, generalization ability, computational efficiency, the ability to generate novel models, and ablation of core modules. The experiments used 50 HoF models of parvalbumin-positive (PVALB) neurons as the main training data, and prediction accuracy was quantified by the relative L2 error and several key electrophysiological indicators.

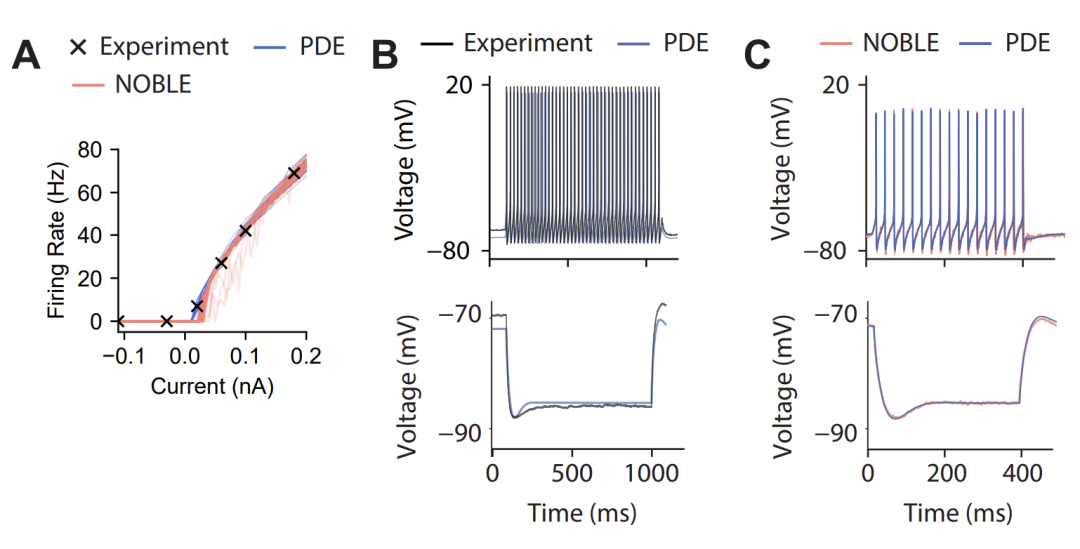

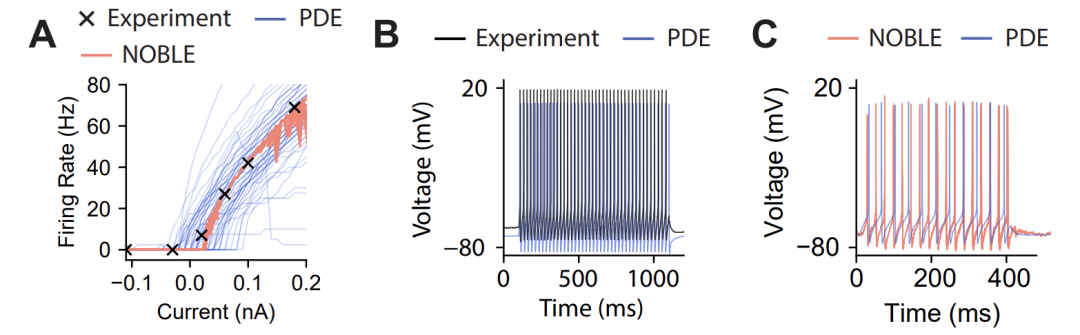

Regarding basic accuracy (in-distribution testing), NOBLE shows excellent predictive ability on current injections not seen during training, with a relative L2 error as low as 2.18%. As shown in the figure below, the researchers also compared voltage traces from experimental data, PDE simulations, and NOBLE predictions at current injections of 0.1 nA and -0.11 nA. The PDE simulations are highly consistent with the experimental recordings, while the difference between NOBLE predictions and PDE simulations is minimal. This indicates that NOBLE matches the accuracy of the numerical solver and reliably captures key physiological dynamics.

In the evaluation of generalization ability (out-of-distribution testing), NOBLE maintained high prediction accuracy even when faced with 10 unseen HoF models. The research team further applied it to vasoactive intestinal peptide (VIP) interneuron data and obtained similarly stable outputs. This suggests that NOBLE does not simply memorize training set features, but captures electrophysiological regularities that carry across cell types.

In terms of computational efficiency, NOBLE demonstrates a decisive speed advantage. Test results show that it can predict a single voltage trace in just 0.5 milliseconds, while traditional numerical solvers take 2.1 seconds to complete the same simulation, a speedup of approximately 4,200 times. This efficiency gain lays the foundation for future real-time simulations of networks with millions of neurons, making whole-brain-scale modeling computationally feasible.
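The speedup figure can be checked directly from the two timings. The sketch below works in integer microseconds and, as a back-of-the-envelope extension, estimates the time for one million traces assuming strictly sequential evaluation (an assumption; batching or parallel hardware would change both numbers).

```python
SOLVER_US = 2_100_000   # 2.1 s per trace for the numerical solver, in microseconds
NOBLE_US = 500          # 0.5 ms per trace for NOBLE, in microseconds

speedup = SOLVER_US // NOBLE_US
traces_per_second = 1_000_000 // NOBLE_US

# Sequential wall-clock time for one million traces.
n_traces = 1_000_000
noble_seconds = n_traces * NOBLE_US / 1_000_000
solver_days = n_traces * SOLVER_US / 1_000_000 / 86_400

print(speedup, traces_per_second, noble_seconds, round(solver_days, 1))
```

At these rates a million traces take minutes with the surrogate and weeks with the solver, which is the practical gap behind the headline number.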

In terms of generating novel models, the research team focused on validating NOBLE's ability to interpolate between known neuronal features and create new neuron models. By randomly interpolating 50 points in the latent space spanned by (Ithr, sthr), NOBLE generated the corresponding voltage responses, with results highly consistent with real experimental recordings, demonstrating biological realism. In contrast, directly interpolating the parameters of the partial differential equations produces significant non-physiological artifacts, creating so-called "neural model monsters." This comparison suggests that NOBLE has learned the underlying biophysical regularities of neurons rather than simply fitting data. Further validation through ensemble prediction experiments showed that the voltage distribution generated by parallel inference across the 50 trained models was highly consistent with the numerical simulation results, and even when the number of sampled points was expanded to 200, the generated models remained biologically plausible.
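The article does not specify how the 50 latent points are drawn. One simple scheme, shown here only as an assumption, is to pick random pairs of known feature vectors and sample a point on the segment between them. The feature values and the `interpolate_features` helper are hypothetical, and passing each point through the trained operator is not shown.

```python
import random

def interpolate_features(known, n_points, seed=0):
    """Draw points on segments between random pairs of known (I_thr, s_thr) vectors."""
    rng = random.Random(seed)
    points = []
    for _ in range(n_points):
        (i_a, s_a), (i_b, s_b) = rng.sample(known, 2)
        t = rng.random()                   # interpolation weight in [0, 1)
        points.append((i_a + t * (i_b - i_a), s_a + t * (s_b - s_a)))
    return points

# Hypothetical normalized features for three trained models.
known = [(0.8, 1.2), (2.0, 3.1), (3.3, 0.9)]
new_points = interpolate_features(known, n_points=50)
print(len(new_points), new_points[0])
```

Because only two interpretable features are interpolated, every sampled point stays inside the range spanned by the known models, unlike interpolating the full set of PDE parameters.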

Ablation experiments showed that after removing the neuron feature encoding, the prediction error rose from 2% to 12%, indicating that this biologically informed embedding is the "core engine" of the framework.

GitHub link: github.com/neuraloperator/noble

The academic breakthroughs and industrial applications of neural operators resonate with each other.

The cross-integration of neural operators and neuron modeling is generating profound resonance in academia and industry, propelling brain science research from theoretical exploration to industrial application.

At the forefront of academia, the Geometry Aware Operator Transformer (GAOT), jointly proposed by ETH Zurich and Carnegie Mellon University, uses multi-scale attention mechanisms and geometric embedding techniques to break through the bottleneck of modeling complex geometric domains. The framework achieves full-resolution training on 9 million nodes of industrial-grade data for the first time and performs well on 28 partial differential equation benchmarks. It also improves training throughput by 50% and reduces inference latency by 15%-30%, clearing the way for accurate simulation of irregular neural circuits.

Paper title: Geometry Aware Operator Transformer as an Efficient and Accurate Neural Surrogate for PDEs on Arbitrary Domains

Paper link: https://arxiv.org/abs/2505.18781

Meanwhile, MIT's "miBrain" model has made significant progress in constructing physical neuronal tissue. This three-dimensional platform integrates the six major cell types of the human brain. It reproduces the function of the neurovascular unit using biomimetic hydrogels and, through gene editing, has revealed the synergistic role of glial cells in Alzheimer's disease, providing a more physiologically realistic verification environment for neural operators.

Paper title: Engineered 3D immuno-glial-neurovascular human miBrain model

Paper link: https://doi.org/10.1073/pnas.2511596122

Industry, on the other hand, is focused on engineering and applying academic achievements. NVIDIA's open-source frameworks Modulus and PhysicsNeMo exemplify this. They provide a foundation for the practical application of neural operators, supporting uses across multiple fields, from life sciences to engineering simulation. They can support large-scale training on meshes with 50 million nodes and have been used by many industrial companies to build digital twins.

In the medical application sector, innovative companies are deeply integrating neural operator technology with clinical needs. The upper limb exoskeleton rehabilitation system jointly developed by Boling Brain-Computer Interface and Zhejiang University has improved the accuracy of motor command generation by optimizing neural signal parsing algorithms. It has already helped stroke hemiplegic patients regain basic self-care abilities in multi-center clinical trials.

This collaborative innovation between academia and industry has accelerated the formation of a complete closed loop of "basic research - technology transformation - industrial application". Its core significance lies in combining abstract operator theory with concrete neuron models, which not only improves the computational efficiency and physiological realism of neural simulation, but also expands the boundaries of engineering applications.
