
Up to 20 Times More Efficient! UCLA Develops OmniCast to Tackle Error Accumulation in Autoregressive Weather Forecasting Models

Subseasonal-to-seasonal (S2S) weather forecasts sit between short-term weather forecasts and long-term climate predictions, focusing on how the weather evolves over the next two to six weeks. They fill the gap between medium-range and long-term meteorological forecasts and provide crucial information for agricultural planning and disaster prevention. However, S2S forecasting is hard: at these lead times, the initial atmospheric conditions that drive short- and medium-range forecasts have largely lost their influence, while the slowly varying boundary signals that drive climate predictions have not yet fully emerged. Chaotic atmospheric dynamics and complex land-sea interactions make the problem harder still.

In recent years, the shift from traditional numerical weather prediction (NWP) systems to deep learning-driven forecasting methods has significantly advanced S2S forecasting. However, many challenges remain in practice. Traditional numerical methods rely on solving complex physical equations, which is computationally expensive and time-consuming. Data-driven methods are fast and accurate for short-term forecasts, but most use an autoregressive design that computes each step from the previous forecast. Over the long horizons of S2S forecasting, errors snowball, and these methods also tend to ignore the slowly varying boundary forcing signals that are crucial at S2S time scales.
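To make the snowball effect concrete, here is a toy sketch (not OmniCast code): a hypothetical model with a 1% one-step error is rolled out autoregressively over 42 daily steps. The growth factors are arbitrary assumptions chosen only to show how errors compound when each step consumes the previous forecast.

```python
# Toy illustration only, not OmniCast code: a hypothetical one-step model
# with a 1% error is rolled out autoregressively, so every step consumes
# the previous forecast and the error compounds.

TRUE_GROWTH = 1.00   # assumed "true" dynamics: x[t+1] = 1.00 * x[t]
MODEL_GROWTH = 1.01  # assumed learned model with a 1% one-step error


def rollout(x0: float, growth: float, steps: int) -> list[float]:
    """Autoregressive rollout: each new state is computed from the last one."""
    states = [x0]
    for _ in range(steps):
        states.append(growth * states[-1])
    return states


def rollout_errors(steps: int, x0: float = 1.0) -> list[float]:
    """Absolute forecast error at lead times 1..steps."""
    truth = rollout(x0, TRUE_GROWTH, steps)
    pred = rollout(x0, MODEL_GROWTH, steps)
    return [abs(p - t) for p, t in zip(pred[1:], truth[1:])]


errors = rollout_errors(steps=42)  # 42 daily steps, i.e. a six-week horizon
print(f"day 1 error: {errors[0]:.3f}, day 42 error: {errors[-1]:.3f}")
```

The day-42 error comes out at about 0.52, larger than the 0.42 that 42 independent 1% errors would sum to, because each step amplifies the error it inherits.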

To address this, a team from UCLA, in collaboration with Argonne National Laboratory, proposed OmniCast, a novel latent diffusion model for high-accuracy probabilistic S2S weather forecasting. The model combines a variational autoencoder (VAE) with a Transformer and samples jointly across time and space. This substantially alleviates the error accumulation of autoregressive methods while allowing the model to learn weather dynamics beyond the initial conditions. Experiments show that the model achieves state-of-the-art performance among current methods in accuracy, physical consistency, and probabilistic metrics.

The related research, titled "OmniCast: A Masked Latent Diffusion Model for Weather Forecasting Across Time Scales," has been accepted to NeurIPS 2025, a top AI conference.

Research highlights:

* By generating future weather jointly across the spatial and temporal dimensions, OmniCast mitigates the error accumulation of models based on autoregressive designs.

* OmniCast accounts both for the initial atmospheric information needed for short-term weather forecasting and for the slowly varying boundary forcing conditions needed for climate prediction.

* OmniCast outperforms existing methods in accuracy, physical consistency, and probabilistic prediction, and it runs 10-20 times faster than current mainstream methods.

Paper address:


Dataset: Based on the widely used ERA5 dataset, adapted for different prediction tasks.

To provide solid data for training and evaluating OmniCast, the study used the ERA5 high-resolution reanalysis dataset, which is widely used in meteorology, as its base data source. The data were preprocessed separately for two forecasting tasks, medium-range weather forecasting and S2S weather forecasting, yielding a benchmark tailored to each task.

Specifically, the study first extracted 69 meteorological variables from the ERA5 reanalysis dataset, covering two main categories:

Surface variables (4): 2-meter air temperature (T2m), 10-meter U wind component (U10), 10-meter V wind component (V10), and mean sea level pressure (MSLP);

Atmospheric variables (5): geopotential height (Z), air temperature (T), U wind component, V wind component, and specific humidity (Q). Each is given on 13 pressure levels (in hPa): 50, 100, 150, 200, 250, 300, 400, 500, 600, 700, 850, 925, and 1000.
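The count of 69 follows from 4 surface variables plus 5 atmospheric variables on 13 levels. The sketch below builds that channel list; the short labels such as "Z500" are our own illustrative names, not official ERA5 identifiers.

```python
# Sketch of the 69-variable layout described above. The short names
# (e.g. "Z500", "U10") are illustrative labels, not official ERA5 IDs.

SURFACE_VARS = ["T2m", "U10", "V10", "MSLP"]
ATMOS_VARS = ["Z", "T", "U", "V", "Q"]
PRESSURE_LEVELS_HPA = [50, 100, 150, 200, 250, 300, 400, 500,
                       600, 700, 850, 925, 1000]


def channel_names() -> list[str]:
    """Flatten surface variables and (variable, level) pairs into channels."""
    names = list(SURFACE_VARS)
    for var in ATMOS_VARS:
        names.extend(f"{var}{level}" for level in PRESSURE_LEVELS_HPA)
    return names


channels = channel_names()
print(len(channels))  # 4 + 5 * 13 = 69
```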

Subsequently, for different forecasting tasks, the study divided the data into training, validation, and test sets based on the time range:

Medium-range weather forecasting task: WeatherBench2 (WB2) serves as the benchmark. The training set covers 1979 to 2018, the validation set 2019, and the test set 2020. Initial conditions are taken at 00:00 and 12:00 UTC, at the native 0.25° resolution (721 x 1440 grid).

S2S weather forecasting task: ChaosBench serves as the benchmark. The training set covers 1979 to 2020, the validation set 2021, and the test set 2022. Initial conditions are taken at 00:00 UTC, at 1.40625° resolution (128 x 256 grid).
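The two setups can be summarized as small configuration objects. This is a hypothetical sketch: the `TaskConfig` class and its `include_poles` flag are our own names. It also checks that the stated grid sizes follow from the resolutions: 180/0.25 + 1 = 721 latitudes when both pole rows are included, and 180/1.40625 = 128 latitudes without pole rows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskConfig:
    """Hypothetical summary of one benchmark setup (names are ours, not the paper's)."""
    benchmark: str
    train_years: range        # inclusive start, exclusive stop
    val_year: int
    test_year: int
    init_hours_utc: tuple[int, ...]
    resolution_deg: float
    include_poles: bool       # whether both pole rows are on the grid

    def grid_shape(self) -> tuple[int, int]:
        """(latitude, longitude) points of a regular global lat-lon grid."""
        n_lat = round(180 / self.resolution_deg) + (1 if self.include_poles else 0)
        n_lon = round(360 / self.resolution_deg)
        return n_lat, n_lon


MEDIUM_RANGE = TaskConfig("WeatherBench2", range(1979, 2019), 2019, 2020,
                          (0, 12), 0.25, include_poles=True)
S2S = TaskConfig("ChaosBench", range(1979, 2021), 2021, 2022,
                 (0,), 1.40625, include_poles=False)

for task in (MEDIUM_RANGE, S2S):
    print(task.benchmark, task.grid_shape(), len(task.train_years), "training years")
```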

OmniCast Model: A Two-Stage Design for a New Paradigm of S2S Weather Forecasting

OmniCast's key strength is that it avoids the error accumulation of traditional autoregressive models. This lets it meet the requirements of both short-term weather forecasting and long-term climate prediction, making it a usable and reliable tool for practical S2S forecasting. Its architecture follows a two-stage design: a VAE first reduces the dimensionality of the data, and a Transformer with a diffusion head then generates the forecast sequence.

The core module of the first stage is a VAE built on a UNet architecture. Its job is dimensionality reduction and reconstruction: it compresses high-dimensional raw weather data into low-dimensional, continuous latent tokens (latent feature vectors), easing the computational burden caused by the large number of variables and the high spatial resolution. The VAE has 69 input/output channels, one per meteorological variable. In the S2S task, for example, the encoder compresses raw weather data of size 69 x 128 x 256 into a latent map of size 1024 x 8 x 16, a 16x reduction along each spatial dimension. During generation, the VAE decoder maps the latent tokens output by the Transformer back to weather fields in the original dimensions (such as temperature and air pressure).
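The shapes above can be checked with a little bookkeeping. The sketch assumes the encoder reaches the 16x spatial reduction with four stride-2 stages and that each latent grid cell becomes one 1024-dimensional token; both are our assumptions, not details confirmed by the article.

```python
import math

# Shape bookkeeping for the S2S example above. Assumption: the encoder
# halves height and width four times (2**4 = 16); the actual OmniCast UNet
# may reach the same shape differently.
IN_SHAPE = (69, 128, 256)   # (variables, latitude, longitude)
LATENT_CHANNELS = 1024
NUM_DOWNSAMPLES = 4         # hypothetical number of stride-2 stages


def latent_shape(in_shape, latent_channels, num_downsamples):
    """Latent (channels, height, width) after repeated 2x spatial downsampling."""
    _, height, width = in_shape
    factor = 2 ** num_downsamples
    if height % factor or width % factor:
        raise ValueError("spatial size must be divisible by the downsampling factor")
    return latent_channels, height // factor, width // factor


z_shape = latent_shape(IN_SHAPE, LATENT_CHANNELS, NUM_DOWNSAMPLES)
# Assumption: one latent token per latent grid cell, each a 1024-dim vector.
tokens_per_frame = z_shape[1] * z_shape[2]
element_ratio = math.prod(IN_SHAPE) / math.prod(z_shape)
print(z_shape, tokens_per_frame, element_ratio)
```

This yields a (1024, 8, 16) latent, 128 tokens per frame, and a 17.25x drop in the number of stored values: the factor of 16 applies to each spatial side, while the channel count grows from 69 to 1024.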

Notably, the study used a continuous VAE rather than a discrete one. Because weather data contain so many variables, a discrete VAE would require an excessively high compression ratio and lose significant information, which would hurt the second-stage generative modeling. A continuous VAE, by contrast, needs a compression ratio of only 100x, so it retains more of the critical information in weather states that may contain hundreds of physical variables.

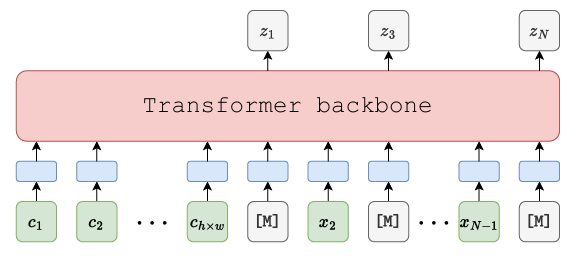

The core module of the second stage is a masked generative Transformer (as shown in the figure below), which uses a masked autoencoder (MAE) encoder-decoder architecture. This module is the key to generation without error accumulation: through mask training and diffusion-based prediction, it directly models the latent tokens of the entire future sequence. The encoder-decoder is bidirectional, so masked positions are predicted jointly from the initial conditions and the visible tokens generated so far. The Transformer has 16 layers, each with 16 attention heads, a hidden dimension of 1024, and a dropout rate of 0.1.
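A minimal sketch of how masked generation can fill a whole future sequence in a few parallel rounds, in the style of MaskGIT/MAR-type decoders. The cosine schedule, the random unmasking order, and the `predict` callback are assumptions for illustration; in OmniCast the callback's role is played by the bidirectional Transformer plus diffusion head, which samples each token rather than copying a mean.

```python
import math
import random


def cosine_mask_fraction(step: int, total_steps: int) -> float:
    """Fraction of future tokens still masked after `step` (cosine schedule, assumed)."""
    return math.cos(math.pi / 2 * step / total_steps)


def masked_generate(context, num_future, predict, total_steps=8, seed=0):
    """Fill all future latent tokens in a few parallel rounds.

    context: initial-condition frames, each a list of token values (always visible)
    predict(tokens, positions): hypothetical stand-in for the bidirectional
        Transformer plus diffusion head; it sees every visible token (None = masked)
        and returns one value per requested position.
    """
    rng = random.Random(seed)
    tokens_per_frame = len(context[0])
    tokens = [v for frame in context for v in frame]
    n_ctx = len(tokens)
    n_future = num_future * tokens_per_frame
    tokens += [None] * n_future                 # every future token starts masked
    order = list(range(n_ctx, n_ctx + n_future))
    rng.shuffle(order)                          # random unmasking order across space and time
    revealed = 0
    for step in range(1, total_steps + 1):
        still_masked = math.floor(n_future * cosine_mask_fraction(step, total_steps))
        new_positions = order[revealed:n_future - still_masked]
        for pos, value in zip(new_positions, predict(tokens, new_positions)):
            tokens[pos] = value
        revealed = n_future - still_masked
    future = tokens[n_ctx:]
    return [future[i * tokens_per_frame:(i + 1) * tokens_per_frame]
            for i in range(num_future)]


def mean_of_visible(tokens, positions):
    """Toy predictor: fills each requested slot with the mean of visible tokens."""
    visible = [t for t in tokens if t is not None]
    return [sum(visible) / len(visible)] * len(positions)


forecast = masked_generate(context=[[1.0, 2.0, 3.0, 4.0]], num_future=3,
                           predict=mean_of_visible)
```

Because each round conditions on the initial conditions and on every token generated so far, anywhere in the sequence, no frame is computed solely from a single previous forecast frame.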

In addition, because the latent tokens are continuous vectors, a conventional classification head over a discrete vocabulary cannot model their distribution. A diffusion head (implemented as a small MLP) is therefore attached to the Transformer output to model the distribution of the masked latent tokens (as shown in the figure below).
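The article does not give the head's noise schedule or exact objective. The sketch below assumes a standard DDPM-style noise-prediction loss with a linear β schedule, as commonly used for diffusion heads on continuous tokens; `eps_model` is a hypothetical placeholder for the small MLP, conditioned on the Transformer output at a masked position.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def diffusion_head_loss(x0, cond, eps_model, alpha_bars, rng):
    """Single-sample noise-prediction loss for one masked latent token.

    x0: ground-truth latent token (list of floats)
    cond: Transformer output vector at that masked position
    eps_model(x_t, t, cond): hypothetical stand-in for the small MLP head
    """
    t = rng.randrange(len(alpha_bars))               # random diffusion timestep
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]          # Gaussian noise
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    eps_hat = eps_model(x_t, t, cond)                # head predicts the noise
    return sum((e - h) ** 2 for e, h in zip(eps, eps_hat)) / len(x0)


def zero_head(x_t, t, cond):
    """Untrained baseline that always predicts zero noise."""
    return [0.0] * len(x_t)


rng = random.Random(0)
alpha_bars = linear_alpha_bars()
token = [0.5] * 1024   # dummy 1024-dim latent token
loss = diffusion_head_loss(token, cond=[0.0] * 1024, eps_model=zero_head,
                           alpha_bars=alpha_bars, rng=rng)
```

Training would minimize this loss over masked tokens; at inference, the head would run the reverse diffusion process to sample each token given its conditioning vector.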

To improve short-term accuracy, the study also introduced an auxiliary mean squared error (MSE) loss. Because the chaotic nature of the weather system grows markedly beyond about 10 days, deterministic prediction becomes less meaningful at longer lead times. The study therefore adds an extra deterministic MLP head and computes the MSE loss only on the latent tokens of the first 10 frames, using exponentially decreasing weights to emphasize accurate prediction in the earliest frames.
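A minimal sketch of the weighted auxiliary loss, assuming normalized weights `decay**i` for frames 0 to 9. The decay rate of 0.8 is a hypothetical value, since the article does not state it, and frames are represented as flat lists of latent values.

```python
def frame_weights(num_frames=10, decay=0.8):
    """Exponentially decreasing, normalized weights for the first frames.

    decay=0.8 is a hypothetical value; the article does not state the rate.
    """
    raw = [decay ** i for i in range(num_frames)]
    total = sum(raw)
    return [w / total for w in raw]


def auxiliary_mse(pred_frames, target_frames, num_frames=10, decay=0.8):
    """Weighted MSE over the first `num_frames` frames of latent tokens.

    pred_frames: outputs of the deterministic MLP head, one flat list per frame
    target_frames: ground-truth latent tokens in the same layout
    Frames beyond `num_frames` are ignored, matching the 10-frame cutoff.
    """
    weights = frame_weights(num_frames, decay)
    loss = 0.0
    for w, pred, target in zip(weights, pred_frames[:num_frames],
                               target_frames[:num_frames]):
        mse = sum((p - q) ** 2 for p, q in zip(pred, target)) / len(target)
        loss += w * mse
    return loss


weights = frame_weights()
```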

Results Showcase: Compared With Two Classes of Methods, OmniCast Is 10-20 Times More Efficient Than the Baselines

To verify OmniCast's effectiveness, the researchers compared it with two categories of mainstream methods: state-of-the-art deep learning methods and numerical methods based on traditional physical models. As mentioned earlier, the experiments covered two tasks, medium-range weather forecasting and S2S weather forecasting, and evaluated accuracy, physical consistency, and probabilistic performance.

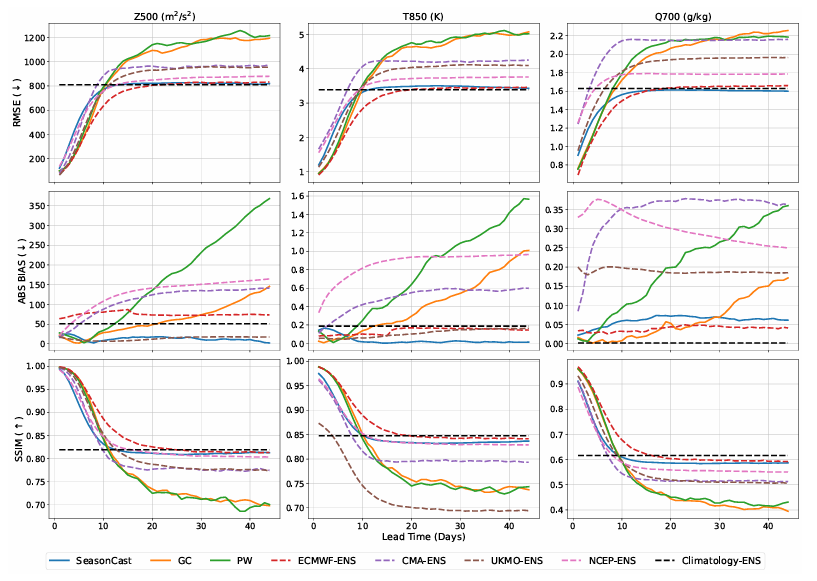

First, in the S2S weather forecasting task, the researchers compared OmniCast with two deep learning methods, PanguWeather (PW) and GraphCast (GC), and with numerical ensemble systems from four countries and regions: UKMO-ENS (United Kingdom), NCEP-ENS (United States), CMA-ENS (China), and ECMWF-ENS (Europe).

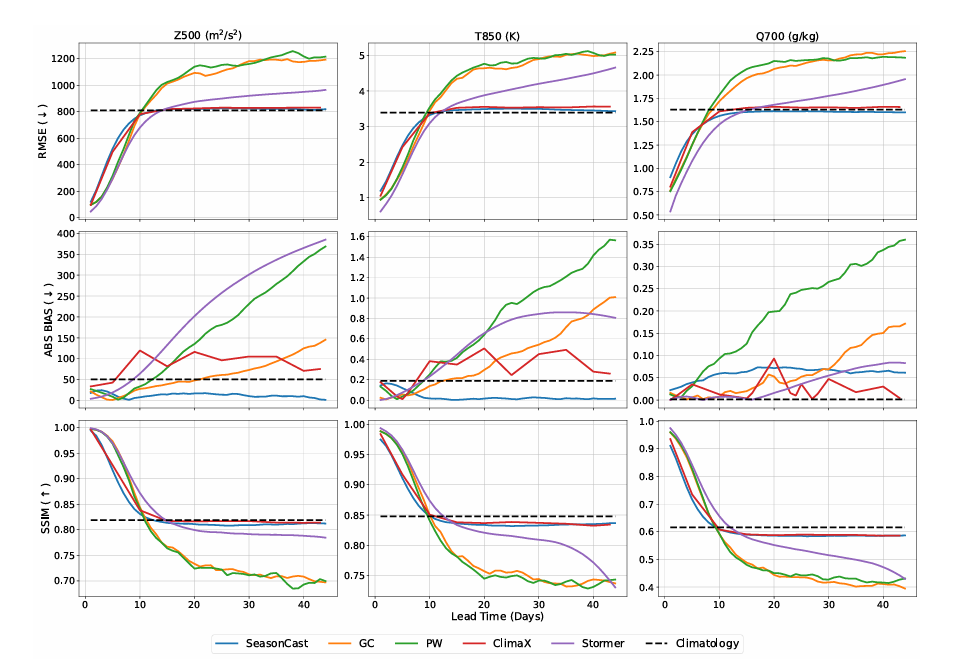

On the accuracy metrics (root mean square error (RMSE), absolute bias (ABS BIAS), and multi-scale structural similarity (SSIM)), OmniCast, as expected, underperforms the other baselines in RMSE and SSIM at short lead times. This follows from OmniCast's training objective, and its relative performance improves steadily as the lead time increases. After 10 days, it performs best, on par with ECMWF-ENS, as shown in the following figure:

Notably, OmniCast has the smallest bias of all the models, maintaining near-zero bias for all three target variables.

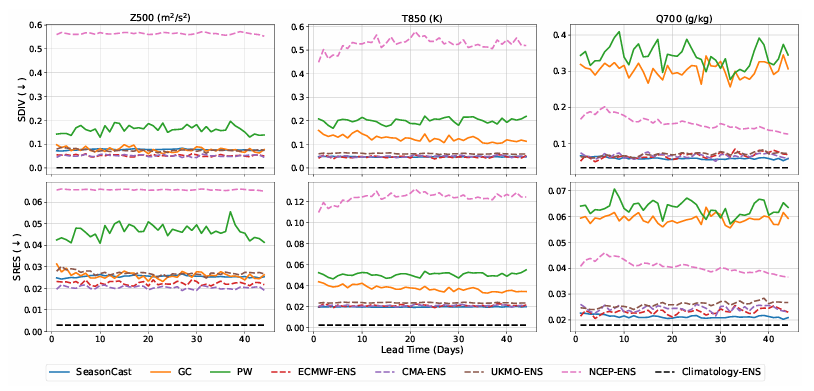
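The article does not spell out the metric formulas. A common convention in WeatherBench-style evaluation is to weight grid cells by cos(latitude); the sketch below assumes that convention and takes absolute bias to be the absolute value of the area-weighted mean error, which is one plausible definition rather than the paper's confirmed one.

```python
import math


def latitude_weights(lats_deg):
    """cos(latitude) weights normalized to mean 1 (assumed convention)."""
    w = [math.cos(math.radians(lat)) for lat in lats_deg]
    mean_w = sum(w) / len(w)
    return [x / mean_w for x in w]


def weighted_rmse_and_abs_bias(forecast, truth, lats_deg):
    """forecast/truth: 2D lists indexed [lat][lon] for one variable and lead time."""
    w = latitude_weights(lats_deg)
    n = len(lats_deg) * len(truth[0])
    sq, diff = 0.0, 0.0
    for wi, f_row, t_row in zip(w, forecast, truth):
        for f, t in zip(f_row, t_row):
            sq += wi * (f - t) ** 2      # area-weighted squared error
            diff += wi * (f - t)         # area-weighted signed error
    return math.sqrt(sq / n), abs(diff / n)


LATS = [-45.0, 0.0, 45.0]
TRUTH = [[280.0, 281.0, 282.0, 283.0] for _ in LATS]
warm = [[x + 2.0 for x in row] for row in TRUTH]   # uniform +2 K error
noisy = [[x + (1.0 if j % 2 == 0 else -1.0) for j, x in enumerate(row)]
         for row in TRUTH]                          # errors cancel on average
```

A uniform warm offset shows up in both RMSE and bias, while alternating errors raise RMSE but leave the bias at zero, which is why the two metrics are reported separately.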

In terms of physical consistency, OmniCast is significantly better than the other deep learning methods and, in most cases, outperforms all baselines. This shows that OmniCast effectively preserves signals across different frequency ranges, keeping its forecasts physically plausible. (See figure below.)

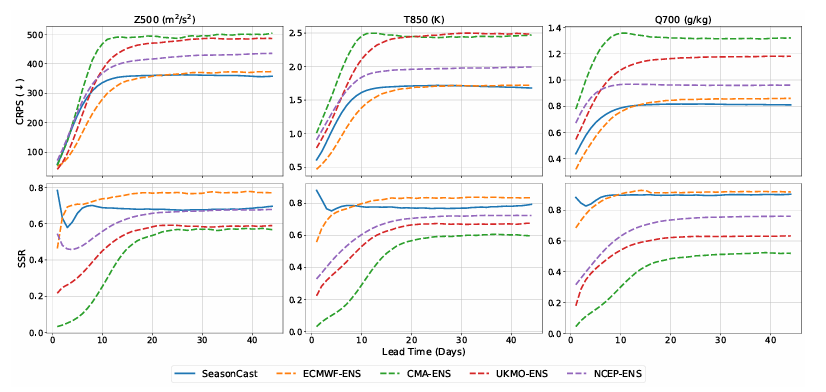
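The article does not name the physical-consistency metric. Spectral comparisons, which check whether a forecast keeps the right amount of energy at each spatial frequency, are a common choice; the sketch below assumes a KL divergence between normalized zonal power spectra. It is a simplified illustration, not ChaosBench's exact definition.

```python
import cmath
import math


def zonal_power_spectrum(field):
    """Mean power per zonal wavenumber k = 0..n_lon//2 over all latitude rows."""
    n_lon = len(field[0])
    n_k = n_lon // 2 + 1
    power = [0.0] * n_k
    for row in field:
        for k in range(n_k):
            # Discrete Fourier coefficient of this row at wavenumber k
            coeff = sum(x * cmath.exp(-2j * math.pi * k * j / n_lon)
                        for j, x in enumerate(row))
            power[k] += abs(coeff) ** 2 / len(field)
    return power


def spectral_divergence(forecast, truth, eps=1e-12):
    """KL(truth || forecast) between normalized zonal power spectra (assumed form)."""
    p = zonal_power_spectrum(truth)
    q = zonal_power_spectrum(forecast)
    sp, sq = sum(p), sum(q)
    return sum((pi / sp) * math.log((pi / sp + eps) / (qi / sq + eps))
               for pi, qi in zip(p, q) if pi > 0)


N_LON = 16
truth_field = [[math.cos(2 * math.pi * j / N_LON) + math.cos(2 * math.pi * 6 * j / N_LON)
                for j in range(N_LON)] for _ in range(2)]
smooth_field = [[math.cos(2 * math.pi * j / N_LON) for j in range(N_LON)]
                for _ in range(2)]   # the small-scale (k = 6) signal is lost
```

A forecast that has been smoothed, losing its small-scale signal, scores a large divergence even if its large-scale pattern is right, which is the kind of failure a purely RMSE-based evaluation can reward.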

On the probabilistic metrics (Continuous Ranked Probability Score (CRPS) and Spread-Skill Ratio (SSR), where an SSR closer to 1 is better), the picture resembles the accuracy metrics: at shorter lead times, OmniCast performs slightly worse than ECMWF-ENS, but it overtakes ECMWF-ENS after 15 days. Overall, OmniCast and ECMWF-ENS are the two best-performing methods across variables and lead times. (See the figure below.)
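For reference, a minimal sketch of the two probabilistic metrics for scalar forecasts. CRPS uses the standard ensemble estimator E|X - y| - 0.5 E|X - X'|; for SSR, we assume spread is the square root of the mean unbiased member variance, divided by the RMSE of the ensemble mean. Conventions differ (some add a (M+1)/M factor), so treat this as one reasonable definition.

```python
import math


def crps_ensemble(members, obs):
    """Ensemble CRPS estimator: E|X - y| - 0.5 * E|X - X'| over all member pairs."""
    m = len(members)
    skill = sum(abs(x - obs) for x in members) / m
    spread = sum(abs(a - b) for a in members for b in members) / (m * m)
    return skill - 0.5 * spread


def spread_skill_ratio(ensembles, observations):
    """SSR = ensemble spread / RMSE of the ensemble mean (1 is ideal).

    ensembles: one list of members per grid point or forecast case.
    Spread uses the unbiased member variance (an assumption; conventions differ).
    """
    var_sum, sq_err_sum = 0.0, 0.0
    for members, obs in zip(ensembles, observations):
        m = len(members)
        mean = sum(members) / m
        var_sum += sum((x - mean) ** 2 for x in members) / (m - 1)
        sq_err_sum += (mean - obs) ** 2
    n = len(observations)
    return math.sqrt(var_sum / n) / math.sqrt(sq_err_sum / n)
```

With a single member, the CRPS reduces to the absolute error; an SSR below 1 signals an overconfident (under-dispersed) ensemble, and above 1 an over-dispersed one.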

In addition, the research team compared OmniCast with recent deep learning methods for long-range weather forecasting, ClimaX and Stormer, both based on the Transformer architecture. OmniCast outperforms both on all metrics. Its RMSE on T850 and Z500 is 16.8% and 16.0% lower than ClimaX's, and 11.6% and 10.2% lower than Stormer's. Its CRPS is 20.2% and 17.1% lower than ClimaX's, and 13.9% and 11.0% lower than Stormer's. These results show OmniCast's clear advantage in long-range weather forecasting: by combining a latent diffusion model with a masked generation framework, it models long-range dependencies in weather sequences better than conventional deep learning architectures. (See figure below.)

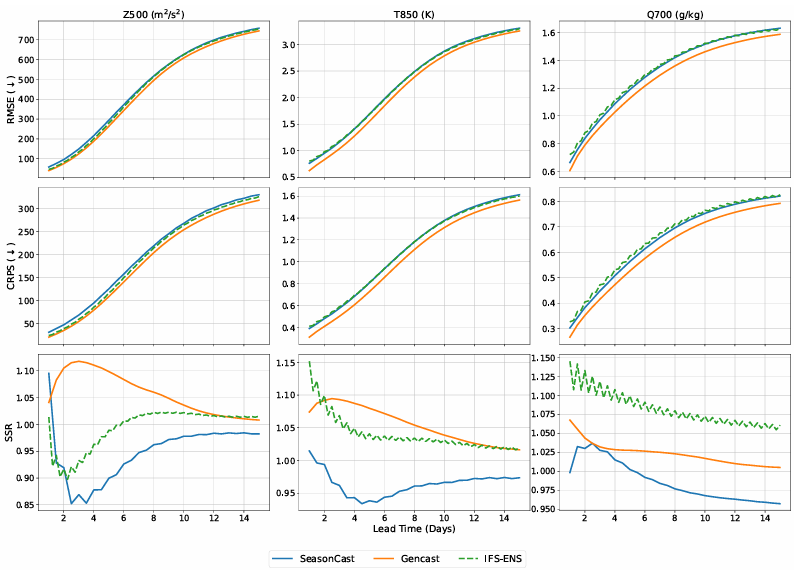

Next, in the medium-range weather forecasting task, the research team compared OmniCast with two baselines: GenCast, a mainstream deep learning method for probabilistic forecasting, and IFS-ENS, the "gold standard" among numerical ensemble forecasting systems, using RMSE, CRPS, and SSR as evaluation metrics. See the figure below:

The results show that OmniCast performs comparably to IFS-ENS across all variables and metrics and only slightly worse than GenCast. However, supplementary efficiency experiments show that, thanks to its latent-space modeling design, which computes on low-dimensional latent tokens rather than high-dimensional raw weather data, OmniCast is 10 to 20 times faster than all the baselines.

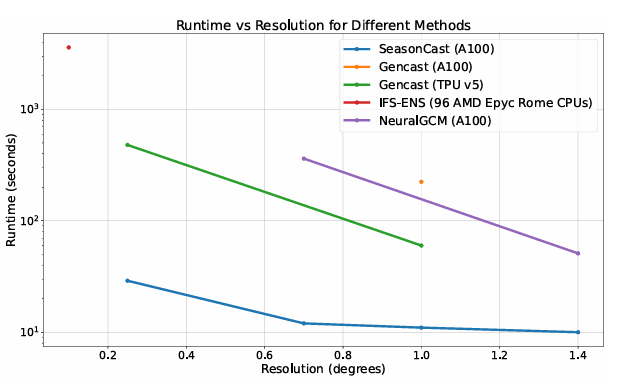

Additionally, as shown in the image below, OmniCast requires only 4 days of training on 32 NVIDIA A100 GPUs. By comparison, GenCast requires 5 days of training on 32 TPUv5e devices, which are more powerful than the A100, and NeuralGCM requires 10 days on 128 TPUv5e devices. Furthermore, GenCast requires two-stage training, whereas OmniCast needs only a single stage. OmniCast is also faster at inference: at 0.25° resolution, GenCast takes 480 seconds while OmniCast completes the same prediction in 29 seconds; at 1.0° resolution, OmniCast takes only 11 seconds, while GenCast requires 224 seconds on the same hardware.
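The reported figures can be turned into speed-up ratios and rough training budgets with simple arithmetic. The numbers come from the text above; "device-days" is our own summary measure and ignores that A100s and TPUv5e chips differ in power.

```python
# Numbers taken from the text above. Device-days ignore that A100 GPUs and
# TPUv5e chips are not equally powerful, so they only roughly compare budgets.
inference_seconds = {
    "0.25deg": {"GenCast": 480, "OmniCast": 29},
    "1.0deg": {"GenCast": 224, "OmniCast": 11},
}
training = {  # (number of devices, days of training)
    "OmniCast (A100)": (32, 4),
    "GenCast (TPUv5e)": (32, 5),
    "NeuralGCM (TPUv5e)": (128, 10),
}

speedups = {res: t["GenCast"] / t["OmniCast"] for res, t in inference_seconds.items()}
device_days = {name: n * d for name, (n, d) in training.items()}

for res, s in speedups.items():
    print(f"{res}: {s:.1f}x faster inference than GenCast")
for name, dd in device_days.items():
    print(f"{name}: {dd} device-days")
```

This gives roughly 16.6x and 20.4x inference speed-ups, in line with the 10-20x range reported, and 128 versus 160 versus 1,280 device-days of training.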

Continuously breaking through the limitations of S2S forecasting, accurately filling the gaps in medium- and long-term weather forecasting.

Because it bridges short-term weather forecasts and long-term climate predictions, S2S forecasting has long been a core research area in meteorology. Today, work on it spans theoretical research, experimental and technical breakthroughs, and real-world applications, with active exchange across disciplines.

For example, in late May this year, the "International Symposium on AI + Disaster Forecasting and Early Warning," hosted by the World Meteorological Organization and organized by Shandong University, drew more than 300 experts and scholars from over 30 countries and regions, both online and in person. The symposium focused on integrating new AI technologies with S2S forecasting applications and outlined a promising role for S2S forecasting in disaster prevention and mitigation.

Beyond academic meetings, research progress has also been substantial. The team of Researcher Li Hao and Professor Qi Yuan from the Fudan University Institute for Artificial Intelligence Innovation and Industry and the Shanghai Institute of Intelligent Science collaborated with Researcher Lu Bo's team at the China Meteorological Administration's Open Laboratory for Climate Research to develop a machine learning-based forecasting model called "FuXi-S2S". It can quickly and effectively generate large ensemble forecasts, completing a 42-day comprehensive forecast within 7 seconds.

Paper title: A machine learning model that outperforms conventional global subseasonal forecast models

Paper address: https://www.nature.com/articles/s41467-024-50714-1

Teams from the Technical University of Berlin, the University of Reading, and other institutions have brought teleconnections, such as the stratospheric polar vortex (SPV) over the Arctic and the tropical Madden-Julian Oscillation (MJO), into S2S forecasting research. They tested this idea with three deep learning models of increasing complexity. First, they built a basic LSTM (Long Short-Term Memory) model. Next, adding SPV and MJO teleconnection indices produced the Index-LSTM model. Finally, instead of relying on precomputed indices, they used a Vision Transformer (ViT) to process upper-level wind fields over the Arctic and longwave radiation data in the tropics directly, yielding the ViT-LSTM model. Comparing the three models, the team confirmed that teleconnection information improves S2S forecast accuracy. Notably, after the fourth week, the ViT-LSTM model even outperformed the ECMWF model in predicting the Scandinavian Blocking and Atlantic Ridge weather patterns.

Paper title: Deep Learning Meets Teleconnections: Improving S2S Predictions for European Winter Weather

Paper address: https://arxiv.org/abs/2504.07625

In conclusion, the problems that have long plagued S2S weather forecasting are steadily being reduced as the technology advances. As artificial intelligence and deep learning become further integrated with meteorology, the traditional view of weather at these time scales as "unpredictable" is likely to be increasingly overturned. From the ancient practice of watching the clouds to predict the weather to today's AI models that generate forecasts more than a month ahead in seconds, humanity's understanding and forecasting of the weather is reaching an unprecedented level of clarity.