Selected for CVPR 2025! A Shenzhen University Team and Collaborators Propose EchoONE, Which Accurately Segments Multi-Section Echocardiograms

Cardiovascular disease is the leading cause of death among Chinese residents. Echocardiography has become one of the most widely used cardiac examinations in clinical practice because it is non-invasive, low-cost, and provides real-time imaging. In practice, sonographers scan the heart from different positions and angles to obtain ultrasound images of multiple sections, and then analyze the structure and function of the heart by combining those sections, for example by identifying the myocardial contour and measuring the size of each chamber.

However, because the structures visible in different sections differ significantly, existing segmentation models generalize poorly across sections. They usually have to be customized for each specific section, which leads to high costs from repeated development. In addition, when a model trained for one section is applied to other sections, its performance often drops significantly, which limits its adoption in clinical practice.

In response, a research team from the Medical Ultrasound Image Computing Laboratory (MUSIC) of the School of Biomedical Engineering, Shenzhen University, the National Engineering Laboratory for Big Data of Shenzhen University, and the Ultrasound Department of Shenzhen People's Hospital proposed EchoONE, a unified multi-section echocardiography segmentation model. The model combines fine-tuning of SAM, a large segmentation model for natural images, with prior knowledge of cardiac ultrasound sections. It can accurately segment the cardiac structures in multi-section echocardiograms, which reduces the complexity of model design and helps doctors evaluate cardiac function more efficiently.

The research, titled "EchoONE: Segmenting Multiple echocardiography Planes in One Model", was selected for the 2025 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

Research highlights:

* The team developed a unified model that can accurately segment multi-section echocardiograms with significant structural differences.

* A prior-composable mask learning module (PC-Mask) is proposed to generate semantically aware dense prompts, and a local feature fusion and adaptation module (LFFA) is introduced to adapt the SAM architecture. Together they allow EchoONE to perform well on echocardiogram sections that differ significantly in data distribution and have blurred boundaries.

* EchoONE outperforms many other fine-tuned large models and also achieves the best performance on the external test sets.

Paper address:

https://arxiv.org/abs/2412.02993

The open source project "awesome-ai4s" brings together more than 200 AI4S paper interpretations and provides massive data sets and tools:

https://github.com/hyperai/awesome-ai4s

Dataset: 3 large public datasets + 22,044 private image-annotation pairs

This study used multi-section echocardiograms from multiple centers, drawn from both private and public datasets.

The public datasets are CAMUS, HMC-QU, and EchoNet-Dynamic. CAMUS is a widely used cardiac ultrasound dataset in this field. It comes from multiple hospitals in France and includes 500 cases with two-chamber (2CH) and four-chamber (4CH) views. The HMC-QU dataset was created by Hamad Medical Corporation (HMC), Tampere University, and Qatar University. The EchoNet-Dynamic dataset was created by Stanford University; this study used only its test set, for external testing, to facilitate comparative analysis.

* CAMUS cardiac ultrasound image dataset download:

https://hyper.ai/cn/datasets/38453

* HMC-QU cardiac medical imaging dataset download:

https://hyper.ai/cn/datasets/38456

The private dataset was collected from multiple partner hospitals in China and totals 22,044 image-annotation pairs. It covers the two-chamber (2CH), three-chamber (3CH), and four-chamber (4CH) views, as well as the parasternal left ventricular short-axis (PSAX) view at three different levels.

Model architecture: Based on SAM, the EchoONE model consists of three major components

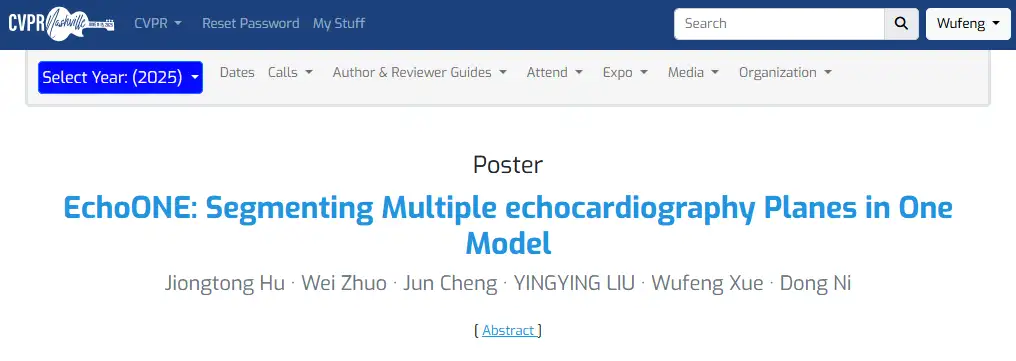

The overall framework of EchoONE has three main components: a SAM-based segmentation architecture, a component for generating dense prompts, and a CNN-based local feature branch for tuning and adapting SAM. The entire network is built on the original SAM, which contains a Transformer-based image encoder and mask decoder, a sparse prompt encoder, and a mask encoder for dense prompts.

In addition, the researchers introduced a local feature fusion and adaptation module (LFFA) in the ladder side tuning (LST) branch to improve the adaptability of SAM to specific tasks. They also proposed a clustering-based prior-composable mask learning module (PC-Mask) to generate semantically aware dense prompts. The details of PC-Mask and LFFA are as follows:

(a) PC-Mask module

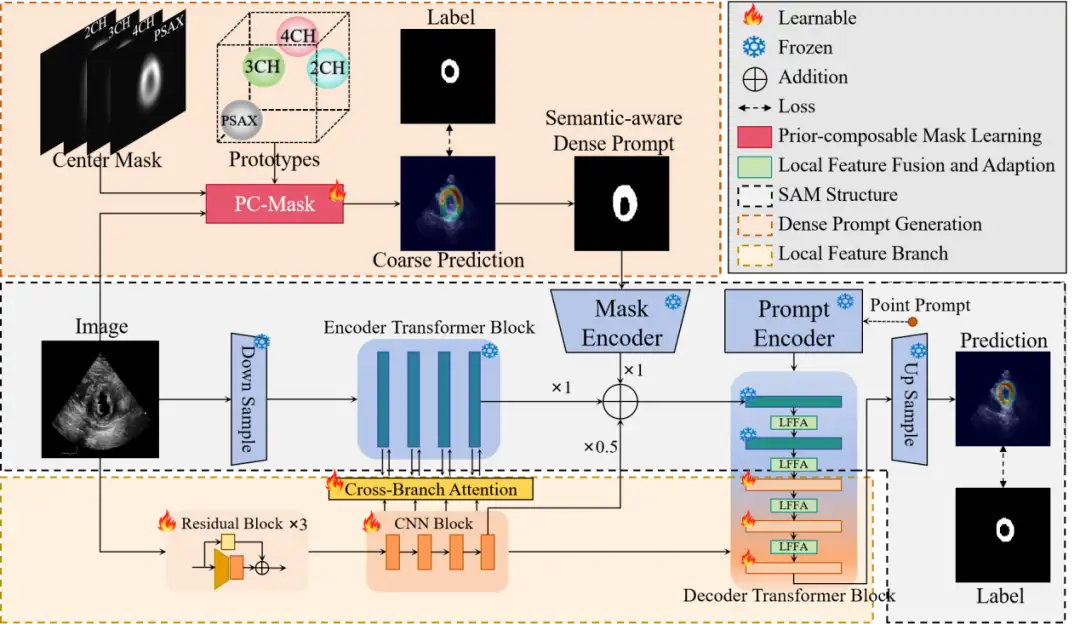

Dense mask prompts provide SAM with richer information than point and box prompts, and the PC-Mask module can generate high-quality mask prompts automatically. To handle the diversity of semantic structures across sections, the researchers first grouped the images from different sections into K clusters in the latent feature space. The center of each cluster serves as the prototype of that cluster in the latent space. Similarly, a center mask is obtained for each cluster by averaging the masks of the images assigned to it.
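The clustering step above can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions: the article does not say which clustering algorithm or initialization the authors use, so plain k-means with a deterministic farthest-point initialization is swapped in here, and the names `kmeans` and `center_masks` are hypothetical.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(feature, centers):
    """Index of the center closest to the given feature."""
    return min(range(len(centers)), key=lambda j: sq_dist(feature, centers[j]))


def kmeans(features, k, n_iter=20):
    """Plain k-means (assumed algorithm); returns (prototypes, labels)."""
    # Farthest-point initialization: an assumption, chosen to be deterministic.
    centers = [list(features[0])]
    while len(centers) < k:
        far = max(features, key=lambda f: min(sq_dist(f, c) for c in centers))
        centers.append(list(far))
    for _ in range(n_iter):
        labels = [nearest(f, centers) for f in features]
        for j in range(k):
            members = [f for f, lab in zip(features, labels) if lab == j]
            if members:  # keep the old center if a cluster becomes empty
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    labels = [nearest(f, centers) for f in features]
    return centers, labels


def center_masks(masks, labels, k):
    """Average the binary masks of each cluster into one soft center mask."""
    h, w = len(masks[0]), len(masks[0][0])
    out = []
    for j in range(k):
        members = [m for m, lab in zip(masks, labels) if lab == j]
        n = max(len(members), 1)  # an empty cluster yields an all-zero mask
        out.append([[sum(m[r][c] for m in members) / n for c in range(w)]
                    for r in range(h)])
    return out
```

In a real pipeline the feature vectors would come from an image encoder and the masks from the training annotations; here both are plain nested lists so the sketch stays self-contained.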

Using these center masks as structural priors, the researchers aimed to generate a dense prompt of the myocardial region for each new image without knowing its section type. For an input image, its similarity to (or distance from) the prototypes represents its position in the latent space. The similarities are then used as weights to combine the prior center masks into a multi-channel prior embedding, which is fed into a lightweight U-Net. The U-Net output serves as the dense prompt for SAM. This process is supervised with Dice loss and BCE loss.
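A minimal sketch of the weighting and supervision described above, using only the standard library. Several details are assumptions rather than the authors' stated choices: the similarity is modeled as a softmax over negative Euclidean distances with a hypothetical `temperature` parameter, the two loss terms are summed with equal weight, and the lightweight U-Net that sits between the embedding and the loss is omitted.

```python
import math


def similarity_weights(feature, prototypes, temperature=1.0):
    """Softmax over negative distances to the prototypes (assumed form)."""
    logits = [-math.dist(feature, p) / temperature for p in prototypes]
    top = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def prior_embedding(weights, center_masks):
    """Scale each center mask by its weight: one channel per cluster."""
    return [[[w * v for v in row] for row in mask]
            for w, mask in zip(weights, center_masks)]


def dice_bce_loss(prob, target, eps=1e-6):
    """Dice loss plus binary cross-entropy (equal weighting is an assumption)."""
    p = [min(max(v, eps), 1.0 - eps) for row in prob for v in row]
    t = [float(v) for row in target for v in row]
    inter = sum(a * b for a, b in zip(p, t))
    dice = 1.0 - (2.0 * inter + eps) / (sum(p) + sum(t) + eps)
    bce = -sum(b * math.log(a) + (1.0 - b) * math.log(1.0 - a)
               for a, b in zip(p, t)) / len(p)
    return dice + bce
```

Because the weights depend only on where the image falls in the latent space, no section label is needed at inference time, which matches the goal stated above.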

(b) LFFA module

In order to fully utilize the capabilities of SAM and avoid retraining and wasting resources, an auxiliary branch is needed to adjust SAM to adapt to new scenarios. The researchers designed a learnable CNN branch consisting of three parts: the first is the residual block for local feature extraction; the second is the CNN block for adjusting the cross-branch attention of the image encoder; the third is the local feature fusion Transformer block that adapts the mask decoder to specific tasks.

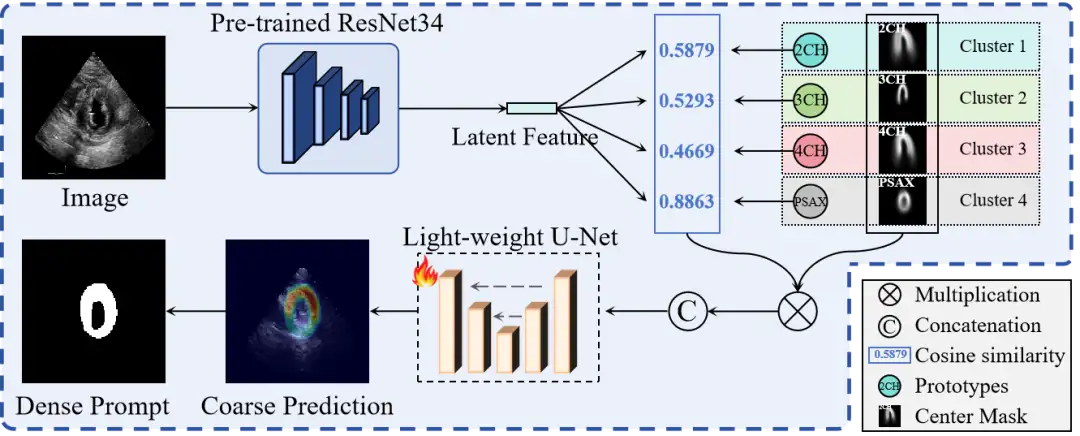

In the mask decoder, in addition to the two Transformer blocks of the original SAM, the researchers added three learnable blocks to fuse local features. The local features produced by the cross-branch attention CNN blocks at each layer of the image encoder are connected to the corresponding Transformer blocks of the mask decoder, and the features of each layer are fused through the LFFA module. The process is as follows.

Experimental conclusion: EchoONE is both accurate and robust in multi-section echocardiography segmentation

The researchers conducted extensive experiments on both internal and external datasets, which demonstrate the effectiveness of EchoONE.

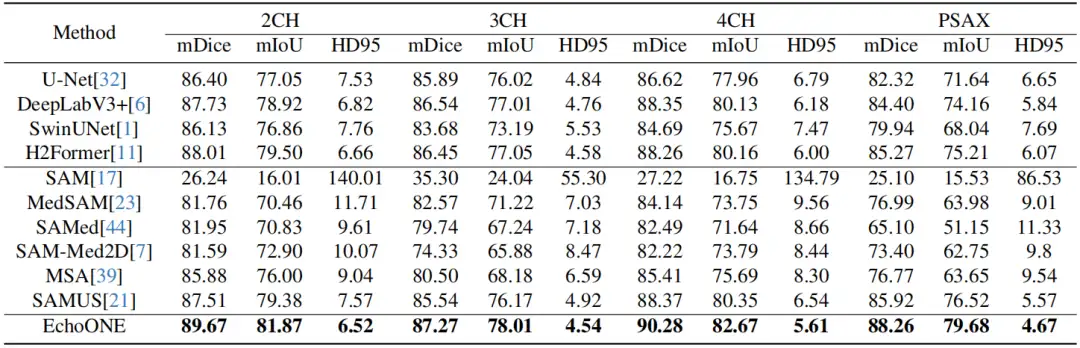

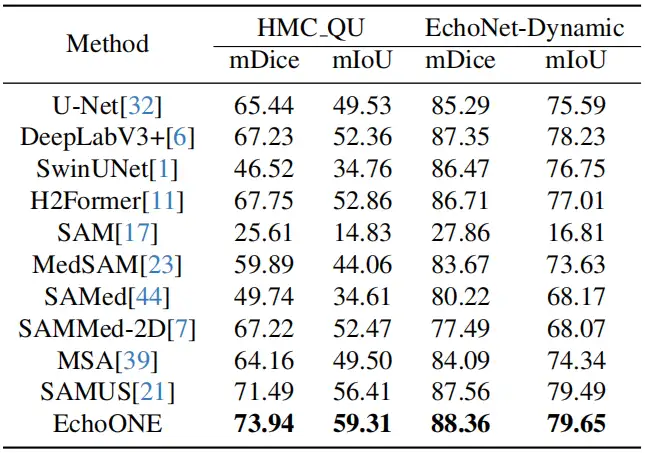

Robustness to multi-section tasks: The following table summarizes the performance of the models on each section of the internal test set. EchoONE outperforms the CNN-based, Transformer-based, and SAM-based models, achieving the best average Dice, IoU, and HD95 results.

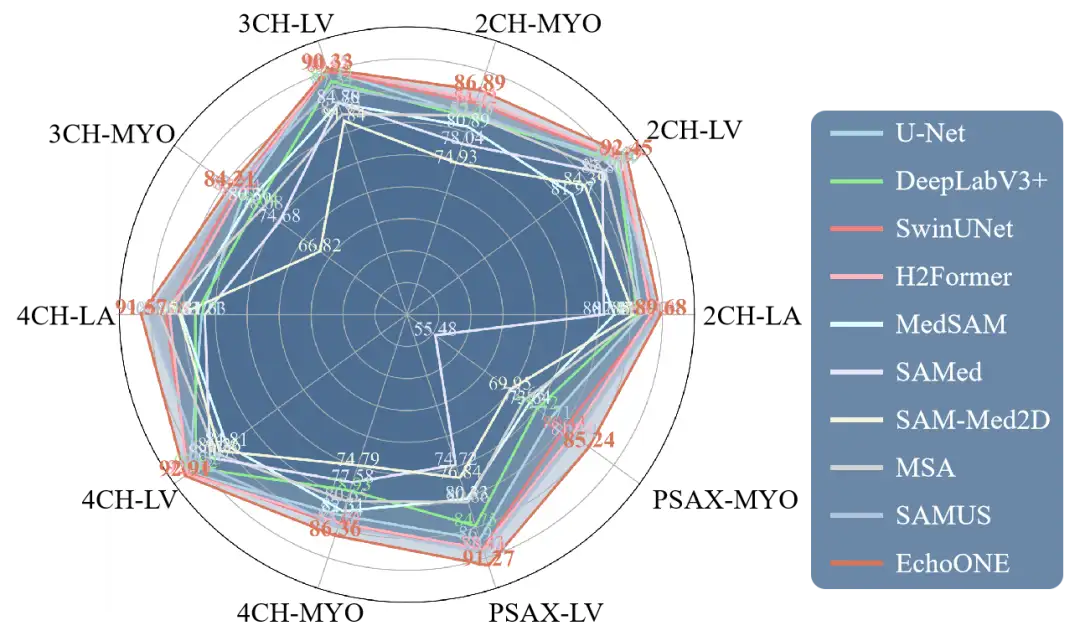
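For readers unfamiliar with the three metrics reported above, the following standard-library sketch shows one common way to compute them on binary masks stored as nested lists of 0/1 values. It is an illustration, not the evaluation code used in the study. HD95 conventions vary between toolkits; this version pools the boundary-to-boundary distances in both directions and takes their 95th percentile, which is an assumption.

```python
import math


def _overlap(pred, gt):
    """Return (intersection, predicted area, ground-truth area)."""
    inter = sum(1 for pr, gr in zip(pred, gt) for p, g in zip(pr, gr) if p and g)
    return inter, sum(map(sum, pred)), sum(map(sum, gt))


def dice_score(pred, gt):
    inter, a, b = _overlap(pred, gt)
    return 2.0 * inter / (a + b) if a + b else 1.0


def iou_score(pred, gt):
    inter, a, b = _overlap(pred, gt)
    union = a + b - inter
    return inter / union if union else 1.0


def boundary_points(mask):
    """Foreground pixels with at least one 4-neighbour outside the foreground."""
    h, w = len(mask), len(mask[0])

    def fg(r, c):
        return 0 <= r < h and 0 <= c < w and bool(mask[r][c])

    return [(r, c) for r in range(h) for c in range(w)
            if mask[r][c] and not (fg(r - 1, c) and fg(r + 1, c)
                                   and fg(r, c - 1) and fg(r, c + 1))]


def percentile(values, q):
    """Linearly interpolated percentile, with q in [0, 100]."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def hd95(pred, gt):
    """95th-percentile symmetric boundary distance in pixels (brute force)."""
    a, b = boundary_points(pred), boundary_points(gt)
    if not a or not b:
        return float("nan")  # undefined when either mask is empty
    a_to_b = [min(math.dist(p, q) for q in b) for p in a]
    b_to_a = [min(math.dist(q, p) for p in a) for q in b]
    return percentile(a_to_b + b_to_a, 95)
```

Dice and IoU reward overlap (higher is better), while HD95 measures boundary error (lower is better), which is why the three are usually reported together.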

Robustness to different heart structures: As shown in the radar chart below, EchoONE obtains higher Dice values than previous models on each cardiac structure (left atrium, left ventricle, and myocardium).

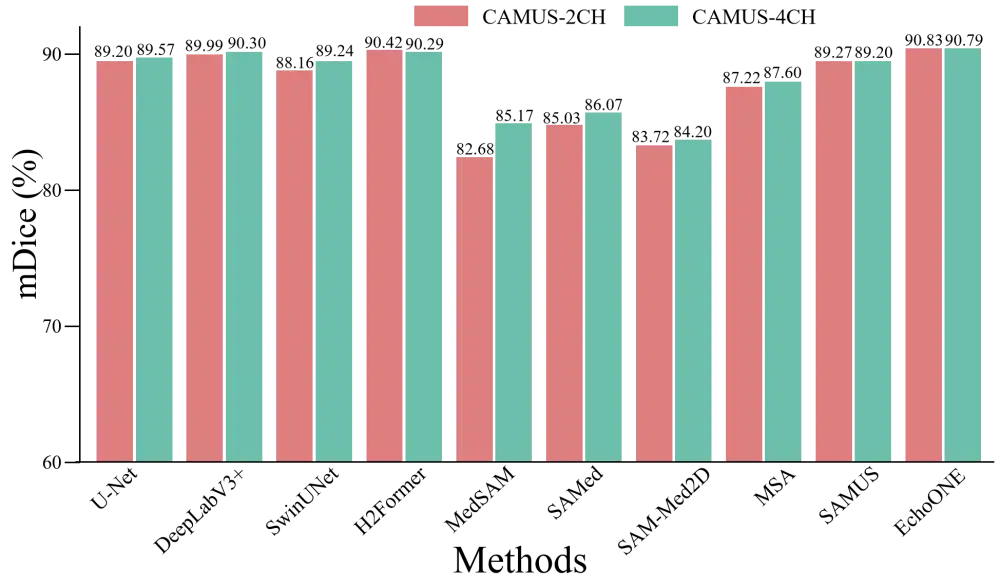

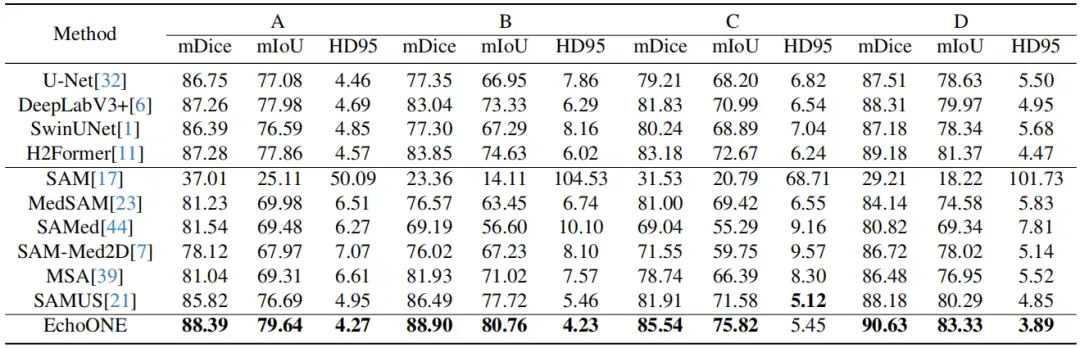

Robustness to cross-center data: The following figure and table show that EchoONE achieves the best performance on the test sets of the 5 internal centers.

External validation: As shown in the figure below, EchoONE still generalizes well on two external test sets that were not seen during training. On HMC-QU, which contains noticeably noisy and low-quality images, EchoONE reaches a Dice score of 73.94%, indicating its potential in real clinical practice.

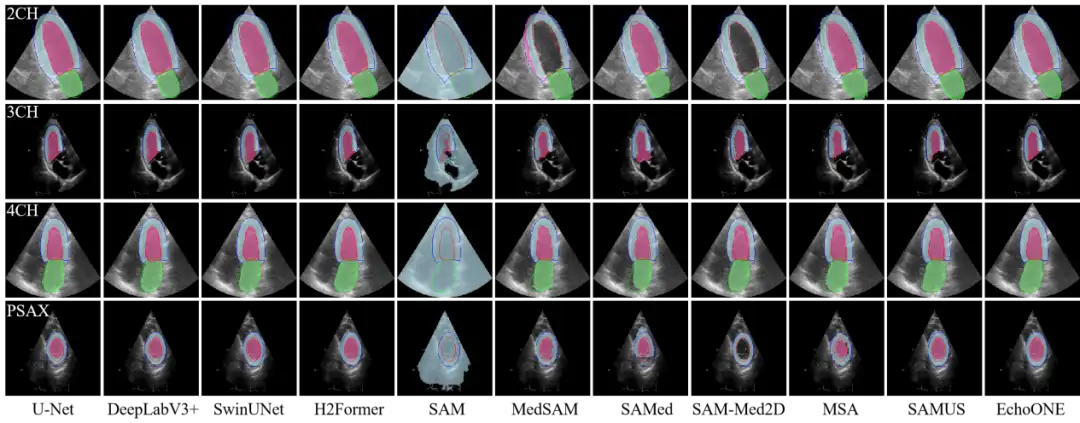

Visual analysis: The visual comparison shows that EchoONE not only produces reasonable segmentation regions but also stands out in contour refinement. This is because the model first generates a coarse segmentation for each section, which prompts it to focus on the relevant region and refine the boundaries, thereby improving the final segmentation.

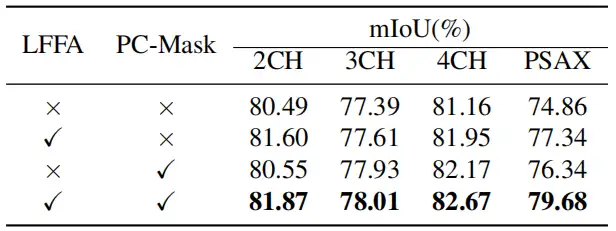

Ablation experiment results: To further study how the PC-Mask and LFFA modules contribute to model performance, the researchers conducted ablation experiments on the 5 internal datasets. The results show that the two modules improve the SAM architecture in complementary ways: PC-Mask exploits prior knowledge in a semantically aware manner, and LFFA fuses local features. Together they enable EchoONE to achieve accurate and robust performance on multi-section echocardiography segmentation.

The study can be extended to other medical imaging modalities

EchoONE addresses the complex challenge of multi-section segmentation. It introduces a dense prompt learning module, PC-Mask, that leverages prior structural knowledge in a composable way to provide effective section-specific semantic guidance during segmentation. In addition, the study proposes a learnable CNN local feature branch to tune the image encoder and adapt the mask decoder. The LFFA module not only improves the final performance but also speeds up convergence.

This is the first scheme to effectively segment all echocardiogram sections with a single robust model, which simplifies the application of artificial intelligence in clinical practice. Although the method has only been validated on ultrasound images, it has the potential to be extended to other medical imaging modalities that pose multi-section segmentation problems. In the future, the researchers will focus on improving generalization to more sections and on building a robust model for multi-section videos.

The leader of this research project, Xue Wufeng, is from the School of Biomedical Engineering, School of Medicine, Shenzhen University. His team has long conducted research on cardiac medical imaging and artificial intelligence, covering cardiac structure, function, and blood flow modeling, cardiac foundation models, large image-text models, and more. Visiting students, postdoctoral fellows, and researchers are welcome to join. Those interested can contact Xue Wufeng at "[email protected]".

* Xue Wufeng's personal homepage:

https://bme.szu.edu.cn/info/116