Toward Efficient Agents: Memory, Tool Learning, and Planning

Abstract

Recent years have seen growing interest in extending large language models into agentic systems. While the effectiveness of such agents has steadily improved, efficiency, a decisive factor for practical deployment, has often been neglected. In this work we therefore examine efficiency through three core components of agentic systems: memory, tool learning, and planning, taking into account costs such as latency, token consumption, and number of steps. The goal is a comprehensive study that directly addresses the efficiency of the agentic system itself. To this end, we review a broad range of recent approaches that differ in implementation but often converge on shared high-level principles, including bounding the context through compression and management, designing reinforcement learning rewards that minimize tool invocations, and applying controlled search mechanisms to improve efficiency; these are discussed in detail. Accordingly, we characterize efficiency in two complementary ways: by comparing effectiveness under a fixed cost budget, and by comparing cost at a comparable level of effectiveness. This efficiency trade-off can also be interpreted as a Pareto frontier between effectiveness and cost. From this perspective, we also analyze efficiency-oriented benchmarks by summarizing evaluation protocols for these components and consolidating commonly reported efficiency metrics from both benchmark and methodological studies. Finally, we discuss the key challenges and future research directions, with the aim of providing promising insights.

One-sentence Summary

Researchers from Shanghai AI Lab, Fudan, USTC, and others survey efficiency in LLM agents, proposing optimizations across memory (compression/management), tool learning (selective invocation), and planning (cost-aware search), emphasizing Pareto trade-offs between performance and resource use for real-world deployment.

Key Contributions

- The paper identifies efficiency as a critical but underexplored bottleneck in LLM-based agents, defining efficient agents as systems that maximize task success while minimizing resource costs across memory, tool usage, and planning, rather than simply reducing model size.

- It systematically categorizes and analyzes recent advances in three core areas: memory compression and retrieval, tool invocation minimization via reinforcement learning rewards, and planning optimization through controlled search to reduce step counts and token consumption.

- The survey introduces efficiency evaluation frameworks using Pareto trade-offs between effectiveness and cost, consolidates benchmark protocols and metrics for each component, and highlights open challenges to guide future research toward real-world deployability.
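The two complementary views of efficiency (best effectiveness under a fixed cost budget, lowest cost at a comparable effectiveness level) and the Pareto frontier between them can be illustrated with a small sketch. The agent names, costs, and scores below are invented for illustration and do not come from the survey; the helper names are hypothetical as well.

```python
from typing import List, Optional, Tuple

# (name, cost, effectiveness); all numbers are made up for illustration.
Agent = Tuple[str, float, float]


def pareto_frontier(agents: List[Agent]) -> List[Agent]:
    """Keep agents that no other agent dominates.

    An agent is dominated when another agent is at least as cheap and at
    least as effective, and strictly better on one of the two axes.
    """
    frontier = []
    for name, cost, eff in agents:
        dominated = any(
            (c <= cost and e >= eff) and (c < cost or e > eff)
            for _, c, e in agents
        )
        if not dominated:
            frontier.append((name, cost, eff))
    return sorted(frontier, key=lambda a: a[1])


def best_under_budget(agents: List[Agent], budget: float) -> Optional[Agent]:
    """View 1: highest effectiveness among agents with cost <= budget."""
    affordable = [a for a in agents if a[1] <= budget]
    return max(affordable, key=lambda a: a[2]) if affordable else None


def cheapest_at_level(agents: List[Agent], min_eff: float) -> Optional[Agent]:
    """View 2: lowest cost among agents with effectiveness >= min_eff."""
    eligible = [a for a in agents if a[2] >= min_eff]
    return min(eligible, key=lambda a: a[1]) if eligible else None


agents = [
    ("A", 1000.0, 0.60),
    ("B", 1500.0, 0.72),
    ("C", 2500.0, 0.70),  # dominated by B: more expensive and less effective
    ("D", 4000.0, 0.80),
]

print([a[0] for a in pareto_frontier(agents)])
print(best_under_budget(agents, budget=2500.0))
print(cheapest_at_level(agents, min_eff=0.70))
```

In this toy data, agent C is dropped from the frontier because B is both cheaper and more effective, and both budget-style queries select B.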

Introduction

The authors situate the survey in the ongoing shift from static LLMs to agentic systems that perform multi-step, tool-augmented reasoning in real-world environments. Such agents enable complex workflows, but their recursive nature (repeatedly invoking memory, tools, and planning) leads to rapidly compounding token consumption, latency, and cost, which makes efficiency critical for practical deployment. Prior work on efficient LLMs does not address these agent-specific bottlenecks, and existing efficiency metrics lack standardization, which hinders fair comparison. The main contribution is a systematic survey that categorizes efficiency improvements across memory (compression, retrieval, management), tool learning (selection, invocation, integration), and planning (search, decomposition, multi-agent coordination). The survey also proposes a Pareto-based cost-effectiveness framework and identifies key challenges such as latent reasoning for agents and deployment-aware design.

Dataset

The authors use a diverse set of benchmarks to evaluate tool learning across selection, parameter infilling, multi-tool orchestration, and agentic reasoning. Here’s how the datasets are composed and used:

Selection & Parameter Infilling Benchmarks:

- MetaTool [42]: Evaluates tool selection decisions across diverse scenarios, including reliability and multi-tool requirements.

- Berkeley Function-Calling Leaderboard (BFCL) [88]: Features real-world tools in multi-turn, multi-step dialogues.

- API-Bank [59]: Contains 73 manually annotated tools suited for natural dialogue contexts.

Multi-Tool Composition Benchmarks:

- NesTools [32]: Classifies nested tool-calling problems and provides a taxonomy for long-horizon coordination.

- τ-Bench [173] & τ²-Bench [6]: Focus on retail, airline, and telecom domains with user-initiated tool calls.

- ToolBench [95]: Aggregates 16,000+ APIs from RapidAPI; suffers from reproducibility issues due to unstable online services.

- MGToolBench [150]: Curates ToolBench with multiple granularities to better align training instructions with real user queries.

Fine-Grained & System-Level Evaluation:

- T-Eval [13]: Decomposes tool use into six capabilities (e.g., planning, reasoning) for step-by-step failure analysis.

- StableToolBench [30]: Uses a virtual API server with caching and LLM-based simulation to ensure reproducible, efficient evaluation.

Model Context Protocol (MCP) Benchmarks:

- MCP-RADAR [26]: Measures efficiency via tool selection, resource use, and speed, alongside accuracy.

- MCP-Bench [136]: Uses LLM-as-a-Judge to score parallelism and redundancy reduction in tool execution.

Agentic Tool Learning Benchmarks:

- SimpleQA [139]: Tests factually correct short answers to complex questions, requiring iterative search API use.

- BrowseComp [140]: Human-created challenging questions designed to force reliance on browsing/search tools.

- SealQA [89]: Evaluates search-augmented LLMs on noisy, conflicting web results; its SEAL-0 subset (111 questions) stumps even frontier models.

The authors do not train on these datasets directly but use them to evaluate model behavior across efficiency, reliability, and compositional reasoning. No training split or mixture ratios are specified — these are purely evaluation benchmarks. No cropping or metadata construction is mentioned; processing focuses on simulation, annotation, or protocol adherence to ensure consistent, reproducible results.

Method

The authors present a comprehensive framework for efficient LLM-based agents, structured around three core components: memory, tool learning, and planning. At the center of the architecture, a pure LLM serves as the agent's cognitive core, interacting with external modules for memory and tool learning, which in turn influence the planning process. The overall system is designed to maximize task success while minimizing computational costs, with memory and tool learning acting as foundational enablers for efficient planning.

The memory component is a critical subsystem that mitigates the computational and token overhead associated with long interaction histories. It operates through a lifecycle comprising three phases: construction, management, and access. Memory construction involves compressing the raw interaction context into a more compact form, which can be stored in either working memory or external memory. Working memory, directly accessible during generation, includes textual memory (e.g., summaries, key events) and latent memory (e.g., compressed activations, KV caches), both of which are designed to reduce the context length the LLM must process. External memory, stored outside the model, provides unbounded storage and includes item-based, graph-based, and hierarchical structures. The management phase curates this accumulating memory store using rule-based, LLM-based, or hybrid strategies to control latency and prevent unbounded growth. Finally, memory access retrieves and integrates only the most relevant subset of memories into the agent's context, employing various retrieval mechanisms such as rule-enhanced, graph-based, or LLM-based methods, and integration techniques like textual compression or latent injection.
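The construction, management, and access phases described above can be sketched as a toy class. This is only an illustration under strong simplifying assumptions: compression is naive truncation, management is a rule-based cap that evicts the oldest items, and access ranks items by word overlap with the query. `ToyMemory`, `capacity`, and `max_words` are hypothetical names, not part of any system covered by the survey.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MemoryItem:
    text: str
    step: int  # interaction step at which the item was written


@dataclass
class ToyMemory:
    capacity: int = 3   # hypothetical cap on the number of stored items
    max_words: int = 8  # hypothetical cap on words kept per item
    items: List[MemoryItem] = field(default_factory=list)

    def construct(self, raw: str, step: int) -> None:
        """Construction: compress raw context (here, naive truncation)."""
        compressed = " ".join(raw.split()[: self.max_words])
        self.items.append(MemoryItem(compressed, step))
        self.manage()

    def manage(self) -> None:
        """Management: rule-based eviction that keeps the newest items."""
        if len(self.items) > self.capacity:
            self.items = self.items[-self.capacity:]

    def access(self, query: str, k: int = 1) -> List[str]:
        """Access: return the k items sharing the most words with the query."""
        q = set(query.lower().split())
        ranked = sorted(
            self.items,
            key=lambda m: len(q & set(m.text.lower().split())),
            reverse=True,
        )
        return [m.text for m in ranked[:k]]


mem = ToyMemory(capacity=2)
mem.construct("user prefers window seats on long flights", step=1)
mem.construct("user is allergic to peanuts", step=2)
mem.construct("user booked a hotel in Berlin for March", step=3)

# Only the retrieved subset would be placed into the agent's context.
print(mem.access("which hotel did the user book"))
```

A real system would replace truncation with summarization or latent compression and word overlap with a learned retriever, but the cost structure is the same: the store stays bounded and only a small, relevant subset reaches the context.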

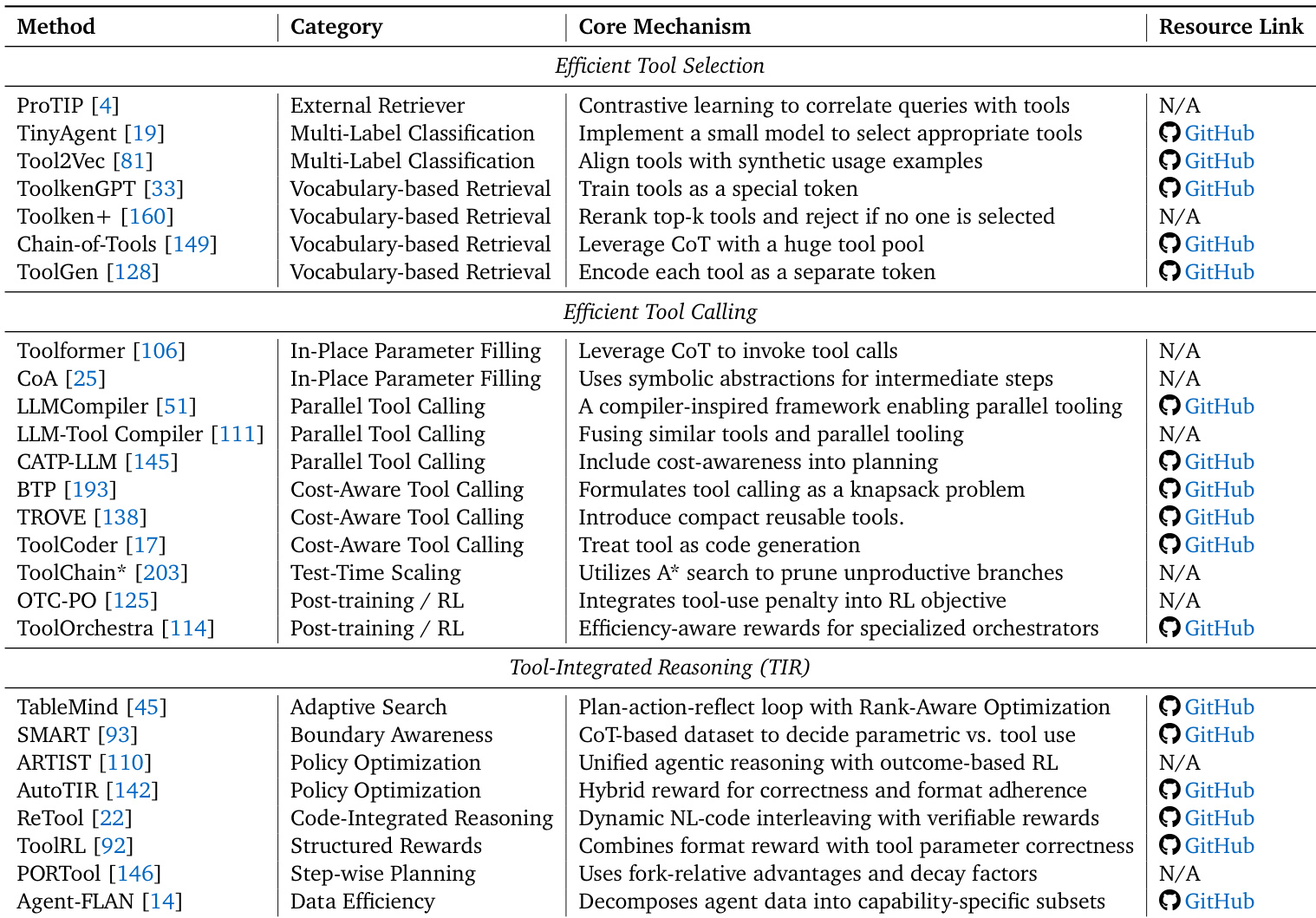

Tool learning is another key module that enables the agent to interact with external tools. The process begins with tool selection, where the agent identifies the most relevant tools from a large pool. This is achieved through three primary strategies: an external retriever that ranks tools based on semantic similarity, a multi-label classification model that predicts relevant tools, or a vocabulary-based retrieval system that treats tools as special tokens. Once candidates are selected, the agent proceeds to tool calling. This phase focuses on efficient execution, with strategies like in-place parameter filling, parallel tool calling to reduce latency, and cost-aware calling to minimize invocation expenses. The final stage, tool-integrated reasoning, ensures that the agent's reasoning process is efficient and effective. This involves selective invocation, where the agent decides when to use a tool versus relying on internal knowledge, and policy optimization, which uses reinforcement learning to learn optimal tool-use strategies that balance task success with resource parsimony.
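The external-retriever strategy for tool selection can be sketched as ranking tool descriptions against the query and keeping the top k. The sketch below uses bag-of-words cosine similarity as a stand-in for a learned embedding model, which is an assumption made to keep the example dependency-free; the tool names and descriptions are invented.

```python
import math
from collections import Counter
from typing import Dict, List


def bow(text: str) -> Counter:
    """Bag-of-words vector; a toy stand-in for a semantic embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def select_tools(query: str, tools: Dict[str, str], k: int = 2) -> List[str]:
    """Rank tools by similarity between the query and each description."""
    q = bow(query)
    ranked = sorted(tools, key=lambda name: cosine(q, bow(tools[name])), reverse=True)
    return ranked[:k]


# Hypothetical tool pool: name -> natural-language description.
tools = {
    "get_weather": "get the current weather forecast for a city",
    "search_flights": "search flights between two airports on a date",
    "convert_currency": "convert an amount between two currencies",
}

top = select_tools("what is the weather forecast in Paris", tools, k=1)
print(top)
```

Because only the selected tools' schemas need to be placed in the prompt, the token cost of tool descriptions scales with k rather than with the size of the tool pool.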

The planning module is the central engine that orchestrates the agent's actions. It frames deliberation as a resource-constrained control problem, where the agent must balance the marginal utility of a refined plan against its computational cost. This is achieved through two main paradigms: single-agent planning and multi-agent collaborative planning. Single-agent planning focuses on minimizing the cost of individual deliberation trajectories, using inference-time strategies like adaptive budgeting, structured search, and task decomposition, as well as learning-based evolution through policy optimization and skill acquisition. Multi-agent collaborative planning optimizes the interaction topology and communication protocols to reduce coordination overhead, with techniques such as topological sparsification, protocol compression, and distilling collective intelligence into a single-agent model. The planning process is informed by the agent's memory and tool learning capabilities, creating a synergistic system where each component amortizes the cost of the others. The overall architecture is designed to be modular and scalable, with the core components of memory, tool learning, and planning working in concert to achieve high performance with minimal resource consumption.
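The idea of weighing the marginal utility of a refined plan against its computational cost can be sketched as a simple stopping rule. This is an illustrative assumption about how adaptive budgeting might be expressed, not a method from the survey: `refine` is a hypothetical function that estimates plan quality after one more deliberation step, and `value_per_quality` and `step_cost` are made-up parameters in the same units.

```python
from typing import Callable, Tuple


def plan_with_budget(
    refine: Callable[[float], float],
    initial_quality: float,
    step_cost: float,
    value_per_quality: float,
    max_steps: int,
) -> Tuple[float, int]:
    """Keep refining while the estimated gain is worth more than one step."""
    quality, steps = initial_quality, 0
    while steps < max_steps:
        candidate = refine(quality)
        marginal_utility = value_per_quality * (candidate - quality)
        if marginal_utility <= step_cost:
            break  # another step would cost more than it is expected to return
        quality, steps = candidate, steps + 1
    return quality, steps


# Toy refinement model with diminishing returns: each step closes half of
# the remaining gap to a perfect plan (quality 1.0).
def halve_gap(q: float) -> float:
    return q + 0.5 * (1.0 - q)


quality, steps = plan_with_budget(
    refine=halve_gap,
    initial_quality=0.25,
    step_cost=0.1,
    value_per_quality=1.0,
    max_steps=10,
)
print(quality, steps)
```

With these toy numbers the gains per step are 0.375, 0.1875, and 0.09375, so the loop stops after two refinements even though ten were allowed.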

Experiment

- Hybrid memory management balances cost-efficiency and relevance by invoking LLMs selectively, though it increases system complexity and may incur latency during LLM calls.

- Memory compression trades off performance for cost: LightMem shows milder compression preserves accuracy better than aggressive compression, highlighting the need for balanced extraction strategies.

- Online memory updates (e.g., A-MEM) enable real-time adaptation but raise latency and cost; hybrid approaches (e.g., LightMem) offload heavy computation offline, reducing inference time while maintaining cost parity.

- Tool selection favors external retrievers for dynamic tool pools (generalizable, plug-and-play) and MLC/vocab-based methods for static sets (more efficient, but require fine-tuning).

- Tool calling improves efficiency via in-place parameter filling, cost-aware invocation, test-time scaling, and parallel execution; parallel calling can force additional refinement rounds when task dependencies are misjudged.

- Single-agent strategies (adaptive control, structured search, task decomposition, learning-based evolution) reduce cost and redundancy but risk misfires, overhead, error propagation, or maintenance burden.

- Memory effectiveness is benchmarked via downstream tasks (HotpotQA, GAIA) or direct memory tests (LoCoMo, LongMemEval); efficiency is measured via token cost, runtime, GPU memory, LLM call frequency, and step efficiency (Evo-Memory, MemBench).

- Planning effectiveness is evaluated on benchmarks like SWE-Bench and WebArena; efficiency metrics include token usage, execution time, tool-call turns, cost-of-pass (TPS-Bench), and search depth/breadth (SwiftSage, LATS, CATS).
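The efficiency metrics listed above can be aggregated from per-run logs. The sketch below is a minimal illustration; the `Run` record and the price are hypothetical. It assumes cost-of-pass means the expected monetary cost per successful run (average cost per attempt divided by success rate), since the summary does not spell out the formula.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Run:
    success: bool
    tokens: int
    tool_calls: int
    seconds: float


def summarize(runs: List[Run], usd_per_1k_tokens: float) -> Dict[str, float]:
    """Aggregate common efficiency metrics over a set of agent runs."""
    n = len(runs)
    success_rate = sum(r.success for r in runs) / n
    avg_tokens = sum(r.tokens for r in runs) / n
    avg_cost = avg_tokens / 1000 * usd_per_1k_tokens
    # Assumed definition: average cost per attempt / success rate.
    cost_of_pass = avg_cost / success_rate if success_rate > 0 else float("inf")
    return {
        "success_rate": success_rate,
        "avg_tokens": avg_tokens,
        "avg_tool_calls": sum(r.tool_calls for r in runs) / n,
        "avg_seconds": sum(r.seconds for r in runs) / n,
        "cost_of_pass_usd": cost_of_pass,
    }


# Invented logs for illustration only.
runs = [
    Run(success=True, tokens=1000, tool_calls=2, seconds=4.0),
    Run(success=False, tokens=3000, tool_calls=6, seconds=12.0),
    Run(success=True, tokens=2000, tool_calls=3, seconds=6.0),
    Run(success=False, tokens=2000, tool_calls=5, seconds=10.0),
]

report = summarize(runs, usd_per_1k_tokens=0.5)
print(report)
```

Reporting cost alongside success rate, rather than either one alone, is what allows two agents to be compared on the effectiveness-cost trade-off.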

The authors use a table to categorize methods for efficient tool selection, tool calling, and tool-integrated reasoning in LLM-based agents. For tool selection, external retrievers and vocabulary-based approaches offer different efficiency trade-offs depending on how dynamic the candidate pool is. For tool calling, techniques such as cost-aware calling and test-time scaling improve performance while managing computational cost. For tool-integrated reasoning, methods such as adaptive search and policy optimization enhance planning efficiency through structured and reward-driven strategies.
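A reward-driven strategy for reducing tool invocations can be sketched as a shaped reward that combines task success with a per-call penalty. The linear form, the `penalty` weight, and the `free_calls` allowance below are illustrative assumptions; the summary does not specify the reward functions used by the surveyed methods.

```python
def shaped_reward(
    success: bool,
    num_tool_calls: int,
    penalty: float = 0.25,
    free_calls: int = 0,
) -> float:
    """Task reward minus a linear penalty on tool calls beyond an allowance."""
    task_reward = 1.0 if success else 0.0
    extra_calls = max(0, num_tool_calls - free_calls)
    return task_reward - penalty * extra_calls


# Two successful trajectories: the one with fewer tool calls scores higher,
# so a policy optimized on this reward is nudged toward parsimony.
print(shaped_reward(True, num_tool_calls=2))
print(shaped_reward(True, num_tool_calls=5))
```

The penalty weight sets the trade-off: too large and the policy may skip tools it needs, too small and redundant calls go unpunished.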