GenEnv: Difficulty-Aligned Co-Evolution Between LLM Agents and Environment Simulators

Jiacheng Guo Ling Yang Peter Chen Qixin Xiao Yinjie Wang Xinzhe Juan Jiahao Qiu Ke Shen Mengdi Wang

Abstract

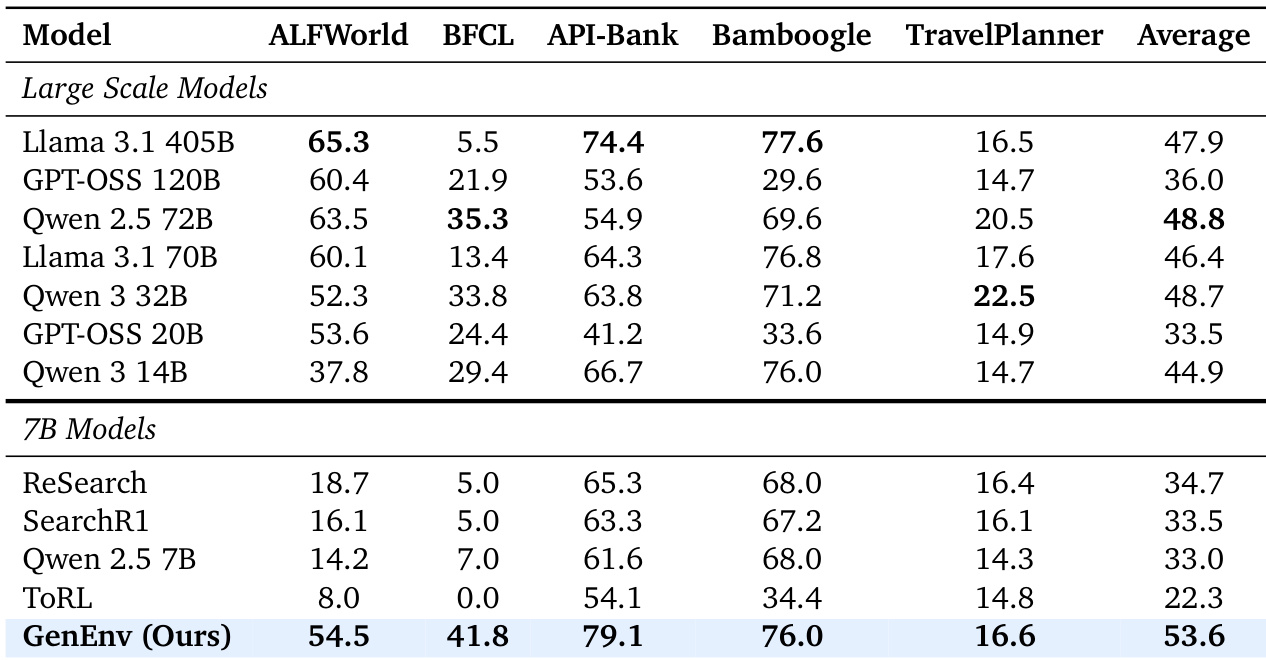

Training capable Large Language Model (LLM) agents is critically bottlenecked by the high cost and static nature of real-world interaction data. We address this problem by introducing GenEnv, a framework that establishes a difficulty-aligned co-evolutionary game between an agent and a scalable, generative environment simulator. Unlike traditional approaches that evolve models on static datasets, GenEnv enables the data itself to evolve: the simulator acts as a dynamic curriculum policy and continuously generates tasks tailored to the agent's "zone of proximal development". This process is guided by a simple but effective α-Curriculum Reward, which aligns task difficulty with the agent's current capabilities. We evaluate GenEnv on five benchmarks: API-Bank, ALFWorld, BFCL, Bamboogle, and TravelPlanner. Across these tasks, GenEnv improves agent performance by up to +40.3% over 7B baselines and matches or exceeds the average performance of larger models. Compared with offline data augmentation based on Gemini 2.5 Pro, GenEnv achieves better performance while using 3.3× less data. By shifting from static supervision to adaptive simulation, GenEnv offers a data-efficient path to scaling agent capabilities.

One-sentence Summary

Researchers from Princeton University, ByteDance Seed, and other institutions introduce GenEnv, a framework that establishes a difficulty-aligned co-evolutionary game between LLM agents and environment simulators, using an α-Curriculum Reward to dynamically generate tasks matched to agent capabilities; across five agent benchmarks including API-Bank and ALFWorld, it outperforms 7B baselines by up to 40.3% while using 3.3× less data than Gemini-based augmentation.

Key Contributions

- Addresses the bottleneck of costly, static real-world interaction data for training LLM agents by introducing GenEnv, a framework that establishes a difficulty-aligned co-evolutionary game between an agent and a generative environment simulator.

- Proposes a data-evolving paradigm where the simulator dynamically generates tasks tailored to the agent's capabilities using an α-Curriculum Reward, replacing static datasets with adaptive simulation for continuous capability progression.

- Demonstrates significant improvements across five benchmarks (API-Bank, ALFWorld, BFCL, Bamboogle, TravelPlanner), achieving up to +40.3% gains over 7B baselines and matching larger models while using 3.3× less data than Gemini 2.5 Pro-based augmentation.

Introduction

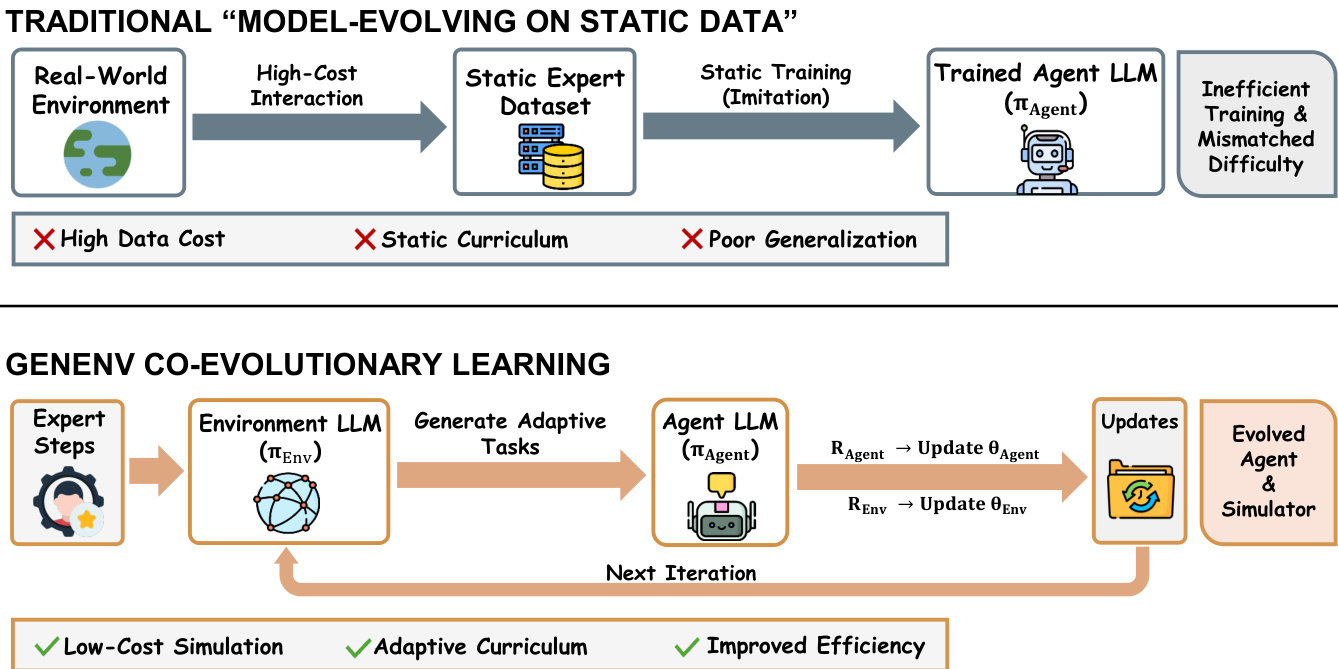

Training capable LLM agents is critically bottlenecked by expensive static interaction data that limits exploration beyond expert demonstrations, hindering robust generalization in real-world applications. Prior methods relying on fixed datasets or offline synthetic data augmentation fail to adapt task difficulty to the agent's evolving skill level, leading to inefficient learning and capability plateaus. The authors introduce GenEnv, which establishes a difficulty-aligned co-evolutionary loop where a generative environment simulator dynamically tailors tasks to the agent's zone of proximal development using an α-Curriculum Reward, enabling efficient capability scaling through adaptive simulation rather than static supervision.

Dataset

The authors maintain two dynamically evolving datasets generated through agent-environment interactions:

- Dataset composition and sources: Training data originates from online interactions in which an environment policy (π_env) generates task batches (𝒯_t), each containing multiple task instances with prompts, evaluation specs, and optional ground-truth actions. The agent then produces interaction traces (rollouts), which are stored in two pools:

  - Agent training pool (𝒟_train): Collects valid interaction traces (well-formed, evaluable trajectories with final actions and rewards).

  - Environment SFT pool (𝒟_env): Stores environment generations as (conditioning context → task instance) pairs, weighted by environment reward (e.g., ∝ exp(λR_env)).

- Key subset details:

  - GenEnv-Random: Uses Qwen2.5-7B-Instruct to dynamically generate 4 task variations per prompt per epoch. The generating model is not updated (fixed weights).

  - GenEnv-Static: Pre-generates 5 variations for each of the 544 base training samples, creating a fixed 3,264-sample dataset (consistent with the 544 originals plus 5 × 544 = 2,720 variations).

  - Gemini-Offline: Gemini 2.5 Pro generates filtered variations: 957 samples (1.76× base) for "Gemini-2x" and 1,777 samples (3.27× base) for "Gemini-4x".

- Data usage in training: 𝒟_train forms an evolving mixture of base data, historical traces, and new on-policy traces. The agent samples from this pool for continuous learning. 𝒟_env trains π_env via reward-weighted regression (RWR), adjusting task difficulty to target a success rate α. Both pools are updated each epoch, closing the data-evolution loop.

- Processing details: Traces enter 𝒟_train only if they are valid (e.g., tool calls parse correctly and outputs match the schema). Records in 𝒟_env are weighted by exp(λR_env), with λ = 1.0. No cropping is applied; metadata such as success statistics informs π_env's conditioning context.
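The two pools described above can be pictured with a small sketch. It is only an illustration under stated assumptions: the `Trace` and `Pools` classes, their field names, and the `is_valid` check are hypothetical and do not come from the authors' code; only the validity gate for 𝒟_train and the exp(λR_env) weighting with λ = 1.0 are taken from the text.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Trace:
    """One agent rollout. All field names are illustrative assumptions."""
    prompt: str
    final_action: Optional[str]  # None if the rollout never produced an action
    reward: Optional[float]      # None if the trace could not be evaluated
    parsed_ok: bool              # True if tool calls parsed and matched the schema


def is_valid(trace: Trace) -> bool:
    """A trace is kept only if it is well-formed and evaluable."""
    return (
        trace.parsed_ok
        and trace.final_action is not None
        and trace.reward is not None
    )


@dataclass
class Pools:
    """Hypothetical container for the agent pool and the environment SFT pool."""
    train: List[Trace] = field(default_factory=list)
    env: List[Tuple[str, str, float]] = field(default_factory=list)

    def add_traces(self, traces: List[Trace]) -> int:
        """Append only valid traces to the agent pool; return how many were kept."""
        kept = [t for t in traces if is_valid(t)]
        self.train.extend(kept)
        return len(kept)

    def add_env_generation(
        self, context: str, task: str, r_env: float, lam: float = 1.0
    ) -> None:
        """Store a (context, task) pair with weight exp(lam * r_env)."""
        self.env.append((context, task, math.exp(lam * r_env)))
```

Keeping the weight next to each environment record, rather than recomputing it at training time, is one simple design choice; a real pipeline might store the raw reward instead.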

Method

The authors propose a co-evolutionary framework called GenEnv, which recasts agent training as a two-player curriculum game between an Agent Policy $\pi_{\text{agent}}$ and an Environment Policy $\pi_{\text{env}}$. Unlike traditional methods that rely on static expert datasets, GenEnv dynamically generates training data through an LLM-based environment simulator, enabling an adaptive curriculum that evolves in tandem with the agent's capabilities. This paradigm shift replaces costly real-world interaction with low-cost, scalable simulation, as illustrated in the framework diagram comparing traditional static training to GenEnv's co-evolutionary loop.

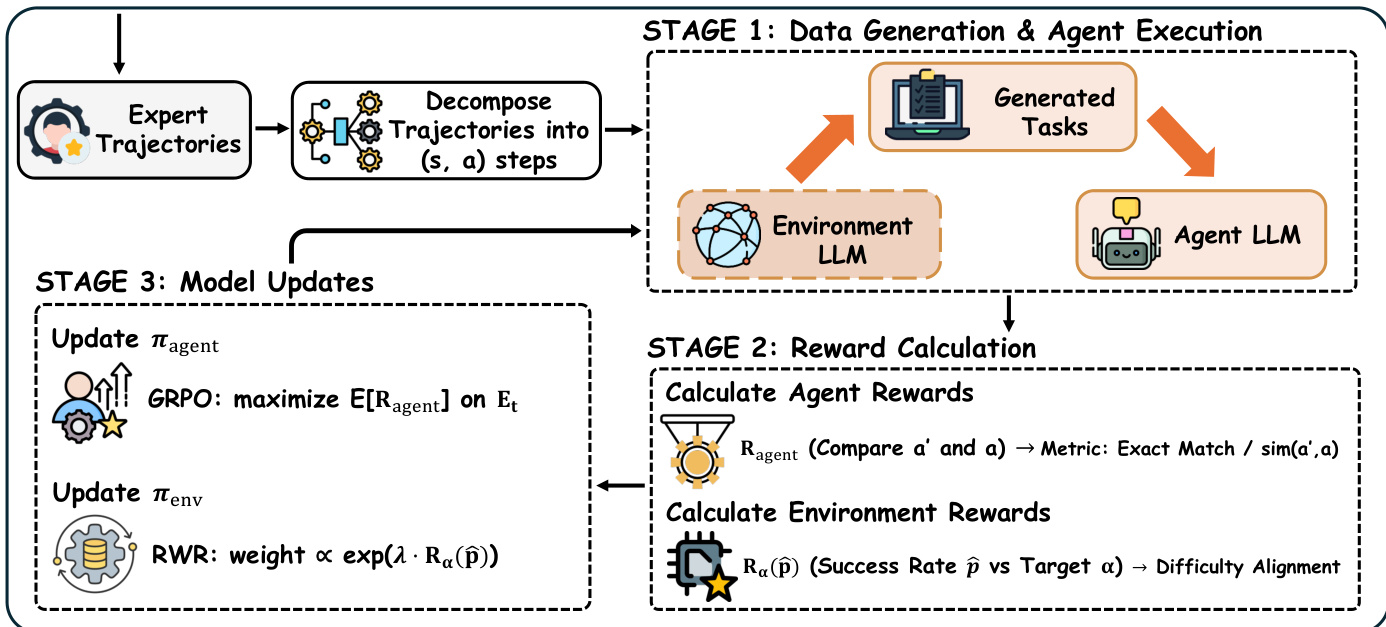

The training process unfolds in three stages per iteration. In Stage 1, the Environment LLM generates a batch of tasks conditioned on the agent's historical performance, and the Agent LLM attempts them, producing execution traces. In Stage 2, these traces are evaluated to compute two rewards: the agent reward $R_{\text{agent}}$, which measures task success via exact match for structured actions or soft similarity for free-form outputs, and the environment reward $R_{\text{env}}$, which measures how well task difficulty is aligned with the agent. The environment reward is defined as $R_{\text{env}}(\hat{p}) = \exp\left(-\beta(\hat{p} - \alpha)^2\right)$, where $\hat{p}$ is the empirical success rate over the batch and $\alpha$ is the target success rate (typically 0.5), so that tasks are neither too easy nor too hard. A difficulty filter excludes batches where $|\hat{p} - \alpha| > k_{\min}$ to prevent overfitting to transient performance spikes.
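The environment reward and the difficulty filter from Stage 2 can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and the default values for `beta` and `k_min` are placeholders chosen for the example, since the text above does not state them.

```python
import math
from typing import Sequence


def empirical_success_rate(successes: Sequence[bool]) -> float:
    """p_hat: fraction of tasks in the batch that the agent solved."""
    return sum(successes) / len(successes)


def env_reward(p_hat: float, alpha: float = 0.5, beta: float = 10.0) -> float:
    """alpha-Curriculum Reward: exp(-beta * (p_hat - alpha)^2).

    beta = 10.0 is an assumed value used only for illustration.
    """
    return math.exp(-beta * (p_hat - alpha) ** 2)


def passes_difficulty_filter(
    p_hat: float, alpha: float = 0.5, k_min: float = 0.4
) -> bool:
    """Keep a batch unless |p_hat - alpha| > k_min (k_min = 0.4 is assumed)."""
    return abs(p_hat - alpha) <= k_min


if __name__ == "__main__":
    batch = [True, False, True, True]  # 3 of 4 tasks solved
    p_hat = empirical_success_rate(batch)
    print(p_hat, env_reward(p_hat), passes_difficulty_filter(p_hat))
```

The reward peaks at 1.0 when the batch success rate equals the target α and decays symmetrically on both sides, so batches that are too easy and batches that are too hard are penalized alike.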

In Stage 3, both policies are updated simultaneously. The agent policy is optimized via Group Relative Policy Optimization (GRPO) to maximize $\mathbb{E}[R_{\text{agent}}]$, while the environment policy is fine-tuned using Reward-Weighted Regression (RWR) on a weighted supervised fine-tuning set, where weights are proportional to $\exp(\lambda R_{\text{env}}(\hat{p}))$. To ensure stability, environment updates are regularized with a KL penalty relative to the initial simulator and capped by a maximum KL threshold. Valid agent traces and weighted environment generations are then aggregated into their respective training pools for subsequent iterations, closing the co-evolutionary loop.
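The two update signals in Stage 3 can be illustrated numerically. The sketch below assumes the standard GRPO recipe of normalizing rewards within a group of rollouts for the same task (subtract the group mean, divide by the group standard deviation) and computes RWR weights proportional to exp(λR_env). The function names, the `eps` stabilizer, and the choice to normalize the weights so they sum to one are assumptions made for the example; the KL penalty and the actual gradient steps are omitted.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence


def group_relative_advantages(
    rewards: Sequence[float], eps: float = 1e-8
) -> List[float]:
    """GRPO-style advantages: standardize rewards within one rollout group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    return [(r - mu) / (sigma + eps) for r in rewards]


def rwr_weights(env_rewards: Sequence[float], lam: float = 1.0) -> List[float]:
    """RWR sample weights proportional to exp(lam * R_env), normalized to sum to 1."""
    raw = [math.exp(lam * r) for r in env_rewards]
    total = sum(raw)
    return [w / total for w in raw]


if __name__ == "__main__":
    # Four rollouts for the same task, two successes and two failures.
    print(group_relative_advantages([1.0, 0.0, 1.0, 0.0]))
    # Three task batches with different environment rewards.
    print(rwr_weights([1.0, 0.5, 0.1]))
```

Note that when every rollout in a group receives the same reward, all advantages are zero and the group contributes no gradient, which is one way to see why tasks the agent always solves or always fails are uninformative.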

This architecture enables the environment simulator to learn to generate tasks that target the agent's "zone of proximal development," maximizing the learning signal for the agent while maintaining data efficiency. The authors' theoretical analysis shows that tasks of intermediate difficulty (where the success probability is $p(\tau) = 0.5$) yield the strongest gradient signal for policy updates, and that the α-Curriculum Reward provides a statistically consistent signal for ranking task difficulty, so the simulator converges toward generating optimally challenging tasks over time.
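One common way to see why intermediate difficulty gives the strongest signal is sketched below. It assumes a binary success reward $R \in \{0, 1\}$ with success probability $p$ and a mean baseline; this is an illustrative argument under those assumptions and not necessarily the proof given by the authors.

```latex
% Binary reward: R = 1 with probability p, R = 0 otherwise.
\mathbb{E}[R] = p, \qquad
\operatorname{Var}[R] = \mathbb{E}\big[(R - p)^2\big] = p(1 - p).

% The advantage (R - p) drives the policy-gradient update, and its
% second moment is maximized where the derivative vanishes:
\frac{d}{dp}\, p(1 - p) = 1 - 2p = 0
\;\Longrightarrow\; p = \tfrac{1}{2},
\qquad \operatorname{Var}[R]\big|_{p = 1/2} = \tfrac{1}{4}.

% At the extremes the signal disappears:
\operatorname{Var}[R]\big|_{p = 0} = \operatorname{Var}[R]\big|_{p = 1} = 0.
```

Under these assumptions, a task the agent solves about half the time produces the largest spread in advantages, which matches the choice of $\alpha = 0.5$ as the target success rate.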

Experiment

- GenEnv (7B) outperforms all 7B baselines across five benchmarks, achieving 54.5% on ALFWorld (vs. 14.2% for the base model, a 40.3-point improvement) and 79.1% on API-Bank, with an average score of 53.6 that surpasses the average performance of several 72B/405B models.

- The co-evolutionary process produces an emergent curriculum: task complexity (measured by response length) increases by 49% during training while success rates remain stable, consistent with difficulty-aligned progression.

- GenEnv demonstrates superior data efficiency, exceeding Gemini-Offline (which uses 3.3× the data) by 2.0% in validation score using only dynamically generated on-policy data, and outperforming GenEnv-Random by 12.3%, which the authors attribute to reward-guided simulation.

- The α-Curriculum Reward successfully calibrates task difficulty, with agent success rates converging to the target band [0.4, 0.6] around α=0.5, ensuring tasks remain in the zone of proximal development throughout training.

The authors use GenEnv, a 7B model trained with a co-evolving environment simulator, to outperform other 7B baselines and match or exceed the average performance of several larger models up to 405B parameters across five diverse benchmarks. Results show GenEnv achieves 54.5% on ALFWorld and 79.1% on API-Bank, significantly improving over static-data baselines and demonstrating that difficulty-aligned data generation can rival or surpass scaling model size alone. The framework’s emergent curriculum and stable training dynamics enable this performance without reward collapse or manual difficulty scheduling.