Deploy Gemma-3-27B-IT Using vLLM

1. Tutorial Introduction

Gemma-3-27B-IT is the instruction-tuned, 27-billion-parameter version of Gemma 3, the third-generation Gemma model that Google released with open weights in 2025.

Gemma is a family of open-weight large models from Google, built on the same research and technology as the Gemini models. Gemma 3 is multimodal: it accepts text and image inputs and generates text outputs, and its weights are available in both pre-trained and instruction-tuned variants. The model has a 128K context window, supports more than 140 languages, and comes in more sizes than previous versions. Gemma 3 models suit a variety of text generation and image understanding tasks, including question answering, summarization, and reasoning. Their relatively small size allows them to be deployed in resource-limited environments such as laptops, desktops, or cloud infrastructure.

This tutorial uses gemma-3-27b-it for the demonstration and runs it on a single A6000 GPU.
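As a rough sanity check on the hardware choice, the memory needed just to hold the weights can be estimated from the parameter count. The sketch below is a back-of-the-envelope calculation only: it assumes exactly 27 billion parameters and a 48 GiB A6000, and it ignores the KV cache, activations, and framework overhead. The tutorial does not state which precision or quantization the container uses, so the precisions listed are illustrative assumptions.

```python
GIB = 1024 ** 3  # bytes per GiB


def weight_memory_gib(num_params, bytes_per_param):
    """Approximate memory for the model weights alone, in GiB."""
    return num_params * bytes_per_param / GIB


def fits_on_gpu(num_params, bytes_per_param, gpu_memory_gib):
    """True if the weights alone are smaller than the GPU memory."""
    return weight_memory_gib(num_params, bytes_per_param) < gpu_memory_gib


NUM_PARAMS = 27e9   # "27 billion parameters" from the introduction
A6000_GIB = 48      # assumed memory of a single A6000

# Illustrative precisions; which one the tutorial container uses is not stated.
for label, bytes_per_param in [("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)]:
    size = weight_memory_gib(NUM_PARAMS, bytes_per_param)
    verdict = "fits" if fits_on_gpu(NUM_PARAMS, bytes_per_param, A6000_GIB) else "does not fit"
    print(f"{label}: about {size:.1f} GiB of weights, {verdict} in {A6000_GIB} GiB")
```

Under these assumptions, unquantized 16-bit weights alone exceed a single card's memory, so a single-GPU deployment of this model would typically rely on a reduced-precision or quantized checkpoint.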

2. Operation Steps

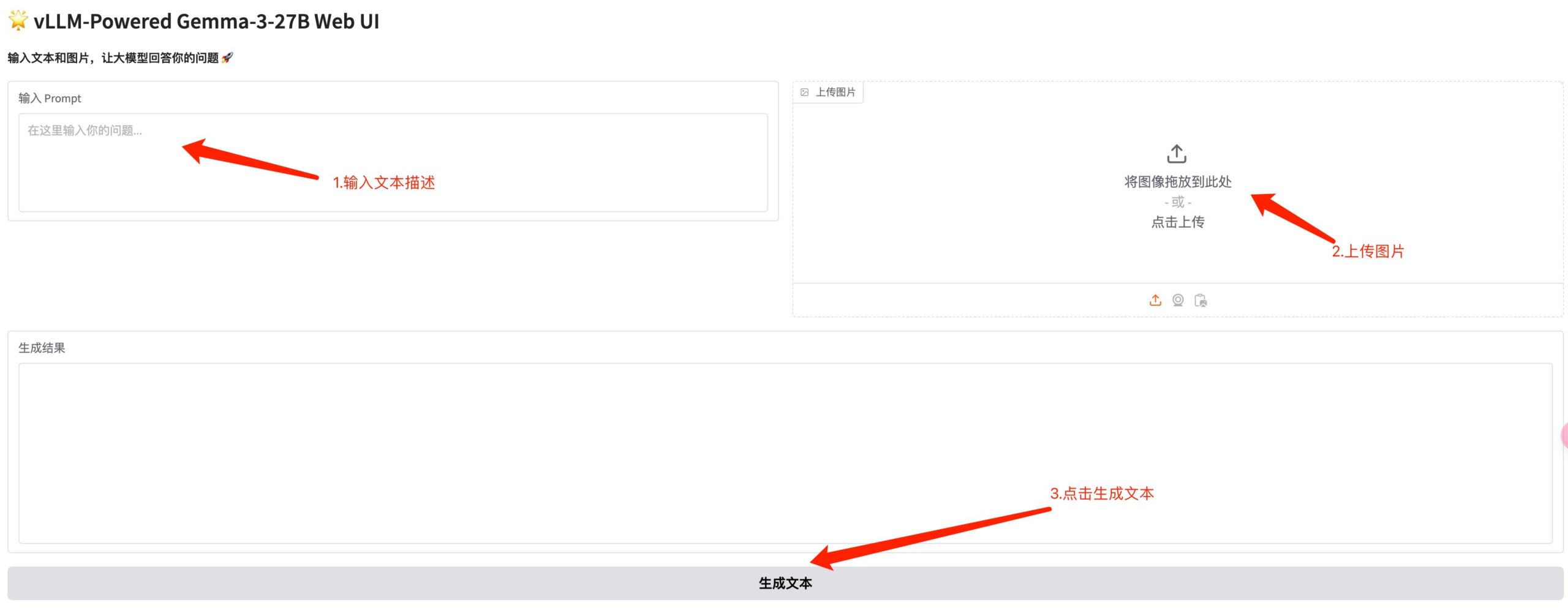

1. After starting the container, click the API address to open the web interface. Because the model is large, the WebUI takes about 3 minutes to become available; until then, the page displays "Bad Gateway".

2. Once the page has loaded, you can run model inference:

- Text conversation: Enter text directly to chat with the model; no image upload is required.

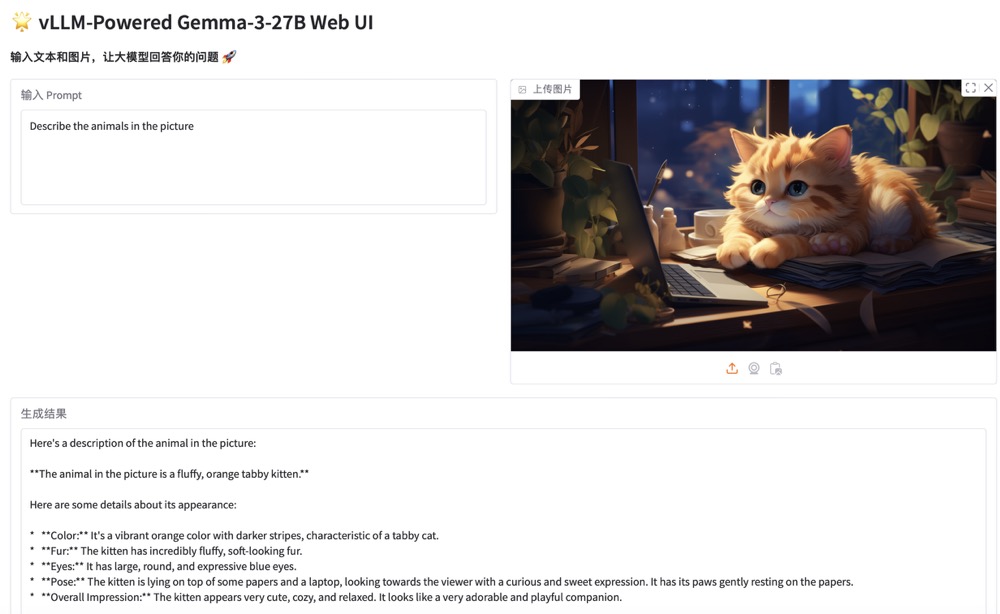

- Image understanding: Provide text together with an image, and the model generates a response based on its understanding of the image.
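The wait described in step 1 can be automated instead of refreshing the page by hand. The following is a minimal sketch using only the Python standard library: it polls a URL until it returns HTTP 200, treating "Bad Gateway" (502) and connection errors as "still loading". The function names, the 300-second limit, and the 10-second interval are illustrative choices, not part of the tutorial.

```python
import time
import urllib.error
import urllib.request


def http_status(url, timeout=10.0):
    """Return the HTTP status code of a GET request, or None if unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 502 while the model is still loading
    except (urllib.error.URLError, OSError):
        return None  # connection refused, DNS failure, timeout, ...


def wait_until_ready(url, max_wait=300.0, interval=10.0,
                     fetch=http_status, sleep=time.sleep):
    """Poll `url` until it answers 200; give up after about `max_wait` seconds.

    `fetch` and `sleep` are injectable so the loop can be tested offline.
    """
    waited = 0.0
    while True:
        if fetch(url) == 200:
            return True
        if waited >= max_wait:
            return False
        sleep(interval)
        waited += interval


# Usage (replace the placeholder with the API address shown for your container):
# ready = wait_until_ready("<your API address>")
```

The limit only counts time spent sleeping between attempts, so slow requests can make the total wait somewhat longer than `max_wait`.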

* Run the example
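The two modes above (text only, and text plus image) can also be expressed as request bodies in the OpenAI-style chat format that vLLM's OpenAI-compatible server accepts. The sketch below only builds the JSON payload. Whether this tutorial's container exposes such an endpoint alongside the WebUI, and under which served model name, is not stated in the source; the `model` default and the helper name `build_chat_payload` are assumptions for illustration.

```python
import base64
import json


def build_chat_payload(prompt, image_bytes=None, image_mime="image/png",
                       model="google/gemma-3-27b-it", max_tokens=512):
    """Build an OpenAI-style chat completion request body.

    With `image_bytes` set, the image is attached as a base64 data URL
    next to the text, matching the "image understanding" mode.
    The `model` value is an assumed served name; adjust it to your deployment.
    """
    content = [{"type": "text", "text": prompt}]
    if image_bytes is not None:
        encoded = base64.b64encode(image_bytes).decode("ascii")
        content.append({
            "type": "image_url",
            "image_url": {"url": f"data:{image_mime};base64,{encoded}"},
        })
    return {
        "model": model,
        "messages": [{"role": "user", "content": content}],
        "max_tokens": max_tokens,
    }


# Text conversation: no image attached.
text_payload = build_chat_payload("Summarize the Gemma 3 model family in two sentences.")
print(json.dumps(text_payload, indent=2))

# Image understanding: pass the raw bytes of an image file, for example
# image_payload = build_chat_payload("What is in this picture?",
#                                    image_bytes=open("photo.png", "rb").read())
```

If the deployment does expose an OpenAI-compatible endpoint, a payload like this would be sent as a JSON POST to its `/v1/chat/completions` path.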
