IQuest-Coder-V1 Technical Report

Abstract

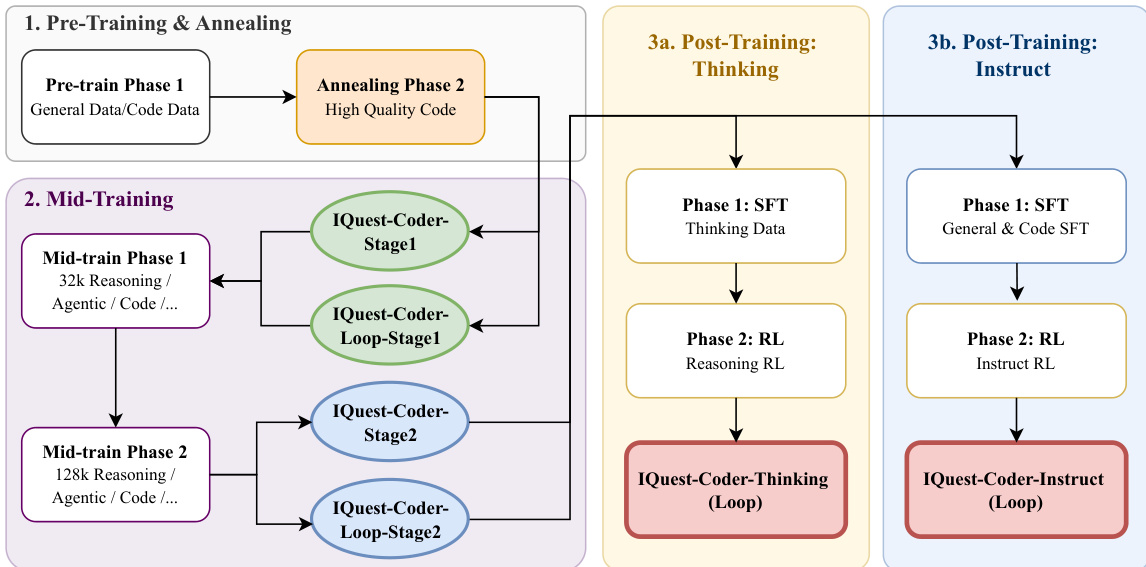

In this report, we introduce the IQuest-Coder-V1 series (7B/14B/40B/40B-Loop), a new family of code large language models (LLMs). Moving beyond static code representations, we propose the code-flow multi-stage training paradigm, which captures the dynamic evolution of software logic through different phases of the pipeline. Our models are developed through the evolutionary pipeline, starting with the initial pre-training consisting of code facts, repository, and completion data. Following that, we implement a specialized mid-training stage that integrates reasoning and agentic trajectories in 32k-context and repository-scale data in 128k-context to forge deep logical foundations. The models are then finalized with post-training of specialized coding capabilities, which is bifurcated into two specialized paths: the thinking path (utilizing reasoning-driven RL) and the instruct path (optimized for general assistance). IQuest-Coder-V1 achieves state-of-the-art performance among competitive models across critical dimensions of code intelligence: agentic software engineering, competitive programming, and complex tool use. To address deployment constraints, the IQuest-Coder-V1-Loop variant introduces a recurrent mechanism designed to optimize the trade-off between model capacity and deployment footprint, offering an architecturally enhanced path toward a better efficacy-efficiency trade-off. We believe the release of the IQuest-Coder-V1 series, including the complete white-box chain of checkpoints from pre-training bases to the final thinking and instruct models, will advance research in autonomous code intelligence and real-world agentic systems.

One-sentence Summary

The IQuest-Coder-V1 team introduces the 7B/14B/40B/40B-Loop code LLM family, trained via a code-flow multi-stage paradigm that evolves software logic through pre-training on code facts and repositories, mid-training with reasoning and agentic trajectories in 32k and 128k contexts, and dual-path post-training via reasoning-driven RL and instruction optimization, achieving state-of-the-art performance in agentic software engineering, competitive programming, and complex tool use.

Key Contributions

- We introduce the code-flow multi-stage training paradigm, which evolves software logic through pre-training on code facts and repositories, mid-training with reasoning and agentic trajectories in 32k–128k-context, and bifurcated post-training via reasoning-driven RL and instruction-tuning for thinking and instruct paths.

- IQuest-Coder-V1 achieves state-of-the-art performance on agentic software engineering, competitive programming, and complex tool use benchmarks, including LiveCodeBench v6 (via the 40B-Loop-Thinking model) and other tasks evaluated on the 40B-Loop-Instruct variant.

- The IQuest-Coder-V1-Loop variant incorporates a recurrent mechanism to optimize the capacity-efficiency trade-off for deployment, and the full open-sourced training pipeline—from pre-training bases to final models—enables reproducibility and advances autonomous code intelligence research.

Introduction

The authors leverage a novel code-flow multi-stage training paradigm to build IQuest-Coder-V1, a family of code LLMs designed to model the dynamic evolution of software logic across development phases. Prior models often treat code as static, limiting their ability to handle complex, real-world engineering tasks that require reasoning over repository-scale context and evolving logic. To overcome this, the authors introduce an evolutionary pipeline with specialized mid-training using 32k and 128k context windows, followed by bifurcated post-training paths—reasoning-driven RL for deep analysis and instruction-tuning for general assistance. Their main contribution includes state-of-the-art performance across agentic software engineering, competitive programming, and tool use, plus the IQuest-Coder-V1-Loop variant, which uses recurrent mechanisms to optimize deployment efficiency without sacrificing capability. They also open-source the full training chain to accelerate research in autonomous code systems.

Dataset

The authors use a multi-stage, highly curated dataset to train IQuest-Coder, combining web-scale corpora with domain-specific code and reasoning data. Here’s how the dataset is structured and used:

- General Corpus (Stage 1):

- Sourced primarily from Common Crawl, cleaned via regex filters and hierarchical deduplication (exact + fuzzy matching using embedding models).

- Decontaminated to exclude overlaps with standard benchmarks.

- Code snippets undergo AST analysis to verify syntactic validity, supporting code-flow training.

- Domain-specific proxy classifiers (small models trained to emulate larger ones) filter data by information density, educational value, and toxicity — outperforming FastText baselines.

- CodeSimpleQA-Instruct (66M samples) is integrated to inject factual, objective Q&A pairs generated via LLMs under constraints for time-invariance and single correct answers.
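
As a rough illustration of the AST validity check and exact-match deduplication listed above, the following Python sketch uses the standard `ast` module. The helper names `is_valid_python` and `filter_snippets` are hypothetical, only Python snippets are handled, and the fuzzy (embedding-based) deduplication and proxy classifiers from the report are not reproduced here.

```python
import ast
import hashlib


def is_valid_python(snippet: str) -> bool:
    """Return True if the snippet parses into a Python AST."""
    try:
        ast.parse(snippet)
        return True
    except (SyntaxError, ValueError):
        return False


def filter_snippets(snippets):
    """Keep syntactically valid snippets and drop exact duplicates.

    Assumption: exact duplicates are detected by hashing the stripped text;
    the report's fuzzy matching stage is out of scope for this sketch.
    """
    seen = set()
    kept = []
    for snippet in snippets:
        digest = hashlib.sha256(snippet.strip().encode("utf-8")).hexdigest()
        if digest in seen or not is_valid_python(snippet):
            continue
        seen.add(digest)
        kept.append(snippet)
    return kept


samples = [
    "def f(x):\n    return x + 1\n",   # valid
    "def f(x:\n    return x",          # syntax error, dropped
    "def f(x):\n    return x + 1\n",   # exact duplicate, dropped
]
kept = filter_snippets(samples)
```

A production filter would need one parser per language; a single-language check like this only shows the shape of the step.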

- Repository Evolution Data:

- Built as triplets: (R_old, P, R_new), where R_old and R_new are code states from the 40%-80% lifecycle percentile (mature phase), and P is the patch between them.

- Ensures temporal continuity and meaningful code changes, avoiding early instability or late fragmentation.
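
The triplet construction above can be sketched as follows. This is a simplified illustration under stated assumptions: each repository state is represented by a single string snapshot, the lifecycle percentile is taken as the commit's position in a chronological list, and `build_triplets` is a hypothetical helper name. The patch is produced with the standard `difflib.unified_diff`.

```python
import difflib
from typing import List, Tuple


def build_triplets(
    snapshots: List[str], lo: float = 0.4, hi: float = 0.8
) -> List[Tuple[str, str, str]]:
    """Build (R_old, P, R_new) triplets from the mature lifecycle window.

    Assumption: snapshots are in chronological order and the percentile of
    snapshot i is i / (n - 1).
    """
    n = len(snapshots)
    if n < 2:
        return []
    window = [i for i in range(n) if lo <= i / (n - 1) <= hi]
    triplets = []
    for a, b in zip(window, window[1:]):
        old, new = snapshots[a], snapshots[b]
        patch = "".join(
            difflib.unified_diff(
                old.splitlines(keepends=True),
                new.splitlines(keepends=True),
                fromfile="R_old",
                tofile="R_new",
            )
        )
        if patch:  # skip pairs with no change
            triplets.append((old, patch, new))
    return triplets


# Toy history: 11 snapshots of a one-line file.
history = [f"x = {i}\n" for i in range(11)]
triplets = build_triplets(history)
```

With 11 snapshots, only positions 4 through 8 fall inside the 40%-80% window, so early and late states never appear in a triplet.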

- Code Completion Data (FIM Format):

- Constructed at file and repo levels using the Fill-In-the-Middle (FIM) format, which orders each sample as prefix, suffix, then middle.

- File-level: single document split via random character or line boundaries.

- Repo-level: adds semantically similar snippets from the same repo as context.

- Syntax-based construction uses ASTs to extract expression-, statement-, and function-level segments, preserving structural integrity.

- Task formats include: `<|fim_prefix|>{pre}<|fim_suffix|>{suf}<|fim_middle|>{mid}<|im_end|>` (file) and `<|repo_name|>{repo} <|file_sep|>{path1} {content1} <|file_sep|>{path2} {content2} <|file_sep|>{path3} ... <|fim_prefix|>{pre}<|fim_suffix|>{suf}<|fim_middle|>{mid}<|im_end|>` (repo).
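
A minimal sketch of file-level FIM sample construction with random character boundaries is shown below. The exact sentinel token spellings are an assumption here (the constants follow a common `<|fim_*|>` convention), and `split_document` and `format_fim` are hypothetical helper names; the repo-level variant and the AST-based segment extraction are not covered.

```python
import random
from typing import Tuple

# Assumed sentinel spellings; adjust to the tokenizer actually used.
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"
END_TOKEN = "<|im_end|>"


def split_document(doc: str, rng: random.Random) -> Tuple[str, str, str]:
    """Split a non-empty doc into (prefix, middle, suffix) at two random character boundaries."""
    i, j = sorted(rng.sample(range(len(doc) + 1), 2))
    return doc[:i], doc[i:j], doc[j:]


def format_fim(pre: str, mid: str, suf: str) -> str:
    """Emit the sample in prefix-suffix-middle order, so the middle is predicted last."""
    return f"{FIM_PREFIX}{pre}{FIM_SUFFIX}{suf}{FIM_MIDDLE}{mid}{END_TOKEN}"


rng = random.Random(0)
doc = "def add(a, b):\n    return a + b\n"
pre, mid, suf = split_document(doc, rng)
sample = format_fim(pre, mid, suf)
```

Placing the middle segment last lets a left-to-right model condition on both the prefix and the suffix when generating the missing span.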

- Mid-Training (Stage 2):

- Uses the same core data categories: reasoning QA (math, coding, logic), agent trajectories, code commits, and FIM data.

- Reasoning QA teaches structured decomposition and consistency; Agent trajectories simulate closed-loop intelligence via action-observation-revision cycles with feedback (logs, errors, tests).

- Stage 2.1 trains at 32K context; Stage 2.2 extends to 128K to enable repository-scale reasoning.

- Evaluation Benchmarks:

- CrossCodeEval (Python, Java, TypeScript, C#) tests cross-file completion.

- Aider’s Polyglot benchmark (C++, Go, Java, JavaScript, Python, Rust) evaluates multilingual editing on the 225 hardest Exercism problems.

All data undergoes strict quality filtering and structural validation to support scalable, high-fidelity code modeling.

Method

The authors leverage a structured, multi-phase training pipeline, termed the Code-Flow pipeline, to systematically evolve logical and agentic capabilities in the IQuest-Coder-V1 series. The framework is organized into three primary stages: pre-training and annealing, mid-training, and bifurcated post-training, each designed to progressively specialize the model while preserving general intelligence.

The process begins with pre-training and annealing, where the model is first exposed to a mixture of general and code-specific data in Stage 1, followed by an annealing phase focused exclusively on high-quality, curated code corpora. This primes the model’s representations for complex reasoning tasks by reinforcing syntactic and semantic patterns inherent in real-world codebases. Refer to the framework diagram for the flow of this initial phase.

Mid-training introduces two sequential phases that progressively scale context length and task complexity. In Mid-train Phase 1, the model is trained on 32k-context data encompassing reasoning, agentic, and code tasks, yielding intermediate checkpoints such as IQuest-Coder-Stage1 and LoopCoder-Base-Stage1. This phase is critical for establishing a scaffold for long-horizon reasoning. Mid-train Phase 2 extends context to 128k while maintaining similar data distributions, producing IQuest-Coder-Stage2 and LoopCoder-Base-Stage2, which serve as the foundation for post-training. The authors emphasize that injecting 32k reasoning trajectories after annealing, but before post-training, stabilizes performance under distribution shifts, a finding validated through ablation studies.

Post-training bifurcates into two distinct pathways: Thinking and Instruct. The Thinking path begins with supervised fine-tuning (SFT) on datasets containing explicit reasoning traces, followed by reinforcement learning (RL) optimized for reasoning fidelity. This yields LoopCoder-Thinking, which exhibits emergent autonomous error-recovery in long-horizon tasks such as software engineering and competitive programming. The Instruct path, in contrast, applies SFT on general and code instruction-following data, followed by RL tuned for instruction adherence, producing LoopCoder-Instruct.

Both paths utilize the LoopCoder architecture, which employs a recurrent transformer design with two fixed iterations: the first processes input embeddings with position-shifted hidden states, while the second computes a gated mixture of global attention (attending to all key-value pairs from iteration 1) and local causal attention (attending only to preceding tokens in iteration 2). This architecture enables iterative refinement over complex code segments without requiring token-shifting mechanisms.

The training infrastructure supports this multi-stage pipeline through fused gated attention kernels to reduce memory bandwidth, context parallelism for ultra-long context training, and deterministic re-computation for silent error detection. Data construction is model-centric, relying on frontier LLMs to generate training samples under automated verification, including execution-based validation for objective domains and multi-agent debate for subjective ones. Supervised fine-tuning employs aggressive sequence packing, cosine annealing with extended low-rate phases, and a three-phase curriculum that sequences data by difficulty to ensure stable convergence.

Reinforcement learning leverages the GRPO algorithm with the Clip-Higher strategy, trained on test case pass rates without KL penalties, and is further enhanced by the SWE-RL framework, which formulates software engineering as an interactive RL environment with tool-based actions and rewards based on test suite passage and efficiency regularization.

The authors also formalize multilingual code scaling laws by incorporating language proportions $\mathbf{p} = (p_1, \ldots, p_K)$ into the loss function:

$$\mathcal{L}(N, D; \mathbf{p}) = A \cdot N^{-\alpha_N(\mathbf{p})} + B \cdot D_x^{-\alpha_D(\mathbf{p})} + L_\infty(\mathbf{p})$$

where $\alpha_N(\mathbf{p}) = \sum_k p_k \alpha_N^k$, $\alpha_D(\mathbf{p}) = \sum_k p_k \alpha_D^k$, and $L_\infty(\mathbf{p}) = \sum_k p_k L_\infty^k$ are proportion-weighted averages of language-specific parameters. The effective data term captures cross-lingual transfer:

$$D_x = D_{\text{all}} \left( 1 + \gamma \sum_{L_i \neq L_j} p_{L_i} p_{L_j} \tau_{ij} \right)$$

with $\tau_{ij}$ as the transfer coefficient derived from empirical synergy gains. The final fitted scaling law under optimal multilingual allocation is:

$$\mathcal{L}^*(N, D) = A^* \cdot N^{-\alpha_N^*} + B^* \cdot D^{-\alpha_D^*} + L_\infty^*$$

where $\alpha_D^* = 0.6859$, $\alpha_N^* = 0.2186$, and $L_\infty^* = 0.2025$. This formulation enables efficient allocation of multilingual code data to maximize performance on both generation and translation tasks.
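
To make the multilingual scaling law concrete, the sketch below evaluates it numerically. It assumes the data term in the loss is the effective data $D_x$ defined by the cross-lingual transfer formula. All numeric values (`a`, `b`, per-language exponents, `tau`, `gamma`) are hypothetical placeholders chosen for illustration, not fitted values from the report, and the function names are likewise illustrative.

```python
def weighted_average(p, values):
    """Proportion-weighted average: sum_k p_k * values_k."""
    return sum(pk * vk for pk, vk in zip(p, values))


def effective_data(d_all, p, tau, gamma):
    """D_x = D_all * (1 + gamma * sum over i != j of p_i * p_j * tau_ij)."""
    k = len(p)
    synergy = sum(
        p[i] * p[j] * tau[i][j] for i in range(k) for j in range(k) if i != j
    )
    return d_all * (1.0 + gamma * synergy)


def multilingual_loss(n, d_all, p, tau, gamma, a, b, alpha_n, alpha_d, l_inf):
    """L(N, D; p) = A * N^(-alpha_N(p)) + B * D_x^(-alpha_D(p)) + L_inf(p)."""
    d_x = effective_data(d_all, p, tau, gamma)
    return (
        a * n ** -weighted_average(p, alpha_n)
        + b * d_x ** -weighted_average(p, alpha_d)
        + weighted_average(p, l_inf)
    )


# Hypothetical placeholder parameters for two languages (NOT from the report).
params = dict(
    gamma=0.5, a=400.0, b=1000.0,
    alpha_n=[0.20, 0.24], alpha_d=[0.60, 0.70], l_inf=[0.20, 0.25],
)
p = [0.5, 0.5]
tau_on = [[0.0, 0.3], [0.3, 0.0]]   # positive cross-lingual transfer
tau_off = [[0.0, 0.0], [0.0, 0.0]]  # no transfer

loss_with = multilingual_loss(1e9, 1e11, p, tau_on, **params)
loss_without = multilingual_loss(1e9, 1e11, p, tau_off, **params)
```

Under these placeholder values, positive transfer coefficients enlarge the effective data and therefore lower the predicted loss, which is the mechanism the allocation analysis relies on.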

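
The reinforcement learning setup described in this section (GRPO with the Clip-Higher strategy, rewards from test case pass rates, no KL penalty) can be sketched in a few lines. This is an illustrative reading rather than the authors' implementation: group-relative advantages are computed by normalizing rewards within a group of sampled solutions, and Clip-Higher is taken to mean an asymmetric clipping range whose upper bound is looser than its lower bound. The values `eps_low=0.2` and `eps_high=0.28` are assumptions for the example, not settings reported in the source.

```python
import statistics


def group_advantages(rewards, eps=1e-6):
    """Normalize rewards within one sampled group (zero mean, unit scale)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def clipped_term(ratio, advantage, eps_low=0.2, eps_high=0.28):
    """Per-token surrogate with an asymmetric clip range and no KL term.

    ratio is pi_new(token) / pi_old(token); the upper bound 1 + eps_high is
    looser than the lower bound 1 - eps_low (assumed Clip-Higher behaviour).
    """
    clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    return min(ratio * advantage, clipped * advantage)


# Rewards: fraction of test cases passed by four sampled solutions.
pass_rates = [1.0, 0.5, 0.0, 0.5]
advantages = group_advantages(pass_rates)
```

Because advantages are relative to the group mean, a solution passing half the tests receives zero advantage when that is the group average, so the update is driven by the better and worse samples.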

Experiment

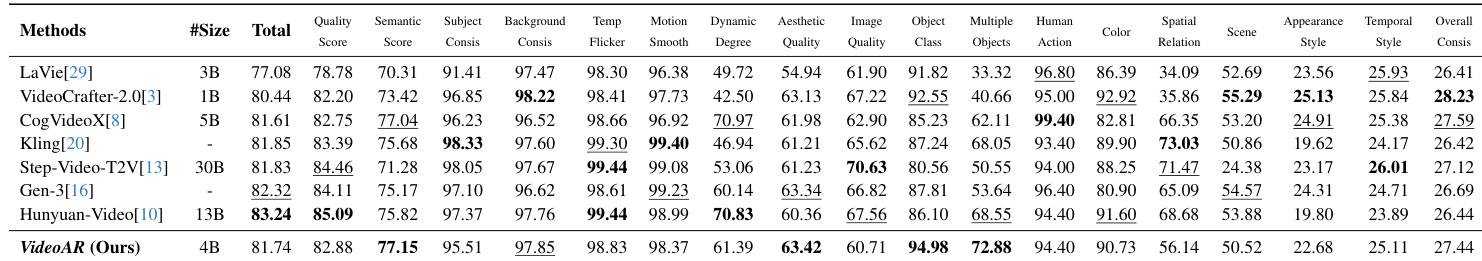

Our model is evaluated across diverse code generation, reasoning, efficiency, and agentic tasks against a broad set of state-of-the-art baselines including Claude, GPT, Gemini, Qwen, and StarCoder2. It achieves strong results on EvalPlus, BigCodeBench, FullStackBench, and LiveCodeBench for code generation; excels in forward and inverse reasoning on CRUXEval; and scores competitively on Mercury for runtime efficiency and on Spider and BIRD for Text-to-SQL. In agentic settings, it attains a 76.2 score on SWE-bench Verified and performs robustly on Terminal-Bench and general tool-use benchmarks like Mind2Web and BFCL, while maintaining high safety compliance across Tulu 3 benchmarks with balanced refusal behavior on harmful prompts.

The authors evaluate code generation performance across six programming languages, reporting scores and relative changes compared to baseline models. Results show consistent gains in most language pairs, with notable improvements in Java and TypeScript, while some cases show slight declines in Python and C#.

- Java-to-JavaScript shows a 12.62% improvement over baseline.

- Python-to-C# and Python-to-Rust show declines of 1.69% and 2.72%.

- TypeScript-to-JavaScript and Go-to-Rust show gains of 4.69% and 2.86%.

The authors evaluate multiple code generation models across Python, Java, TypeScript, and C#, reporting exact match and edit similarity scores. IQuest-Coder-V1-40B achieves the highest average edit similarity and strong exact match scores, outperforming other 20B+ models in most languages. Results indicate competitive performance in both functional correctness and structural similarity across programming languages.

- IQuest-Coder-V1-40B leads in average edit similarity (85.7) among 20B+ models.

- It achieves top exact match in Java (57.9) and strong scores in Python (49.0) and C# (63.4).

- StarCoder2-7B underperforms with the lowest average exact match (8.3) and edit similarity (70.8).
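
For readers unfamiliar with the two completion metrics, here is a minimal sketch of how exact match and edit similarity can be computed for a single prediction. The benchmark's precise formula is not stated here, so this example substitutes `difflib.SequenceMatcher.ratio()` from the Python standard library as a stand-in similarity measure on a 0 to 100 scale; the function names are illustrative.

```python
import difflib


def exact_match(prediction: str, reference: str) -> bool:
    """True when prediction and reference are identical after trimming whitespace."""
    return prediction.strip() == reference.strip()


def edit_similarity(prediction: str, reference: str) -> float:
    """Character-level similarity in [0, 100].

    Assumption: SequenceMatcher.ratio() is used as a stand-in for the
    benchmark's own edit-distance-based score.
    """
    matcher = difflib.SequenceMatcher(None, prediction.strip(), reference.strip())
    return 100.0 * matcher.ratio()


reference = "return self.client.get(url, timeout=30)"
prediction = "return self.client.get(url, timeout=10)"

em = exact_match(prediction, reference)
es = edit_similarity(prediction, reference)
```

The pair above fails exact match but scores high on edit similarity, which is why the two metrics are usually reported together.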

The authors evaluate their model across agentic coding and general tool-use tasks, comparing it with open-source and closed-API models. Results show the model achieves competitive scores on Terminal-Bench, SWE-Verified, Mind2Web, and BFCL V3, particularly excelling in SWE-Verified with a 76.2 score. Performance is strong relative to similarly sized open models and approaches some closed-source systems.

- IQuest-Coder-V1-40B-Loop-Instruct scores 76.2 on SWE-Verified, outperforming most open models.

- The model achieves 52.5 on Terminal-Bench and 64.3 on Mind2Web, showing broad agentic capability.

- Performance on BFCL V3 reaches 73.9, indicating strong multi-step tool-use reasoning.

The authors evaluate multiple code-focused language models on Text-to-SQL tasks using the Bird and Spider benchmarks. Results show that IQuest-Coder-V1-40B-Instruct achieves 70.5 on Bird and 92.2 on Spider, outperforming most open-source models and matching or exceeding several closed-source systems. The table highlights performance differences across model sizes and architectures, with closed-API models generally showing strong execution accuracy.

- IQuest-Coder-V1-40B-Instruct leads open-source models with 92.2 Spider accuracy.

- Closed-API models like Gemini-3-Flash-preview score 87.2 on Spider.

- Qwen3-Coder-480B-A35B-Instruct achieves 81.2 on Spider despite a lower Bird score.

The authors evaluate multiple code-focused language models across CruxEval and LiveCodeBench benchmarks, grouping them by parameter scale and architecture. Results show that IQuest-Coder-V1 variants, particularly the 40B Loop-Thinking model, achieve top scores in Output-COT and LiveCodeBench V6, outperforming many larger or closed-source models. Closed-API models like Gemini-3 and Claude-Opus-4.5 remain competitive but do not consistently surpass the best open models in all metrics.

- IQuest-Coder-V1-40B-Loop-Thinking leads in Output-COT with 99.4 and LiveCodeBench V6 with 81.1.

- Closed-API models like Gemini-3-Flash-preview score high in CruxEval but trail in LiveCodeBench V6.

- Qwen3-235B-A22B-Thinking-2507 shows strong CruxEval Output-COT at 89.5 but lower LiveCodeBench scores.

The authors evaluate IQuest-Coder-V1-40B across cross-language code generation, agentic coding, Text-to-SQL, and code reasoning benchmarks, showing consistent gains in Java and TypeScript translation while noting slight declines in Python and C#. The model achieves top edit similarity (85.7) and exact match scores in Java (57.9), C# (63.4), and Python (49.0), outperforming most 20B+ models, and excels in agentic tasks with a 76.2 on SWE-Verified, 52.5 on Terminal-Bench, and 73.9 on BFCL V3. In Text-to-SQL, it leads open-source models with 92.2 on Spider and 70.5 on Bird, while its Loop-Thinking variant tops Output-COT (99.4) and LiveCodeBench V6 (81.1), rivaling or surpassing several closed-source systems.