Command Palette

Search for a command to run...

Vibe AIGC : Un nouveau paradigme pour la génération de contenu par orchestration agente

Vibe AIGC : Un nouveau paradigme pour la génération de contenu par orchestration agente

Jiaheng Liu Yuanxing Zhang Shihao Li Xinping Lei

Résumé

Au cours de la dernière décennie, la trajectoire de l’intelligence artificielle générative (IA) a été dominée par un paradigme centré sur les modèles, guidé par les lois d’échelle. Malgré des progrès significatifs en matière de fidélité visuelle, cette approche a rencontré un « plafond d’utilisabilité », manifesté par l’Écart Intentions-Exécution (c’est-à-dire la disparité fondamentale entre l’intention de haut niveau d’un créateur et la nature stochastique et opaque des modèles actuels à une seule étape). Dans ce papier, inspiré par la notion de Vibe Coding, nous introduisons Vibe AIGC, un nouveau paradigme de génération de contenus fondé sur une orchestration agente, représentant la synthèse autonome de workflows hiérarchiques multi-agents. Dans ce cadre, le rôle de l’utilisateur dépasse l’ingénierie traditionnelle de prompts, évoluant vers celui d’un Commandant qui fournit un « Vibe », une représentation de haut niveau englobant les préférences esthétiques, la logique fonctionnelle, etc. Un Meta-Planificateur central agit alors comme un architecte système, décomposant ce « Vibe » en pipelines agents exécutables, vérifiables et adaptables. En passant de l’inférence stochastique à une orchestration logique, Vibe AIGC comble l’écart entre l’imagination humaine et l’exécution machine. Nous soutenons que ce changement redéfinira l’économie collaborative homme-machine, transformant l’IA d’un moteur d’inférence fragile en un partenaire d’ingénierie systémique robuste, au service de la démocratisation de la création d’actifs numériques complexes à horizon long.

One-sentence Summary

Researchers from Nanjing University and Kuaishou Technology propose Vibe AIGC, a multi-agent orchestration framework that replaces stochastic generation with logical pipelines, enabling users to command complex outputs via high-level “Vibe” prompts—bridging intent-execution gaps and democratizing long-horizon digital creation.

Key Contributions

- The paper identifies the "Intent-Execution Gap" as a critical limitation of current model-centric AIGC systems, where stochastic single-shot generation fails to align with users’ high-level creative intent, forcing reliance on inefficient prompt engineering.

- It introduces Vibe AIGC, a new paradigm that replaces monolithic inference with hierarchical multi-agent orchestration, where a Commander provides a high-level “Vibe” and a Meta-Planner decomposes it into verifiable, adaptive workflows.

- Drawing inspiration from Vibe Coding, the framework repositions AI as a system-level engineering partner, enabling scalable, long-horizon content creation by shifting focus from model scaling to intelligent agentic coordination.

Introduction

The authors leverage the emerging concept of Vibe Coding to propose Vibe AIGC, a new paradigm that shifts content generation from single-model inference to hierarchical multi-agent orchestration. Current AIGC tools face a persistent Intent-Execution Gap: users must manually engineer prompts to coax coherent outputs from black-box models, a process that’s stochastic, inefficient, and ill-suited for complex, long-horizon tasks like video production or narrative design. Prior approaches—whether scaling models or stitching together fixed workflows—fail to bridge this gap because they remain tool-centric and lack adaptive, verifiable reasoning. The authors’ main contribution is a system where users act as Commanders, supplying a high-level “Vibe” (aesthetic, functional, and contextual intent), which a Meta-Planner decomposes into executable, falsifiable agent pipelines. This moves AI from fragile inference engine to collaborative engineering partner, enabling scalable, intent-driven creation of complex digital assets.

Method

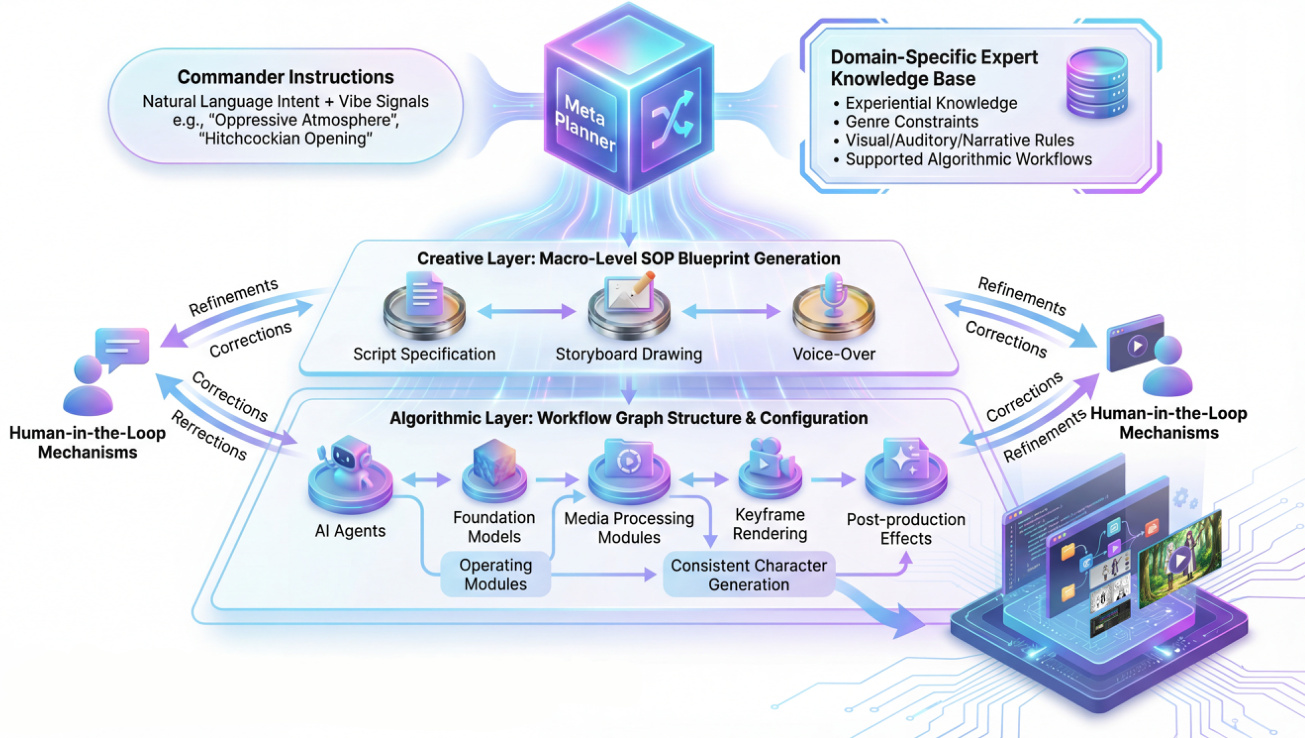

The authors leverage a hierarchical, intent-driven architecture to bridge the semantic gap between abstract creative directives and precise, executable media generation workflows. At the core of this system is the Meta Planner, which functions not as a content generator but as a system architect that translates natural language “Commander Instructions”—often laden with subjective “Vibe” signals such as “oppressive atmosphere” or “Hitchcockian suspense”—into structured, domain-aware execution plans. This transformation is enabled by tight integration with a Domain-Specific Expert Knowledge Base, which encodes professional heuristics, genre constraints, and algorithmic workflows. For instance, the phrase “Hitchcockian suspense” is deconstructed into concrete directives: dolly zoom camera movements, high-contrast lighting, dissonant musical intervals, and narrative pacing based on information asymmetry. This process externalizes implicit creative knowledge, mitigating the hallucinations and mediocrity common in general-purpose LLMs.

As shown in the figure below, the architecture operates across two primary layers: the Creative Layer and the Algorithmic Layer. The Creative Layer generates a macro-level SOP blueprint—encompassing script specification, storyboard drawing, and voice-over planning—based on the parsed intent. This blueprint is then propagated to the Algorithmic Layer, which dynamically constructs and configures a workflow graph composed of AI Agents, foundation models, and media processing modules. The system adapts its orchestration topology based on task complexity: a simple image generation may trigger a linear pipeline, while a full music video demands a graph incorporating script decomposition, consistent character generation, keyframe rendering, and post-production effects. Crucially, the Meta Planner also configures operational hyperparameters—such as sampling steps and denoising strength—to ensure industrial-grade fidelity.

Human-in-the-loop mechanisms are embedded throughout the pipeline, allowing for real-time refinements and corrections at both the creative and algorithmic levels. This closed-loop design ensures that the system remains responsive to evolving user intent while maintaining technical consistency. The Meta Planner’s reasoning is not static; it dynamically grows the workflow from the top down, perceiving the user’s “Vibe” in real time, disambiguating intent via expert knowledge, and ultimately producing a precise, executable workflow graph. This architecture represents a paradigm shift from fragmented, manual, or end-to-end black-box systems toward a unified, agentic, and semantically grounded framework for creative content generation.