MMFineReason: Closing the Multimodal Reasoning Gap via Open Data-Centric Methods

Honglin Lin Zheng Liu Yun Zhu Chonghan Qin Juekai Lin Xiaoran Shang Conghui He Wentao Zhang Lijun Wu

Abstract

Recent advances in Vision Language Models (VLMs) have driven significant progress in visual reasoning. However, open-source systems still lag behind proprietary ones, largely because high-quality reasoning data is scarce. Existing datasets offer limited coverage of demanding domains such as STEM diagrams and visual puzzles, and they lack the consistent, detailed Chain-of-Thought (CoT) annotations needed to elicit strong reasoning capabilities. To close this gap, we introduce MMFineReason, a large-scale multimodal reasoning dataset comprising 1.8M samples and 5.1B solution tokens, with high-quality reasoning annotations distilled from Qwen3-VL-235B-A22B-Thinking. The dataset is built through a systematic three-stage pipeline: (1) large-scale data collection and standardization, (2) CoT rationale generation, and (3) comprehensive selection based on reasoning quality and difficulty awareness. The resulting dataset spans STEM problems, visual puzzles, games, and complex diagrams, and every sample is annotated with visually grounded reasoning traces. We fine-tune Qwen3-VL-Instruct on MMFineReason to develop the MMFineReason-2B/4B/8B models, which set new state-of-the-art results for their size class. Notably, MMFineReason-4B surpasses Qwen3-VL-8B-Thinking, and MMFineReason-8B even outperforms Qwen3-VL-30B-A3B-Thinking while approaching Qwen3-VL-32B-Thinking, demonstrating remarkable parameter efficiency. Crucially, our difficulty-aware filtering strategy uncovers a “less is more” phenomenon: a subset of just 7% (123K samples) achieves performance comparable to the full dataset. We further reveal a synergistic effect in which a reasoning-oriented data composition simultaneously improves the model's general capabilities.

One-sentence Summary

Researchers from Shanghai AI Lab, Peking University, and Shanghai Jiao Tong University introduce MMFineReason, a 1.8M-sample multimodal reasoning dataset distilled from Qwen3-VL-235B-A22B-Thinking; the MMFineReason-2B/4B/8B models fine-tuned on it outperform considerably larger models through difficulty-filtered training and a reasoning-oriented data composition.

Key Contributions

- We introduce MMFineReason, a 1.8M-sample multimodal reasoning dataset with 5.1B tokens, built via a three-stage pipeline that collects, distills Chain-of-Thought rationales from Qwen3-VL-235B-A22B-Thinking, and filters for quality and difficulty to address scarcity in STEM and puzzle domains.

- Fine-tuning Qwen3-VL-Instruct on MMFineReason yields MMFineReason-2B/4B/8B models that set new SOTA for their size class, with MMFineReason-4B surpassing Qwen3-VL-8B-Thinking and MMFineReason-8B outperforming Qwen3-VL-30B-A3B-Thinking while approaching Qwen3-VL-32B-Thinking.

- We reveal a “less is more” effect: a difficulty-filtered 7% subset (123K samples) matches full-dataset performance, and show reasoning-focused data composition improves both specialized and general capabilities, validated through ablation studies on training strategies and data mixtures.

Introduction

The authors take a data-centric approach to the gap between open-source and proprietary Vision Language Models (VLMs) in multimodal reasoning. Prior efforts have expanded the quantity of multimodal data, but high-quality, consistent Chain-of-Thought annotations remain scarce, especially for STEM diagrams, visual puzzles, and complex diagrams, because such data is hard to find and costly to annotate. Existing datasets also suffer from fragmented annotation styles and insufficient reasoning depth, which limits reproducible research. The authors’ main contribution is MMFineReason, a 1.8M-sample dataset with 5.1B tokens of distilled, visually grounded reasoning traces produced by a three-stage pipeline: aggregation, CoT distillation from Qwen3-VL-235B-A22B-Thinking, and difficulty-aware filtering. Fine-tuning Qwen3-VL-Instruct on this data yields MMFineReason-2B/4B/8B models that outperform considerably larger baselines such as Qwen3-VL-30B-A3B-Thinking. The authors also report a “less is more” effect, in which a difficulty-filtered 7% subset performs comparably to the full dataset, and find that reasoning-focused data improves general capabilities as well. Finally, they provide practical training recipes for future multimodal model development.

Dataset

The authors use MMFineReason, a large-scale, open-source multimodal reasoning dataset built entirely with locally deployed models and no closed-source APIs. Here’s how it’s composed, processed, and used:

Dataset Composition & Sources:

The dataset aggregates and refines over 2.3M samples from 30+ open-source multimodal datasets, including FineVision, BMMR, Euclid30K, Zebra-CoT-Physics, GameQA-140K, and MMR1. It covers four domains: Mathematics (79.4%), Science (13.8%), Puzzle/Game (4.6%), and General/OCR (2.2%). The final training set, MMFineReason-1.8M, retains 1.77M high-quality samples after filtering.

Key Subset Details:

- Mathematics: Dominated by MMR1 (1.27M), supplemented by WaltonColdStart, ViRL39K, Euclid30K, and others for geometric and symbolic diversity.

- Science: Anchored by VisualWebInstruct (157K) and BMMR (54.6K), with smaller additions like TQA and ScienceQA.

- Puzzle/Game: Led by GameQA-140K (71.7K), plus Raven, VisualSphinx, and PuzzleQA for abstract reasoning.

- General/OCR: Only 38.7K samples from LLaVA-CoT, used as regularization to preserve visual perception without diluting reasoning focus.

All datasets undergo strict filtering: multi-image, overly simple, or highly specialized (e.g., medical) data are excluded.

Processing & Metadata:

- Images are cleaned: corrupted or unreadable images are discarded; oversized images (>2048px) are resized while preserving aspect ratio; all are converted to RGB.

- Text is standardized: non-English questions are translated to English; noise (links, indices, formatting) is removed; shallow prompts are rewritten to demand step-by-step reasoning.

- Metadata includes: source.id, original-question/answer, standardized image/question/answer, augmented annotations (Qwen3-VL-235B-generated captions and reasoning traces), and metrics (pass rate, consistency flags).

- All samples receive 100% dense image captions (avg. 609 tokens), far exceeding prior datasets.
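The image-resizing rule above can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes that ">2048px" refers to the longer side of the image, and the helper name `fit_within` is hypothetical.

```python
def fit_within(width: int, height: int, max_side: int = 2048) -> tuple[int, int]:
    """Return (width, height) scaled so that the longer side is at most max_side.

    Assumption: the 2048px limit applies to the longer side of the image.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    longest = max(width, height)
    if longest <= max_side:
        return width, height  # already within the limit; leave untouched
    scale = max_side / longest
    # Round to the nearest pixel, but never collapse a side to zero.
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    print(fit_within(4096, 2048))  # (2048, 1024)
    print(fit_within(1000, 500))   # (1000, 500)
```

With an imaging library such as Pillow, the returned size could be passed to `Image.resize`, followed by `Image.convert("RGB")` for the color-mode step.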

Annotation & Filtering:

- Qwen3-VL-235B-A22B-Thinking generates CoT explanations using a 4-phase framework and outputs them in a strict template: reasoning in <think>...</think> blocks, final answer in <answer>...</answer> tags.

- Filtering stages:

- Template/length validation (discard <100 words or malformed structure).

- N-gram deduplication (remove CoTs with 50-gram repeats ≥3 times).

- Correctness verification (compare extracted answer with ground truth; discard mismatches).

- Difficulty filtering: Qwen3-VL-4B-Thinking attempts each question 4 times; samples with 0% pass rate (123K) are kept for hard SFT; those with <100% pass rate (586K) are used for ablation studies.
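The filtering stages above can be illustrated with a small sketch. It is not the authors' implementation, and several details are assumptions: the reasoning is taken to sit in `<think>` tags, the 100-word minimum is applied to the reasoning block only, n-grams are counted over whitespace-separated tokens, and all function and subset names are hypothetical.

```python
import re
from collections import Counter

# Assumed template: <think>reasoning</think><answer>final answer</answer>
TEMPLATE = re.compile(
    r"^\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def passes_template(cot: str, min_words: int = 100) -> bool:
    """Template/length validation: well-formed tags and enough reasoning words."""
    match = TEMPLATE.match(cot)
    if match is None:
        return False
    return len(match.group(1).split()) >= min_words


def has_repeated_ngram(text: str, n: int = 50, max_repeats: int = 3) -> bool:
    """True if any n-gram of whitespace tokens occurs at least max_repeats times."""
    tokens = text.split()
    counts = Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return any(count >= max_repeats for count in counts.values())


def pass_rate(attempts: list[bool]) -> float:
    """Fraction of successful attempts (the text uses 4 attempts per question)."""
    return sum(attempts) / len(attempts)


def difficulty_subsets(rate: float) -> set[str]:
    """Map a pass rate to the subsets described above (names are illustrative)."""
    subsets = set()
    if rate == 0.0:
        subsets.add("hard_sft")   # 0% pass rate: the 123K subset
    if rate < 1.0:
        subsets.add("ablation")   # below 100% pass rate: the 586K subset
    return subsets
```

Under this reading, a sample that fails all four attempts belongs to both subsets, since a 0% pass rate is also below 100%.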

Model Training Use:

- The 1.8M dataset is split: 40K sampled for RL training, remainder for SFT.

- Mixtures prioritize challenging samples via pass-rate filtering to accelerate convergence.

- CoT lengths are significantly longer than baselines (avg. 2,910 tokens vs. HoneyBee’s 1,063), with Puzzle/Game tasks averaging 4,810 tokens, reflecting intensive reasoning demands.

- Visual content is 75.3% STEM/diagrammatic (geometric, logic, plots), ensuring symbolic reasoning focus while retaining diverse natural images for generalization.

This pipeline enables reproducible, high-quality training for open-source VLMs to close the gap with proprietary systems.
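The RL/SFT split described above can be sketched as a seeded random partition. The text does not say how the 40K RL samples are drawn, so uniform sampling is an assumption here, and `split_rl_sft` is a hypothetical helper name.

```python
import random
from typing import Iterable


def split_rl_sft(sample_ids: Iterable[int], rl_size: int = 40_000, seed: int = 0):
    """Partition sample IDs into an RL subset of rl_size and an SFT remainder.

    Assumption: the RL subset is drawn uniformly at random.
    """
    ids = list(sample_ids)
    if rl_size > len(ids):
        raise ValueError("rl_size exceeds the number of available samples")
    rng = random.Random(seed)  # seeded so the split is reproducible
    rng.shuffle(ids)
    return ids[:rl_size], ids[rl_size:]


if __name__ == "__main__":
    rl_ids, sft_ids = split_rl_sft(range(1_000), rl_size=40)
    print(len(rl_ids), len(sft_ids))  # 40 960
```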

Method

The authors use a multi-stage framework that integrates data construction, annotation, selection, and model training into a single pipeline for multimodal reasoning. The pipeline begins with data construction, where raw data is standardized and cleaned to prepare it for downstream tasks. Next comes data annotation, in which a model performs thinking distillation and captioning to generate structured textual descriptions from the input. As shown in the figure below, the annotated data then goes through data selection, where template validation and redundancy checks filter it into high-quality, difficulty-balanced subsets.

The selected data is used to train a student model through supervised fine-tuning, where the model learns step-by-step reasoning from annotated data. This process is enhanced by reinforcement learning, which employs a reward mechanism to optimize the model’s performance on test tasks, using methods such as RLVR or GSPO. Concurrently, data mixing combines diverse problem types—such as math, science, puzzles, and general VQA—into a unified training set, with ratio balancing and OCR integration to ensure diversity and coverage across domains.
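One way to picture ratio balancing in data mixing is to turn target domain ratios into integer sample counts. The sketch below is only an illustration: the authors' mixing procedure is not specified in this text, the function name `mixture_counts` is hypothetical, and the example ratios are the domain shares reported in the Dataset section.

```python
def mixture_counts(total: int, ratios: dict[str, float]) -> dict[str, int]:
    """Split `total` samples across domains in proportion to `ratios`.

    Uses largest-remainder rounding so the counts sum exactly to `total`.
    """
    weight_sum = sum(ratios.values())
    if total < 0 or weight_sum <= 0:
        raise ValueError("total must be non-negative and ratios must sum to > 0")
    exact = {name: total * weight / weight_sum for name, weight in ratios.items()}
    counts = {name: int(value) for name, value in exact.items()}  # floor
    leftover = total - sum(counts.values())
    # Hand the remaining samples to the domains with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - counts[name], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts


if __name__ == "__main__":
    shares = {"math": 79.4, "science": 13.8, "puzzle_game": 4.6, "general_ocr": 2.2}
    print(mixture_counts(1_000, shares))
```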

The framework further incorporates a structured answer extraction process, guided by a set of prioritized rules that extract the final answer from a solution. These rules emphasize preserving LaTeX formatting, avoiding simplification, and handling multiple solutions or word-based answers appropriately. The extracted answer is then verified by comparing the generated response against a reference solution, focusing on semantic equivalence and factual correctness. The evaluation process assesses whether the generated answer conveys the same conclusion as the reference, with outputs formatted to include a concise analysis and a judgment of equivalence or difference.
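A rule-based stand-in for the extraction and verification steps might look as follows. This is a deliberately simplified sketch: the text describes a comparison based on semantic equivalence, whereas this version only compares lightly normalized strings. The function names and normalization rules are assumptions.

```python
import re
from typing import Optional

ANSWER_TAG = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def extract_answer(solution: str) -> Optional[str]:
    """Return the content of the last <answer> block, with LaTeX left verbatim."""
    matches = ANSWER_TAG.findall(solution)
    if not matches:
        return None
    return matches[-1].strip()


def normalize(answer: str) -> str:
    """Crude normalization: drop $ delimiters, trailing periods, whitespace, case."""
    text = answer.strip().strip("$").strip().rstrip(".")
    return re.sub(r"\s+", "", text).casefold()


def is_match(predicted: Optional[str], reference: str) -> bool:
    """String-level comparison; it does NOT detect that 0.5 equals \\frac{1}{2}."""
    if predicted is None:
        return False
    return normalize(predicted) == normalize(reference)
```

String matching of this kind will reject answers that are mathematically equal but written differently, which is presumably why the described evaluation judges semantic equivalence instead.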

Experiment

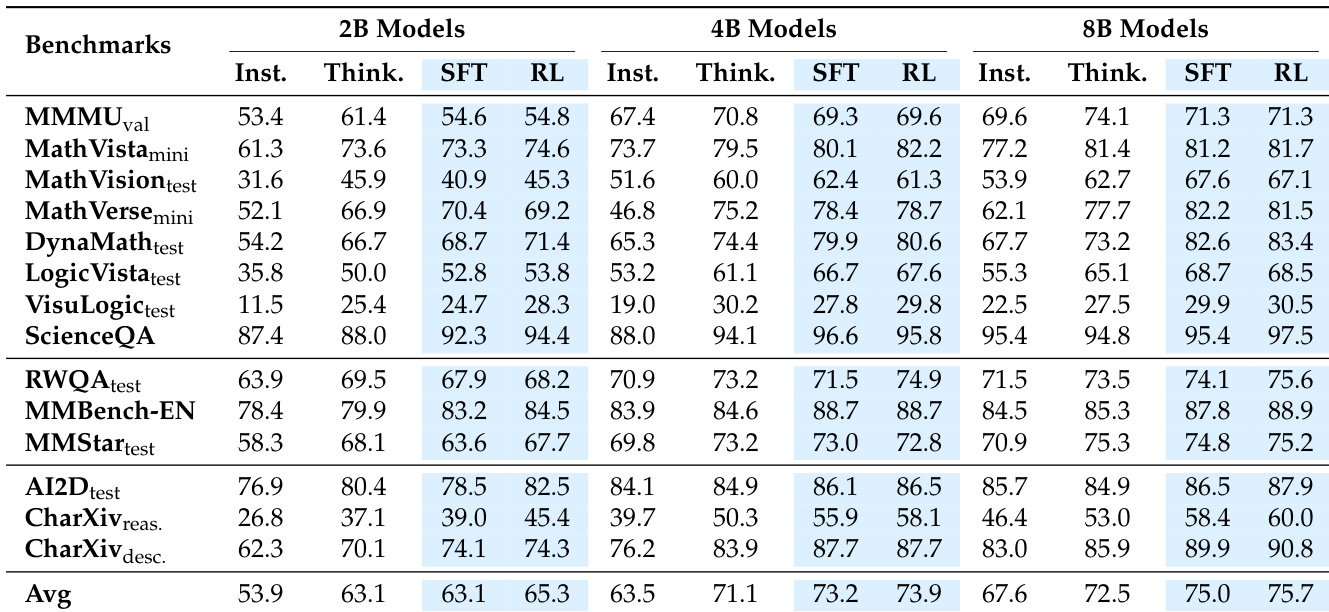

- Data-centric strategies enable strong scaling: MMFineReason models trained on 5.1B tokens surpass Qwen3-VL-30B-A3B-Thinking and approach GPT-5-mini-high performance.

- Difficulty-aware filtering yields 93% of full-dataset performance using only 7% of data (MMFineReason-123K), confirming high data efficiency.

- Reasoning-focused data mixing improves both general and reasoning tasks simultaneously, even with minimal general data.

- Ultra-high resolution (2048²) offers limited gains for reasoning tasks but remains beneficial for general vision benchmarks like RealWorldQA.

- Caption augmentation provides marginal or no benefit once reasoning chains are mature, as CoT already encodes sufficient visual context.

- MFR-8B achieves 83.4% on DynaMath, outperforming Qwen3-VL-32B-Thinking (82.0%) and Qwen3-VL-30B-A3B-Thinking (76.7%); on MathVerse, it reaches 81.5%, nearing Qwen3-VL-32B-Thinking (82.6%).

- MFR-8B outperforms HoneyBee-8B and OMR-7B by >30 points on MathVision (67.1% vs. 37.4%/36.6%), demonstrating superior reasoning chain quality.

- SFT drives major reasoning gains (e.g., +13.66% on MathVision for 8B), while RL enhances generalization on chart and real-world tasks (e.g., +4.04% on AI2D for 2B).

- MMFineReason-1.8M achieves 75.7 on benchmarks, outperforming HoneyBee-2.5M (65.1) and MMR1-1.6M (67.4), despite smaller data volume.

- MMFineReason-123K achieves 73.3, matching or exceeding full-scale baselines using only ~5% of their data volume.

- MFR-4B surpasses Qwen3-VL-8B-Thinking (73.9 vs. 72.5), proving data quality compensates for 2x smaller model size.

- MFR-8B sets new SOTA for its class (75.7), exceeding Qwen3-VL-30B-A3B-Thinking (74.5) and Gemini-2.5-Flash (75.0).

- ViRL39K achieves 98.9% of MMR1’s performance using only 2.4% of its data, confirming diminishing returns beyond curated data thresholds.

- WeMath2.0-SFT (814 samples) achieves 70.98%, rivaling datasets 1000x larger, validating high-density instruction as a capability trigger.

- Puzzle/game datasets (e.g., GameQA-140K, Raven) underperform due to mismatched reasoning styles; geometry-only data (Geo3K, Geo170K) shows limited generalization.

- Broad disciplinary coverage (e.g., BMMR) outperforms narrow domains (GameQA-140K), highlighting diversity as a stronger driver than task depth.
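The data-efficiency percentages quoted in the list above can be checked with simple arithmetic. The sketch below assumes the dataset sizes implied by their names (HoneyBee-2.5M as 2.5M samples, MMR1-1.6M as 1.6M, ViRL39K as 39K) and uses the 1.77M post-filtering size for MMFineReason; these round figures are assumptions rather than exact counts.

```python
def percent(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100.0 * part / whole


if __name__ == "__main__":
    # 123K subset relative to the full 1.77M MMFineReason set (the "7%" claim).
    print(round(percent(123_000, 1_770_000), 1))  # 6.9
    # 123K subset relative to HoneyBee-2.5M (the "~5%" claim).
    print(round(percent(123_000, 2_500_000), 1))  # 4.9
    # ViRL39K relative to MMR1-1.6M (the "2.4%" claim).
    print(round(percent(39_000, 1_600_000), 1))   # 2.4
```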

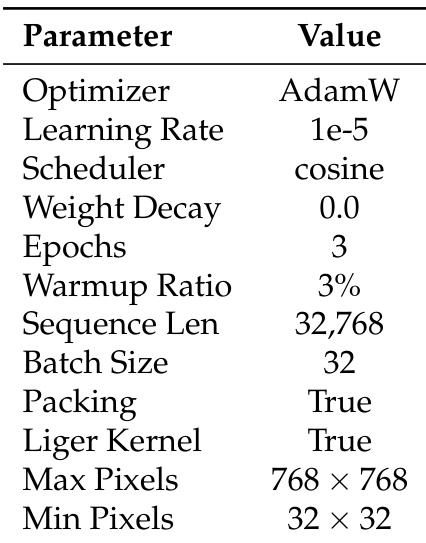

The authors use AdamW with a cosine scheduler and a learning rate of 1e-5 for supervised fine-tuning, training for 3 epochs with a sequence packing length of 32,768 and enabling the Liger Kernel. The model processes images resized to a maximum of 768 × 768 pixels during training, with a minimum resolution of 32 × 32 pixels.
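For readers unfamiliar with cosine scheduling, the learning-rate curve can be written out directly. This is a generic sketch of cosine decay from the stated peak of 1e-5. The text does not mention warmup or a minimum learning rate, so none is modeled here and decay to zero is an assumption.

```python
import math


def cosine_lr(step: int, total_steps: int, peak_lr: float = 1e-5, min_lr: float = 0.0) -> float:
    """Cosine decay from peak_lr at step 0 to min_lr at total_steps.

    Assumptions: no warmup phase, and min_lr defaults to 0.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    progress = min(max(step, 0), total_steps) / total_steps
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for step in (0, 250, 500, 750, 1000):
        print(step, f"{cosine_lr(step, 1000):.2e}")
```

Halfway through training the rate is half the peak (5e-6), and it decays fastest around that midpoint.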

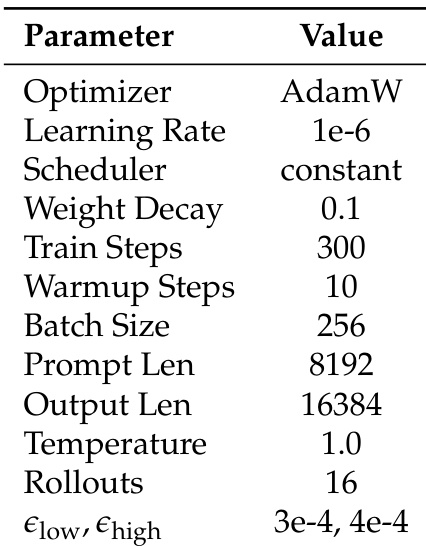

The authors use a constant learning rate scheduler and AdamW optimizer for the reinforcement learning stage, with 300 training steps and 16 rollouts per prompt. The model is trained with a temperature of 1.0 and a prompt length of 8192 tokens, using KL-divergence penalties set to 3e-4 and 4e-4.

The authors train the MMFineReason models in two stages, supervised fine-tuning (SFT) followed by reinforcement learning (RL), and evaluate them across a range of benchmarks. Results show that SFT drives the major gains on reasoning tasks, while RL improves generalization on general-understanding and chart-reasoning benchmarks. The 8B model achieves state-of-the-art results for its size class and outperforms larger open-source and closed-source models.

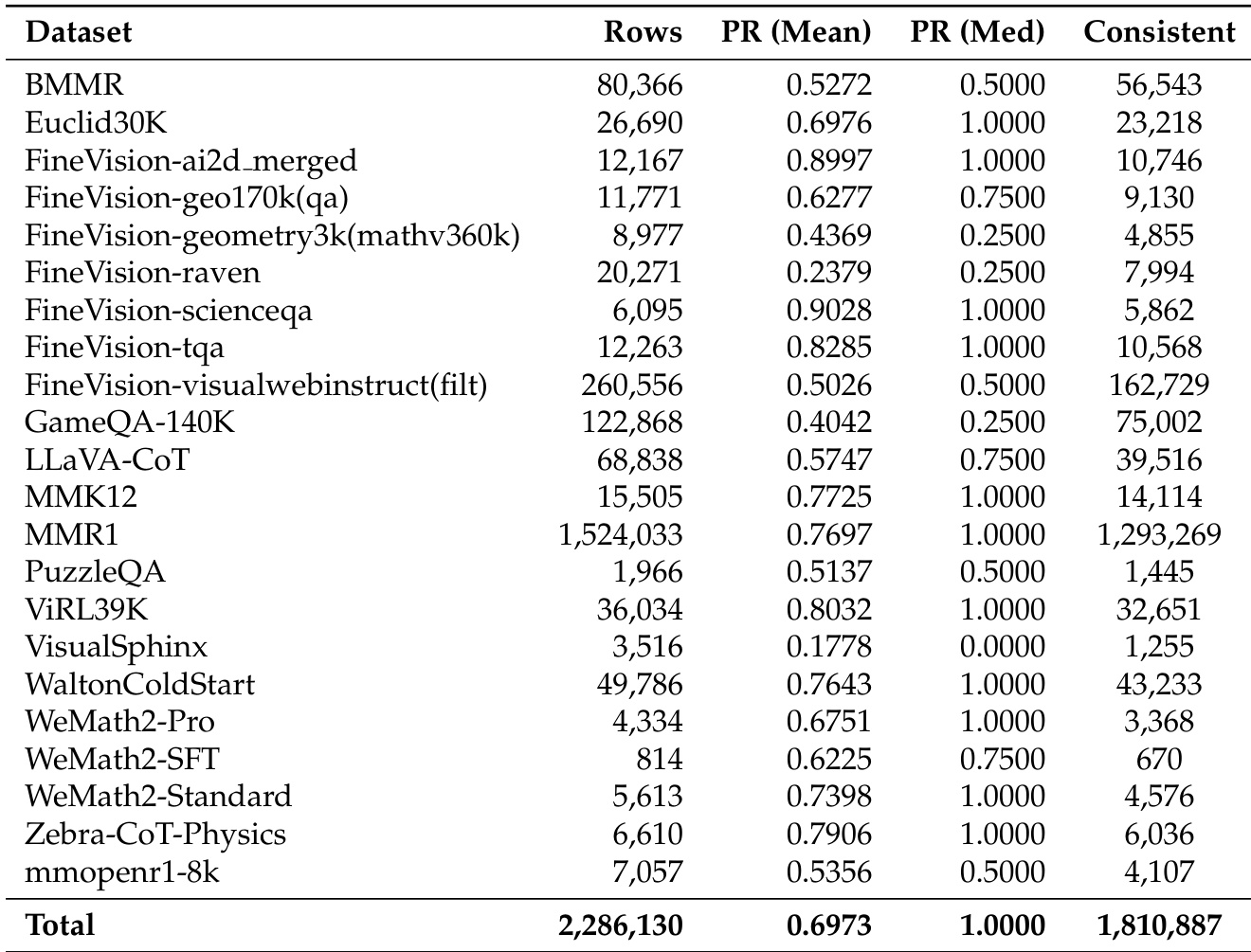

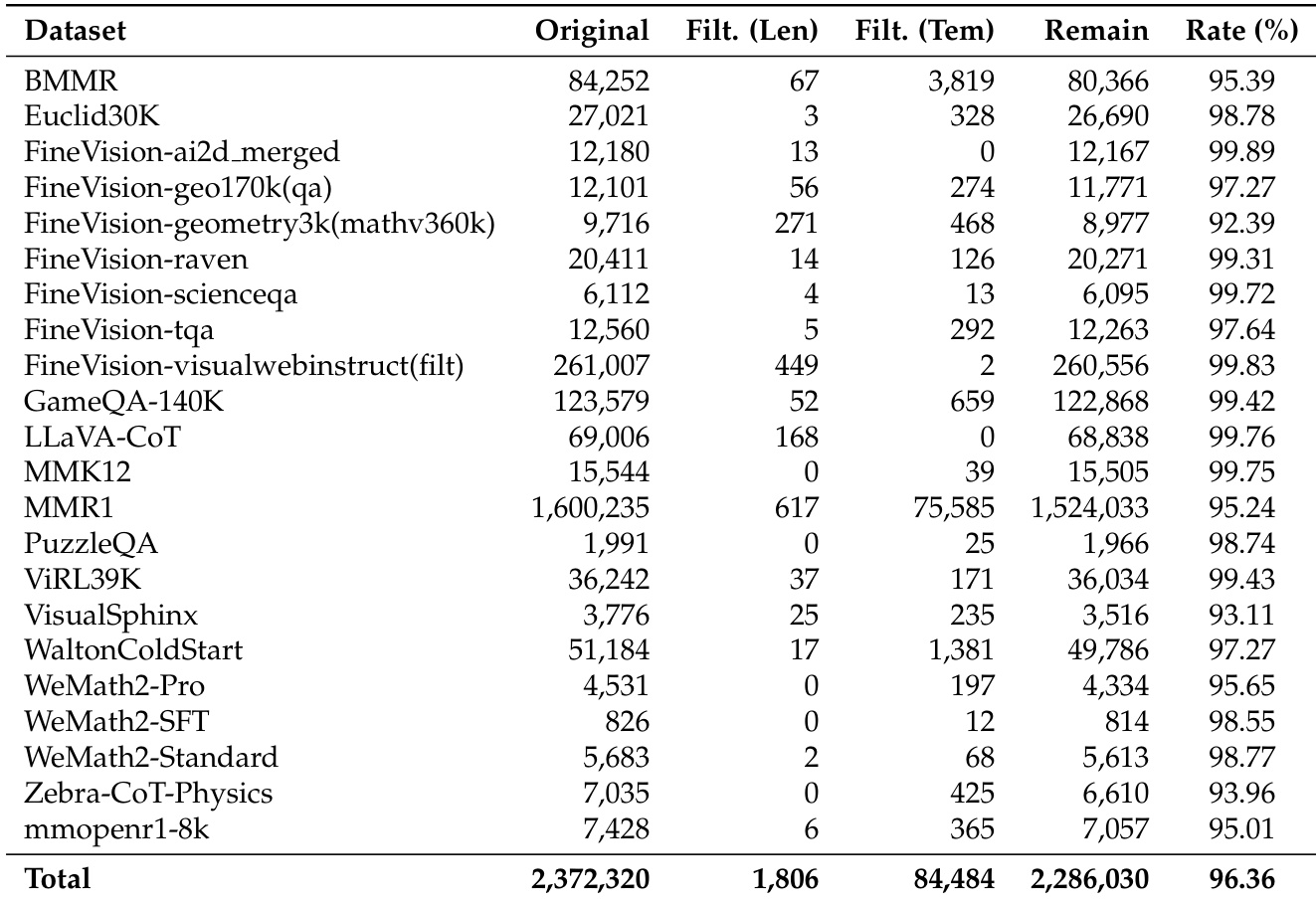

The authors use the table to quantify the impact of their filtering process on dataset size and quality: filtering reduces the total from 2,372,320 samples to roughly 1.8M, and the table reports a retention rate of 96.36%. The largest reductions in sample count occur in datasets such as MMR1 and FineVision-visualwebinstruct, indicating that the filtering strategy removes low-quality or redundant data while preserving high-quality reasoning data.

The authors use the table to compare the performance of various datasets on reasoning tasks, with metrics including mean pass rate (PR), median pass rate, and consistency. Results show that datasets like FineVision-ai2d_merged and FineVision-scienceqa achieve high mean pass rates, while others such as FineVision-geometry3k and FineVision-raven have significantly lower performance, indicating substantial variation in difficulty across datasets.