BayesianVLA: Bayesian Decomposition of Vision-Language-Action Models via Latent Action Queries

Shijie Lian, Bin Yu, Xiaopeng Lin, Laurence T. Yang, Zhaolong Shen, Changti Wu, Yuzhuo Miao, Cong Huang, Kai Chen

Abstract

Vision-Language-Action (VLA) models have shown great promise for robotic manipulation, but they often struggle to generalize to new instructions or to complex multi-task scenarios. We identify a critical pathology in current training paradigms: goal-driven data collection introduces a bias into the datasets. In such datasets, language instructions are highly predictable from visual observations alone, so the conditional mutual information between instructions and actions vanishes, a phenomenon we call "Information Collapse". As a result, models degenerate into vision-only policies that ignore linguistic constraints and fail under out-of-distribution (OOD) conditions. To address this, we propose BayesianVLA, a novel framework that enforces instruction following through Bayesian decomposition. By introducing learnable Latent Action Queries, we build a dual-branch architecture that estimates both a vision-only prior p(a∣v) and a language-conditioned posterior π(a∣v,ℓ). We then optimize the policy to maximize the conditional Pointwise Mutual Information (PMI) between actions and instructions. This objective effectively penalizes the vision shortcut and rewards actions that explicitly explain the language command. Without requiring new data, BayesianVLA substantially improves generalization. Extensive experiments on SimplerEnv and RoboCasa show substantial gains, including an 11.3% improvement on the OOD challenge of the SimplerEnv benchmark, validating our approach's ability to robustly ground language in action.

One-sentence Summary

Researchers from HUST, ZGCA, and collaborators propose BayesianVLA, a framework that combats instruction-ignoring in VLA models via Bayesian decomposition and Latent Action Queries, boosting OOD performance on SimplerEnv by 11.3 percentage points without new data.

Key Contributions

- We identify "Information Collapse" in VLA training, where goal-driven datasets cause language instructions to become predictable from visuals alone, leading models to ignore language and fail in OOD settings.

- We introduce BayesianVLA, a dual-branch framework using Latent Action Queries to separately model vision-only priors and language-conditioned posteriors, optimized via conditional PMI to enforce explicit instruction grounding.

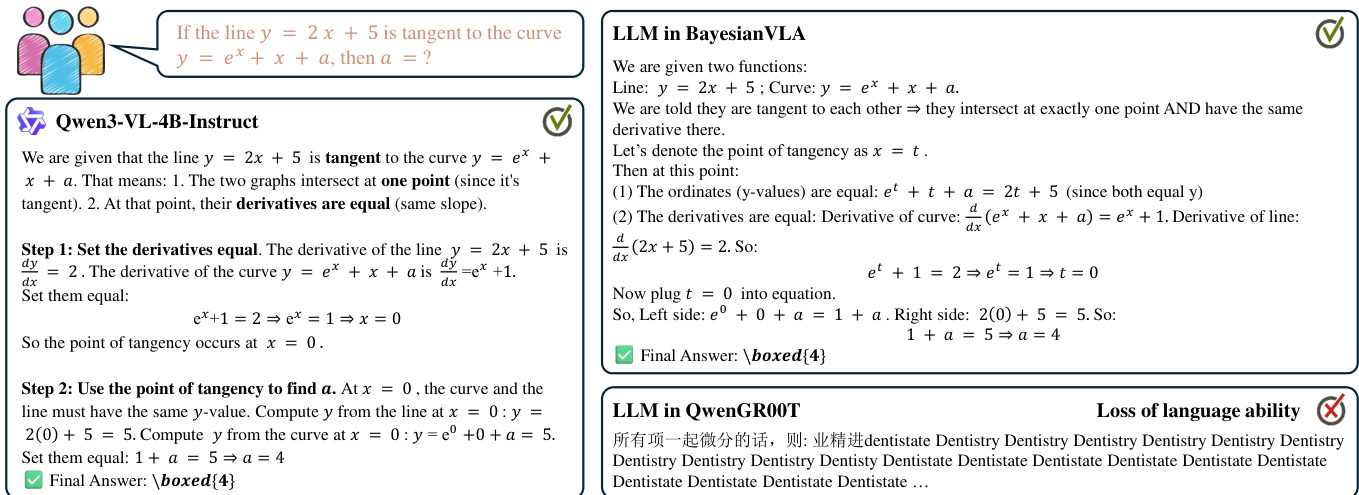

- BayesianVLA achieves state-of-the-art results without new data, including an 11.3% OOD improvement on SimplerEnv and preserves text-only conversational abilities of the backbone VLM.

Introduction

The authors study Vision-Language-Action (VLA) models, which enable robots to follow natural language instructions, and identify a critical flaw: in goal-driven datasets, visual observations alone can predict the instruction, so models learn to ignore language and rely on “vision shortcuts.” This leads to poor generalization in out-of-distribution or ambiguous scenarios. To fix this, they introduce BayesianVLA, which uses Bayesian decomposition and learnable Latent Action Queries to train a dual-branch policy, with one branch estimating a vision-only prior and the other a language-conditioned posterior, optimized to maximize the conditional pointwise mutual information between actions and instructions. The method requires no new data, significantly improves OOD performance, and preserves the backbone's core language understanding, making it more robust and reliable for real-world deployment.
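To make the collapse argument concrete, the sketch below computes the conditional mutual information $I(\ell; a \mid v)$ for two hypothetical toy datasets over discrete scenes, instructions, and actions. It is an illustration under simplifying assumptions (tiny discrete spaces, an expert action fully determined by the task), not the paper's analysis code; the function name `conditional_mutual_information` and both datasets are invented for this example.

```python
import math
from collections import defaultdict


def conditional_mutual_information(joint):
    """Return I(L; A | V) in bits for a discrete joint distribution.

    `joint` maps (v, instr, a) tuples to probabilities that sum to 1.
    """
    p_v = defaultdict(float)
    p_vl = defaultdict(float)
    p_va = defaultdict(float)
    for (v, instr, a), p in joint.items():
        p_v[v] += p
        p_vl[(v, instr)] += p
        p_va[(v, a)] += p
    cmi = 0.0
    for (v, instr, a), p in joint.items():
        if p > 0:
            # Ratio p(v, l, a) p(v) / (p(v, l) p(v, a)) is 1 when L and A
            # are conditionally independent given V.
            cmi += p * math.log2(p * p_v[v] / (p_vl[(v, instr)] * p_va[(v, a)]))
    return cmi


# Hypothetical goal-driven dataset: every scene v admits exactly one task
# (instr = v), and the expert action simply realizes that task (a = instr).
goal_driven = {(0, 0, 0): 0.5, (1, 1, 1): 0.5}

# Hypothetical ambiguous dataset: a single scene supports two equally likely
# tasks, so the instruction is needed to choose the action.
ambiguous = {(0, 0, 0): 0.5, (0, 1, 1): 0.5}

print(conditional_mutual_information(goal_driven))
print(conditional_mutual_information(ambiguous))
```

The first call returns 0 bits: once $v$ is known, the instruction adds nothing about the action, so a vision-only policy fits the data perfectly. The second returns 1 bit, the kind of situation where one scene maps to several tasks and language is indispensable.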

Method

The authors leverage a Bayesian framework to address the vision shortcut problem in Vision-Language-Action (VLA) models, where the conditional mutual information between instructions and actions collapses due to deterministic mappings from vision to language in goal-driven datasets. To counteract this, they propose maximizing the conditional Pointwise Mutual Information (PMI) between actions and instructions, which is formalized as the Log-Likelihood Ratio (LLR) between the posterior policy and the vision-only prior. This objective, derived from information-theoretic principles, encourages the model to learn action representations that carry instruction-specific semantics not predictable from vision alone.
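The step from conditional PMI to a log-likelihood ratio follows from Bayes' rule. Writing the language-conditioned posterior as $\pi(a \mid v, \ell)$ and the vision-only prior as $p(a \mid v)$, and treating both as the true conditionals of the data distribution (an idealization), one has:

$$
\pi(a \mid v, \ell) = \frac{p(\ell \mid a, v)\, p(a \mid v)}{p(\ell \mid v)}
\quad\Longrightarrow\quad
\operatorname{PMI}(a; \ell \mid v) = \log \frac{\pi(a \mid v, \ell)}{p(a \mid v)} = \log p(\ell \mid a, v) - \log p(\ell \mid v),
$$

$$
I(a; \ell \mid v) = \mathbb{E}_{(v, \ell, a) \sim p}\big[\operatorname{PMI}(a; \ell \mid v)\big].
$$

If the instruction is a deterministic function of the scene, then $p(\ell \mid v) = p(\ell \mid a, v) = 1$ for every observed triple, so the PMI, and hence the conditional mutual information, is zero; this is the collapse described above. The right-hand form, a difference of instruction log-likelihoods, has the same shape as the LLR loss used in training, with the query features standing in for the action $a$; this correspondence is our reading of the objective rather than a statement taken from the paper.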

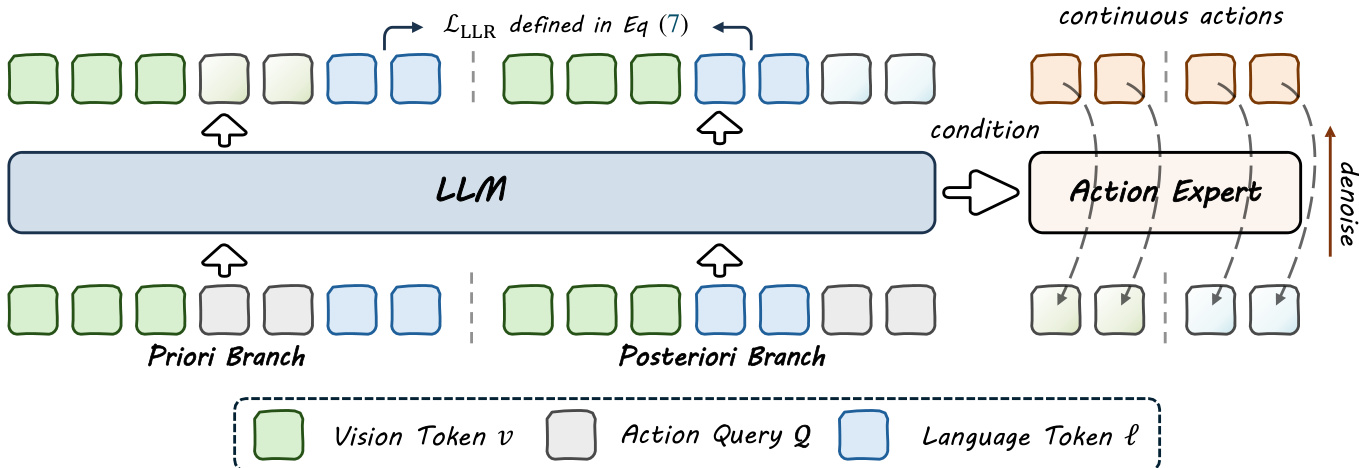

As illustrated in the framework figure above, BayesianVLA is trained with a dual-branch strategy in which both branches share a single Large Language Model (LLM) backbone but use distinct input structures. The core innovation is the use of Latent Action Queries: learnable tokens appended to the input sequence that serve as a dedicated bottleneck interface between the LLM and the continuous action head. Because decoder-only models apply a causal attention mask, the position of the queries in the sequence determines which parts of the input they can attend to, giving precise control over the information flow.
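The routing effect of query placement can be checked with a plain causal mask. The sketch below uses NumPy and hypothetical segment lengths to list which input segments the query tokens can see under the two orderings used by the branches. Because a causal mask is applied in every layer of a decoder-only model, a position's hidden state never depends on tokens that come after it, so this single-mask check carries over to the full network.

```python
import numpy as np


def causal_mask(n):
    """mask[i, j] is True when token i may attend to token j (j <= i)."""
    return np.tril(np.ones((n, n), dtype=bool))


def visible_segments_for_queries(layout, lengths):
    """Return the names of the segments that the query tokens can attend to.

    layout: segment names in sequence order, e.g. ["v", "Q", "l"].
    lengths: number of tokens in each segment (toy values).
    """
    seg_ids = np.concatenate([np.full(lengths[name], i) for i, name in enumerate(layout)])
    mask = causal_mask(len(seg_ids))
    query_rows = seg_ids == layout.index("Q")
    # A column is visible if at least one query token may attend to it.
    visible_cols = mask[query_rows].any(axis=0)
    return {layout[i] for i in np.unique(seg_ids[visible_cols])}


lengths = {"v": 4, "Q": 3, "l": 5}  # hypothetical token counts
print(sorted(visible_segments_for_queries(["v", "Q", "l"], lengths)))  # Priori
print(sorted(visible_segments_for_queries(["v", "l", "Q"], lengths)))  # Posteriori
```

The Priori layout prints `['Q', 'v']` and the Posteriori layout `['Q', 'l', 'v']`: moving the instruction after the queries is enough to hide it from them, with no extra masking logic.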

In the Priori Branch, the input sequence is structured as $[v, Q, \ell]$, where $v$ is the visual observation, $Q$ is the set of action queries, and $\ell$ is the language instruction. Due to the causal attention mask, the queries can attend to the visual input but not to the language instruction, so their hidden states $H_Q^{\text{prior}}$ encode only vision-dependent information. These features are used to predict the action $a$ via a flow-matching loss $\mathcal{L}_{\text{prior}}$, effectively learning the dataset's inherent action bias $p(a \mid v)$.

In the Posteriori Branch, the input sequence is arranged as $[v, \ell, Q]$, allowing the queries to attend to both the visual and language inputs. The resulting hidden states $H_Q^{\text{post}}$ encode the full vision-language context and are used to predict the expert action $a$ with the main flow-matching loss $\mathcal{L}_{\text{main}}$. The two branches are trained simultaneously and share the same LLM weights.
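The same property can be seen numerically. In the toy example below, a single randomly initialized causal self-attention layer in NumPy stands in for the shared LLM backbone (an assumption made purely for illustration; the real backbone is a full VLM, and all names here are hypothetical). Swapping in a different instruction leaves the Priori-branch query states unchanged but alters the Posteriori-branch query states, even though both branches use the same weights.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8  # toy hidden size (hypothetical)

# One set of weights shared by both branches, standing in for the shared backbone.
W_q, W_k, W_val = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))


def causal_self_attention(x):
    """Single-head causal self-attention over a (T, d) sequence."""
    T = x.shape[0]
    q, k, val = x @ W_q, x @ W_k, x @ W_val
    scores = q @ k.T / np.sqrt(d)
    allowed = np.tril(np.ones((T, T), dtype=bool))  # token i sees tokens <= i
    scores = np.where(allowed, scores, -np.inf)
    weights = np.exp(scores - scores.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ val


def query_states(vis, queries, lang, branch):
    """Hidden states at the query positions for the given branch layout."""
    if branch == "prior":  # [v, Q, l]
        seq, start = np.vstack([vis, queries, lang]), len(vis)
    else:  # "post": [v, l, Q]
        seq, start = np.vstack([vis, lang, queries]), len(vis) + len(lang)
    return causal_self_attention(seq)[start:start + len(queries)]


vis = rng.standard_normal((4, d))      # visual tokens
queries = rng.standard_normal((3, d))  # stand-in for learnable Latent Action Queries
lang_a = rng.standard_normal((5, d))   # two different instructions
lang_b = rng.standard_normal((5, d))

same_prior = np.allclose(query_states(vis, queries, lang_a, "prior"),
                         query_states(vis, queries, lang_b, "prior"))
same_post = np.allclose(query_states(vis, queries, lang_a, "post"),
                        query_states(vis, queries, lang_b, "post"))
print(same_prior, same_post)  # True False
```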

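To explicitly maximize the LLR objective, the framework computes the difference in log-probabilities of the language tokens between the two branches. Because the instruction follows the queries in the Priori Branch but precedes them in the Posteriori Branch, the former yields $\log p(\ell \mid v, H_Q^{\text{prior}})$ and the latter $\log p(\ell \mid v)$. The LLR loss is defined as $\mathcal{L}_{\text{LLR}} = \log p(\ell \mid v, H_Q^{\text{prior}}) - \operatorname{sg}\big(\log p(\ell \mid v)\big)$, where the stop-gradient operator $\operatorname{sg}(\cdot)$ prevents the model from degrading the baseline language model capability. Maximizing this term forces the action representations to carry information that explains the instruction.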
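A minimal forward-pass sketch of $\mathcal{L}_{\text{LLR}}$, assuming the next-token logits at the instruction positions are already available from both branches: under the causal mask, the Priori-branch logits condition on $v$ and $H_Q^{\text{prior}}$, while the Posteriori-branch logits at those positions condition on $v$ only. The function names and toy logits are hypothetical. NumPy has no autograd, so the stop-gradient appears only as a comment; in PyTorch it would be `.detach()`, and in JAX `jax.lax.stop_gradient`.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def instruction_log_prob(logits, token_ids):
    """Sum over instruction tokens of log p(token_t | preceding tokens).

    logits: (T, vocab) next-token logits at the T instruction positions.
    token_ids: (T,) ground-truth instruction token ids.
    """
    logp = log_softmax(logits)
    return logp[np.arange(len(token_ids)), token_ids].sum()


def llr_term(prior_branch_logits, posterior_branch_logits, token_ids):
    """L_LLR = log p(l | v, H_Q^prior) - sg(log p(l | v)).

    In an autodiff framework the second term would be detached, so the model
    is not pushed to lower log p(l | v), i.e. to degrade its baseline language
    modeling; in this NumPy forward pass it is simply a constant.
    """
    with_queries = instruction_log_prob(prior_branch_logits, token_ids)
    vision_only = instruction_log_prob(posterior_branch_logits, token_ids)
    return with_queries - vision_only


# Toy example: vocabulary of 3 tokens, instruction of 2 tokens.
tokens = np.array([0, 2])
prior_logits = np.array([[2.0, 0.0, 0.0], [0.0, 0.0, 2.0]])  # queries help
posterior_logits = np.zeros((2, 3))                           # uniform guess
print(llr_term(prior_logits, posterior_logits, tokens))  # positive
```

Because the total objective subtracts $\beta\,\mathcal{L}_{\text{LLR}}$, minimizing it pushes this difference up, i.e. rewards query features that make the instruction more predictable than vision alone does.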

The total training objective combines the action prediction losses from both branches with the LLR regularization term: $\mathcal{L}_{\text{total}} = (1-\lambda)\,\mathcal{L}_{\text{FM}}(\psi; H_Q^{\text{post}}) + \lambda\,\mathcal{L}_{\text{FM}}(\psi; H_Q^{\text{prior}}) - \beta\,\mathcal{L}_{\text{LLR}}$. The action decoder is trained with the Rectified Flow Matching objective, in which the Diffusion Transformer predicts the velocity field of action trajectories conditioned on the query features. At inference, only the Posteriori Branch is executed to generate actions, so there is no additional computational overhead compared to standard VLA baselines.
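The sketch below illustrates the pieces of this paragraph in NumPy: a rectified flow matching loss, the weighted total objective, and Euler sampling at inference. It assumes the common straight-path convention $x_t = (1 - t)\,\epsilon + t\,a$ with target velocity $a - \epsilon$, where $\epsilon$ is Gaussian noise; the paper's exact time convention, number of integration steps, and DiT architecture are not reproduced here, and every function name is hypothetical. `velocity_fn` stands in for the Diffusion Transformer conditioned on the query features.

```python
import numpy as np


def rectified_flow_loss(velocity_fn, actions, cond, rng):
    """Rectified flow matching loss for a batch of action chunks.

    Straight path from noise (t = 0) to data (t = 1):
        x_t = (1 - t) * noise + t * actions,  target velocity = actions - noise.
    """
    noise = rng.standard_normal(actions.shape)
    # One time per sample, broadcast over the remaining action dimensions.
    t = rng.uniform(size=(actions.shape[0],) + (1,) * (actions.ndim - 1))
    x_t = (1.0 - t) * noise + t * actions
    target = actions - noise
    pred = velocity_fn(x_t, t, cond)
    return np.mean((pred - target) ** 2)


def total_loss(l_fm_post, l_fm_prior, l_llr, lam, beta):
    """(1 - lam) * L_FM(post) + lam * L_FM(prior) - beta * L_LLR."""
    return (1.0 - lam) * l_fm_post + lam * l_fm_prior - beta * l_llr


def sample_actions(velocity_fn, cond, shape, rng, steps=10):
    """Euler integration of dx/dt = velocity_fn(x, t, cond) from t = 0 to t = 1."""
    x = rng.standard_normal(shape)
    dt = 1.0 / steps
    for i in range(steps):
        t = np.full((shape[0],) + (1,) * (len(shape) - 1), i * dt)
        x = x + dt * velocity_fn(x, t, cond)
    return x


# Sanity check with an "oracle" velocity field that knows the target actions
# (passed via `cond`): it points straight from x_t to the target.
def oracle_velocity(x, t, cond):
    return (cond - x) / (1.0 - t)


rng = np.random.default_rng(0)
target_actions = rng.standard_normal((2, 4, 7))  # hypothetical: batch, horizon, DoF
print(rectified_flow_loss(oracle_velocity, target_actions, target_actions, rng))
print(np.allclose(sample_actions(oracle_velocity, target_actions,
                                 target_actions.shape, rng), target_actions))
print(total_loss(0.5, 0.7, 1.2, lam=0.3, beta=0.1))
```

With the oracle, the loss is zero up to rounding and the Euler trajectory lands on the target actions, because the straight-line path keeps the velocity constant along each trajectory. A learned DiT only approximates this field, so in practice the number of integration steps matters.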

Experiment

- Pilot experiments reveal that standard VLA models trained on goal-driven datasets often learn vision-only policies p(a|v) instead of true language-conditioned policies π(a|v,ℓ).

- On RoboCasa (24 tasks), vision-only models achieve 44.6% success vs. 47.8% for language-conditioned baselines, showing that the baselines gain little from instructions because the visual scene is strongly correlated with the task.

- On LIBERO Goal, where a single scene maps to multiple tasks, vision-only models drop to 9.8% success (vs. 98.0% for the baseline), exposing their inability to resolve the ambiguity without language.

- On BridgeDataV2, vision-only models reach a training loss close to that of full models (0.13 vs. 0.08) but fail catastrophically (near 0%) on OOD SimplerEnv, confirming overfitting to visual shortcuts.

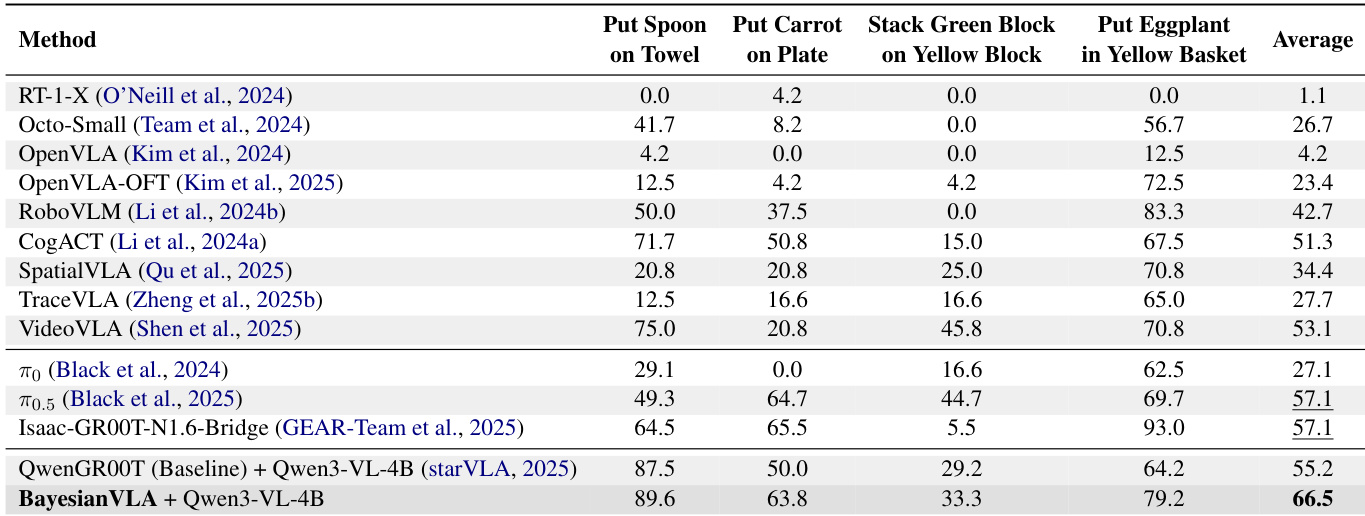

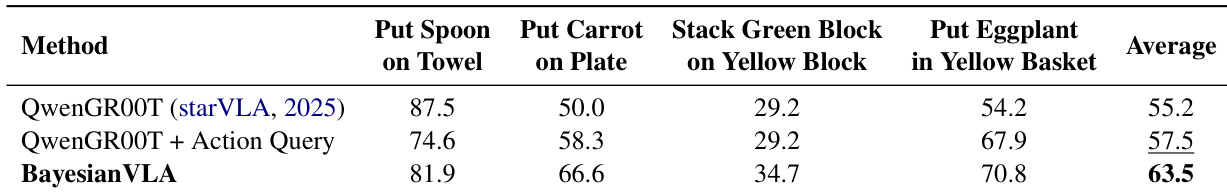

- BayesianVLA achieves 66.5% average success on SimplerEnv (vs. 55.2% for the baseline), a +11.3-point absolute gain, excelling in tasks like “Put Carrot on Plate” (+13.6%) and “Put Eggplant in Yellow Basket” (+15.0%).

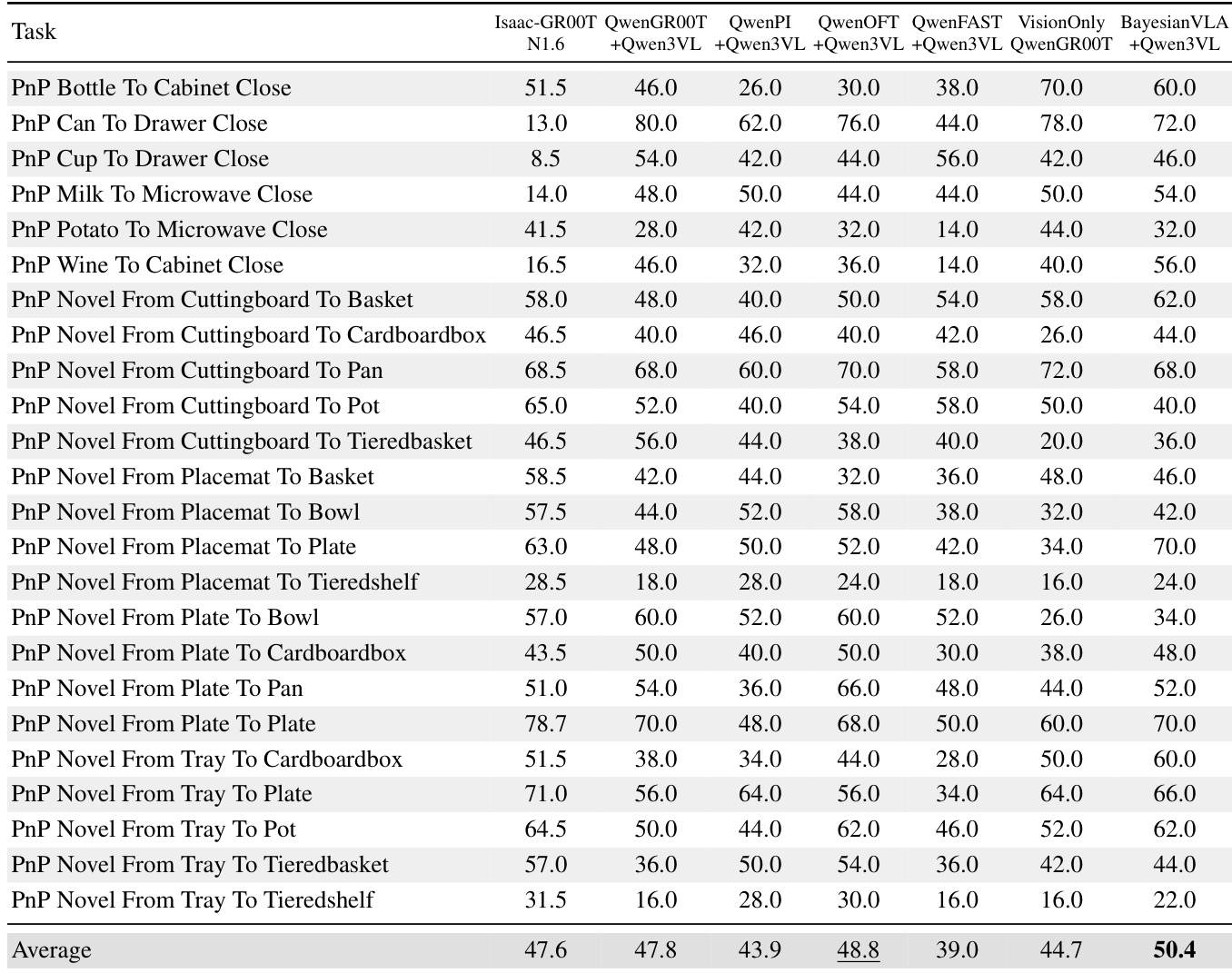

- On RoboCasa, BayesianVLA reaches 50.4% avg success (vs. 47.8% baseline), outperforming all competitors and notably improving on ambiguous tasks like “PnP Novel From Placemat To Plate” (70.0% vs. 34.0% vision-only).

- Ablations confirm that the Bayesian decomposition drives the core gains (+6.0% over the +Action Query variant alone), while latent action queries improve efficiency by reducing the DiT's complexity from O(N²) to O(K²).

- Future work includes scaling to larger models (e.g., Qwen3VL-8B), real-world testing, and expanding to RoboTwin/LIBERO benchmarks.
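Because the gains above are differences between success rates, it helps to separate absolute (percentage-point) from relative improvement. The short calculation below uses only the SimplerEnv and RoboCasa averages reported in this list; the helper name `gains` is hypothetical.

```python
def gains(baseline, ours):
    """Return (absolute gain in points, relative gain in %) between two success rates."""
    absolute = ours - baseline
    relative = 100.0 * absolute / baseline
    return absolute, relative


# Average success rates (%) reported above: (baseline, BayesianVLA).
reported = {"SimplerEnv": (55.2, 66.5), "RoboCasa": (47.8, 50.4)}
for bench, (base, ours) in reported.items():
    absolute, relative = gains(base, ours)
    print(f"{bench}: +{absolute:.1f} points absolute, +{relative:.1f}% relative")
```

On SimplerEnv, the reported 11.3-point gain corresponds to roughly a 20% relative improvement over the 55.2% baseline.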

The authors use the SimplerEnv benchmark to evaluate BayesianVLA, which is trained on BridgeDataV2 and Fractal datasets. Results show that BayesianVLA achieves a state-of-the-art average success rate of 66.5%, significantly outperforming the baseline QwenGR00T (55.2%) and other strong competitors, with notable improvements in tasks requiring precise object manipulation.

The authors use the SimplerEnv benchmark to evaluate the performance of BayesianVLA against baseline models, with results showing that BayesianVLA achieves a state-of-the-art average success rate of 63.5%, outperforming the QwenGR00T baseline by 8.3 percentage points. This improvement is particularly notable in tasks requiring precise object manipulation, such as "Put Carrot on Plate" and "Put Eggplant in Yellow Basket," where BayesianVLA demonstrates significant gains over the baseline. The results confirm that the proposed Bayesian decomposition effectively mitigates the vision shortcut by encouraging the model to rely on language instructions rather than visual cues alone.

The authors use the RoboCasa benchmark to evaluate VLA models, where the VisionOnly baseline achieves a high success rate of 44.7%, indicating that the model can perform well without language instructions due to visual shortcuts. BayesianVLA surpasses all baselines, achieving an average success rate of 50.4% and demonstrating significant improvements in tasks where the vision-only policy fails, such as "PnP Novel From Placemat To Plate," confirming that the method effectively mitigates the vision shortcut by leveraging language for disambiguation.