Command Palette

Search for a command to run...

Le piège de la flexibilité : pourquoi les limites d’ordre arbitraire restreignent le potentiel de raisonnement des modèles linguistiques à diffusion

Le piège de la flexibilité : pourquoi les limites d’ordre arbitraire restreignent le potentiel de raisonnement des modèles linguistiques à diffusion

Résumé

La diffusion des grands modèles linguistiques (dLLMs) rompt avec la contrainte rigide d’ordre gauche à droite des LLMs traditionnels, permettant ainsi la génération de tokens selon des ordres arbitraires. Intuitivement, cette flexibilité implique un espace de solutions strictement plus vaste que la trajectoire autoregressive fixe, ouvrant théoriquement un potentiel de raisonnement supérieur pour des tâches générales telles que les mathématiques ou la programmation. En conséquence, de nombreuses recherches ont exploité l’apprentissage par renforcement (RL) afin d’extraire les capacités de raisonnement des dLLMs. Dans cet article, nous révétons une réalité contre-intuitive : la génération selon un ordre arbitraire, dans sa forme actuelle, réduit plutôt qu’elle n’élargit la frontière de raisonnement des dLLMs. Nous observons que les dLLMs ont tendance à exploiter cette flexibilité pour contourner les tokens à forte incertitude, qui sont pourtant essentiels à l’exploration, entraînant ainsi un effondrement prématuré de l’espace de solutions. Cette observation remet en question l’hypothèse fondamentale des approches de RL existantes pour les dLLMs, où des complexités considérables — telles que la gestion de trajectoires combinatoires ou des probabilités intractables — sont fréquemment consacrées à préserver cette flexibilité. Nous démontrons qu’un raisonnement efficace est mieux obtenu en renonçant intentionnellement à l’ordre arbitraire et en appliquant à la place une optimisation de politique standard, appelée Group Relative Policy Optimization (GRPO). Notre approche, JustGRPO, est minimaliste mais étonnamment efficace (par exemple, 89,1 % de précision sur GSM8K), tout en conservant pleinement la capacité de décodage parallèle des dLLMs. Page du projet : https://nzl-thu.github.io/the-flexibility-trap

One-sentence Summary

Researchers from Tsinghua University and Alibaba Group reveal that arbitrary-order generation in diffusion LLMs (dLLMs) paradoxically narrows reasoning potential by bypassing high-uncertainty logical tokens. They propose JustGRPO, a minimalist RL method using standard autoregressive training that boosts performance (e.g., 89.1% on GSM8K) while preserving parallel decoding.

Key Contributions

- Diffusion LLMs’ arbitrary-order generation, while theoretically expansive, paradoxically narrows reasoning potential by letting models bypass high-uncertainty tokens that are critical for exploring diverse solution paths, as measured by Pass@k on benchmarks like GSM8K and MATH.

- The paper reveals that this “flexibility trap” stems from entropy degradation: models prioritize low-entropy tokens first, collapsing branching reasoning paths before they can be explored, unlike autoregressive decoding which forces confrontation with uncertainty at critical decision points.

- To counter this, the authors propose JustGRPO — a minimalist method that trains dLLMs under standard autoregressive order using Group Relative Policy Optimization — achieving strong results (e.g., 89.1% on GSM8K) while preserving parallel decoding at inference, without complex diffusion-specific RL adaptations.

Introduction

The authors leverage diffusion language models (dLLMs), which theoretically support arbitrary token generation order, to challenge the assumption that this flexibility enhances reasoning. Prior work assumed arbitrary-order decoding could unlock richer reasoning paths, leading to complex reinforcement learning (RL) methods designed to handle combinatorial trajectories and intractable likelihoods — but these approaches often rely on unstable approximations. The authors reveal that, counterintuitively, arbitrary order causes models to bypass high-uncertainty tokens critical for exploring diverse reasoning paths, collapsing the solution space prematurely. Their main contribution, JustGRPO, discards arbitrary-order complexity and trains dLLMs using standard autoregressive RL (Group Relative Policy Optimization), achieving strong results (e.g., 89.1% on GSM8K) while preserving parallel decoding at inference.

Dataset

- The authors use the official training splits of mathematical reasoning datasets, adhering to standard protocols from prior work (Zhao et al., 2025; Ou et al., 2025).

- For code generation, they adopt AceCoder-87K (Zeng et al., 2025), then filter it using the DiffuCoder pipeline (Gong et al., 2025) to retain 21K challenging samples that include verifiable unit tests.

- The data is used directly as training input without further mixture ratios or cropping; no metadata construction or additional preprocessing is mentioned beyond the described filtering.

Method

The authors leverage a diffusion-based framework for language modeling, where the core mechanism operates through a masked diffusion process. The model, referred to as a Masked Diffusion Model (MDM), generates sequences by iteratively denoising a partially masked input state xt, which is initialized from a fully masked sequence. This process is governed by a continuous time variable t∈[0,1], representing the masking ratio. In the forward process, each token in the clean sequence x0 is independently masked with probability t, resulting in the distribution q(xtk∣x0k), which either retains the original token or replaces it with a [MASK] token. Unlike traditional Gaussian diffusion models, MDMs directly predict the clean token at masked positions. A neural network pθ(x0∣xt) estimates the original token distribution, and the model is trained by minimizing the Negative Evidence Lower Bound, which simplifies to a weighted cross-entropy loss over the masked tokens.

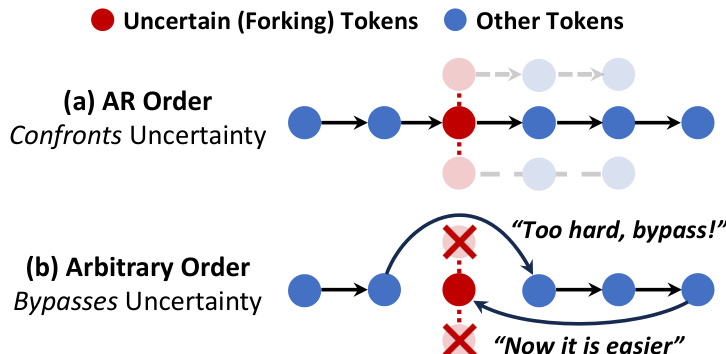

As shown in the figure below, the model's generation process can be constrained to follow an autoregressive (AR) order, where tokens are generated sequentially from left to right, or it can follow an arbitrary order, where tokens are generated in a non-sequential manner. The AR order approach confronts uncertainty by generating tokens in a structured, sequential fashion, which is beneficial for reasoning tasks. In contrast, the arbitrary order approach bypasses uncertainty by allowing for non-sequential generation, which can lead to suboptimal outcomes as indicated by the "Too hard, bypass!" and "Now it is easier" annotations. This distinction highlights the importance of the generation order in the model's performance.

To bridge the gap between the sequence-level denoising architecture of diffusion models and the autoregressive policy framework, the authors propose a method called JustGRPO. This method explicitly forgoes arbitrary-order generation during the reinforcement learning stage, transforming the diffusion language model into a well-defined autoregressive policy πθAR. The autoregressive policy is defined by constructing an input state x~t where the past tokens are observed and the future tokens are masked. The probability of the next token ot given the history o<t is defined as the softmax of the model logits at the position corresponding to ot. This formulation enables the direct application of standard Group Relative Policy Optimization (GRPO) to diffusion language models. The GRPO objective maximizes a clipped surrogate function with a KL regularization term, where the advantage is computed by standardizing the reward against the group statistics. This approach allows the model to achieve the reasoning depth of autoregressive models while preserving the inference speed of diffusion models.

Experiment

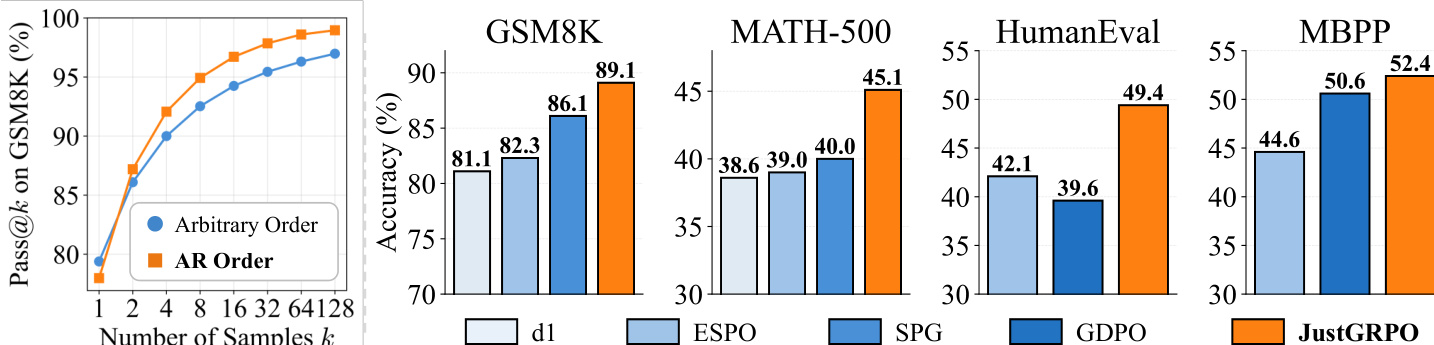

- Evaluated reasoning potential via Pass@k on dLLMs (LLaDA-Instruct, Dream-Instruct, LLaDA 1.5) across GSM8K, MATH500, HumanEval, MBPP: AR decoding outperforms arbitrary order in scaling with k, revealing broader solution space coverage (e.g., AR solves 21.3% more HumanEval problems at k=1024).

- Identified “entropy degradation” in arbitrary order: bypassing high-entropy logical tokens (e.g., “Therefore”, “Since”) collapses reasoning paths into low-entropy, pattern-matching trajectories, reducing exploration.

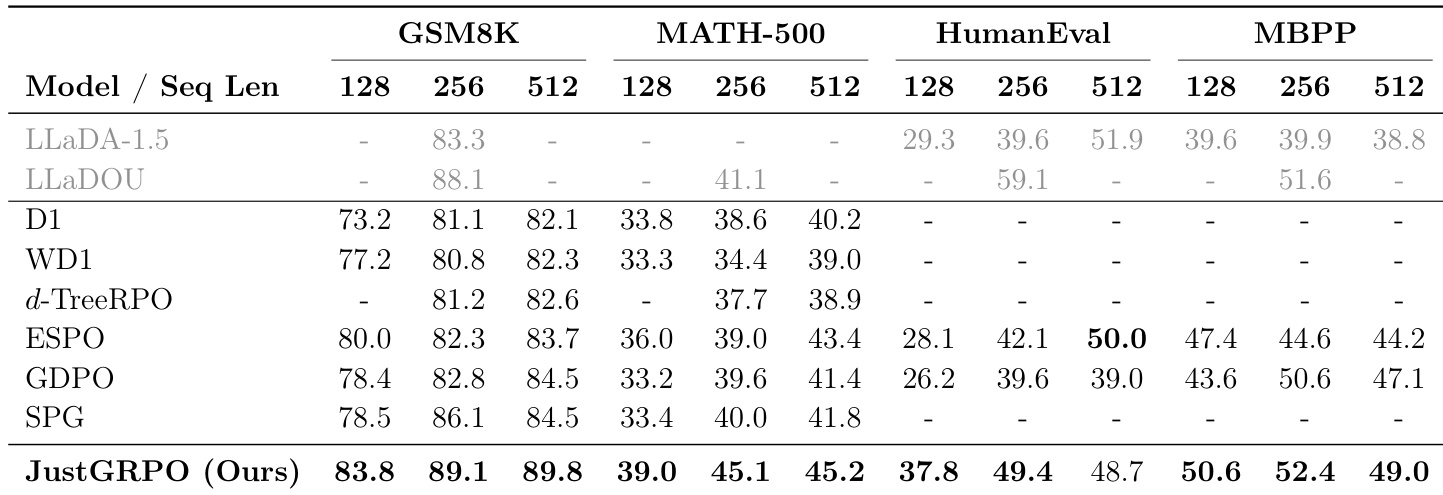

- Introduced JustGRPO: enforcing AR order during RL training on LLaDA-Instruct yields state-of-the-art results—89.1% on GSM8K (↑3.0% over SPG), 6.1% gain on MATH-500 over ESPO—with consistent gains across sequence lengths (128, 256, 512).

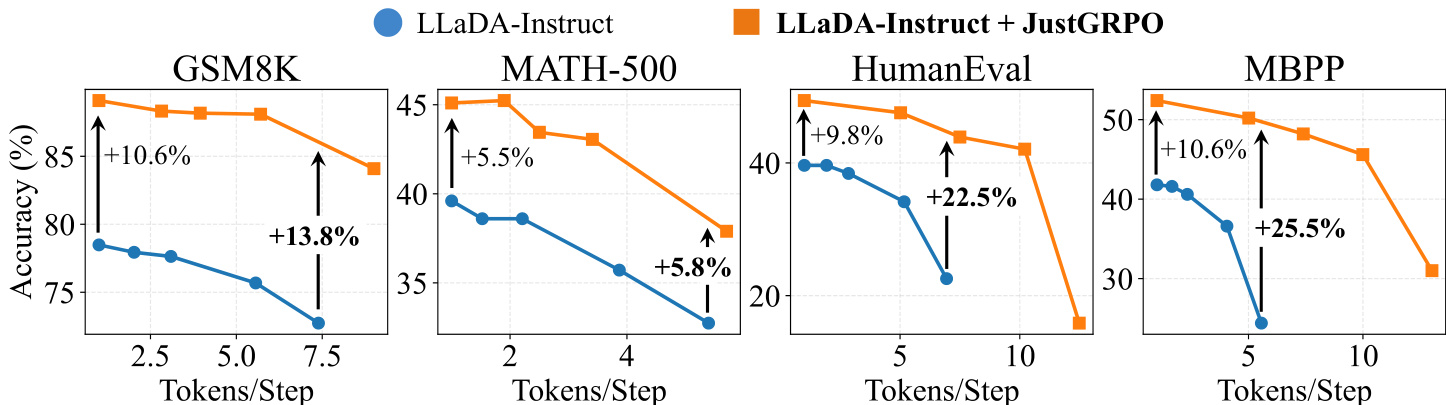

- JustGRPO preserves parallel decoding: under EB sampler, accuracy improves with parallelism (e.g., +25.5% on MBPP at ~5 tokens/step vs. +10.6% at 1 token/step), indicating robust reasoning manifold.

- Ablations confirm findings: smaller block sizes (more AR-like) improve Pass@k; higher temperatures help arbitrary order but can’t match AR; advanced samplers correlate highly with AR (0.970) but still underperform.

- Training efficiency: JustGRPO surpasses approximation-based ESPO in accuracy-wall-clock time trade-off; heuristic gradient restriction to top-25% entropy tokens accelerates convergence without performance loss.

The authors use JustGRPO to train diffusion language models with an autoregressive constraint during reinforcement learning, achieving state-of-the-art performance across multiple reasoning and coding benchmarks. Results show that this approach consistently outperforms methods designed for arbitrary-order decoding, with significant accuracy gains on GSM8K, MATH-500, HumanEval, and MBPP, while also preserving the model's parallel decoding capabilities at inference.

The authors use Pass@k to measure reasoning potential, showing that while arbitrary order performs competitively at k=1, AR order demonstrates significantly stronger scaling behavior as the number of samples increases. Results show that JustGRPO achieves state-of-the-art performance across all benchmarks, outperforming prior methods on GSM8K, MATH-500, HumanEval, and MBPP, with consistent gains across different generation lengths.

The authors use a system-level comparison to evaluate the performance of JustGRPO against existing reinforcement learning methods on reasoning and coding benchmarks. Results show that JustGRPO achieves state-of-the-art performance across all tasks and sequence lengths, outperforming previous methods such as SPG and ESPO, particularly on GSM8K and MATH-500, indicating that enforcing autoregressive order during training enhances reasoning capabilities.

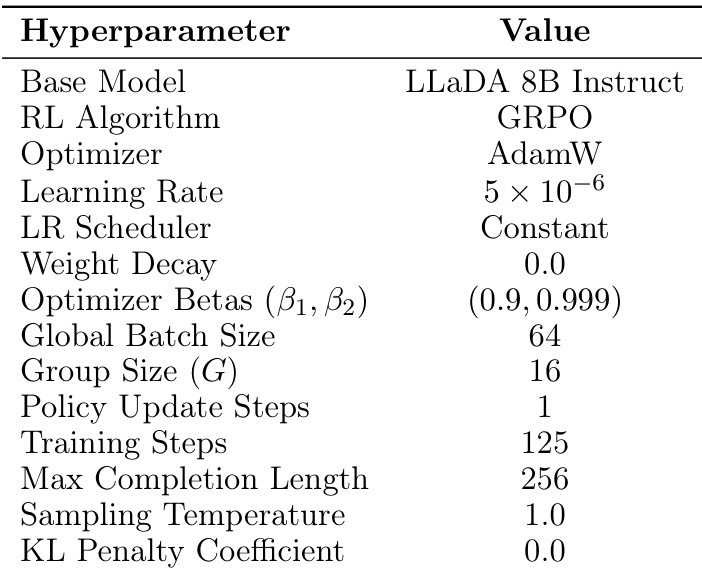

The authors use the GRPO algorithm with a base model of LLaDA 8B Instruct, training it for 125 steps with a constant learning rate of 5 × 10⁻⁶ and a group size of 16. The model achieves strong performance with a sampling temperature of 1.0 and no KL penalty, indicating that exact likelihood computation during training is effective despite higher computational cost.