CaricatureGS: Exaggerating 3D Gaussian Splatting Faces with Gaussian Curvature


Eldad Matmon, Amit Bracha, Noam Rotstein, Ron Kimmel

Abstract

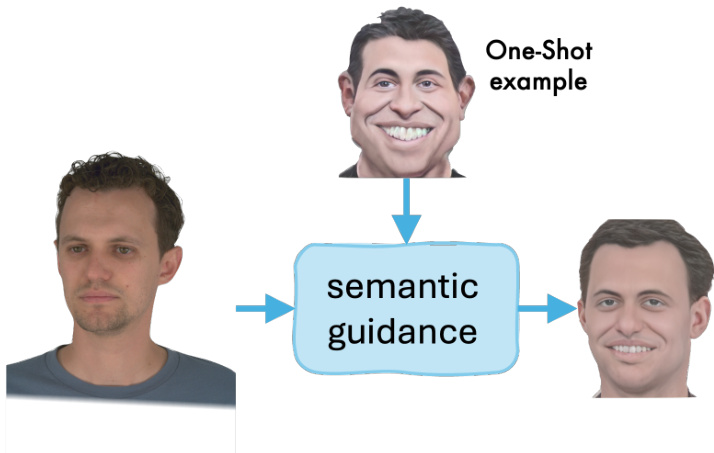

We introduce a photorealistic and controllable 3D caricaturization framework for faces. We start from an intrinsic surface exaggeration technique based on Gaussian curvature which, when combined with texture, tends to produce over-smoothed renders. To address this, we turn to 3D Gaussian Splatting (3DGS), a method recently shown to produce photorealistic free-viewpoint avatars. Given a multiview sequence, we extract a FLAME mesh, solve a curvature-weighted Poisson equation, and obtain its exaggerated form. However, directly deforming the Gaussians yields poor results, which makes it necessary to synthesize pseudo-ground-truth caricature images by warping each frame to its exaggerated 2D representation using local affine transformations. We then propose a training scheme that alternates between real and synthesized supervision, allowing a single collection of Gaussians to represent both natural and exaggerated avatars. This scheme improves fidelity, supports local edits, and allows continuous control over caricature intensity. To achieve real-time deformations, we introduce an efficient interpolation between the original and exaggerated surfaces. We also analyze this interpolation and show that its deviation from the analytical solutions is bounded. In quantitative and qualitative evaluations, our results outperform prior approaches, delivering photorealistic, geometry-controlled caricature avatars.

One-sentence Summary

The authors from Technion – Israel Institute of Technology propose a photorealistic 3D caricaturization framework using 3D Gaussian Splatting with a curvature-weighted Poisson deformation and alternating real-synthetic supervision, enabling controllable, high-fidelity caricature avatars with real-time interpolation and local editability, outperforming prior methods in both geometry control and visual realism.

Key Contributions

- The paper addresses the challenge of generating photorealistic 3D caricature avatars by combining intrinsic Gaussian curvature-based surface exaggeration with 3D Gaussian Splatting (3DGS), overcoming the over-smoothing issue that arises when applying traditional geometric exaggeration to textured 3D meshes.

- It introduces a novel training scheme that alternates between real multiview images and pseudo-ground-truth caricature images, synthesized via per-triangle local affine transformations, enabling a single Gaussian set to represent both natural and exaggerated facial appearances with high fidelity.

- The method supports real-time, continuous control over caricature intensity through efficient interpolation between original and exaggerated surfaces, and demonstrates superior performance in both quantitative metrics and qualitative evaluations compared to prior approaches.

Introduction

The authors leverage 3D Gaussian Splatting (3DGS) for creating photorealistic caricature avatars by combining curvature-driven geometric deformation with a mesh-rigged 3DGS representation. This approach addresses a key limitation in prior work—where most methods either focused on photorealistic rendering without exaggeration or applied caricature effects only to appearance, leaving geometry unchanged. By using per-triangle Local Affine Transforms (LAT) to warp a neutral FLAME mesh into a caricatured version, they generate pseudo-ground-truth image pairs that guide joint optimization of both neutral and exaggerated views. The main contribution is a unified framework where a single set of 3D Gaussians learns to render both natural and exaggerated appearances while preserving identity and expression, enabling controllable, geometry-aware caricatures that remain photorealistic under large deformations.

Dataset

- The dataset is NeRSemble [20], a multi-view facial performance dataset captured using 16 synchronized high-resolution cameras arranged spatially around the subject.

- It includes 10 scripted sequences: 4 emotion-driven (EMO) and 6 expression-driven (EXP), plus one additional free self-reenactment sequence.

- The authors adopt the same train/validation/test split as in [21], ensuring consistency for fair comparison, with a training schedule of 120,000 iterations.

- Data is processed to support multi-view rendering and facial animation modeling, with no explicit cropping mentioned, but the original high-resolution camera captures are used as input.

- Metadata for each sequence is constructed based on the script type (EMO or EXP) and performance context, enabling controlled training and evaluation.

- The dataset is used in a mixture of training ratios aligned with the original sequence types, with the full set of sequences contributing to model training and validation.

Method

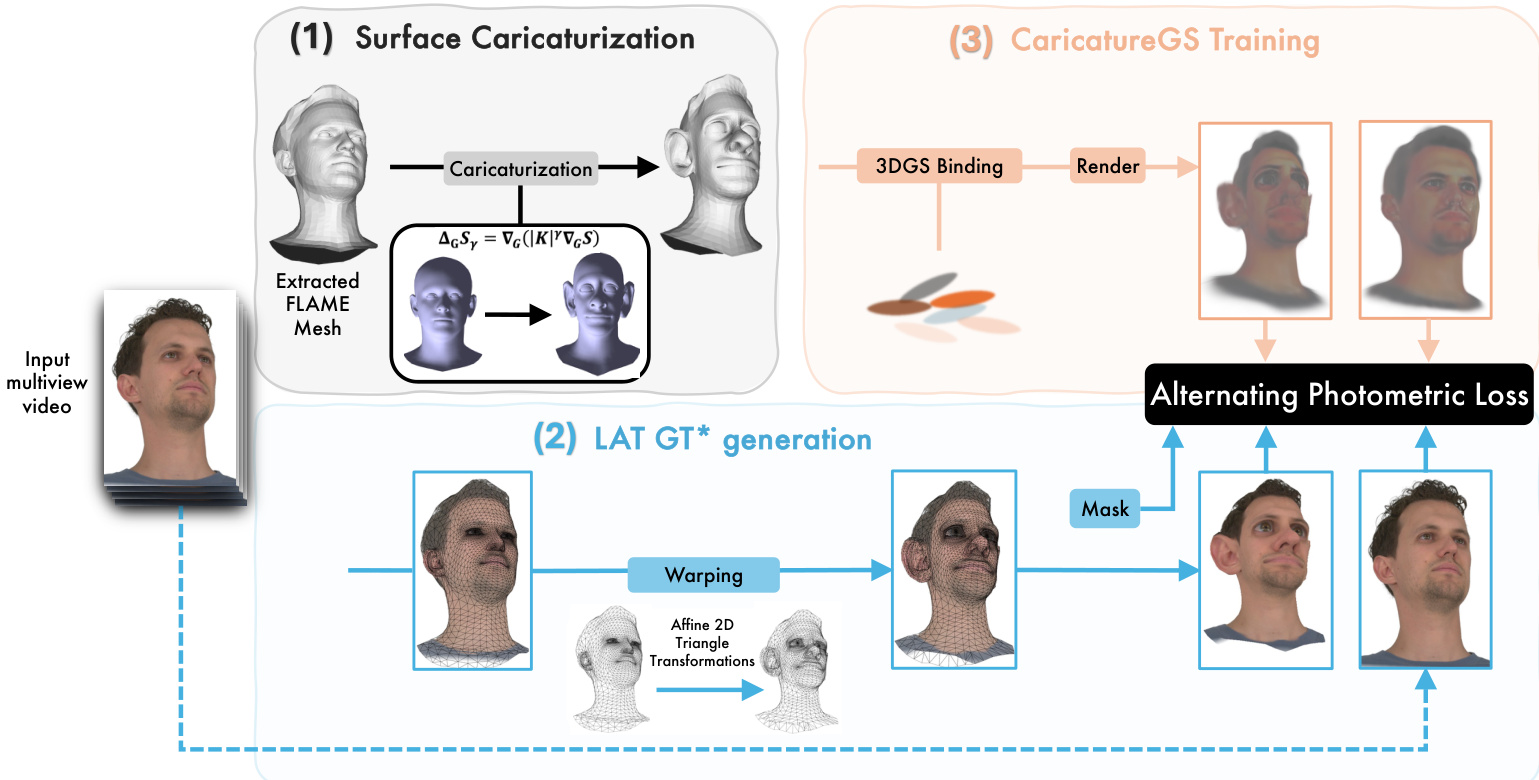

The proposed framework for photorealistic and controllable 3D caricaturization is a multi-stage pipeline that integrates geometric deformation, pseudo-ground-truth generation, and a specialized training scheme for 3D Gaussian Splatting (3DGS). The overall architecture, illustrated in the figure above, begins with an input multiview video, from which a temporally consistent FLAME mesh is extracted. This mesh serves as the foundation for the subsequent steps.

The first stage, surface caricaturization, applies a curvature-driven deformation to the extracted FLAME mesh. This process is formulated as a weighted Poisson equation on the surface, where the weights are defined by the Gaussian curvature $K(p)$ raised to a power $\gamma$, i.e., $w(\gamma) = |K|^{\gamma}$. This formulation allows for the exaggeration of facial features based on their intrinsic curvature, with higher curvature regions being amplified more. The solution to this equation, $S_{\gamma}$, is obtained by solving a discrete least-squares problem using the Laplace-Beltrami operator. To enable localized control, the method also supports constrained deformation by imposing boundary conditions on specific vertices, allowing for targeted exaggerations.
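A minimal sketch of how such a curvature-weighted least-squares problem could be discretized is given here, assuming a closed triangle mesh, angle-deficit Gaussian curvature, and per-face weights obtained by averaging $|K|$ over each triangle's corners. The function names (`face_gradients`, `gaussian_curvature`, `exaggerate`), the dense NumPy matrices, and the toy tetrahedron are illustrative assumptions and are not taken from the paper; the authors' discretization and their handling of boundary constraints may differ.

```python
import numpy as np

def face_gradients(V, F):
    """Dense operator G (3m x n): per-vertex scalars -> per-face gradient vectors."""
    p0, p1, p2 = V[F[:, 0]], V[F[:, 1]], V[F[:, 2]]
    normal = np.cross(p1 - p0, p2 - p0)
    double_area = np.linalg.norm(normal, axis=1)
    unit_normal = normal / double_area[:, None]
    opposite_edges = (p2 - p1, p0 - p2, p1 - p0)  # edge facing corner k
    G = np.zeros((3 * len(F), len(V)))
    for k, edge in enumerate(opposite_edges):
        # Gradient of the hat function at corner k: (n x e_k) / (2 * area).
        grad = np.cross(unit_normal, edge) / double_area[:, None]
        for f in range(len(F)):
            G[3 * f:3 * f + 3, F[f, k]] += grad[f]
    return G, 0.5 * double_area

def gaussian_curvature(V, F, face_areas):
    """Angle-deficit curvature per vertex (assumes a closed mesh, no boundary)."""
    angle_sum = np.zeros(len(V))
    vertex_area = np.zeros(len(V))
    for k in range(3):
        a = V[F[:, (k + 1) % 3]] - V[F[:, k]]
        b = V[F[:, (k + 2) % 3]] - V[F[:, k]]
        cos = np.sum(a * b, axis=1) / (
            np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1))
        np.add.at(angle_sum, F[:, k], np.arccos(np.clip(cos, -1.0, 1.0)))
        np.add.at(vertex_area, F[:, k], face_areas / 3.0)
    return (2.0 * np.pi - angle_sum) / vertex_area

def exaggerate(V, F, gamma):
    """Least-squares fit of new vertices whose gradients match |K|^gamma * grad(V)."""
    G, areas = face_gradients(V, F)
    K = gaussian_curvature(V, F, areas)
    w_face = np.abs(K)[F].mean(axis=1) ** gamma   # assumed per-face weight
    A = np.repeat(areas, 3)                       # area weight per gradient row
    W = np.repeat(w_face, 3)
    L = G.T @ (A[:, None] * G)                    # stiffness (Laplacian) matrix
    rhs = G.T @ ((A * W)[:, None] * (G @ V))
    # L is singular (constant vectors), so take the minimum-norm solution
    # and restore the original centroid afterwards.
    X, *_ = np.linalg.lstsq(L, rhs, rcond=1e-10)
    return X - X.mean(axis=0) + V.mean(axis=0)

# Toy example: a regular tetrahedron (every vertex has the same curvature).
V = np.array([[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]], dtype=float)
F = np.array([[0, 1, 2], [0, 1, 3], [0, 2, 3], [1, 2, 3]])
S_gamma = exaggerate(V, F, gamma=0.5)
```

With `gamma = 0` every weight equals one and the sketch returns the input vertices unchanged, which is a quick sanity check. On a shape with uniform curvature, such as the tetrahedron, the weights reduce to a global scale, so visible exaggeration only appears on meshes whose curvature varies across the surface.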

The second stage generates pseudo-ground-truth caricature images (GT*) to supervise the 3DGS training. Since real caricature images are unavailable, the authors synthesize GT* by warping the original input frames. This is achieved through Local Affine Transformations (LAT), which exploit the per-triangle correspondence between the original and deformed meshes. For each triangle in the deformed mesh, a unique affine map is computed to warp the corresponding pixels from the original image. To handle occlusions and ensure robustness, a 2D triangle-level mask is generated to identify unreliable regions, and a spatial mask is applied to freeze the parameters of Gaussians corresponding to areas like hair, which are difficult to warp reliably.
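The per-triangle affine map at the core of this warping step can be sketched as follows, assuming the three vertices of a triangle have already been projected to 2D pixel coordinates in both the original and the deformed mesh. The helper names `triangle_affine` and `apply_affine` are hypothetical, and the occlusion and hair masks described above are not modeled here.

```python
import numpy as np

def triangle_affine(src_tri, dst_tri):
    """Return the 2x3 affine matrix M mapping the 3 source vertices to the 3 targets."""
    src = np.asarray(src_tri, dtype=float)       # shape (3, 2)
    dst = np.asarray(dst_tri, dtype=float)       # shape (3, 2)
    src_h = np.hstack([src, np.ones((3, 1))])    # homogeneous coordinates, (3, 3)
    # Solve src_h @ X = dst; a non-degenerate triangle gives a unique X.
    return np.linalg.solve(src_h, dst).T

def apply_affine(M, points):
    """Apply a 2x3 affine matrix to an array of 2D points with shape (k, 2)."""
    pts = np.asarray(points, dtype=float)
    return pts @ M[:, :2].T + M[:, 2]

# Toy example: one projected triangle before and after exaggeration.
original_tri = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
deformed_tri = [[1.0, 1.0], [3.0, 1.0], [1.0, 2.0]]
M = triangle_affine(original_tri, deformed_tri)
warped_vertices = apply_affine(M, original_tri)
```

In an image-warping setting the inverse direction is usually the convenient one: for each pixel inside a deformed triangle, the map from deformed to original coordinates tells you where to sample the source frame. That map is obtained by swapping the two arguments of `triangle_affine`.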

The third stage, CaricatureGS Training, involves optimizing a single set of 3D Gaussian primitives. These Gaussians are rigged to the original FLAME mesh, and their attributes (position, scale, rotation, opacity, and color) are updated based on a photometric loss. The key innovation is an alternating training scheme that stochastically switches between real input frames and the synthesized GT* images. This joint optimization allows the Gaussian set to learn both natural and caricatured appearances simultaneously. The use of masks during GT* steps prevents the propagation of artifacts from unreliable warping, while the real frames provide essential supervision to fill in occluded regions and preserve fine details like hair. This shared representation enables the model to generalize across a continuous range of caricature intensities.
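The alternating supervision can be illustrated with a small sketch of a single training step. It assumes a plain L1 photometric term, a fixed switching probability `p_gt_star`, and a hypothetical `render_fn(deformed=...)` callable standing in for the mesh-rigged 3DGS renderer. None of these specifics are stated in the source, and the actual loss and sampling schedule may differ.

```python
import numpy as np

def masked_l1(rendered, target, mask=None):
    """Mean absolute error over H x W x C images, optionally restricted by an H x W mask."""
    diff = np.abs(rendered - target)
    if mask is None:
        return float(diff.mean())
    weights = np.broadcast_to(mask[..., None].astype(float), diff.shape)
    return float((diff * weights).sum() / max(weights.sum(), 1.0))

def training_step(rng, render_fn, real_frame, gt_star_frame, gt_star_mask,
                  p_gt_star=0.5):
    """Pick the supervision source at random and return (mode, loss)."""
    if rng.random() < p_gt_star:
        # Caricature step: render with the deformed mesh and compare against the
        # synthesized GT* frame, ignoring pixels flagged as unreliable.
        rendered = render_fn(deformed=True)
        return "gt_star", masked_l1(rendered, gt_star_frame, gt_star_mask)
    # Real step: render with the original mesh and compare against the input frame.
    rendered = render_fn(deformed=False)
    return "real", masked_l1(rendered, real_frame)

# Toy usage with a stand-in renderer that always returns a black image.
rng = np.random.default_rng(0)
black = np.zeros((2, 2, 3))
mode, loss = training_step(
    rng, lambda deformed: black, real_frame=black, gt_star_frame=black,
    gt_star_mask=np.ones((2, 2)))
```

In a real pipeline the returned loss would be backpropagated into the shared Gaussian attributes, so both branches update the same set of primitives.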

Experiment

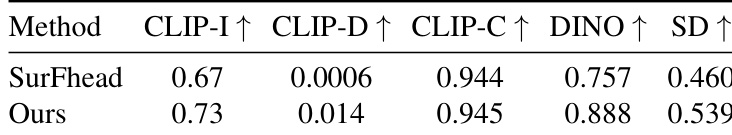

- Evaluated on the NeRSemble dataset along two main axes, photorealistic rendering and identity preservation, comparing against the SurFHead baseline with unconstrained exaggeration ($\gamma_f = 0.25$).

- Achieved superior performance across all metrics: CLIP-I, CLIP-D, CLIP-C, DINO, and SD, demonstrating better caricature intent alignment, identity preservation, and multi-view consistency.

- Outperformed diffusion-based mesh-free editing (GaussianEditor) in geometry stability, specular consistency, and multi-view coherence.

- Ablation confirmed alternating supervision (original and GT* frames) is essential—training on either domain alone leads to overfitting and artifacts, while alternating enables smooth interpolation and high fidelity across caricature intensities.

- On the NeRSemble dataset, achieved high-quality caricaturization with 256 test frames, 4 emotions, 6 expressions, and 10 subjects, using 120K training iterations on a single RTX 3090.

Results show that the proposed method outperforms SurFHead on every evaluated metric, with higher CLIP-I, CLIP-D, CLIP-C, DINO, and SD scores. This indicates closer alignment with the caricature intent, better identity preservation, and greater consistency across views than the baseline.