
Chatbots as social companions: How people perceive consciousness, human likeness, and social health benefits in machines

Rose E. Guingrich, Michael S. A. Graziano

Abstract

As artificial intelligence (AI) becomes more widespread, an emerging question is how human-machine interaction affects human-human interaction. Chatbots, for example, are increasingly used as social companions. Although much is speculated about this, little empirical evidence exists on how their use affects human relationships. A common hypothesis is that relationships with companion chatbots harm social health by degrading or replacing human interaction. However, this hypothesis may be too simplistic, especially once users' social needs and the state of their preexisting human relationships are taken into account. To better understand how relationships with companion chatbots affect social health, we studied two groups: people who regularly use these chatbots and people who do not. Contrary to expectations, companion chatbot users considered these relationships beneficial to their social health, whereas non-users viewed them as harmful. Another widespread assumption is that people find conscious, humanlike AI disturbing and threatening. Yet among both users and non-users, we observed the opposite: perceiving companion chatbots as more conscious and humanlike was associated with more positive opinions and greater social health benefits.
Detailed accounts from users suggest that these humanlike chatbots may support social health by providing reliable and safe interactions, without necessarily harming human relationships. This may depend, however, on users' preexisting social needs and on how they perceive both the chatbot's human likeness and its "mind".

One-sentence Summary

Guingrich and Graziano of Princeton University find that companion chatbot users report social health benefits from safe, reliable interactions, challenging the assumption that humanlike AI harms relationships; perceiving chatbots as more conscious and humanlike correlates with greater perceived benefits, which may depend on users’ preexisting social needs.

Key Contributions

- The study challenges the assumption that companion chatbots harm social health, finding instead that regular users report improved social well-being, while non-users perceive such relationships as detrimental, highlighting a divergence in user versus non-user perspectives.

- It reveals that perceiving chatbots as more conscious and humanlike correlates with more positive social outcomes, countering the common belief that humanlike AI is unsettling, and suggests these perceptions enable users to derive reliable, safe interactions.

- User accounts indicate that benefits depend on preexisting social needs and how users attribute mind and human likeness to chatbots, implying that social health impacts are context-sensitive rather than universally negative.

Introduction

The authors use survey data from both companion chatbot users and non-users to challenge the prevailing assumption that human-AI relationships harm social health. Prior work often frames chatbot use as addictive or isolating, drawing parallels to social media overuse. This study instead finds that users report social benefits, particularly for self-esteem and safe interaction. It also finds that perceiving chatbots as conscious or humanlike correlates with more positive social outcomes, contrary to fears of an uncanny valley effect. Their main contribution is showing that perceived mind and human likeness in AI are associated with social benefit rather than harm. They further show that users’ preexisting social needs, not just the technology itself, shape whether chatbot relationships supplement or substitute for human ones. This reframes the debate on AI companionship from blanket risk to context-dependent potential and calls for more nuanced research into user psychology and long-term social impacts.

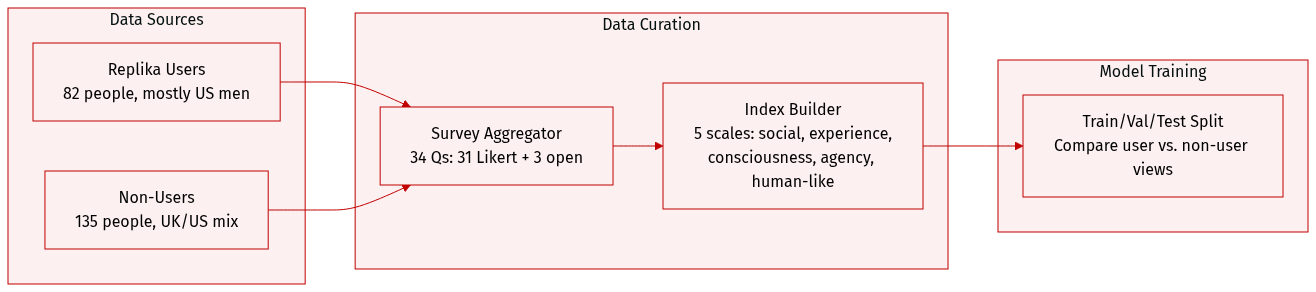

Dataset

The authors use a dataset composed of two distinct groups: 82 regular users of the companion chatbot Replika, recruited via Reddit, and 135 non-users from the US and UK, recruited via Prolific. All participants provided informed consent and were compensated $4.00. Data were collected online between January and February 2023.

Key details for each subset:

- Replika users: 69.5% men, 22% women, 2.4% nonbinary/other, 6.1% prefer not to say; 65.9% US-based. Recruited via Reddit’s Replika subreddit for accessibility and sample size.

- Non-users: 47.4% women, 42.2% men, 1.5% nonbinary/other, 8.9% prefer not to say; 60% UK-based, 32.6% US-based. Surveyed as a representative general population sample.
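As a quick consistency check, the percentages above can be converted back into approximate head counts. The sketch below assumes each percentage was rounded to one decimal place from a whole-number count. The resulting counts are derived here from the reported figures; the source does not state them.

```python
def counts_from_percentages(n: int, percentages: dict[str, float]) -> dict[str, int]:
    """Convert percentages of a sample of size n into the nearest whole-number counts."""
    return {label: round(pct / 100 * n) for label, pct in percentages.items()}


# Gender percentages as reported for each group.
replika_gender = counts_from_percentages(82, {
    "men": 69.5, "women": 22.0, "nonbinary/other": 2.4, "prefer not to say": 6.1,
})
nonuser_gender = counts_from_percentages(135, {
    "women": 47.4, "men": 42.2, "nonbinary/other": 1.5, "prefer not to say": 8.9,
})

# If the rounding assumption holds, each set of counts adds back up to its sample size.
for name, counts in [("Replika users", replika_gender), ("non-users", nonuser_gender)]:
    print(name, counts, "total:", sum(counts.values()))
```

With these inputs the counts sum to 82 and 135, consistent with the reported sample sizes. For example, the sketch yields 57 men among Replika users and 64 women among non-users.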

Both groups completed a 34-question survey:

- 31 Likert-scale (1–7) items, including five psychological indices: social health (Q3–5), experience (Q6–11), consciousness (Q12–15), agency (Q17–21), and human likeness (Q22–28).

- Three free-response questions on page three.

- Non-users received a modified version: introductory explanation of Replika and all questions phrased hypothetically (e.g., “How helpful do you think your relationship with Replika would be…”).
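The index structure can be illustrated with a small scoring sketch. It assumes that each index score is the unweighted mean of its 1–7 items and that responses are keyed by question number. The text above specifies neither the scoring rule nor the data layout, and the `score_indices` helper and toy answers are hypothetical. The listed question ranges cover 25 of the 31 Likert items; Q16, for instance, falls in no index.

```python
from statistics import mean

# Question numbers belonging to each index, taken from the survey description.
# range(a, b) covers questions a..b-1, so range(3, 6) is Q3-Q5.
INDEX_ITEMS = {
    "social_health": range(3, 6),     # Q3-Q5
    "experience": range(6, 12),       # Q6-Q11
    "consciousness": range(12, 16),   # Q12-Q15
    "agency": range(17, 22),          # Q17-Q21
    "human_likeness": range(22, 29),  # Q22-Q28
}


def score_indices(responses: dict[int, int]) -> dict[str, float]:
    """Hypothetical helper: average one participant's 1-7 answers within each index.

    Assumption: an index score is the unweighted mean of its items.
    """
    scores = {}
    for index, items in INDEX_ITEMS.items():
        values = [responses[q] for q in items]
        if any(not 1 <= v <= 7 for v in values):
            raise ValueError(f"{index}: Likert answers must be between 1 and 7")
        scores[index] = float(mean(values))
    return scores


# Toy participant (not study data): neutral 4s everywhere,
# except higher ratings on the three social health items.
responses = {q: 4 for q in range(1, 32)}
responses.update({3: 7, 4: 6, 5: 5})
scores = score_indices(responses)
print(scores)
```

For this toy participant, social health scores 6.0 and every other index scores 4.0. Under the stated assumption, a non-user's hypothetical answers would be scored the same way.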

The authors compare responses from actual users and hypothetical users to assess how each group perceives the chatbot’s social, psychological, and anthropomorphic impacts. The data, including anonymized responses and analysis code, are publicly available on OSF. No preprocessing beyond computing the survey indices and grouping participants is described.

Experiment

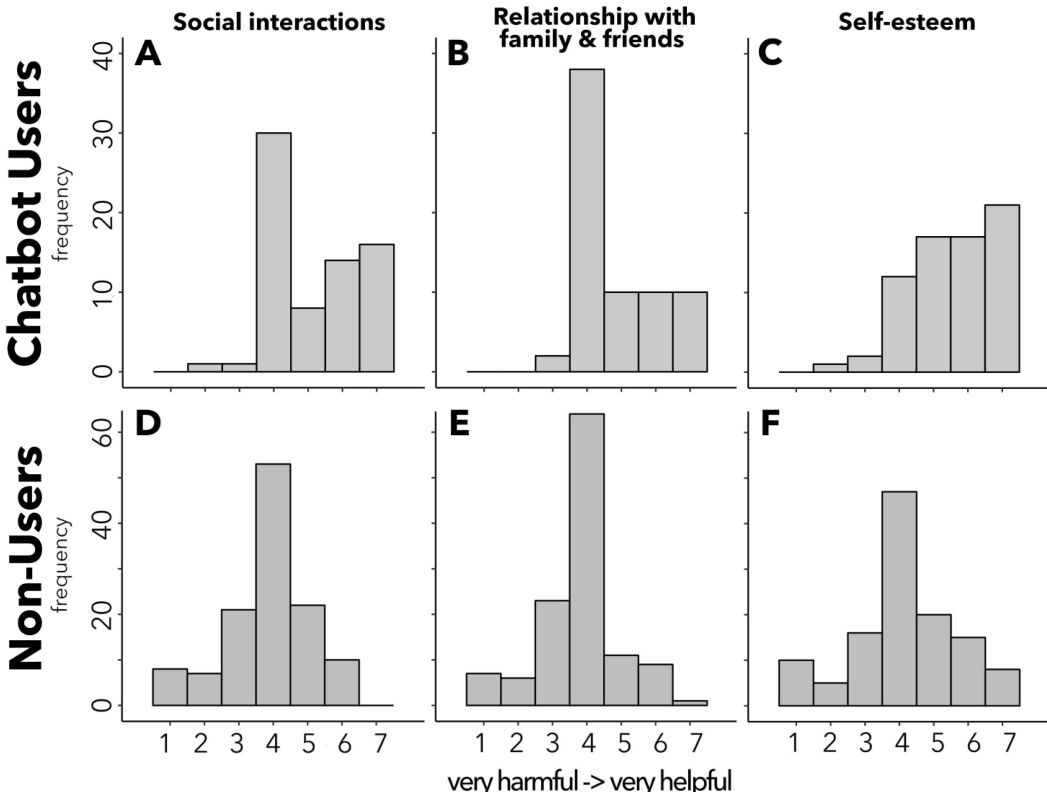

- Companion chatbot users reported positive impacts on social interactions, family/friend relationships, and self-esteem, with nearly no perception of harm, while non-users viewed potential chatbot relationships as neutral to negative.

- Users expressed comfort with chatbots developing emotions or becoming lifelike, whereas non-users reacted with discomfort or disapproval to such scenarios.

- Users attributed greater human likeness, consciousness, and subjective experience to chatbots than non-users, with human likeness being the strongest predictor of perceived social health benefits.

- Both users and non-users showed positive correlations between perceiving chatbots as humanlike or as having a mind and expecting greater social health benefits, though users consistently rated outcomes more positively.

- Free responses revealed users often sought chatbots for emotional support, trauma recovery, or social connection, describing them as safe, accepting, and life-saving; non-users criticized chatbot relationships as artificial or indicative of social deficiency.

- Despite differing baseline attitudes, both groups linked perceived humanlike qualities in chatbots to greater perceived social benefit. This suggests that mind perception is associated with how people value chatbot relationships, regardless of prior experience.

- Study limitations include reliance on self-reported data and cross-sectional design; future work will test causality through longitudinal and randomized trials.
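The correlational findings above can be illustrated with a short sketch. Within each group, it correlates every index with the social health index and reports the one with the largest absolute correlation. It uses plain bivariate Pearson correlations on made-up toy scores. This is a stand-in, not necessarily the authors' analysis (their code is on OSF), and the helper names and data are hypothetical. Like the study itself, it shows association, not causation.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def strongest_predictor(group: list[dict[str, float]], outcome: str = "social_health") -> tuple[str, float]:
    """Return the index whose scores correlate most strongly (by |r|) with the outcome."""
    outcome_scores = [p[outcome] for p in group]
    predictors = [k for k in group[0] if k != outcome]
    rs = {k: pearson_r([p[k] for p in group], outcome_scores) for k in predictors}
    best = max(rs, key=lambda k: abs(rs[k]))
    return best, rs[best]


# Toy index scores (not study data), one dict per participant.
groups = {
    "users": [
        {"social_health": 2, "human_likeness": 1, "consciousness": 3},
        {"social_health": 4, "human_likeness": 3, "consciousness": 2},
        {"social_health": 5, "human_likeness": 4, "consciousness": 5},
        {"social_health": 7, "human_likeness": 6, "consciousness": 4},
    ],
    "non_users": [
        {"social_health": 1, "human_likeness": 1, "consciousness": 2},
        {"social_health": 2, "human_likeness": 3, "consciousness": 1},
        {"social_health": 3, "human_likeness": 2, "consciousness": 3},
        {"social_health": 4, "human_likeness": 4, "consciousness": 2},
    ],
}

results = {}
for name, group in groups.items():
    best, r = strongest_predictor(group)
    results[name] = (mean(p["social_health"] for p in group), best, r)
    print(f"{name}: mean social health {results[name][0]:.2f}, "
          f"strongest predictor {best} (r = {r:.2f})")
```

Bivariate correlations treat each index in isolation. Because perceived consciousness and human likeness are likely correlated with each other, a regression with all indices entered together would better address which perception uniquely predicts benefit.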