HyperNetworks

HyperNetworks are a neural network structure that parameterizes models differently from traditional neural networks. In a HyperNetwork setup, one neural network is used to generate the weights or other parameters of another neural network. The generating network is called the hypernetwork, and the network whose parameters it generates is called the target network.
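
To make the terminology concrete, here is a minimal Python sketch, using only the standard library, of a static hypernetwork. A single linear map (`HyperNetwork`, a hypothetical name) turns an embedding vector `z` into all the weights and biases of one target linear layer. The sizes, the initialisation and the tanh target layer are illustrative assumptions, not taken from any particular paper or library.

```python
import math
import random


def linear(weights, bias, x):
    """Compute weights @ x + bias, with the weight matrix stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class HyperNetwork:
    """Hypothetical tiny hypernetwork: maps an embedding z to every parameter
    (weight matrix and bias) of one target linear layer."""

    def __init__(self, embed_dim, target_in, target_out, seed=0):
        rng = random.Random(seed)
        self.target_in = target_in
        self.target_out = target_out
        n_params = target_out * target_in + target_out  # target weights + target biases
        # In this setup these are the parameters that would be trained;
        # the target layer stores no parameters of its own.
        self.H = [[rng.gauss(0.0, 0.1) for _ in range(embed_dim)] for _ in range(n_params)]
        self.h_bias = [0.0] * n_params

    def generate(self, z):
        """Return (weights, bias) for the target layer, generated from z."""
        flat = linear(self.H, self.h_bias, z)
        n_w = self.target_out * self.target_in
        weights = [flat[r * self.target_in:(r + 1) * self.target_in]
                   for r in range(self.target_out)]
        return weights, flat[n_w:]


def target_forward(weights, bias, x):
    """Target network: one linear layer plus tanh, using weights it did not learn itself."""
    return [math.tanh(v) for v in linear(weights, bias, x)]


hyper = HyperNetwork(embed_dim=4, target_in=3, target_out=2)
z = [1.0, 0.0, -1.0, 0.5]  # hypothetical embedding for this target layer
W, b = hyper.generate(z)
y = target_forward(W, b, [0.2, -0.4, 1.0])
print(len(W), len(W[0]), len(b), len(y))  # 2 3 2 2
```

The target layer's weights are computed rather than stored. Changing `z` therefore changes the target network, and training would only have to update `H` and `h_bias`.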

Typically, the hypernetwork receives some additional input and generates the target network's parameters from it. That input may be an embedding describing the layer or task, or even the input of the target network itself. A key advantage of this approach is that the target network's parameters can be generated dynamically. This makes the model more flexible in adapting to different tasks or environments.
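
The dynamic case can be sketched in the same dependency-free style. In the hypothetical `DynamicHyperLayer` below, the hypernetwork regenerates the target layer's weight matrix on every call from a conditioning vector `c`. By default `c` is the layer's own input `x`, which is why `cond_dim` must equal `in_dim` in that mode. A task embedding can be passed instead. All names and sizes, and the one-layer tanh hypernetwork, are assumptions made for illustration.

```python
import math
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class DynamicHyperLayer:
    """Hypothetical sketch of a dynamic hypernetwork: a target linear layer whose
    weight matrix is regenerated from a conditioning vector c on every call."""

    def __init__(self, cond_dim, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.in_dim, self.out_dim = in_dim, out_dim
        # Hypernetwork parameters: one tanh layer producing out_dim * in_dim weights.
        self.H = [[rng.gauss(0.0, 0.5) for _ in range(cond_dim)]
                  for _ in range(out_dim * in_dim)]

    def weights_for(self, c):
        """Generate the target layer's weight matrix from the conditioning vector c."""
        flat = [math.tanh(v) for v in matvec(self.H, c)]
        return [flat[r * self.in_dim:(r + 1) * self.in_dim] for r in range(self.out_dim)]

    def __call__(self, x, c=None):
        # With no explicit condition, the layer conditions on its own input x.
        c = x if c is None else c
        return matvec(self.weights_for(c), x)


layer = DynamicHyperLayer(cond_dim=2, in_dim=2, out_dim=2)
x = [0.5, -1.0]
task_a, task_b = [1.0, 0.0], [0.0, 1.0]  # hypothetical task embeddings
y_self = layer(x)         # weights generated from x itself
y_a = layer(x, c=task_a)  # same input, weights generated for task A
y_b = layer(x, c=task_b)  # same input, weights generated for task B
```

The last two calls use the same input but produce different outputs, because each call receives different generated weights.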

HyperNetworks have potential applications in fields such as meta-learning and neural architecture search. With a hypernetwork, the target model's parameters (or even its structure) can be adjusted and optimized automatically. This can improve the model's generalization ability and adaptability.

HyperNetworks in Stable Diffusion

In the Stable Diffusion community, "hypernetwork" refers to a fine-tuning technique developed by NovelAI, an early adopter of Stable Diffusion. Here a hypernetwork is a small neural network attached to the Stable Diffusion model to modify its style.

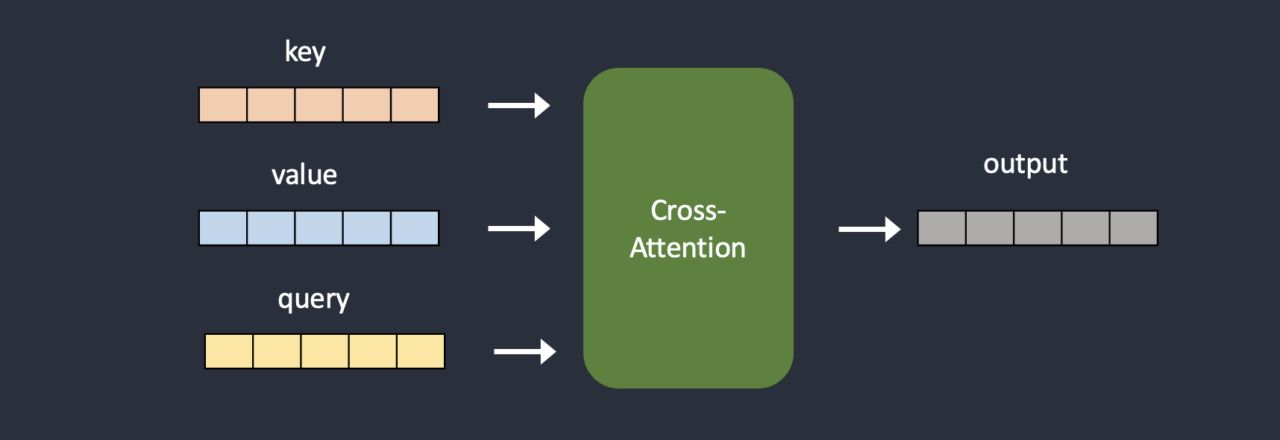

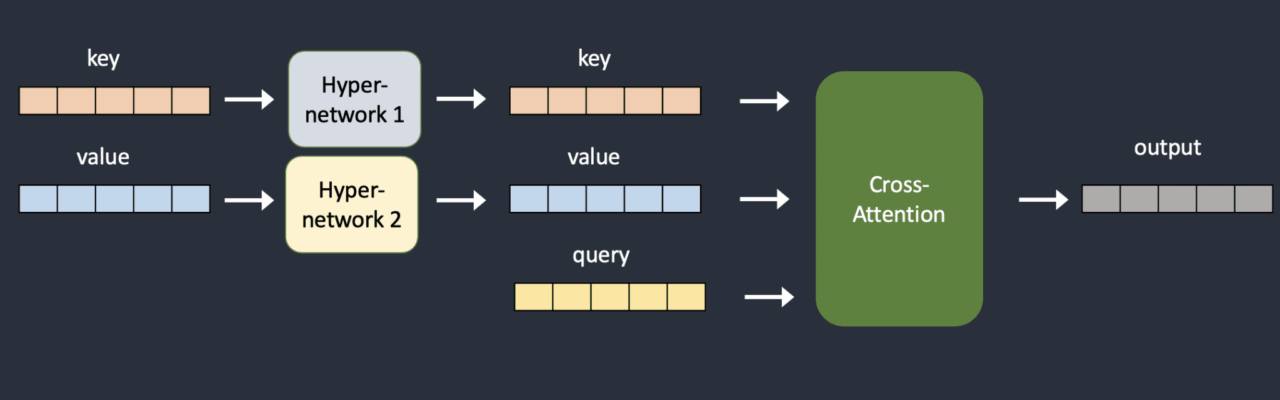

A hypernetwork is usually a simple neural network: a fully connected linear network with dropout and activation functions, like the ones covered in any introductory neural network course. It hijacks the cross-attention module by inserting two networks: one transforms the input used to compute the key vectors, and the other the input used to compute the value vectors. The images above compare the original model architecture with the hijacked one.
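
The hijacking can be illustrated with a small, dependency-free Python sketch. It is loosely modeled on the widely used AUTOMATIC1111 Stable Diffusion web UI. There, the text-conditioning context passes through one hypernetwork module before the key projection and another before the value projection. The query, computed from the image features, is left untouched. Several details are simplifying assumptions:

- the residual form `ctx + mlp(ctx)`
- the doubled hidden width
- ReLU and the dropout rate
- single-head attention with no output projection
- the tiny dimensions

Class and function names are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def softmax_rows(m):
    out = []
    for row in m:
        peak = max(row)  # subtract the max for numerical stability
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


class HypernetworkModule:
    """Hypothetical small MLP (Linear -> ReLU -> dropout -> Linear) added residually to its input."""

    def __init__(self, dim, rng, hidden_mult=2, dropout=0.1):
        self.w1 = rand_matrix(dim, dim * hidden_mult, rng)
        self.w2 = rand_matrix(dim * hidden_mult, dim, rng)
        self.dropout = dropout
        self.rng = rng

    def __call__(self, ctx, training=False):
        hidden = [[max(0.0, v) for v in row] for row in matmul(ctx, self.w1)]
        if training and self.dropout > 0:
            keep = 1.0 - self.dropout  # inverted dropout: rescale the units that are kept
            hidden = [[v / keep if self.rng.random() < keep else 0.0 for v in row]
                      for row in hidden]
        delta = matmul(hidden, self.w2)
        return [[c + d for c, d in zip(c_row, d_row)] for c_row, d_row in zip(ctx, delta)]


def cross_attention(x, ctx, wq, wk, wv, hyper_k=None, hyper_v=None):
    """Single-head cross-attention. Optional hypernetwork modules rewrite the text
    context separately for the key and value projections; queries come from x."""
    ctx_k = hyper_k(ctx) if hyper_k is not None else ctx
    ctx_v = hyper_v(ctx) if hyper_v is not None else ctx
    q, k, v = matmul(x, wq), matmul(ctx_k, wk), matmul(ctx_v, wv)
    scale = 1.0 / math.sqrt(len(q[0]))
    scores = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k] for qi in q]
    return matmul(softmax_rows(scores), v)


rng = random.Random(0)
dim_img, dim_ctx, dim_head = 4, 6, 4
x = rand_matrix(3, dim_img, rng, 1.0)     # 3 image-feature tokens
ctx = rand_matrix(5, dim_ctx, rng, 1.0)   # 5 text-embedding tokens
wq = rand_matrix(dim_img, dim_head, rng)  # frozen base projection weights
wk = rand_matrix(dim_ctx, dim_head, rng)
wv = rand_matrix(dim_ctx, dim_head, rng)
hyper_k = HypernetworkModule(dim_ctx, rng)
hyper_v = HypernetworkModule(dim_ctx, rng)
base_out = cross_attention(x, ctx, wq, wk, wv)
hooked_out = cross_attention(x, ctx, wq, wk, wv, hyper_k, hyper_v)
print(len(hooked_out), len(hooked_out[0]))  # 3 4
```

Each module adds its output to its input. A module whose last layer is all zeros therefore leaves the attention output exactly as the base model computes it, and training only has to learn the residual change.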

During training, the Stable Diffusion model is frozen and only the attached hypernetworks are updated. Because hypernetworks are small, training is fast and needs limited resources. It can be done on a regular computer.
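
The frozen-base training setup can be sketched with a toy problem. A fixed 2×2 matrix `W` stands in for the locked Stable Diffusion weights. A residual linear adapter `A`, a simplified linear stand-in for a hypernetwork module, is the only thing gradient descent updates. Real hypernetwork training uses Stable Diffusion's denoising loss and an autodiff framework. The squared-error loss, hand-derived gradient, data and hyperparameters below are assumptions chosen only to keep the example small and runnable.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def forward(W, A, x):
    """Frozen base layer W applied after a residual adapter A: y = W (x + A x)."""
    h = [xi + ai for xi, ai in zip(x, matvec(A, x))]
    return matvec(W, h)


def mse(W, A, data):
    """Mean (over samples) of the squared error summed over output dimensions."""
    total = 0.0
    for x, t in data:
        total += sum((yi - ti) ** 2 for yi, ti in zip(forward(W, A, x), t))
    return total / len(data)


def train_adapter(W, data, steps=500, lr=0.05):
    """Gradient descent on the adapter A only; W is read but never modified."""
    n = len(W[0])
    A = [[0.0] * n for _ in range(n)]  # zero init: training starts from the unmodified base model
    for _ in range(steps):
        grad = [[0.0] * n for _ in range(n)]
        for x, t in data:
            g = [2.0 * (yi - ti) for yi, ti in zip(forward(W, A, x), t)]  # dLoss/dy
            wt_g = [sum(W[i][j] * g[i] for i in range(len(g))) for j in range(n)]  # W^T g
            for j in range(n):
                for k in range(n):
                    grad[j][k] += wt_g[j] * x[k] / len(data)  # dLoss/dA = (W^T g) x^T
        A = [[A[j][k] - lr * grad[j][k] for k in range(n)] for j in range(n)]
    return A


rng = random.Random(0)
W = [[1.0, 0.5], [-0.3, 0.8]]        # stand-in for frozen, pretrained weights
A_style = [[0.2, -0.1], [0.0, 0.3]]  # hypothetical "style" the adapter should learn
data = []
for _ in range(20):
    x = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
    data.append((x, forward(W, A_style, x)))

W_before = [row[:] for row in W]
A = train_adapter(W, data)
print(mse(W, [[0.0, 0.0], [0.0, 0.0]], data), mse(W, A, data))
```

After training, `W` is unchanged and only the four numbers in `A` have moved. This mirrors why hypernetwork training is cheap: the number of trainable parameters is tiny compared with the frozen model.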
