Rotary Position Embedding (RoPE)

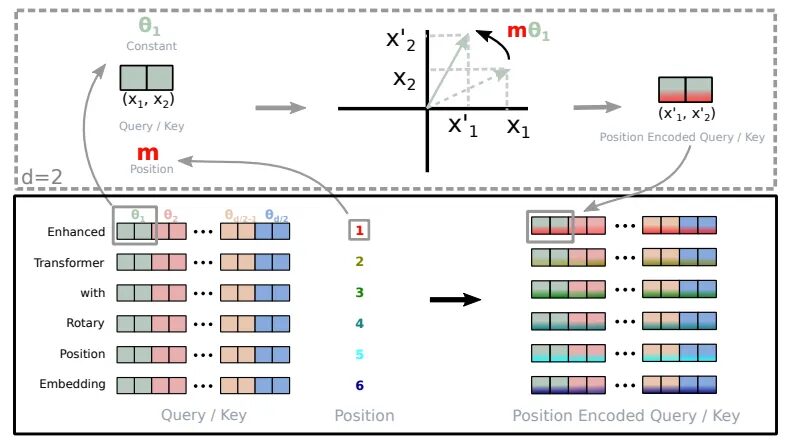

Rotary Position Embedding (RoPE) is a position encoding method proposed in the paper "RoFormer: Enhanced Transformer with Rotary Position Embedding". It integrates relative position dependency into self-attention and improves the performance of the transformer architecture. It is widely used in large models, including but not limited to Llama, Baichuan, ChatGLM, and Qwen. Because of computing resource limitations, most large models are currently trained with a relatively short context length. At inference time, if the input exceeds the pre-training length, model performance drops significantly. As a result, many works on RoPE-based length extrapolation have emerged, aiming to help large models perform better beyond the pre-training length. Understanding the underlying principles of RoPE is therefore crucial for length extrapolation of RoPE-based models.

The basic principle of RoPE

The basic principle of RoPE is to encode each position as a rotation vector whose direction is determined by the position. Specifically, for a sequence of length $n$, RoPE encodes each position $i$ as a rotation vector $pe_i$, defined as follows:

$$pe_i = (\sin(i\omega), \cos(i\omega))$$

Here, $\omega$ is a hyperparameter that controls the frequency of the rotation vector.
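
One way to read this definition, following the two-dimensional case in the RoFormer paper, is that the sine and cosine of the position angle are the entries of a 2D rotation matrix applied to each pair of features. The symbols $R_i$, $x$, and $x'$ below are notation introduced here for illustration and do not appear in the text above.

```latex
R_i =
\begin{pmatrix}
\cos(i\omega) & -\sin(i\omega) \\
\sin(i\omega) & \cos(i\omega)
\end{pmatrix},
\qquad
x' = R_i \, x
```

Here $x$ is a pair of features from a query or key vector at position $i$, and $x'$ is the same pair after the position has been encoded as a rotation by the angle $i\omega$.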

Advantages of RoPE

What makes RoPE unique is its ability to seamlessly integrate explicit relative position dependencies into the model’s self-attention mechanism. This dynamic approach has three advantages:

- Flexibility in sequence length: Traditional positional embeddings usually require a maximum sequence length to be defined in advance, which limits their adaptability. RoPE has no such requirement. It can generate positional embeddings on the fly for sequences of arbitrary length.

- Decaying dependencies between tokens: As tokens get farther apart in the sequence, RoPE naturally reduces the dependency between them. This gradual weakening is more consistent with the way humans understand language.

- Enhanced self-attention: RoPE equips the linear self-attention mechanism with relative position encoding, a feature not present in traditional absolute position encoding. This lets the attention score between two token embeddings reflect how far apart the tokens are.
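
The relative-position property in the list above can be checked numerically. The following is a minimal sketch for a single 2D feature pair, not code from RoFormer: the helper names `rotate` and `dot`, the value `omega = 0.1`, and the example vectors are all assumptions chosen for illustration.

```python
import math

def rotate(vec, position, omega):
    """Rotate a 2D vector by the angle position * omega."""
    x, y = vec
    angle = position * omega
    c, s = math.cos(angle), math.sin(angle)
    return (x * c - y * s, x * s + y * c)

def dot(a, b):
    """Plain dot product, standing in for an attention score."""
    return a[0] * b[0] + a[1] * b[1]

omega = 0.1      # assumed rotation frequency
q = (1.0, 2.0)   # hypothetical query features
k = (0.5, -1.5)  # hypothetical key features

# Two different absolute placements with the same offset of 4 positions.
score_a = dot(rotate(q, 3, omega), rotate(k, 7, omega))
score_b = dot(rotate(q, 1000, omega), rotate(k, 1004, omega))

# The scores agree because only the offset between positions matters.
print(math.isclose(score_a, score_b))
```

The second pair sits at positions 1000 and 1004 to show that the rotation for any position can be computed on demand, without a table sized to a maximum sequence length.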

Implementation of rotation encoding (taken from RoFormer)

Traditional absolute position encoding is like specifying that a word appears at position 3, 5, or 7, regardless of context. In contrast, RoPE allows the model to understand how words are positioned relative to each other. It recognizes that word A often appears after word B and before word C. This dynamic understanding enhances the model’s performance.

Implementation of RoPE

Let's break down the code for Rotary Position Embedding (RoPE) to understand how it is implemented.

The `precompute_theta_pos_frequencies` function computes the values that RoPE needs. It first defines a hyperparameter called `theta` that controls the amplitude of rotation. It then uses `theta` to compute a group of rotation angles; smaller angles produce smaller rotations. The function also creates a list of positions in the sequence and takes the outer product of the position list and the rotation angles, which gives the amount by which each position should be rotated. Finally, it converts these values into complex numbers in polar form with a fixed magnitude, which act like a code that represents position and rotation.

The `apply_rotary_embeddings` function takes input values and augments them with rotation information. It first splits the last dimension of the input into pairs that represent the real and imaginary parts of a complex number. These pairs are then combined into single complex numbers. Next, the function multiplies the precomputed complex numbers by the input, effectively applying the rotation. Finally, it converts the result back to real numbers and reshapes the data, ready for further processing.
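
The two functions described above can be sketched in plain Python as follows. This is a hedged illustration, not the original code: it uses nested lists and the standard `cmath` module instead of a tensor library, the argument names `head_dim` and `seq_len` are assumptions, and the default `theta=10000.0` with frequencies `theta ** (-2j / head_dim)` follows the RoFormer paper rather than anything stated in this article.

```python
import cmath

def precompute_theta_pos_frequencies(head_dim, seq_len, theta=10000.0):
    """Return a seq_len x (head_dim // 2) table of unit complex numbers."""
    if head_dim % 2 != 0:
        raise ValueError("head_dim must be even")
    # One rotation frequency per feature pair (assumed RoFormer schedule).
    freqs = [theta ** (-2.0 * j / head_dim) for j in range(head_dim // 2)]
    # Outer product of positions and frequencies gives the rotation angles;
    # cmath.rect(1.0, angle) is the polar form with magnitude 1.
    return [[cmath.rect(1.0, m * f) for f in freqs] for m in range(seq_len)]

def apply_rotary_embeddings(x, freqs_complex):
    """Rotate x, a list of seq_len vectors holding head_dim floats each."""
    out = []
    for vec, row in zip(x, freqs_complex):
        # Pair consecutive features as (real, imaginary) parts.
        pairs = [complex(vec[i], vec[i + 1]) for i in range(0, len(vec), 2)]
        # Complex multiplication applies the rotation to each pair.
        rotated = [p * r for p, r in zip(pairs, row)]
        # Convert back to real numbers and flatten to the original layout.
        flat = []
        for z in rotated:
            flat.extend((z.real, z.imag))
        out.append(flat)
    return out

freqs = precompute_theta_pos_frequencies(head_dim=4, seq_len=3)
x = [[1.0, 0.0, 0.0, 1.0]] * 3  # the same hypothetical vector at 3 positions
rotated = apply_rotary_embeddings(x, freqs)
for position, vec in enumerate(rotated):
    print(position, vec)  # position 0 is left unrotated
```

Because every precomputed complex number has magnitude 1, the multiplication changes only the angle of each feature pair, so the same input vector comes out different at each position while keeping its length.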
