Hunyuan-GameCraft-1.0: Interactive Game Video Generation Framework

Size: 589.47 MB

License: Other
1. Tutorial Introduction

Hunyuan-GameCraft-1.0 is a framework for high-dynamic interactive game video generation, released jointly by the Tencent Hunyuan team and Huazhong University of Science and Technology in August 2025. It unifies keyboard and mouse inputs into a shared camera representation space, which enables fine-grained motion control and supports complex interactive inputs. The framework introduces a hybrid history-conditioned training strategy that extends video sequences autoregressively while preserving game scene information, which helps maintain long-term temporal coherence. Model distillation significantly improves inference speed, making the framework suitable for real-time deployment in complex interactive environments. The model is trained on a large-scale dataset of AAA games and is reported to deliver superior visual fidelity, realism, and motion controllability, significantly outperforming existing models. The related paper is "Hunyuan-GameCraft: High-dynamic Interactive Game Video Generation with Hybrid History Condition".

This tutorial uses four RTX 4090 graphics cards as computing resources.

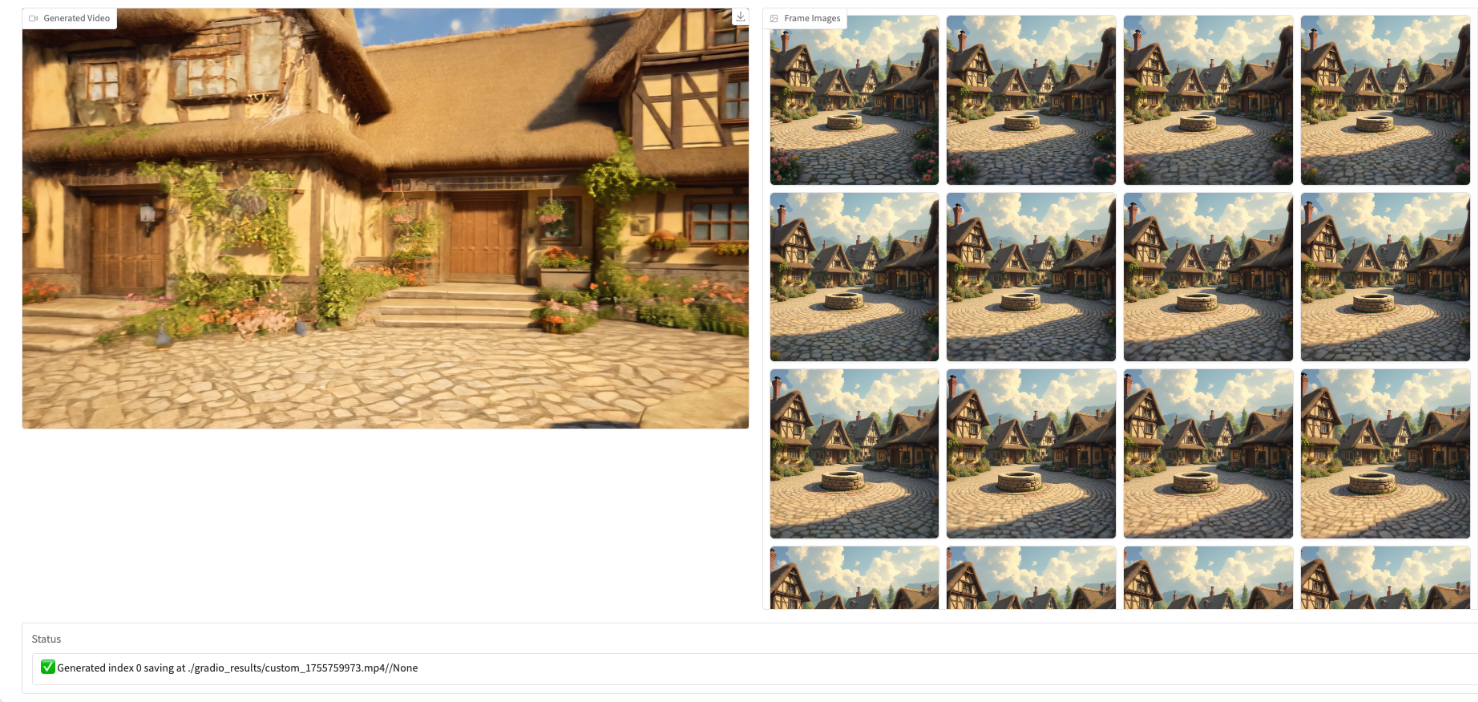

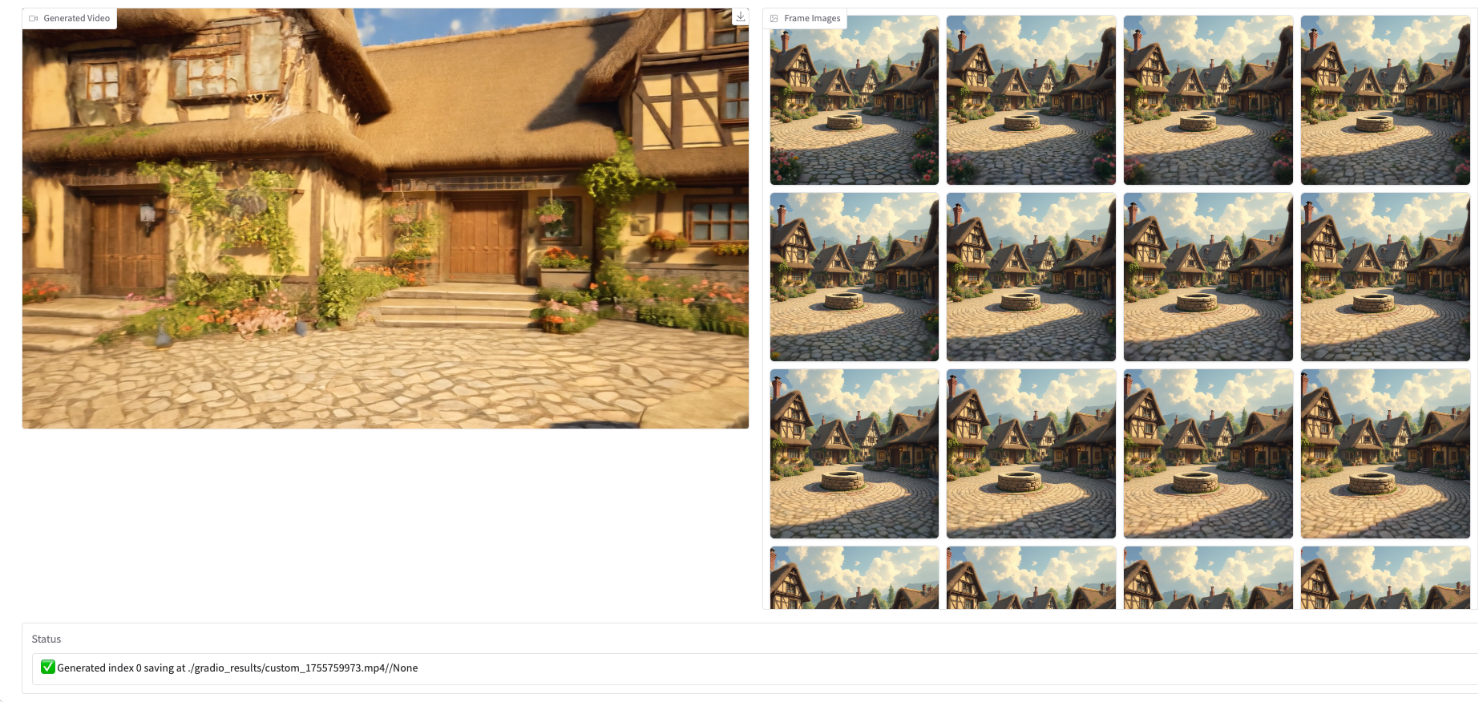

2. Effect Display

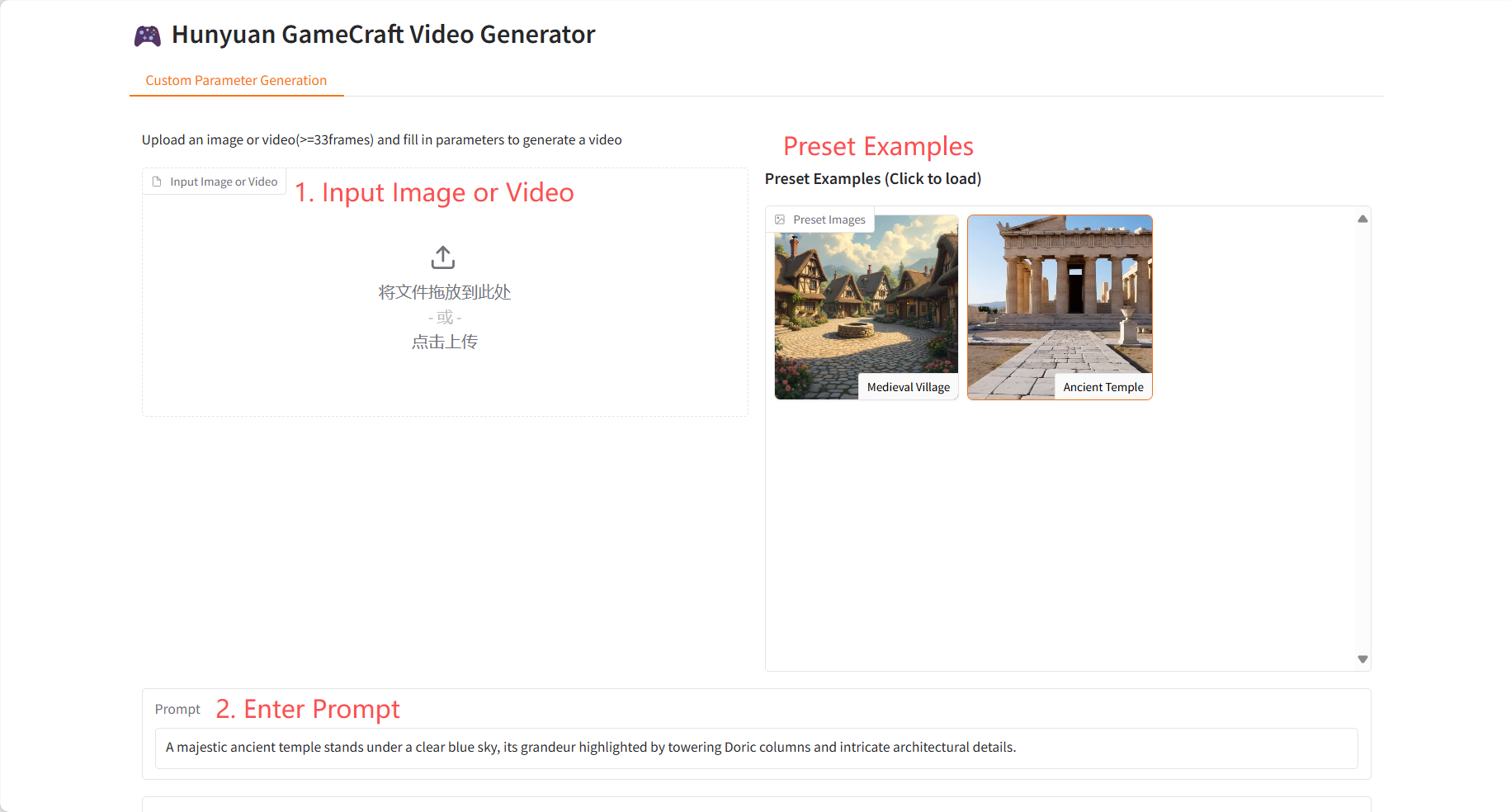

3. Operation Steps

1. Start the container

2. Usage steps

If the page shows "Bad Gateway", the model is still initializing. Because the model is large, wait about 5-6 minutes and then refresh the page. Confirm that initialization has finished before using the Gradio interface.
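Instead of refreshing by hand, the wait can be scripted. The following is a minimal sketch, not part of the tutorial itself: it polls the page until it stops answering with an error such as 502 Bad Gateway. The function names, the 30-second interval, and the 10-minute limit are illustrative assumptions, and the URL must be replaced with the address of your own container.

```python
import time
import urllib.error
import urllib.request


def http_status(url, timeout=10.0):
    """Return the HTTP status code for url, or 0 if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx, e.g. 502 while the model loads.
        return err.code
    except (urllib.error.URLError, OSError):
        return 0


def wait_until_ready(url, probe=http_status, interval=30.0, max_wait=600.0,
                     sleep=time.sleep):
    """Poll url until probe() returns 200; give up once max_wait seconds pass.

    probe and sleep are injectable so the loop can be tested without a network.
    The defaults (30 s interval, 600 s limit) are assumptions, chosen to cover
    the 5-6 minute initialization mentioned above with some margin.
    """
    waited = 0.0
    while True:
        if probe(url) == 200:
            return True
        if waited >= max_wait:
            return False
        sleep(interval)
        waited += interval


# Example (placeholder address, replace with your own):
# ready = wait_until_ready("http://<your-container-address>")
```

A return value of `True` only means the page answered with HTTP 200; it is still worth checking that the Gradio interface has fully loaded before submitting a job.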

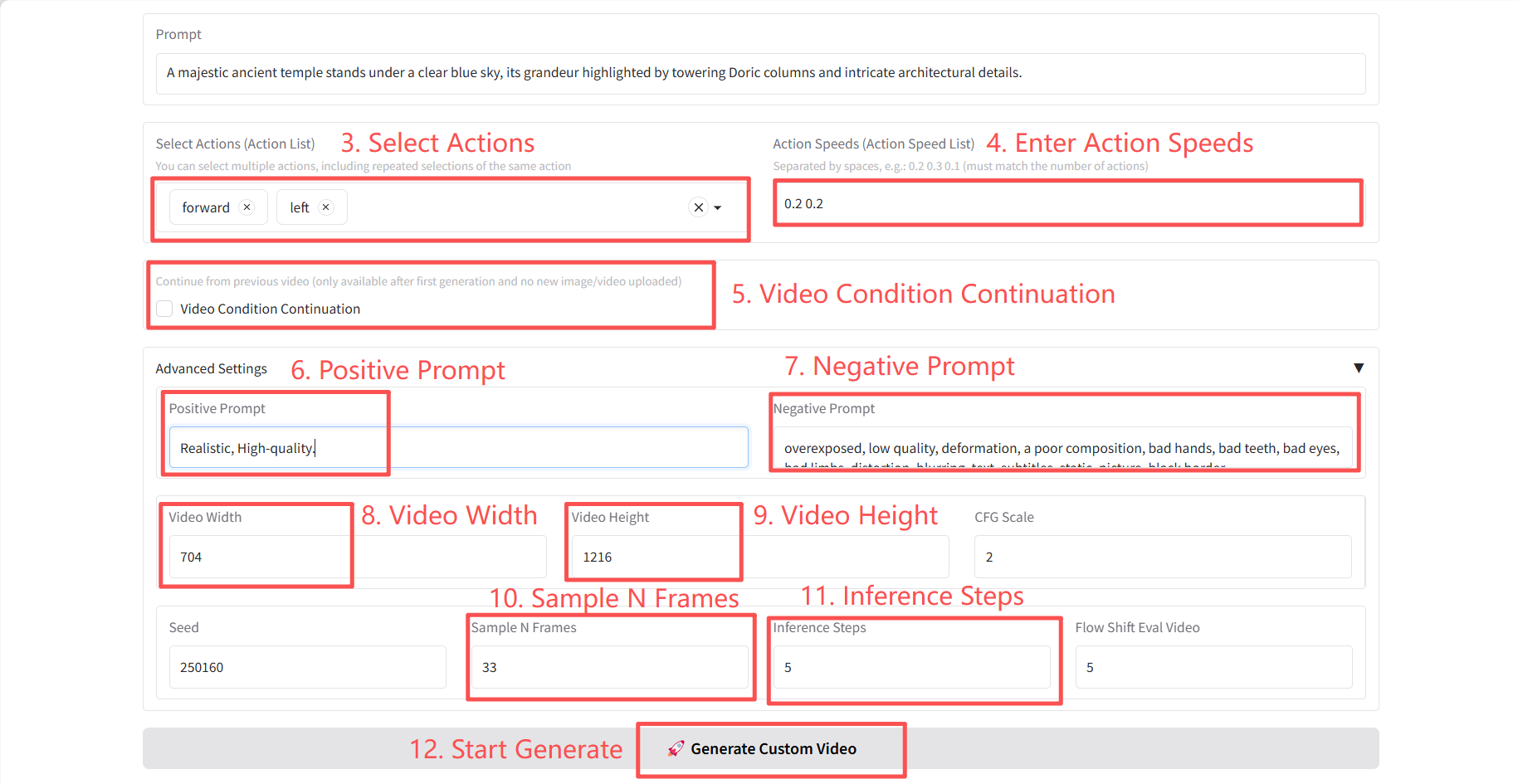

Note: More inference steps and more video frames generally improve the result but lengthen generation time, so set both with your time budget in mind. For reference, 5 inference steps and 33 video frames take about 15 minutes to generate one video.
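To get a rough feel for this trade-off, the reference point above (5 steps, 33 frames, about 15 minutes) can be scaled to other settings. The sketch below assumes that generation time grows in proportion to both the number of steps and the number of frames. That linear model is an assumption made here for illustration, not a measured property of the model; for larger frame counts the real time is likely to be higher, so treat the result as a rough lower bound.

```python
# Reference point taken from the note above.
REF_STEPS = 5
REF_FRAMES = 33
REF_MINUTES = 15.0


def estimate_minutes(steps, frames):
    """Crude estimate of generation time in minutes.

    Assumes time is proportional to steps * frames (an illustrative
    assumption, not a benchmark result).
    """
    if steps <= 0 or frames <= 0:
        raise ValueError("steps and frames must be positive")
    return REF_MINUTES * (steps / REF_STEPS) * (frames / REF_FRAMES)


if __name__ == "__main__":
    for steps, frames in [(5, 33), (10, 33), (5, 66)]:
        print(f"{steps} steps, {frames} frames: ~{estimate_minutes(steps, frames):.0f} min")
```

Under this assumption, doubling either the steps or the frames doubles the estimate, which is a useful sanity check before starting a long run.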

Specific parameters:

- Select Actions (Action List):

  - forward: Moves straight ahead along the current facing direction (directly in front of the camera/carrier) without changing orientation.

  - left: Moves sideways to the left of the current facing direction without changing orientation.

  - right: Moves sideways to the right of the current facing direction without changing orientation.

  - backward: Moves straight back, opposite to the current facing direction, without changing orientation.

  - up_rot: Rotates the view upward around the horizontal axis (pitch ↑), changing only the view direction, not the position.

  - right_rot: Rotates the camera to the right around the vertical axis (yaw →), changing only the orientation, not the position.

  - left_rot: Rotates the camera to the left around the vertical axis (yaw ←), changing only the orientation, not the position.

  - down_rot: Rotates the view downward around the horizontal axis (pitch ↓), changing only the view direction, not the position.

- CFG Scale: Controls how strongly the prompt influences the generated result. Larger values make the output follow the prompt more closely.

- Sample N Frames: The total number of frames in the generated video.

- Inference Steps: The number of iterative sampling steps used to generate the video.

- Flow Shift Eval Video: A parameter that controls the smoothness of the video.
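The eight actions above split into four translations (position changes, orientation fixed) and four rotations (orientation changes, position fixed). The sketch below illustrates that semantics on a simple camera pose. It is only a reading of the action list, not the model's internal camera representation: the `Pose` and `Move` types, the axis conventions, and the default step sizes (`move=1.0` unit, `turn=15.0` degrees) are all assumptions introduced for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Move:
    """Unit change in camera-local terms (hypothetical helper type)."""
    right: float = 0.0    # sideways translation, positive = right
    forward: float = 0.0  # translation along the view direction
    yaw: float = 0.0      # rotation about the vertical axis, positive = right
    pitch: float = 0.0    # rotation about the horizontal axis, positive = up


# Action names are taken from the list above; the values are illustrative.
ACTIONS = {
    "forward": Move(forward=1.0),
    "backward": Move(forward=-1.0),
    "left": Move(right=-1.0),
    "right": Move(right=1.0),
    "up_rot": Move(pitch=1.0),
    "down_rot": Move(pitch=-1.0),
    "left_rot": Move(yaw=-1.0),
    "right_rot": Move(yaw=1.0),
}


@dataclass(frozen=True)
class Pose:
    x: float = 0.0      # world axis pointing right when yaw == 0
    z: float = 0.0      # world axis pointing forward when yaw == 0
    yaw: float = 0.0    # degrees, positive = turned right
    pitch: float = 0.0  # degrees, positive = looking up


def step(pose, action, move=1.0, turn=15.0):
    """Apply one action; translations follow the current yaw."""
    d = ACTIONS[action]
    yaw_rad = math.radians(pose.yaw)
    # Camera forward in world coordinates is (sin yaw, cos yaw),
    # camera right is (cos yaw, -sin yaw).
    dx = d.forward * math.sin(yaw_rad) + d.right * math.cos(yaw_rad)
    dz = d.forward * math.cos(yaw_rad) - d.right * math.sin(yaw_rad)
    return Pose(pose.x + move * dx, pose.z + move * dz,
                pose.yaw + turn * d.yaw, pose.pitch + turn * d.pitch)


if __name__ == "__main__":
    pose = Pose()
    for name in ["forward", "right_rot", "forward", "up_rot"]:
        pose = step(pose, name)
        print(name, pose)
```

Note that "forward" after "right_rot" moves along the new heading, which matches the description of translations as relative to the current direction.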

4. Discussion

🖌️ If you come across a high-quality project, leave us a message to recommend it! We have also set up a tutorial discussion group. Scan the QR code and add the note [SD Tutorial] to join the group, discuss technical issues, and share your results.

Citation Information

Thanks to GitHub user SuperYang for deploying this tutorial. The citation information for this project is as follows:

@misc{li2025hunyuangamecrafthighdynamicinteractivegame,
  title={Hunyuan-GameCraft: High-dynamic Interactive Game Video Generation with Hybrid History Condition},
  author={Jiaqi Li and Junshu Tang and Zhiyong Xu and Longhuang Wu and Yuan Zhou and Shuai Shao and Tianbao Yu and Zhiguo Cao and Qinglin Lu},
  year={2025},
  eprint={2506.17201},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2506.17201},
}
