Hunyuan-MT-7B: Translation Model Demo

Size: 1.34 GB

License: Other

1. Tutorial Introduction

Hunyuan-MT-7B is a lightweight translation model released by the Tencent Hunyuan team in September 2025. With only 7 billion parameters, it supports translation among 33 languages as well as five Chinese ethnic minority languages and dialects. It took first place in 30 of 31 language categories at the Association for Computational Linguistics (ACL) WMT2025 competition. The model handles internet slang, classical Chinese poetry, and social conversation, and translates them in context rather than word for word. Its technical report proposes a complete training paradigm that runs from pre-training through integrated reinforcement learning. Inference is fast, and inference performance improves by a further 30% after the model is compressed with AngelSlim, Tencent's in-house compression tool.

This tutorial runs on a single RTX 4090 GPU.
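As a rough sanity check on why one card is enough, the sketch below estimates the memory taken by the weights alone. It assumes 16-bit weights (2 bytes per parameter) and 24 GB of VRAM on the RTX 4090; neither figure comes from this tutorial, and the estimate ignores the KV cache and activations, so treat it as a lower bound rather than a measurement.

```python
# Back-of-the-envelope estimate of weight memory for a 7B-parameter model.
# Assumptions (not from the tutorial): 2 bytes per parameter (fp16/bf16)
# and 24 GB of VRAM on a single RTX 4090.

def weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed for the weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


NUM_PARAMS = 7e9      # "7 billion parameters" from the introduction
GPU_VRAM_GIB = 24     # assumed RTX 4090 memory

needed = weight_memory_gib(NUM_PARAMS)
headroom = GPU_VRAM_GIB - needed

print(f"weights: about {needed:.1f} GiB, headroom: about {headroom:.1f} GiB")
```

The remaining headroom is what is left for the KV cache, activations, and the web interface process.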

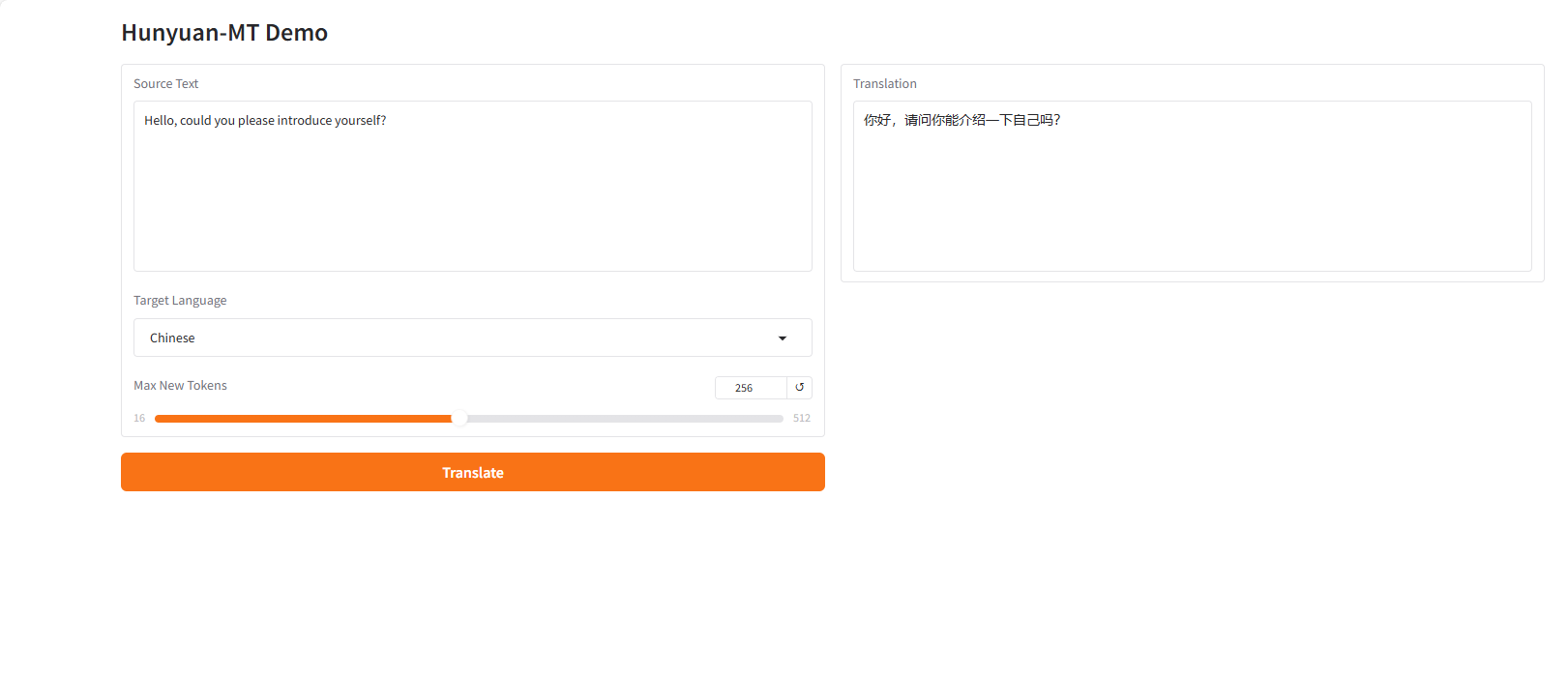

2. Project Examples

3. Operation Steps

1. After starting the container, click the API address to open the web interface.

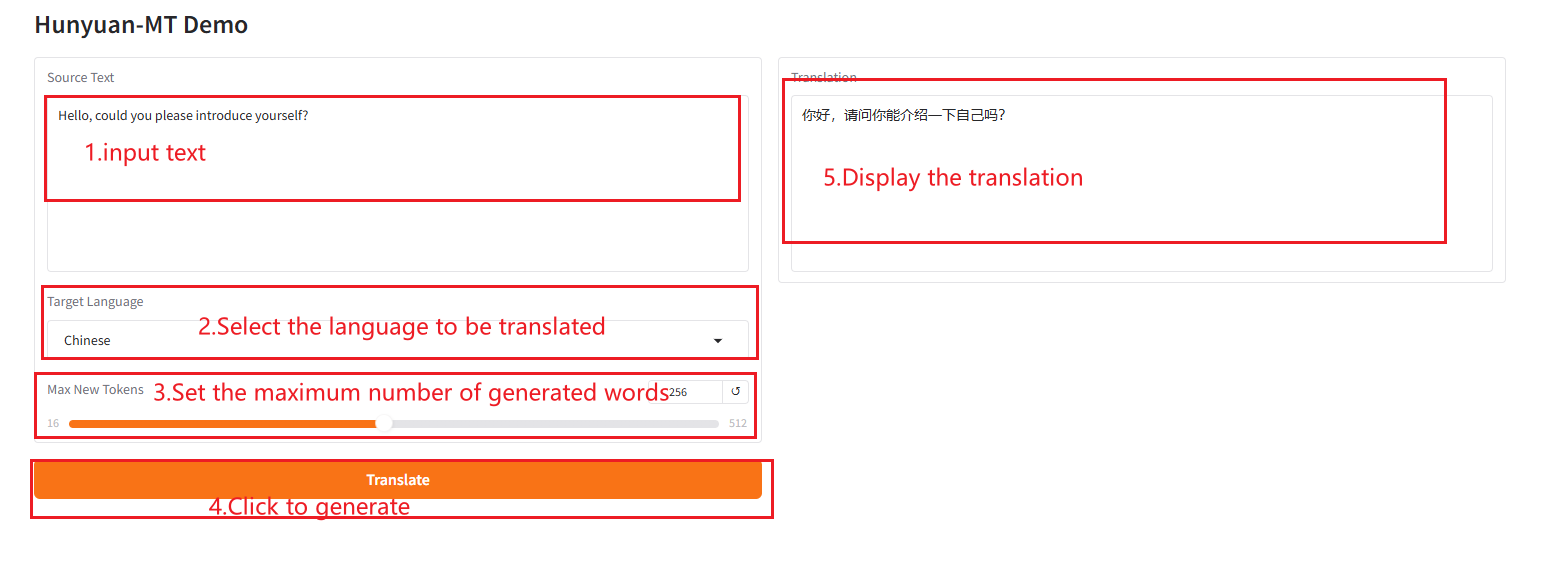

2. Usage steps

If "Bad Gateway" is displayed, the model is still initializing. Because the model is large, wait about 2-3 minutes and then refresh the page.
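Instead of refreshing by hand, the wait can be scripted. The following is a minimal sketch using only the Python standard library; the function names, the 15-second interval, and the 5-minute limit are illustrative choices, and it assumes the web interface answers with HTTP 200 once the model has loaded.

```python
import time
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url, or 0 if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # Raised for non-2xx answers, e.g. 502 Bad Gateway while loading.
        return err.code
    except (urllib.error.URLError, OSError):
        return 0


def wait_until_ready(url, fetch=fetch_status, interval=15.0,
                     max_wait=300.0, sleep=time.sleep) -> bool:
    """Poll url until it returns 200; give up after max_wait seconds of sleeping."""
    waited = 0.0
    while True:
        if fetch(url) == 200:
            return True
        if waited >= max_wait:
            return False
        sleep(interval)
        waited += interval
```

Call `wait_until_ready(...)` with the API address shown for your container; it returns `True` as soon as the page loads and `False` if the limit is reached first.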

4. Discussion

🖌️ If you come across a high-quality project, please leave us a message to recommend it! We have also set up a tutorial discussion group. Scan the QR code and add the note [SD Tutorial] to join the group, discuss technical issues, and share your results.

Citation Information

The citation information for this project is as follows:

@misc{hunyuanmt2025,
  title={Hunyuan-MT Technical Report},
  author={Mao Zheng and Zheng Li and Bingxin Qu and Mingyang Song and Yang Du and Mingrui Sun and Di Wang and Tao Chen and Jiaqi Zhu and Xingwu Sun and Yufei Wang and Can Xu and Chen Li and Kai Wang and Decheng Wu},
  howpublished={\url{https://github.com/Tencent-Hunyuan/Hunyuan-MT}},
  year={2025}
}