MiniCPM-V 4.0: An Extremely Efficient Edge-Side Large Model

1. Tutorial Introduction

MiniCPM-V 4.0 is an extremely efficient edge-side large model, open-sourced in August 2025 by the Natural Language Processing Laboratory of Tsinghua University in collaboration with ModelBest (面壁智能). It is built on SigLIP2-400M and MiniCPM4-3B, with a total parameter count of 4.1B. It inherits the strong single-image, multi-image, and video understanding performance of MiniCPM-V 2.6 while being significantly more efficient. ModelBest also open-sourced an iOS application for iPhone and iPad at the same time. On the OpenCompass benchmark, MiniCPM-V 4.0's image understanding capabilities surpass those of GPT-4.1-mini-20250414, Qwen2.5-VL-3B-Instruct, and InternVL2.5-8B. The related research paper is "MiniCPM-V: A GPT-4V Level MLLM on Your Phone".

This tutorial uses a single RTX 4090 graphics card as the computing resource. Three examples are provided for testing: chat with single or multiple images, chat with video, and few-shot learning.

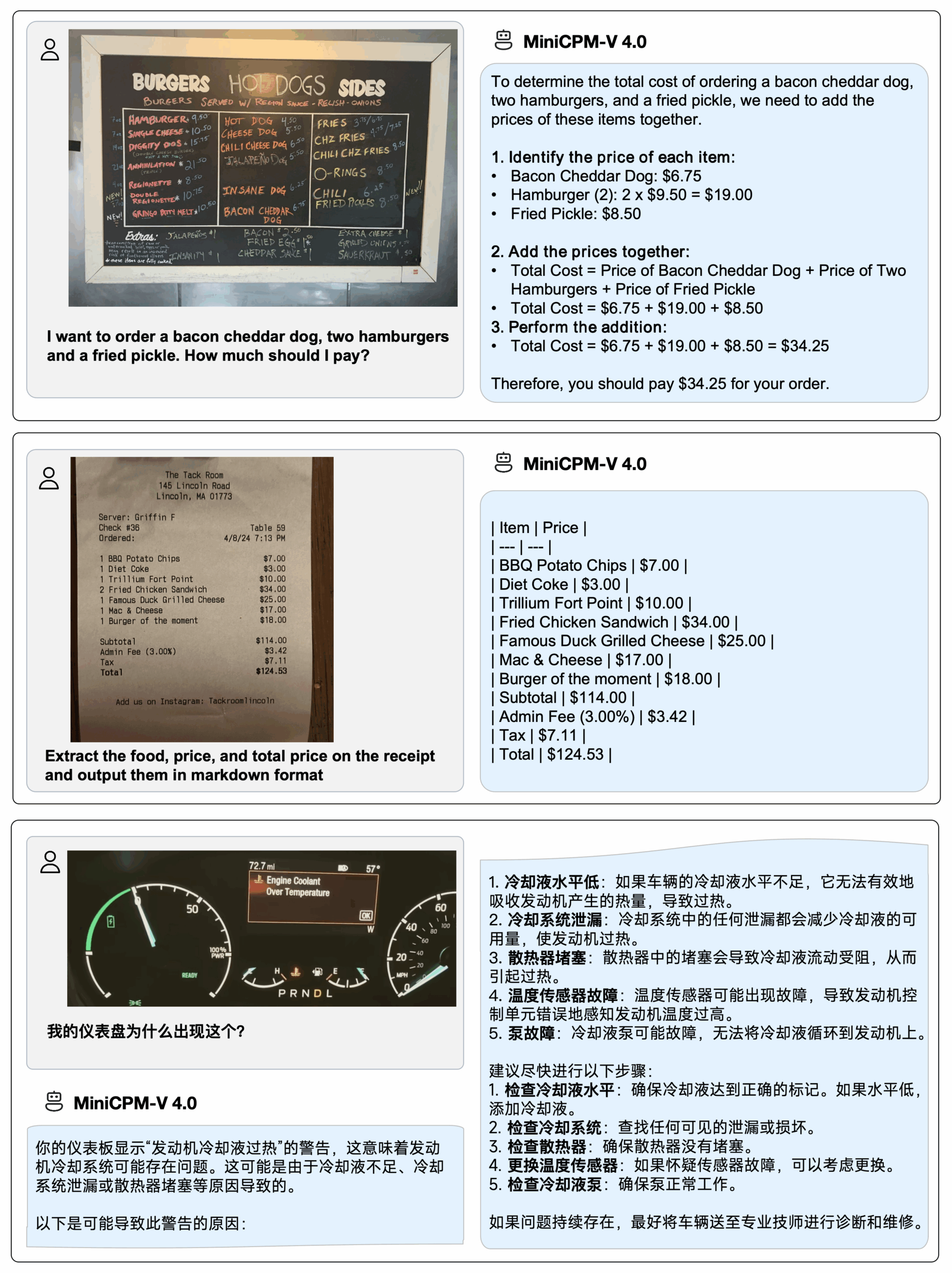

2. Demo

The iOS demo shows MiniCPM-V 4.0 deployed on an iPhone 16 Pro Max. The demo video is a raw, unedited screen recording.

3. Operation Steps

1. Start the container

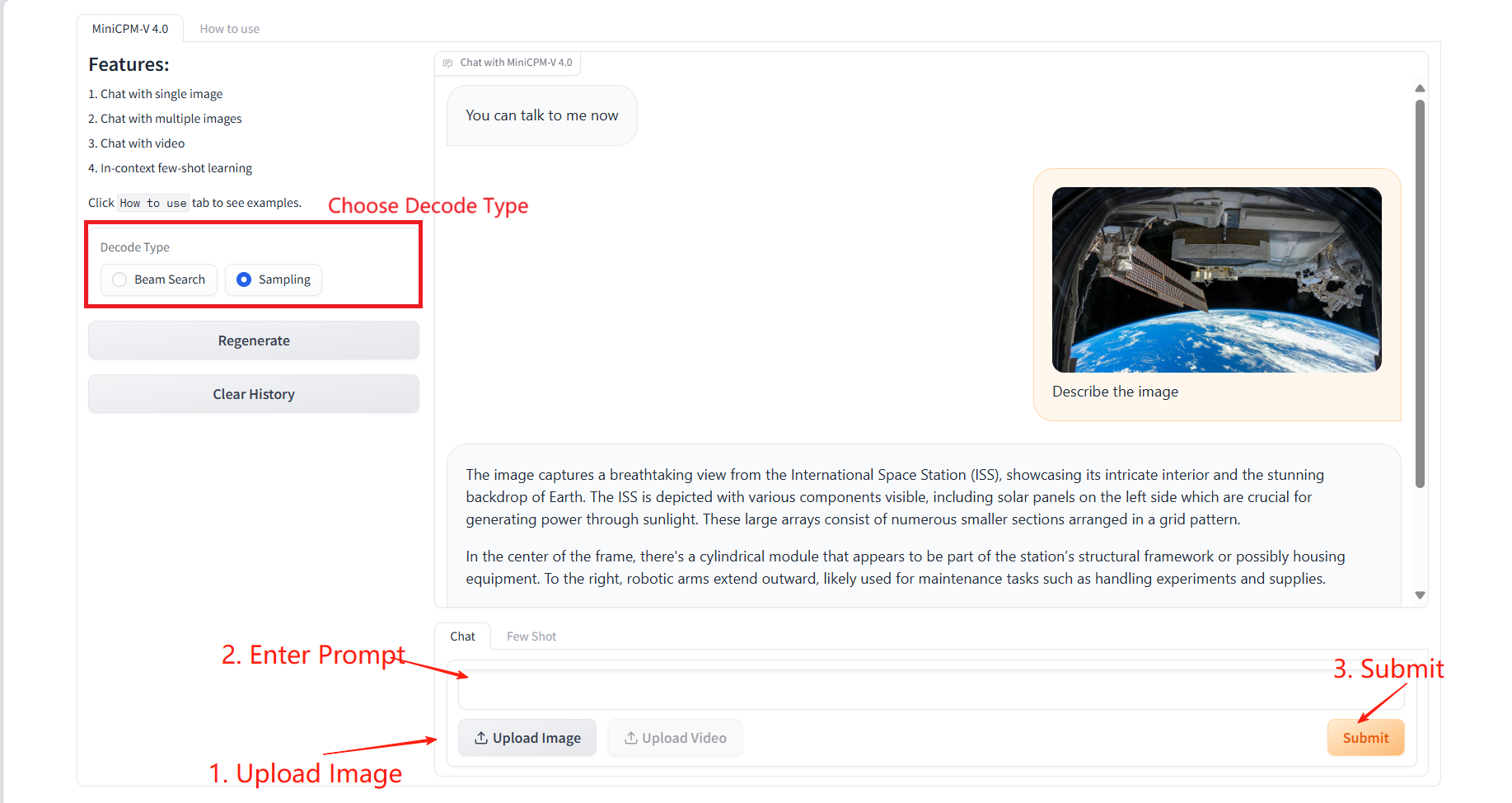

2. Usage steps

If "Bad Gateway" is displayed, the model is still initializing. Because the model is large, wait about 2-3 minutes and then refresh the page.
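The wait-and-refresh step can also be scripted. The following is a minimal sketch, assuming the web UI is reachable at a URL you supply and that an initializing backend answers with HTTP status 502 (Bad Gateway). The function names `http_status` and `wait_until_ready`, and the default interval and timeout, are hypothetical and not part of the tutorial.

```python
import time
import urllib.error
import urllib.request


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code of a GET request to `url`."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx responses; the status code is still available.
        return err.code


def wait_until_ready(url, fetch=http_status, interval=15.0, max_wait=300.0,
                     sleep=time.sleep):
    """Poll `url` until it stops returning 502 or `max_wait` seconds have passed.

    Returns True once a non-502 status is seen, False on timeout.
    `fetch` and `sleep` are injectable so the loop can be tested offline.
    """
    waited = 0.0
    while True:
        if fetch(url) != 502:
            return True
        if waited >= max_wait:
            return False
        sleep(interval)
        waited += interval
```

Calling `wait_until_ready("<your page URL>")` blocks until the page responds with something other than 502, or returns False after roughly five minutes with the assumed defaults.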

Click "How to use" to view the usage guide.

1. Chat with single or multiple images

Specific parameters:

- Decode Type:

  - Beam Search: A deterministic decoding method that keeps the most likely candidate sequences at each step. It suits scenarios that require accurate and consistent output.

  - Sampling: Randomly samples the next token from the model's probability distribution. The output is more varied and creative but may be less stable.
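The difference between the two decode types can be illustrated on a toy example. This is a minimal sketch, not the model's actual decoder: the `NEXT` table of next-token probabilities is invented for illustration, and `beam_search` and `sample_sequence` are hypothetical helper names.

```python
import math
import random

# Toy next-token distributions keyed by the previous token (made-up numbers).
NEXT = {
    "<s>": {"a": 0.6, "the": 0.4},
    "a": {"cat": 0.5, "dog": 0.5},
    "the": {"cat": 0.9, "dog": 0.1},
    "cat": {"</s>": 1.0},
    "dog": {"</s>": 1.0},
}


def beam_search(num_beams=2, max_len=3):
    """Keep the `num_beams` highest-scoring partial sequences at every step."""
    beams = [(0.0, ["<s>"])]  # (cumulative log-probability, tokens)
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            if seq[-1] == "</s>":
                # Finished sequences are carried over unchanged.
                candidates.append((score, seq))
                continue
            for tok, p in NEXT[seq[-1]].items():
                candidates.append((score + math.log(p), seq + [tok]))
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:num_beams]
    return beams[0][1]


def sample_sequence(rng, max_len=3):
    """Draw each next token at random according to its probability."""
    seq = ["<s>"]
    for _ in range(max_len):
        if seq[-1] == "</s>":
            break
        dist = NEXT[seq[-1]]
        seq.append(rng.choices(list(dist), weights=list(dist.values()))[0])
    return seq
```

With two beams the search returns the same sequence on every call and recovers "the cat" (probability 0.36), which a single greedy beam misses by committing early to "a" (best completion 0.30). `sample_sequence(random.Random())` can return a different sequence on each call, which is the source of both the extra variety and the instability described above.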

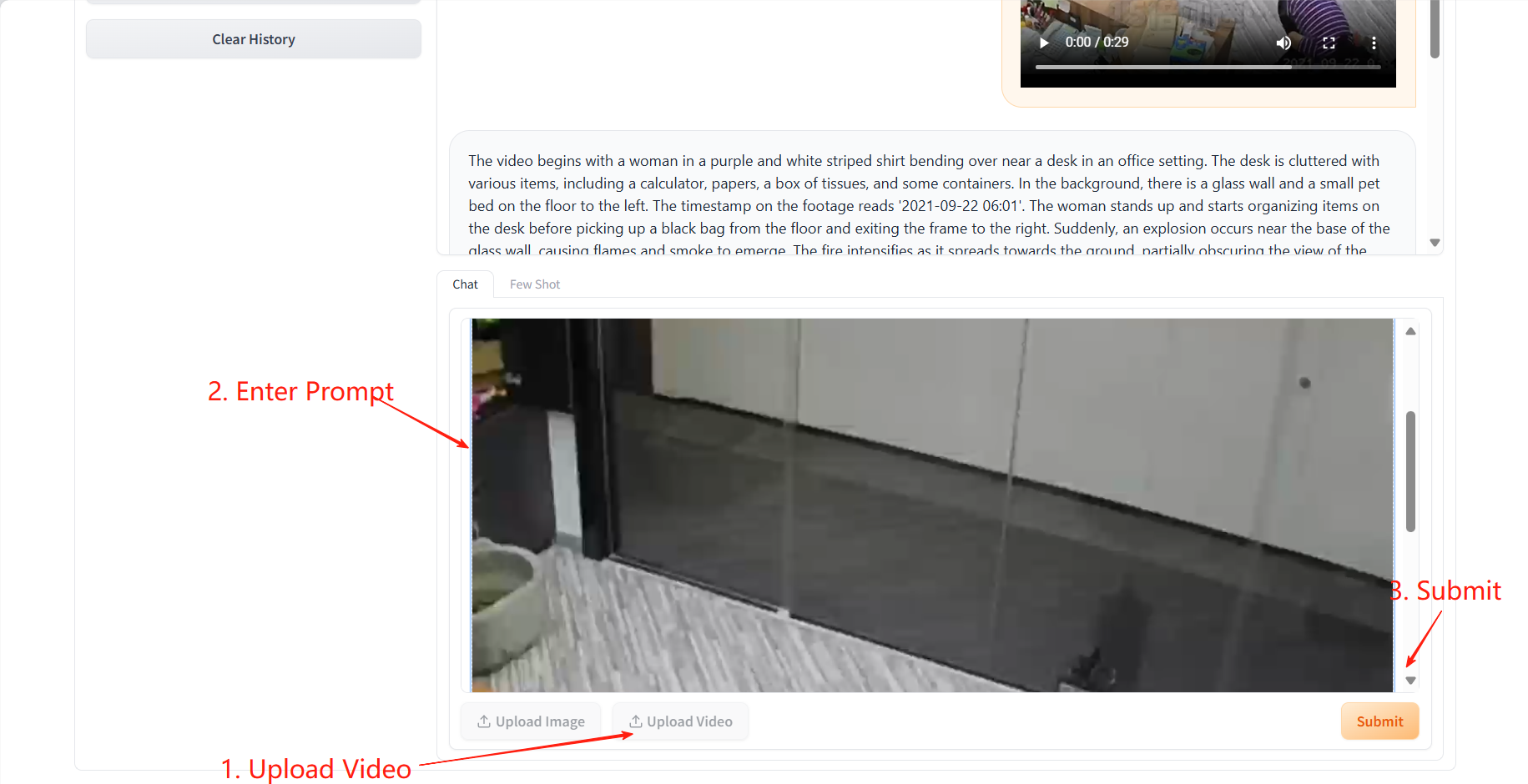

2. Chat with video

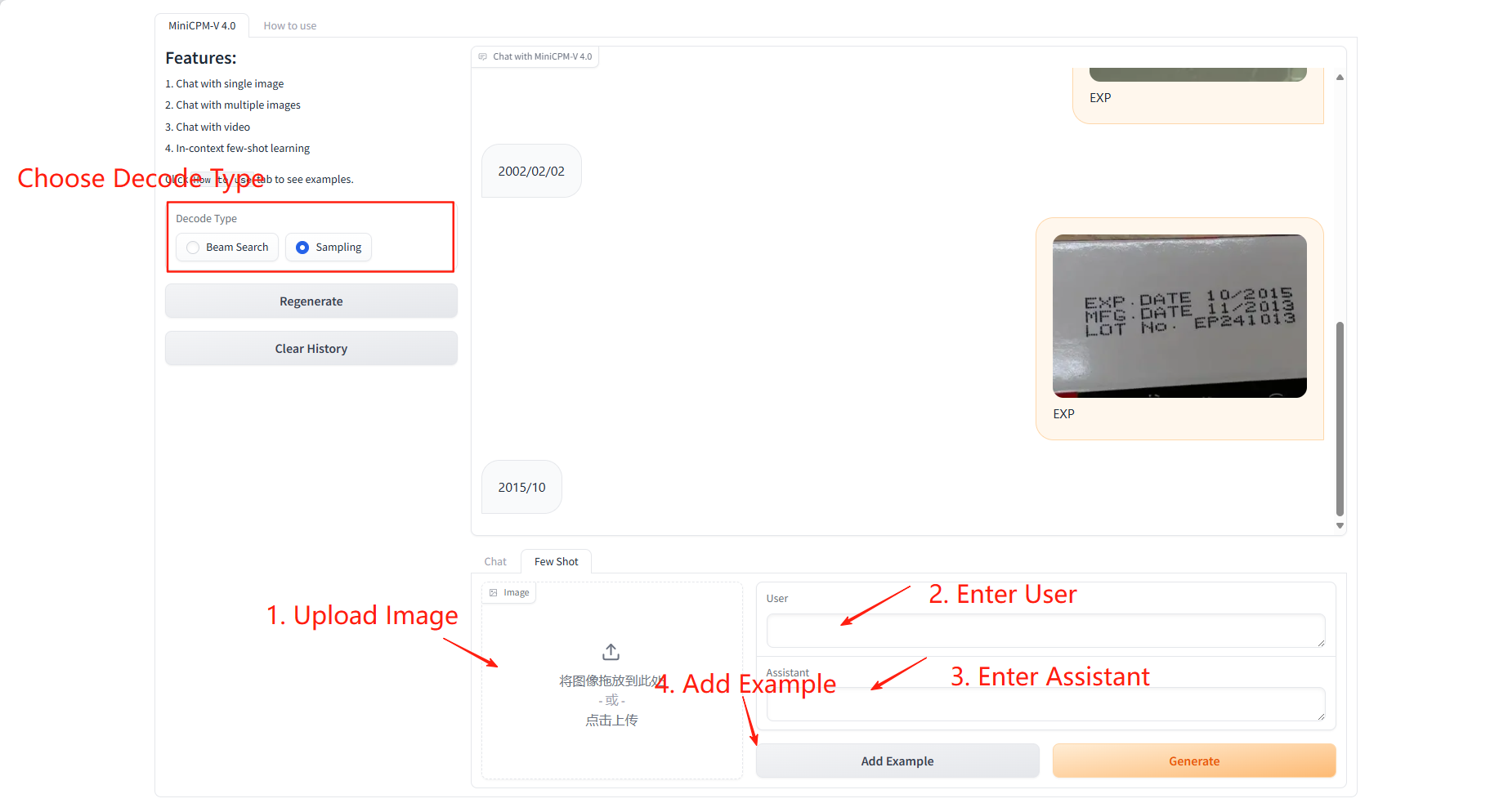

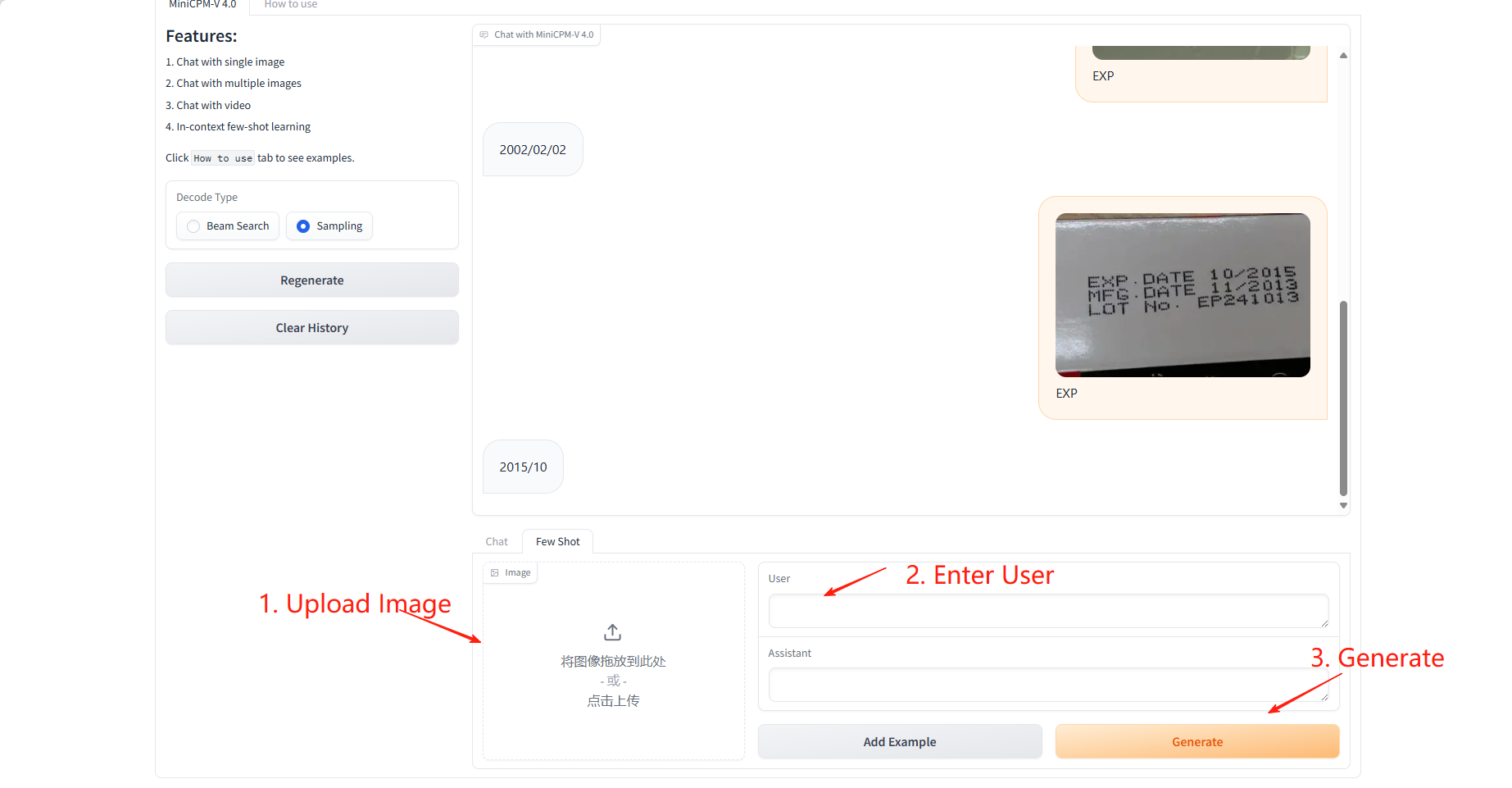

3. Few shot

Sample Learning

Specific parameters:

- User: Enter the question or field that this example should teach the model to answer.

- Assistant: Enter the expected answer for that question or field in this example.

Result prediction
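Conceptually, the two steps above build a chat history of example (User, Assistant) turns followed by one final User turn that the model must answer. The sketch below shows one way to assemble such a history. The message layout (a list of `role`/`content` dictionaries with the image placed before the text) is an assumption modeled on common multimodal chat interfaces, and `build_few_shot_messages` is a hypothetical helper, not a function provided by the tutorial.

```python
from typing import Any, Dict, List, Sequence, Tuple

Message = Dict[str, Any]


def build_few_shot_messages(
    examples: Sequence[Tuple[Any, str]],
    query_image: Any,
    question: str,
) -> List[Message]:
    """Turn (image, answer) examples plus a final query into a chat history.

    Images are treated as opaque objects; in practice they would typically be
    loaded image objects, while file paths are enough for illustration.
    """
    msgs: List[Message] = []
    for image, answer in examples:
        # "Sample Learning": one User turn and its Assistant answer per example.
        msgs.append({"role": "user", "content": [image, question]})
        msgs.append({"role": "assistant", "content": [answer]})
    # "Result prediction": the final User turn has no answer yet.
    msgs.append({"role": "user", "content": [query_image, question]})
    return msgs


history = build_few_shot_messages(
    examples=[("receipt_1.png", "2023-05-08"), ("receipt_2.png", "2023-06-11")],
    query_image="receipt_3.png",
    question="production date",
)
```

Here `history` contains five turns: two example pairs and the open query. The file names and the question are placeholders.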

Citation Information

The citation information for this project is as follows:

@article{yao2024minicpm,
  title={MiniCPM-V: A GPT-4V Level MLLM on Your Phone},
  author={Yao, Yuan and Yu, Tianyu and Zhang, Ao and Wang, Chongyi and Cui, Junbo and Zhu, Hongji and Cai, Tianchi and Li, Haoyu and Zhao, Weilin and He, Zhihui and others},
  journal={Nature Communications},
  volume={16},
  pages={5509},
  year={2025}
}

@article{yao2024minicpm,
  title={MiniCPM-V: A GPT-4V Level MLLM on Your Phone},
  author={Yao, Yuan and Yu, Tianyu and Zhang, Ao and Wang, Chongyi and Cui, Junbo and Zhu, Hongji and Cai, Tianchi and Li, Haoyu and Zhao, Weilin and He, Zhihui and others},
  journal={arXiv preprint arXiv:2408.01800},
  year={2024}
}
