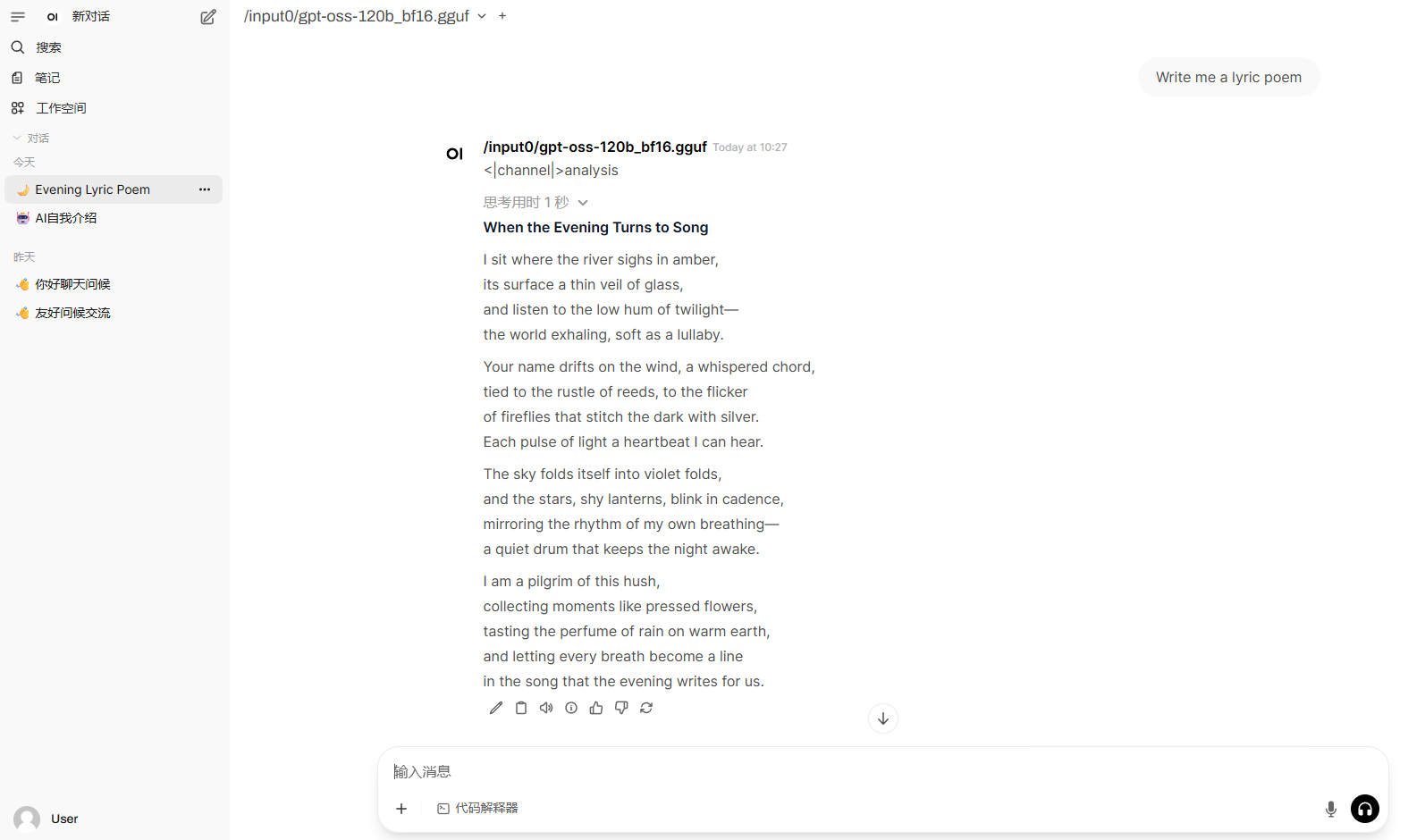

Deploy gpt-oss-120b with llama.cpp + Open WebUI

1. Tutorial Introduction

gpt-oss-120b is an open-weight reasoning model released by OpenAI in August 2025. It is designed for strong reasoning, agentic tasks, and a wide range of developer use cases. Built on a mixture-of-experts (MoE) architecture, it supports a 128k context length and delivers performance comparable to OpenAI's closed-source o4-mini and o3-mini. It performs especially well on tool use, few-shot function calling, chain-of-thought reasoning, and health-related questions.

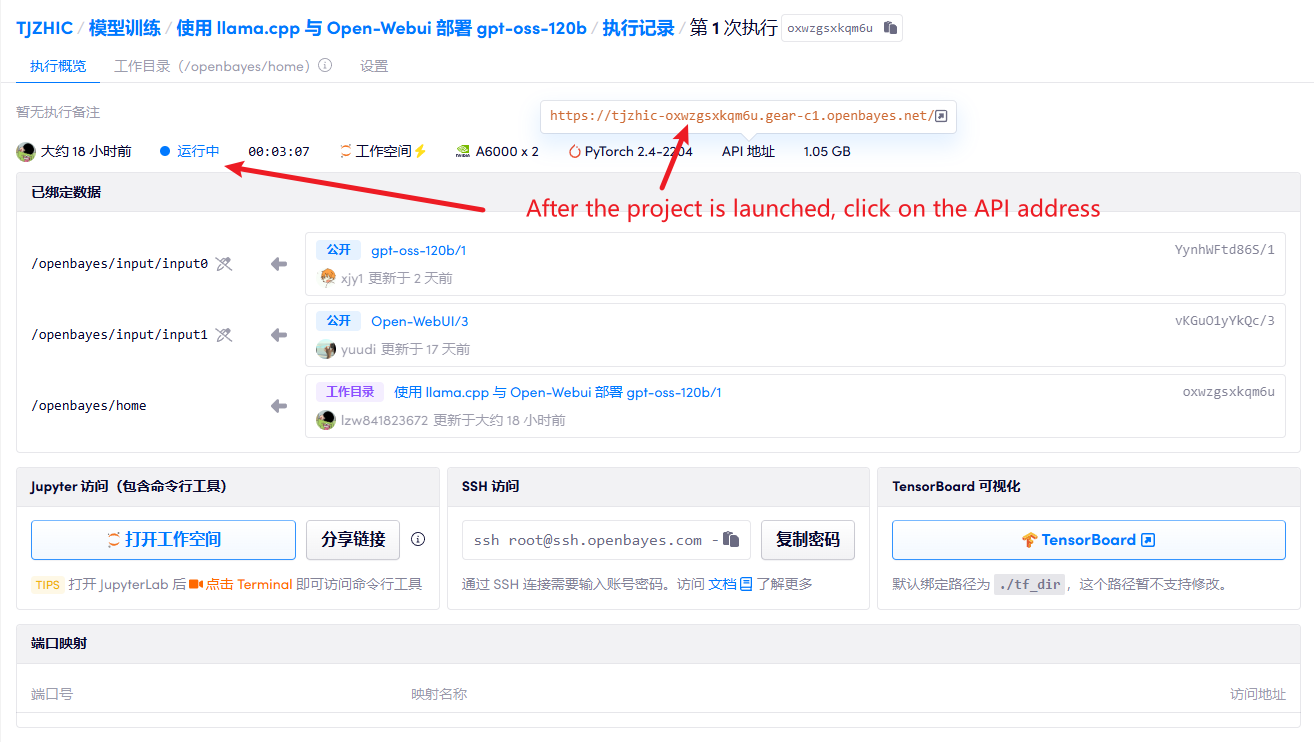

This tutorial runs on two NVIDIA RTX A6000 GPUs (48 GB each).

2. Project Examples

3. Operation Steps

1. After the container starts, click the API address to open the web interface.

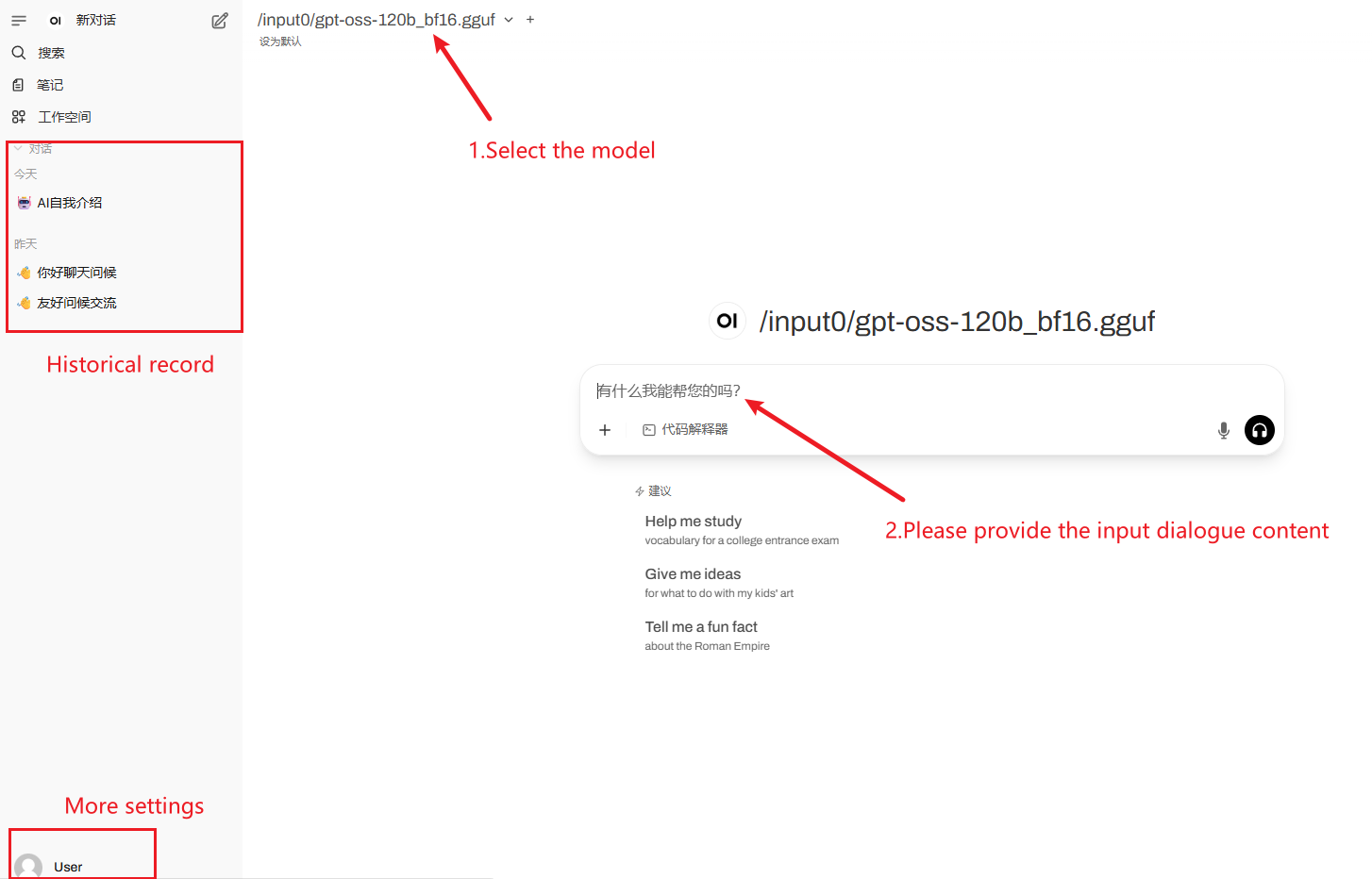

2. Once the page loads, you can start chatting with the model.

If no model appears in the "Model" selector, the model is still initializing. Because the model is large, wait about 2-3 minutes and then refresh the page.
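Instead of refreshing by hand, you can poll the server until the model has loaded. This is a minimal sketch, assuming the backend is llama.cpp's `llama-server`, whose `/health` endpoint answers HTTP 503 while the model is loading and HTTP 200 once it is ready. The URL `http://localhost:8080/health` is a hypothetical placeholder for wherever the server listens in your container, and `server_is_ready` / `wait_until_ready` are illustrative helpers, not part of llama.cpp or Open WebUI.

```python
import time
import urllib.request
from typing import Callable

# Hypothetical address of the llama.cpp server; adjust for your container.
HEALTH_URL = "http://localhost:8080/health"


def server_is_ready(url: str = HEALTH_URL, timeout: float = 5.0) -> bool:
    """Return True if the health endpoint answers HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # HTTPError (e.g. 503 while loading) and URLError (connection refused)
        # are both subclasses of OSError, as are socket timeouts.
        return False


def wait_until_ready(
    probe: Callable[[], bool] = server_is_ready,
    timeout_s: float = 300.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll `probe` until it returns True or `timeout_s` seconds of waiting elapse."""
    waited = 0.0
    while True:
        if probe():
            return True
        if waited >= timeout_s:
            return False
        sleep(interval_s)
        waited += interval_s
```

Calling `wait_until_ready()` with the defaults waits up to 300 seconds, which leaves headroom over the 2-3 minute load time mentioned above. The injectable `probe` and `sleep` arguments make the loop easy to test without a live server.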

How to use

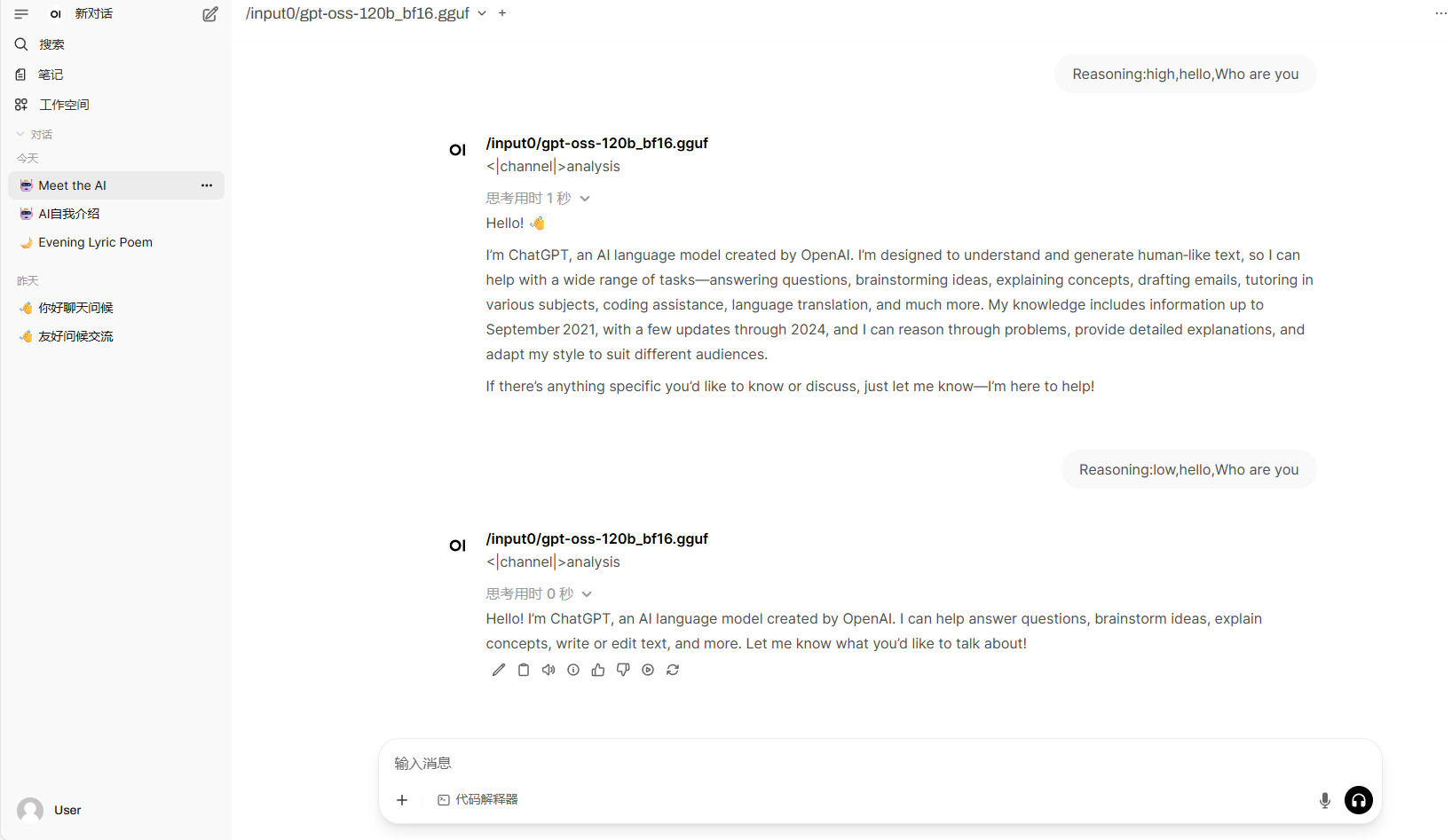

Note: In this tutorial, you can adjust the model's reasoning level by adding "Reasoning: low", "Reasoning: medium", or "Reasoning: high" before your prompt.
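The same prefix can be applied when calling the model from a script. This is a minimal sketch, assuming the llama.cpp server behind the web interface is reachable through an OpenAI-compatible `/v1/chat/completions` endpoint. The base URL `http://localhost:8080` and the model name `gpt-oss-120b` are hypothetical placeholders, the newline between the tag and the prompt is an assumption, and `with_reasoning`, `build_chat_request`, and `send_chat` are illustrative helpers, not part of llama.cpp or Open WebUI.

```python
import json
import urllib.request
from typing import Literal

ReasoningLevel = Literal["low", "medium", "high"]
VALID_LEVELS = ("low", "medium", "high")

# Hypothetical values: replace with your deployment's address and model name.
BASE_URL = "http://localhost:8080"
MODEL = "gpt-oss-120b"


def with_reasoning(prompt: str, level: ReasoningLevel = "medium") -> str:
    """Prefix a prompt with the 'Reasoning: <level>' tag."""
    if level not in VALID_LEVELS:
        raise ValueError(f"level must be one of {VALID_LEVELS}, got {level!r}")
    # Assumption: a newline separates the tag from the prompt text.
    return f"Reasoning: {level}\n{prompt}"


def build_chat_request(prompt: str, level: ReasoningLevel = "medium", model: str = MODEL) -> dict:
    """Build an OpenAI-style chat completion payload for a single user turn."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": with_reasoning(prompt, level)}],
    }


def send_chat(prompt: str, level: ReasoningLevel = "medium",
              base_url: str = BASE_URL, timeout: float = 600.0) -> str:
    """POST the request to an OpenAI-compatible endpoint and return the reply text."""
    body = json.dumps(build_chat_request(prompt, level)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.load(resp)
    # OpenAI-compatible servers put the reply under choices[0].message.content.
    return data["choices"][0]["message"]["content"]
```

For example, `with_reasoning("Prove that the square root of 2 is irrational.", "high")` returns `"Reasoning: high\nProve that the square root of 2 is irrational."`, and `send_chat` sends that text as the user message. OpenAI's own gpt-oss guidance places the `Reasoning:` line in the system prompt; prepending it to the user prompt follows this tutorial's instruction instead.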

4. Discussion

🖌️ If you come across a high-quality project, feel free to leave us a message in the backend to recommend it! We have also set up a tutorial exchange group: scan the QR code and add the note [SD Tutorial] to join, discuss technical issues, and share your results.
