
Deploy EXAONE-4.0-32B Using vLLM + Open WebUI

1. Tutorial Introduction

EXAONE 4.0 is a next-generation hybrid reasoning AI model released by LG AI Research (South Korea) on July 15, 2025. It is also the first hybrid reasoning AI model developed in South Korea. The model combines general natural language processing capabilities with the advanced reasoning capabilities validated in EXAONE Deep, and delivers strong results in demanding domains such as mathematics, science, and programming. It supports MCP and function calling, which provides a technical foundation for agentic AI. The released 32B professional model has passed the written examinations of six national professional certifications. Its scores on recent high-difficulty global benchmarks are as follows: knowledge reasoning, MMLU-Pro 81.8; programming, LiveCodeBench v6 66.7; scientific literacy, GPQA-Diamond 75.4; mathematics, AIME 2025 85.3. The accompanying research paper is EXAONE 4.0: Unified Large Language Models Integrating Non-reasoning and Reasoning Modes.

This tutorial runs on a dual-GPU setup with two NVIDIA RTX A6000 cards.
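Why two GPUs: a rough estimate, assuming about 32 billion parameters stored in BF16 (2 bytes each), puts the weights alone near 60 GiB, more than a single 48 GB A6000 can hold. vLLM tensor parallelism shards the weights across both cards, roughly halving the per-GPU share and leaving room for the KV cache (which this estimate does not count). The sketch below does that arithmetic and builds a matching `vllm serve` command. The Hugging Face model ID `LGAI-EXAONE/EXAONE-4.0-32B`, port 8000, and the helper names are assumptions for illustration, not necessarily what the tutorial container runs.

```python
# Back-of-the-envelope memory estimate plus a matching vLLM launch command.
# Assumptions (not from the tutorial): ~32e9 parameters, BF16 weights
# (2 bytes each), the model ID below, and port 8000.

GIB = 1024 ** 3


def weight_gib_per_gpu(num_params: float, bytes_per_param: int, tp_size: int) -> float:
    """Approximate weight memory per GPU when tensor parallelism splits weights evenly.

    Ignores KV cache, activations, and CUDA overhead.
    """
    return num_params * bytes_per_param / tp_size / GIB


def vllm_serve_command(model_id: str, tp_size: int, port: int = 8000) -> list[str]:
    """Build an argument list for the `vllm serve` CLI."""
    return [
        "vllm", "serve", model_id,
        "--tensor-parallel-size", str(tp_size),  # shard across tp_size GPUs
        "--port", str(port),
    ]


if __name__ == "__main__":
    total = weight_gib_per_gpu(32e9, 2, tp_size=1)
    per_gpu = weight_gib_per_gpu(32e9, 2, tp_size=2)
    print(f"weights total: ~{total:.1f} GiB, per GPU with TP=2: ~{per_gpu:.1f} GiB")
    print(" ".join(vllm_serve_command("LGAI-EXAONE/EXAONE-4.0-32B", tp_size=2)))
    # To launch for real: subprocess.run(vllm_serve_command(...), check=True)
```

Passing the command as a list (rather than a shell string) makes it safe to hand directly to `subprocess.run` without shell quoting issues.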

2. Project Examples

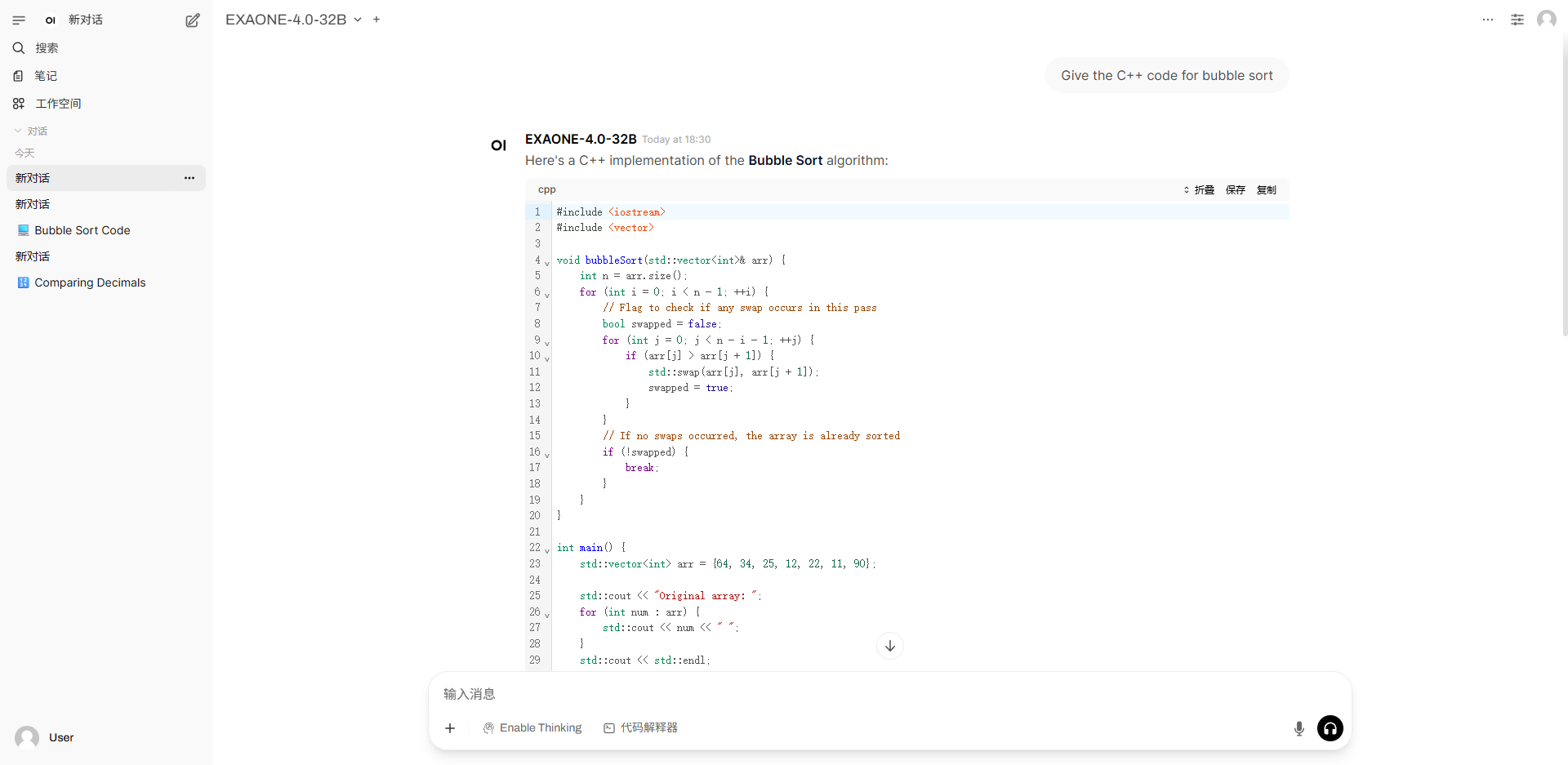

1. Thinking mode off

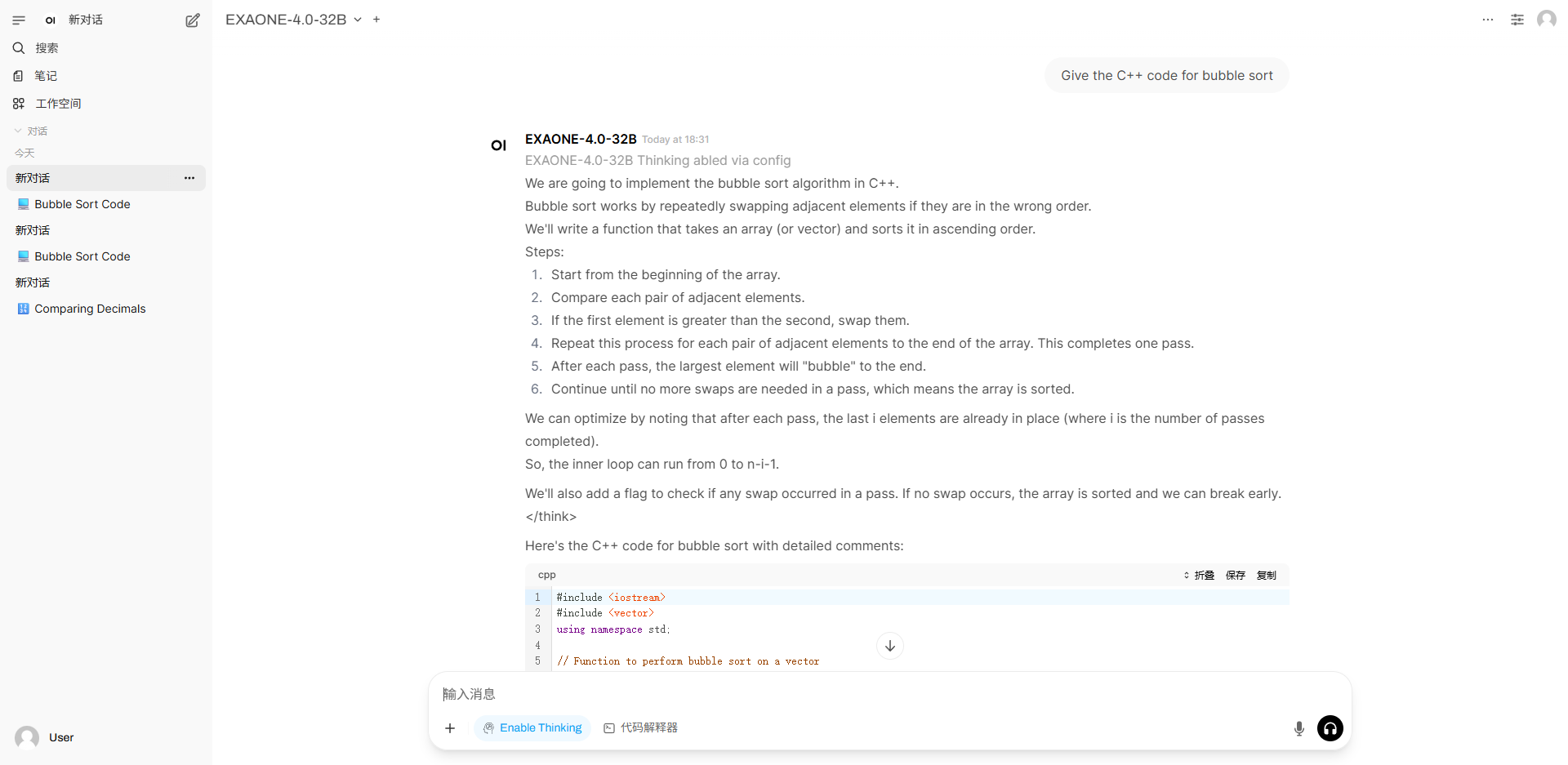

2. Thinking mode on

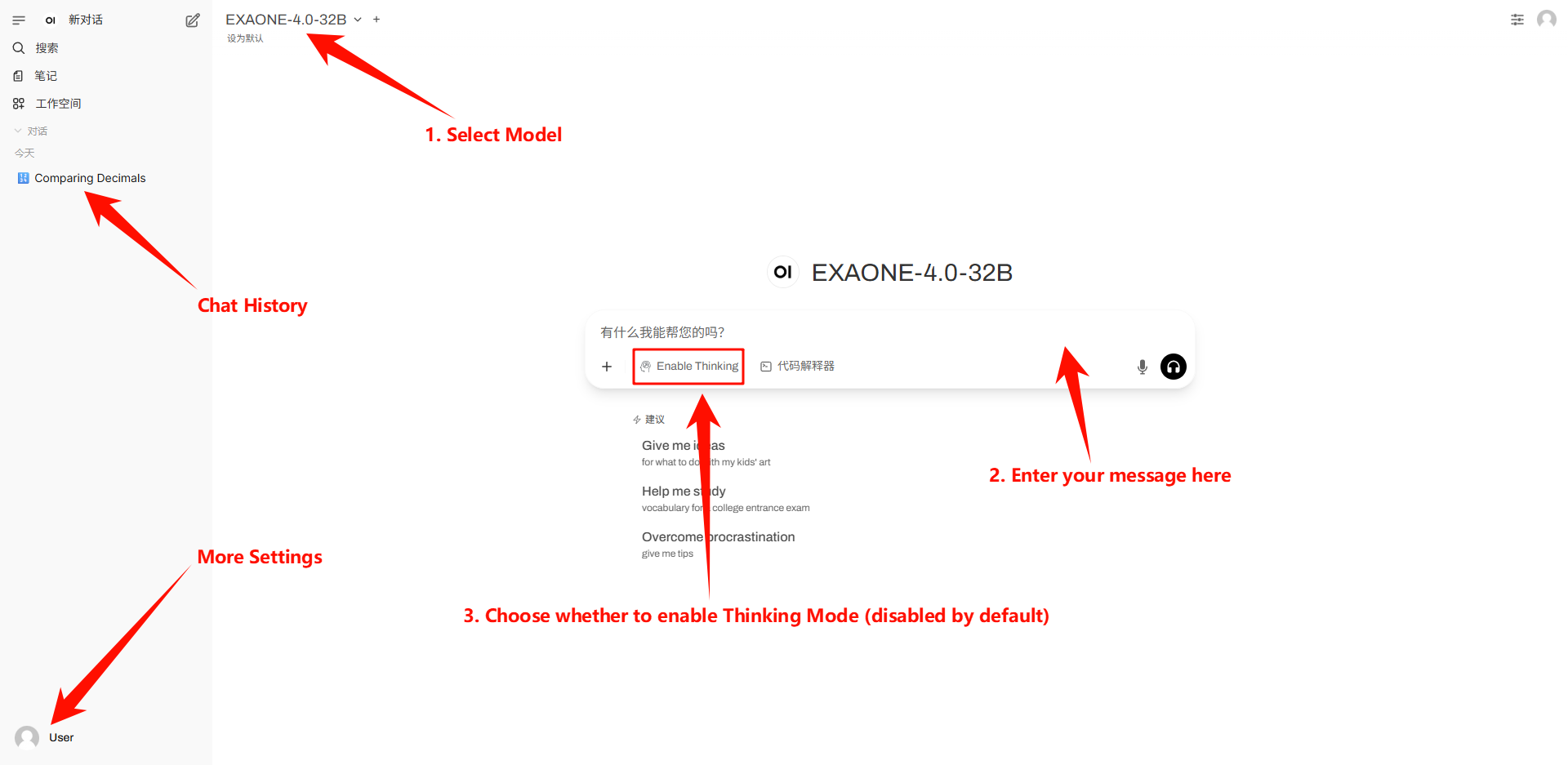
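Outside the web interface, the same on/off switch can likely be reached through vLLM's OpenAI-compatible API. The minimal sketch below assumes the server listens on `localhost:8000`, that EXAONE 4.0's chat template reads an `enable_thinking` flag, and that vLLM forwards `chat_template_kwargs` from the request to that template. The URL, model ID, and helper names are illustrative assumptions; check them against your deployment.

```python
import json
import urllib.request

# Assumptions: vLLM OpenAI-compatible server on localhost:8000, this model ID,
# and an `enable_thinking` switch in the EXAONE 4.0 chat template.
BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "LGAI-EXAONE/EXAONE-4.0-32B"


def build_chat_request(prompt: str, thinking: bool) -> dict:
    """Build a chat-completions payload that turns reasoning mode on or off."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        # Extra parameter passed through to the model's chat template.
        "chat_template_kwargs": {"enable_thinking": thinking},
    }


def send_chat_request(payload: dict) -> str:
    """POST the payload to the server and return the assistant's reply text."""
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    for mode in (False, True):
        payload = build_chat_request("How many primes are below 20?", thinking=mode)
        print(json.dumps(payload, indent=2))
        # print(send_chat_request(payload))  # uncomment once the server is up
```

Only standard-library modules are used, so the request builder can be checked without the server running; `send_chat_request` needs the live endpoint.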

3. Operation steps

1. After the container starts, click the API address to open the web interface.

2. Once the page loads, you can start a conversation with the model.

If no model appears under "Model", the model is still initializing. Because the model is large, wait about 2-3 minutes and then refresh the page.
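Instead of refreshing by hand, you can poll the backend until the model is listed. This is a minimal sketch assuming Open WebUI is connected to vLLM's OpenAI-compatible API at `localhost:8000` and that the model only appears in the `/v1/models` response once loading finishes. The port, timeout values, and function names are hypothetical; `fetch`, `sleep`, and `clock` are injectable so the loop can be tested without a server.

```python
import json
import time
import urllib.request
from typing import Callable


def fetch_models(base_url: str = "http://localhost:8000/v1") -> dict:
    """GET the model list from an OpenAI-compatible server (assumed URL)."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=5) as resp:
        return json.load(resp)


def wait_for_model(
    model_id: str,
    fetch: Callable[[], dict] = fetch_models,
    timeout_s: float = 300.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll until `model_id` is listed; return False if the timeout expires."""
    deadline = clock() + timeout_s
    while True:
        try:
            listed = {m["id"] for m in fetch().get("data", [])}
            if model_id in listed:
                return True
        except OSError:
            pass  # server not accepting connections yet (URLError is an OSError)
        if clock() >= deadline:
            return False
        sleep(interval_s)


if __name__ == "__main__":
    ready = wait_for_model("LGAI-EXAONE/EXAONE-4.0-32B")
    print("model ready" if ready else "still loading, check the server logs")
```

A 300-second default comfortably covers the 2-3 minute warm-up mentioned above; raise it if your storage is slow.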

How to use

4. Discussion

🖌️ If you come across a high-quality project, please leave us a message to recommend it! We have also set up a tutorial discussion group: scan the QR code and add the note [SD Tutorial] to join, discuss technical issues, and share your results↓

Citation Information

The citation information for this project is as follows:

@article{exaone-4.0,
  title={EXAONE 4.0: Unified Large Language Models Integrating Non-reasoning and Reasoning Modes},
  author={{LG AI Research}},
  journal={arXiv preprint arXiv:2507.11407},
  year={2025}
}