HealthGPT: AI Medical Assistant

Size: 600.63 MB

License: Apache 2.0

Paper URL: https://arxiv.org/abs/2502.09838

1. Tutorial Introduction

HealthGPT is a medical large vision-language model (Med-LVLM) jointly released on March 16, 2025 by Zhejiang University, the University of Electronic Science and Technology of China, Alibaba, the Hong Kong University of Science and Technology, and the National University of Singapore. It unifies medical visual comprehension and generation tasks in a single framework through heterogeneous knowledge adaptation. Its heterogeneous low-rank adaptation (H-LoRA) technique stores the knowledge for comprehension tasks and for generation tasks in independent plugins, which avoids conflicts between the two. HealthGPT comes in two versions: HealthGPT-M3 (3.8 billion parameters), based on the Phi-3-mini pre-trained language model, and HealthGPT-L14 (14 billion parameters), based on Phi-4. The model also introduces hierarchical visual perception (HVP) and a three-stage learning strategy (TLS) to improve visual feature learning and task adaptation. The accompanying paper, "HealthGPT: A Medical Large Vision-Language Model for Unifying Comprehension and Generation via Heterogeneous Knowledge Adaptation", was accepted at ICML 2025 and selected as a Spotlight.
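To make the "independent plugins" idea more concrete, here is a deliberately simplified Python sketch of a frozen linear layer with one low-rank adapter per task. It is not the HealthGPT implementation, and the actual H-LoRA design in the paper may differ in detail. The class name `TaskPluginLinear`, the task names, and all dimensions are hypothetical and chosen only for illustration.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class TaskPluginLinear:
    """Frozen linear layer plus one low-rank (A, B) plugin per task (illustrative only)."""

    def __init__(self, d_in, d_out, rank, tasks, seed=0):
        rng = random.Random(seed)
        # Frozen base weight, shared by every task.
        self.base = [[rng.gauss(0, 0.1) for _ in range(d_in)] for _ in range(d_out)]
        # Independent plugins: A projects down to `rank`, B projects back up.
        # B starts at zero, so a plugin changes nothing until it is trained.
        self.plugins = {
            task: {
                "A": [[rng.gauss(0, 0.1) for _ in range(d_in)] for _ in range(rank)],
                "B": [[0.0] * rank for _ in range(d_out)],
            }
            for task in tasks
        }

    def forward(self, x, task):
        """Apply the base weight, then add the update from the selected task's plugin."""
        plugin = self.plugins[task]
        base_out = matvec(self.base, x)
        delta = matvec(plugin["B"], matvec(plugin["A"], x))
        return [b + d for b, d in zip(base_out, delta)]


layer = TaskPluginLinear(d_in=4, d_out=3, rank=2, tasks=["comprehension", "generation"])
x = [1.0, 0.5, -0.5, 2.0]

# Stand-in for training: modify only the generation plugin.
layer.plugins["generation"]["B"][0][0] = 1.0

out_comprehension = layer.forward(x, "comprehension")
out_generation = layer.forward(x, "generation")
```

Because each task reads and writes only its own `A` and `B` matrices, changing the generation plugin leaves the comprehension output untouched. That is the conflict-avoidance property the paragraph above describes.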

This tutorial runs on a single RTX A6000 GPU. English prompts are recommended.

The project provides two models:

- HealthGPT-M3: A smaller version optimized for speed and reduced memory usage.

- HealthGPT-L14: A larger version designed for higher performance and more complex tasks.

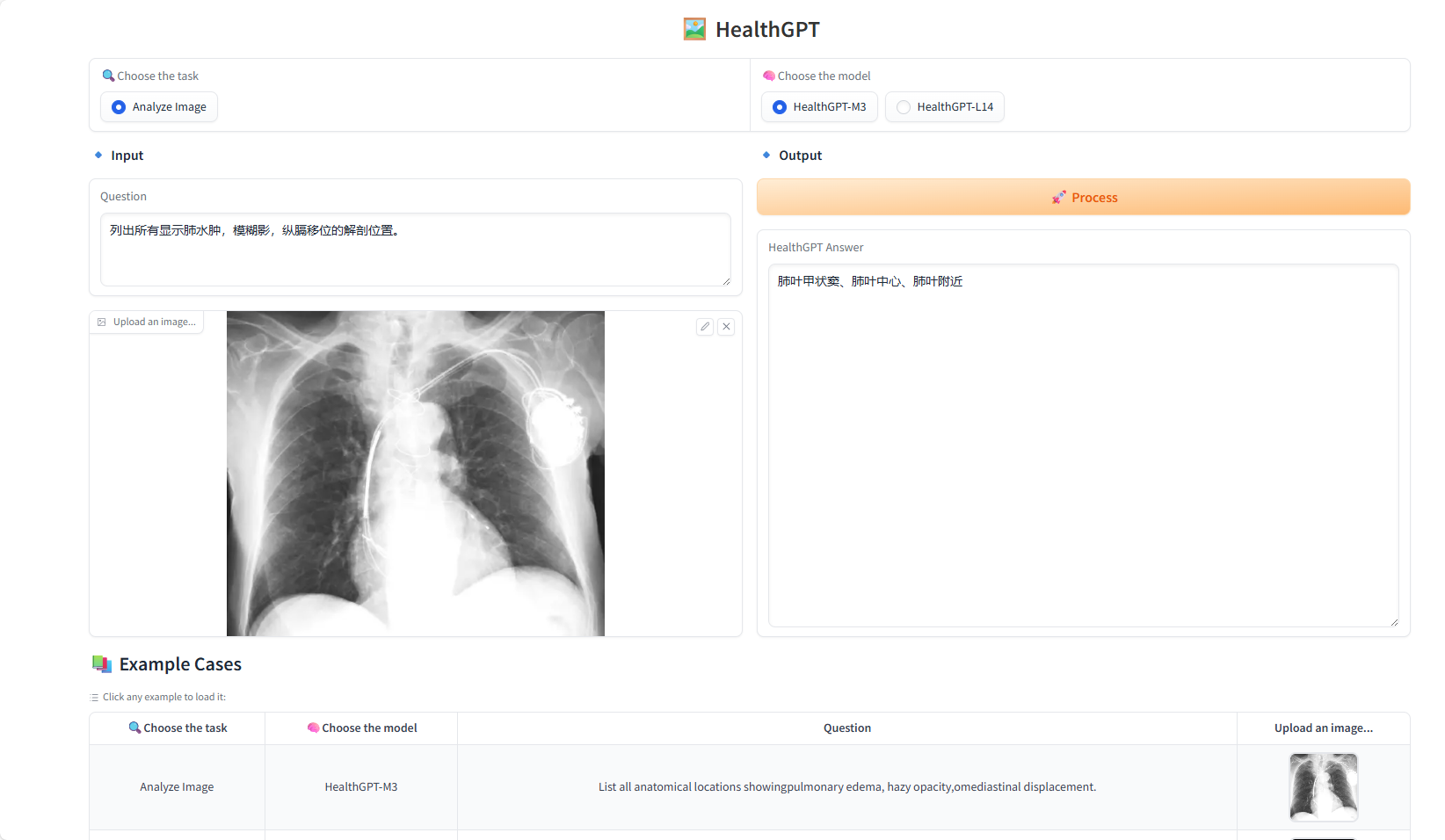

2. Project Examples

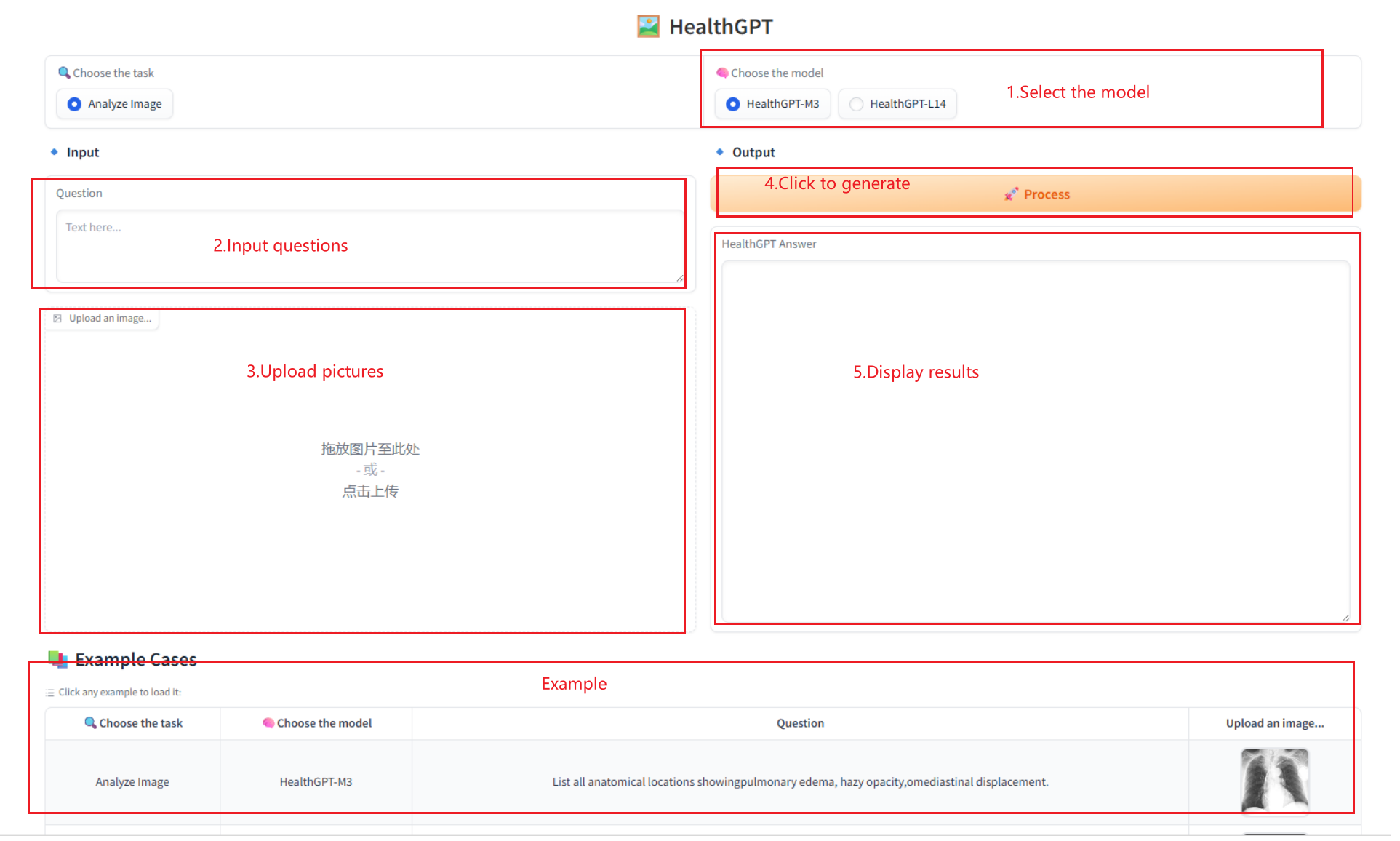

3. Operation Steps

1. After starting the container, click the API address to open the web interface.

2. Usage steps

If "Bad Gateway" is displayed, the model is still initializing. Because the model is large, wait about 2-3 minutes and then refresh the page.
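If you prefer not to refresh by hand, the wait can be scripted. The following is a minimal sketch using only the Python standard library. It assumes that the web interface answers with HTTP 200 once the model has loaded and with 502 (Bad Gateway) while it is still initializing. The function names, the 15-second polling interval, and the 5-minute limit are illustrative choices and not part of the tutorial.

```python
import time
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the HTTP status code for `url`, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 502 while the model is still loading
    except (urllib.error.URLError, OSError):
        return None


def wait_until_ready(url, fetch=fetch_status, interval=15, max_wait=300, sleep=time.sleep):
    """Poll `url` until it returns HTTP 200 or `max_wait` seconds have been spent waiting."""
    waited = 0
    while True:
        if fetch(url) == 200:
            return True
        if waited >= max_wait:
            return False
        sleep(interval)
        waited += interval
```

Call `wait_until_ready` with the API address shown for your container. It returns `True` as soon as the page responds normally and `False` if the limit is reached first.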

4. Discussion

🖌️ If you come across a high-quality project, please leave us a message to recommend it! We have also set up a tutorial discussion group. You are welcome to scan the QR code and add the note [SD Tutorial] to join the group, discuss technical issues, and share your results.

Citation Information

Thanks to GitHub user xxxjjjyyy1 for deploying this tutorial. The citation information for this project is as follows:

@misc{lin2025healthgptmedicallargevisionlanguage,
  title={HealthGPT: A Medical Large Vision-Language Model for Unifying Comprehension and Generation via Heterogeneous Knowledge Adaptation},
  author={Tianwei Lin and Wenqiao Zhang and Sijing Li and Yuqian Yuan and Binhe Yu and Haoyuan Li and Wanggui He and Hao Jiang and Mengze Li and Xiaohui Song and Siliang Tang and Jun Xiao and Hui Lin and Yueting Zhuang and Beng Chin Ooi},
  year={2025},
  eprint={2502.09838},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2502.09838},
}