OmniGen2: Exploring Advanced Multimodal Generation

Size: 1.62 GB

License: Apache 2.0

1. Tutorial Introduction

OmniGen2 is an open-source multimodal generative model released by the Beijing Academy of Artificial Intelligence (BAAI) on June 16, 2025. It aims to provide a unified solution for a range of generation tasks, including text-to-image generation, image editing, and in-context generation. Unlike OmniGen v1, OmniGen2 uses two separate decoding pathways for the text and image modalities, with unshared parameters and a decoupled image tokenizer. This design lets OmniGen2 build on an existing multimodal understanding model without re-adapting it to VAE inputs, so the base model's original text generation capabilities are preserved. Its core innovations are this dual-path architecture and a self-reflection mechanism, which its authors position as a new benchmark among current open-source multimodal models. The accompanying paper is "OmniGen2: Exploration to Advanced Multimodal Generation".

This tutorial runs on a single RTX A6000 GPU. English prompts currently give better results than prompts in other languages.

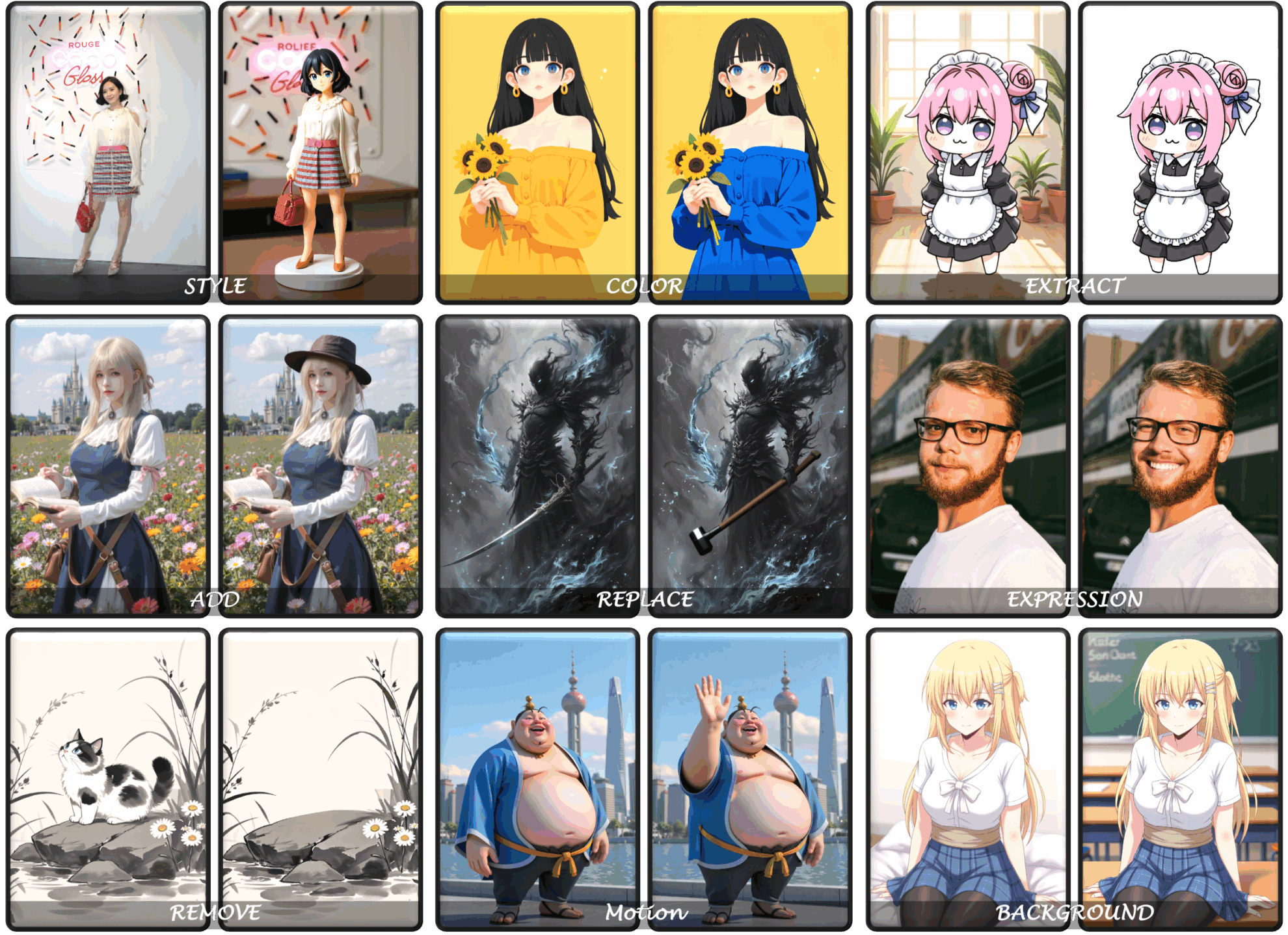

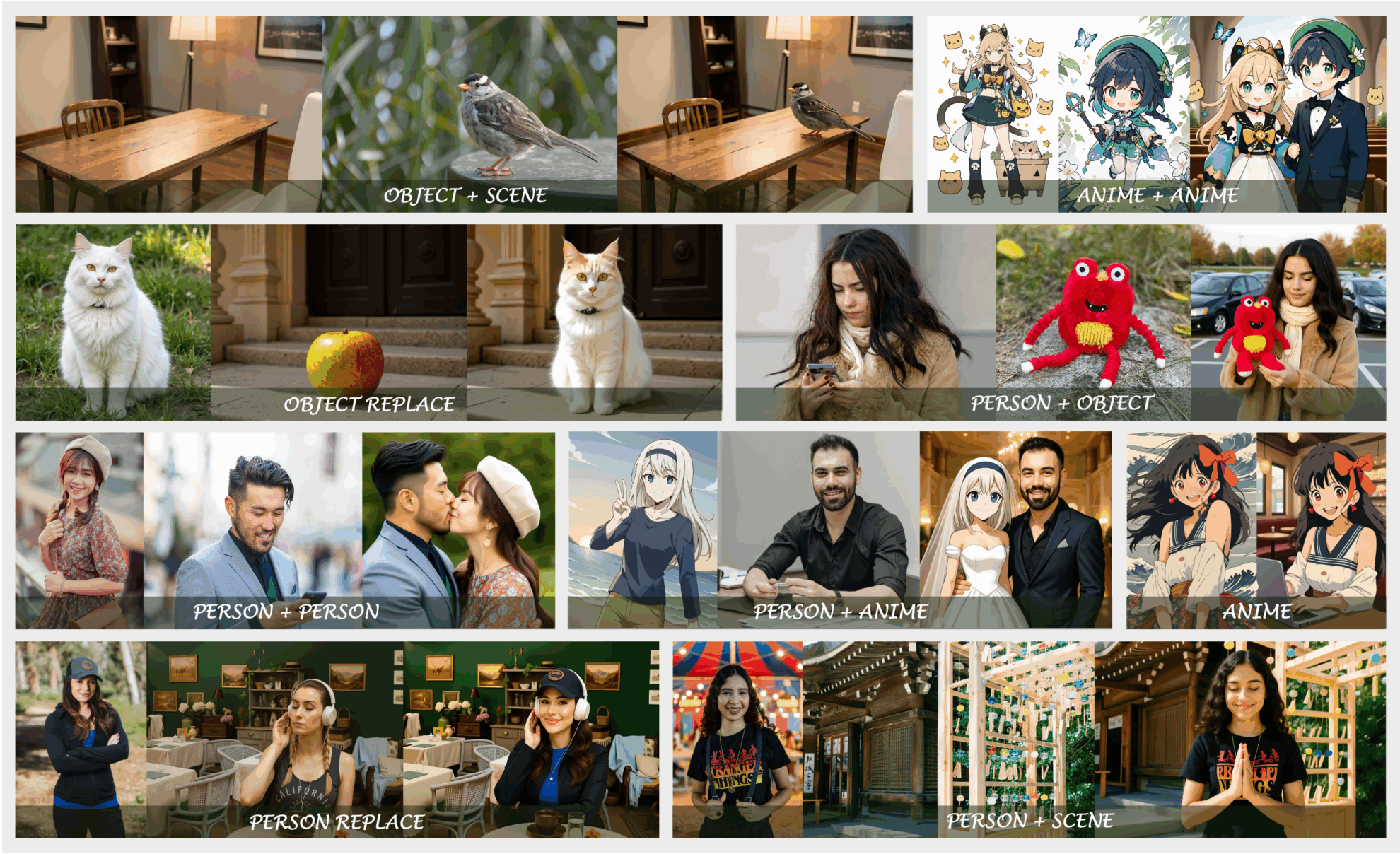

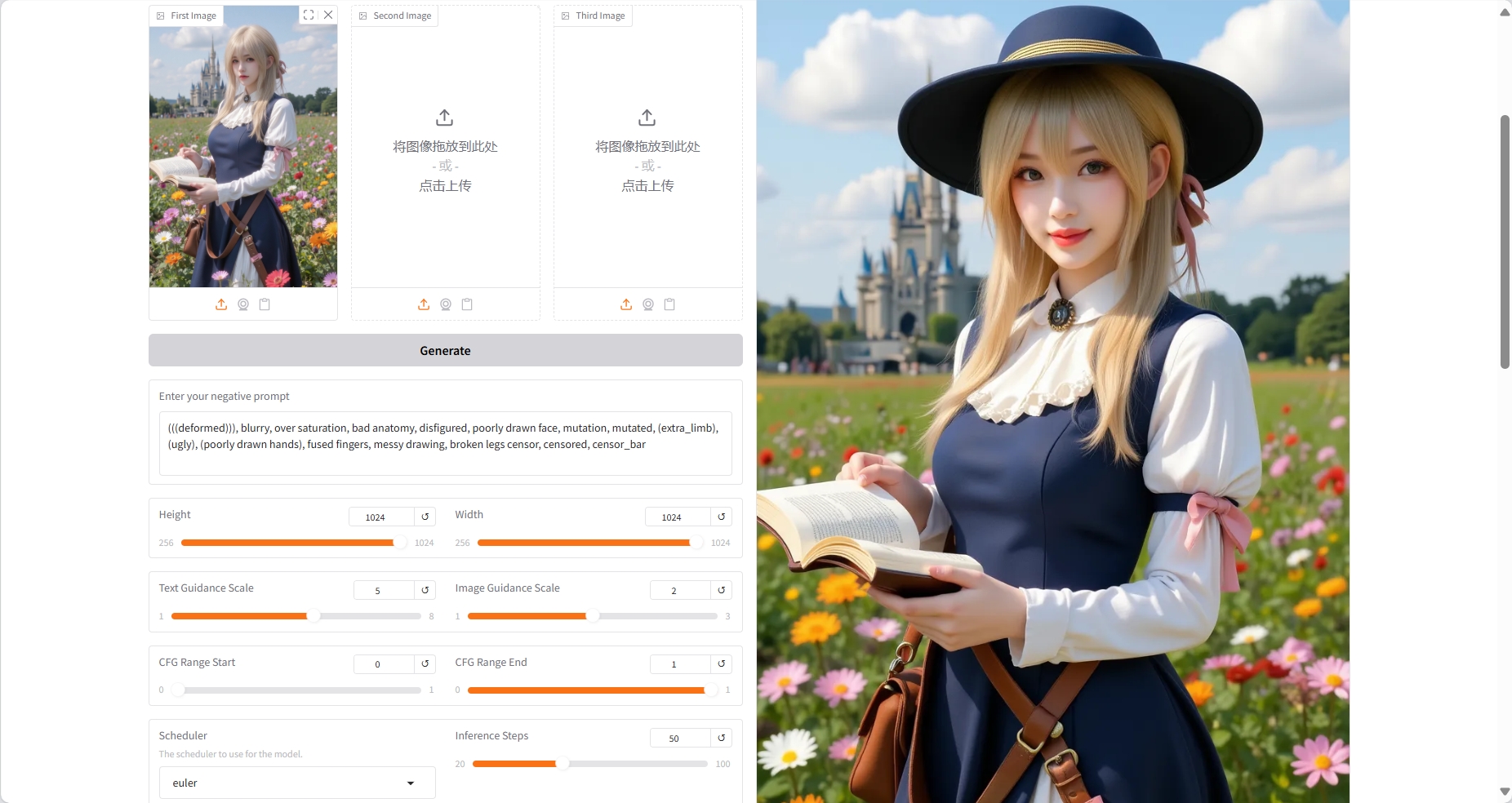

2. Example Results

Some example outputs from OmniGen2:

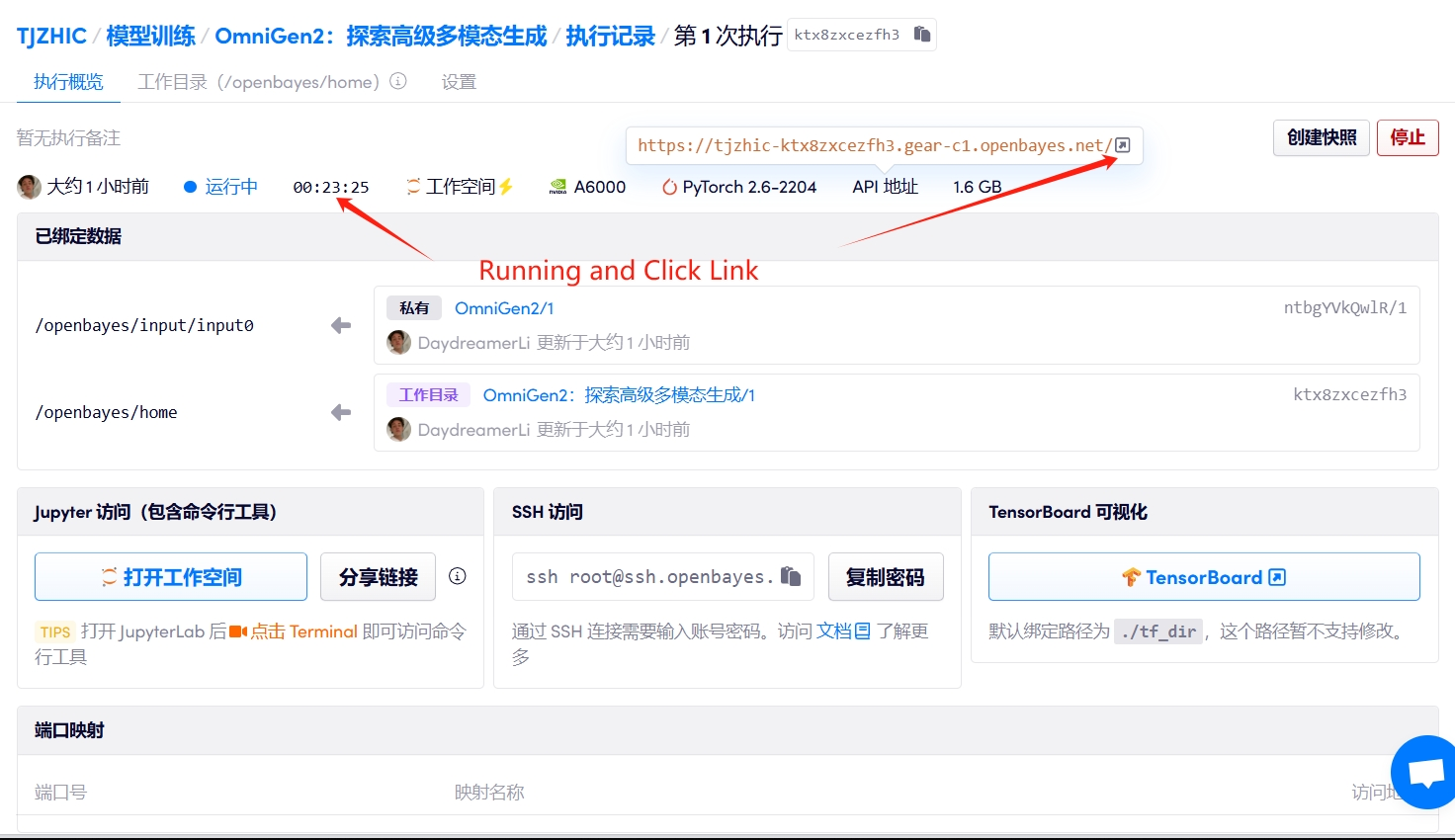

3. Steps

1. Start the container

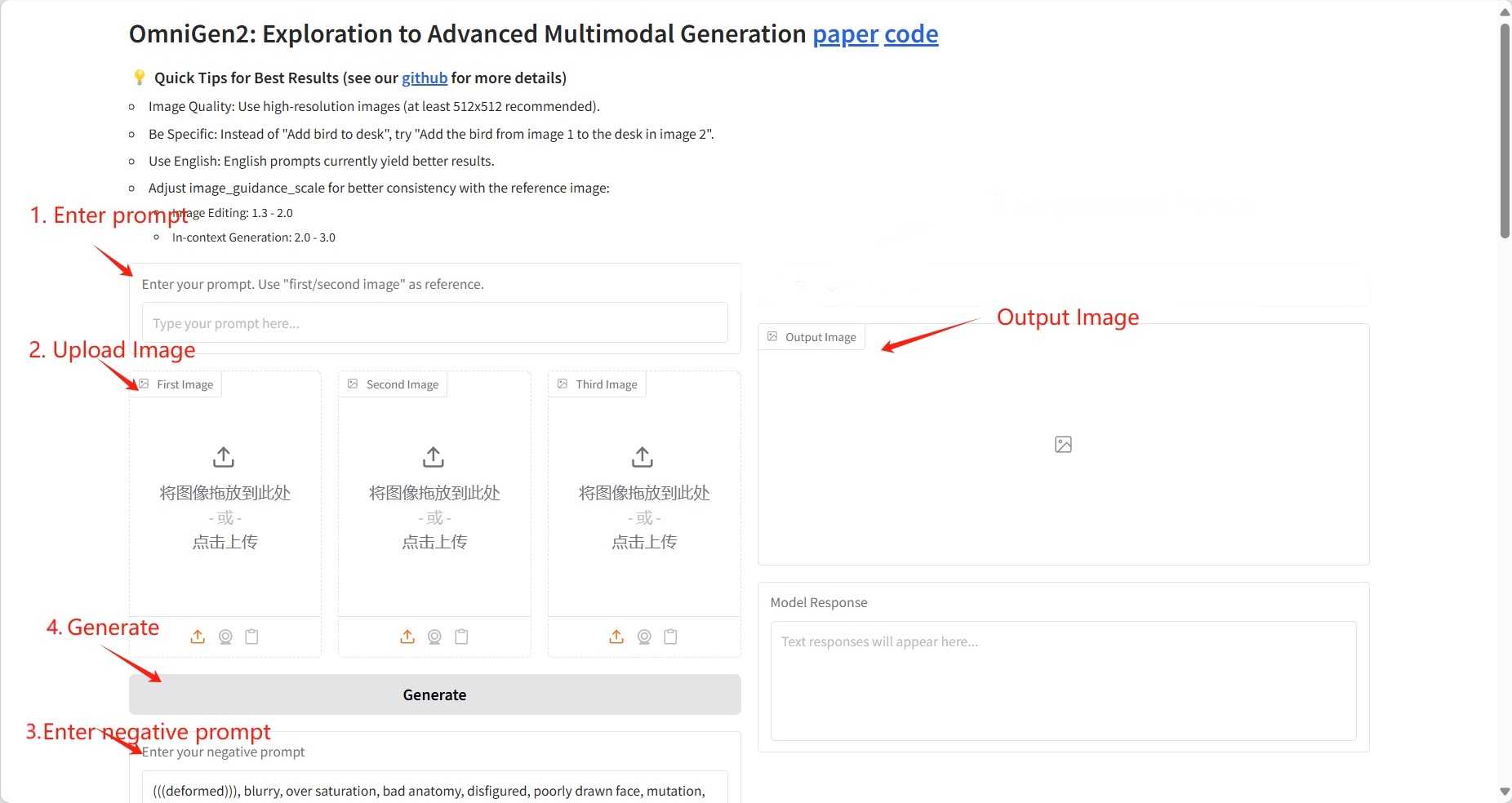

2. Use the demo

If the page shows "Bad Gateway", the model is still initializing. Because the model is large, wait about 2-3 minutes and then refresh the page.
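Instead of refreshing by hand, the wait can be scripted. The following is a minimal sketch that polls the demo page until it answers with HTTP 200 rather than 502 (Bad Gateway). The function names, the polling interval, and the 5-minute limit are illustrative assumptions, and the URL in the usage note is a placeholder for whatever address your container exposes.

```python
import time
import urllib.error
import urllib.request


def http_status(url, timeout=10.0):
    """Return the HTTP status code of a GET request, or 0 if unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # Raised for 4xx/5xx answers, e.g. 502 while the model is loading.
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar.
        return 0


def wait_until_ready(url, fetch=http_status, interval=15.0,
                     max_wait=300.0, sleep=time.sleep):
    """Poll `url` until it returns HTTP 200 or `max_wait` seconds have passed.

    `fetch` and `sleep` are injectable so the loop can be tested offline.
    """
    waited = 0.0
    while True:
        if fetch(url) == 200:
            return True
        if waited >= max_wait:
            return False
        sleep(interval)
        waited += interval
```

For example, `wait_until_ready("http://localhost:7860")` would return `True` once the page loads, where the address is a hypothetical stand-in for the link shown after the container starts.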

The first example is image description, the second and third examples are text-to-image generation, and the remaining examples are image editing.

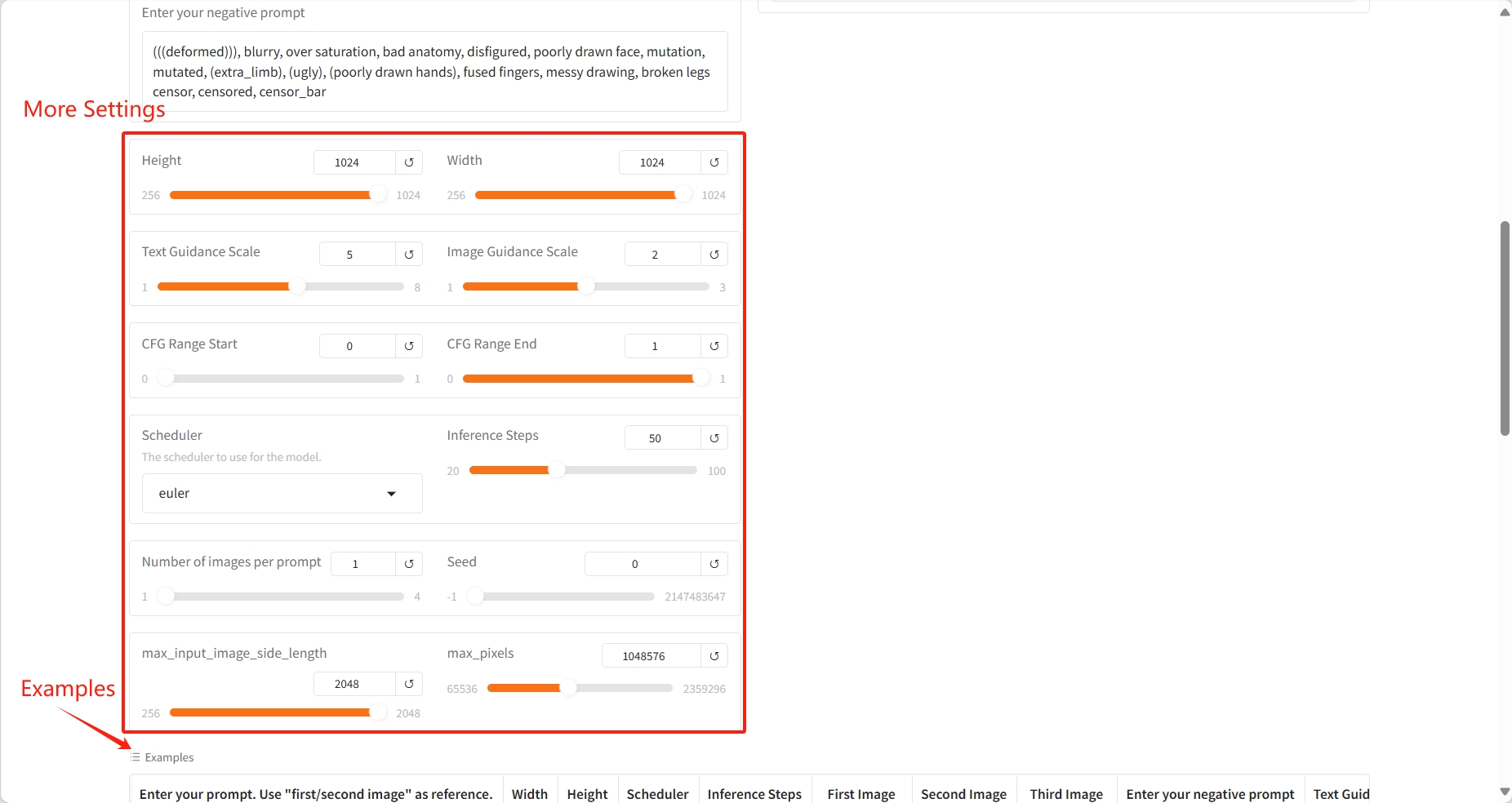

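Parameters:

- Height: Height of the generated image, in pixels.

- Width: Width of the generated image, in pixels.

- Text Guidance Scale: Classifier-free guidance (CFG) strength for the text prompt. Higher values follow the prompt more strictly.

- Image Guidance Scale: Guidance strength for the input image(s). Higher values keep the output closer to the reference image.

- CFG Range Start: Start of the portion of the denoising process in which CFG is applied.

- CFG Range End: End of the portion of the denoising process in which CFG is applied.

- Scheduler: Sampling scheduler used for denoising.

- Inference Steps: Number of denoising steps. More steps take longer to run.

- Number of images per prompt: How many images to generate for each prompt.

- Seed: Random seed. Reusing a seed with the same settings helps reproduce a result.

- max_input_image_side_length: Maximum side length allowed for input images; larger images are resized.

- max_pixels: Maximum total pixel count (width × height) for input images; larger images are scaled down.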
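To make the size limits and the CFG range more concrete, here is a rough sketch of how such parameters are commonly interpreted. It is not OmniGen2's own code: the function names are hypothetical, the default limits are illustrative placeholders, and it assumes that input images are scaled down with their aspect ratio preserved and that the CFG range is expressed as a fraction of the denoising steps between 0 and 1.

```python
import math


def fit_input_image(width, height, max_side=2048, max_pixels=1024 * 1024):
    """Shrink (width, height) to respect both limits, keeping the aspect ratio.

    Assumption: the tighter of the two limits decides the scale factor.
    """
    scale = 1.0
    longest = max(width, height)
    if longest > max_side:
        scale = min(scale, max_side / longest)
    if width * height > max_pixels:
        scale = min(scale, math.sqrt(max_pixels / (width * height)))
    # Round down so the result never exceeds either limit.
    return max(1, int(width * scale)), max(1, int(height * scale))


def guided_steps(num_steps, cfg_start=0.0, cfg_end=1.0):
    """Return the step indices whose progress i / num_steps is in [cfg_start, cfg_end].

    Assumption: guidance is skipped outside this range, which saves the
    extra model evaluations that CFG needs on those steps.
    """
    return [i for i in range(num_steps) if cfg_start <= i / num_steps <= cfg_end]


if __name__ == "__main__":
    print(fit_input_image(4096, 2048))   # limited by max_pixels
    print(guided_steps(10, 0.0, 0.5))    # guidance on the first part only
```

Under these assumptions, lowering CFG Range End shortens the guided portion of sampling, and lowering max_pixels reduces the resolution at which input images are processed.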


4. Discussion

🖌️ If you come across a high-quality project, leave us a message to recommend it! We have also set up a tutorial discussion group. Scan the QR code and add the note [SD Tutorial] to join the group, discuss technical issues, and share your results.

Citation Information

The citation information for this project is as follows:

@article{wu2025omnigen2,
  title={OmniGen2: Exploration to Advanced Multimodal Generation},
  author={Chenyuan Wu and Pengfei Zheng and Ruiran Yan and Shitao Xiao and Xin Luo and Yueze Wang and Wanli Li and Xiyan Jiang and Yexin Liu and Junjie Zhou and Ze Liu and Ziyi Xia and Chaofan Li and Haoge Deng and Jiahao Wang and Kun Luo and Bo Zhang and Defu Lian and Xinlong Wang and Zhongyuan Wang and Tiejun Huang and Zheng Liu},
  journal={arXiv preprint arXiv:2506.18871},
  year={2025}
}