
MAGI-1: The World's First Large-Scale Autoregressive Video Generation Model

Size: 31.53 MB

License: Apache 2.0


1. Tutorial Introduction

MAGI-1, officially released on April 21, 2025, by the Chinese AI company Sand AI, is the world's first large-scale autoregressive video generation model. It generates videos autoregressively by predicting a sequence of video chunks, where each chunk is a fixed-length segment of consecutive frames. MAGI-1 is trained to denoise chunks whose noise level increases monotonically over time, which enables causal temporal modeling and naturally supports streaming generation. It performs strongly on image-to-video tasks conditioned on text instructions, delivering high temporal consistency and scalability thanks to several algorithmic innovations and a dedicated infrastructure stack. The related paper is "MAGI-1: Autoregressive Video Generation at Scale".
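To make the chunk-wise idea more concrete, here is a toy Python sketch; it is not MAGI-1's code. It splits a video's frames into fixed-length chunks and uses a hypothetical staggered, linear schedule in which chunk k starts denoising `stagger` steps after chunk k-1. Under that assumption, earlier chunks are always at least as clean as later ones, so finished chunks can be streamed out while later chunks are still noisy. The function names, the linear schedule, and the `stagger` parameter are illustrative assumptions, not taken from the MAGI-1 codebase.

```python
def split_into_chunks(num_frames, chunk_len):
    """Split frame indices into fixed-length chunks; the last chunk may be shorter."""
    return [range(start, min(start + chunk_len, num_frames))
            for start in range(0, num_frames, chunk_len)]


def chunk_noise_levels(num_chunks, steps_per_chunk, stagger, global_step):
    """Toy noise level per chunk at one global step (1.0 = pure noise, 0.0 = clean).

    Hypothetical linear schedule: chunk k starts denoising at step k * stagger and
    becomes clean steps_per_chunk steps later. Because later chunks start later,
    the returned levels never decrease as the chunk index grows.
    """
    levels = []
    for k in range(num_chunks):
        steps_done = min(max(global_step - k * stagger, 0), steps_per_chunk)
        levels.append(1.0 - steps_done / steps_per_chunk)
    return levels


def stream_order(num_chunks, steps_per_chunk, stagger):
    """Yield (global_step, chunk_index) at the step where each chunk becomes clean.

    Earlier chunks finish first, so they could be emitted (streamed) while
    later chunks are still being denoised.
    """
    emitted = set()
    last_step = (num_chunks - 1) * stagger + steps_per_chunk
    for step in range(last_step + 1):
        levels = chunk_noise_levels(num_chunks, steps_per_chunk, stagger, step)
        for k, level in enumerate(levels):
            if level == 0.0 and k not in emitted:
                emitted.add(k)
                yield step, k
```

For example, `chunk_noise_levels(4, 4, 2, 4)` returns `[0.0, 0.5, 1.0, 1.0]`: the first chunk is already clean, the second is half denoised, and the last two have not started. The real model's schedule and chunk length differ; this only illustrates why a monotonically increasing noise level across chunks allows causal, streaming generation.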

This tutorial runs on a single RTX 4090 GPU, and text prompts must be written in English.

2. Project Examples

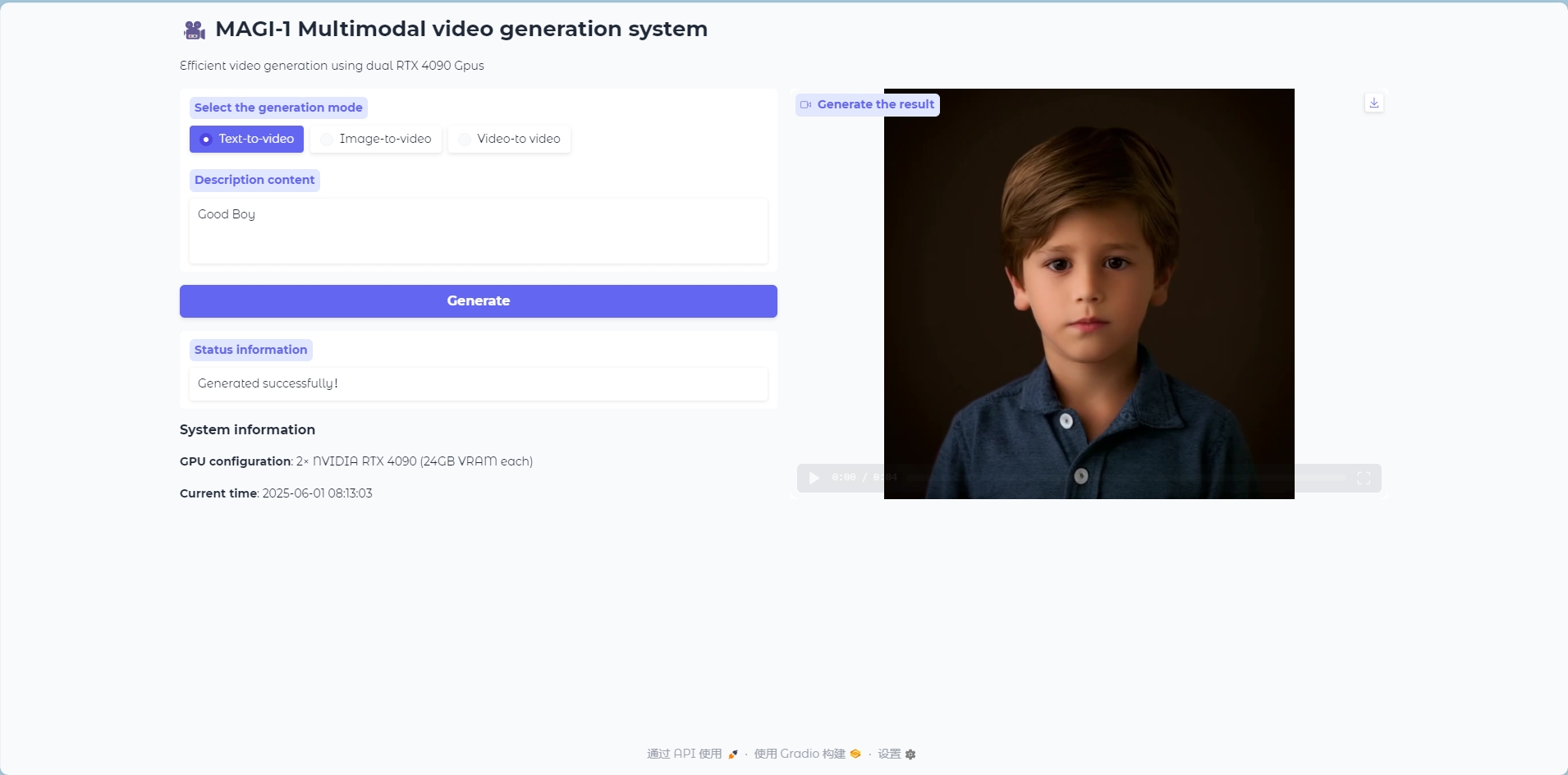

Text to Video Mode

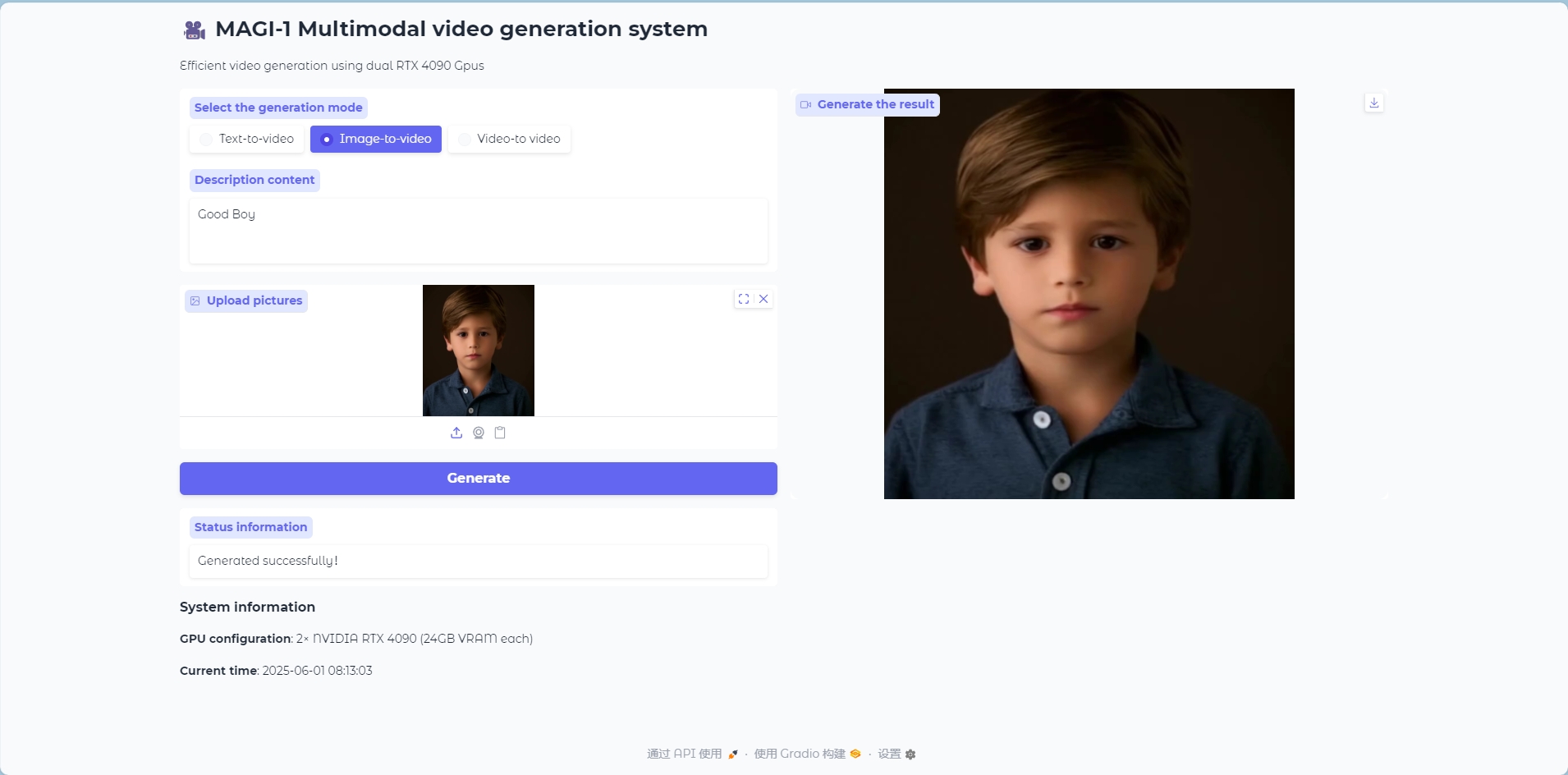

Image to Video Mode

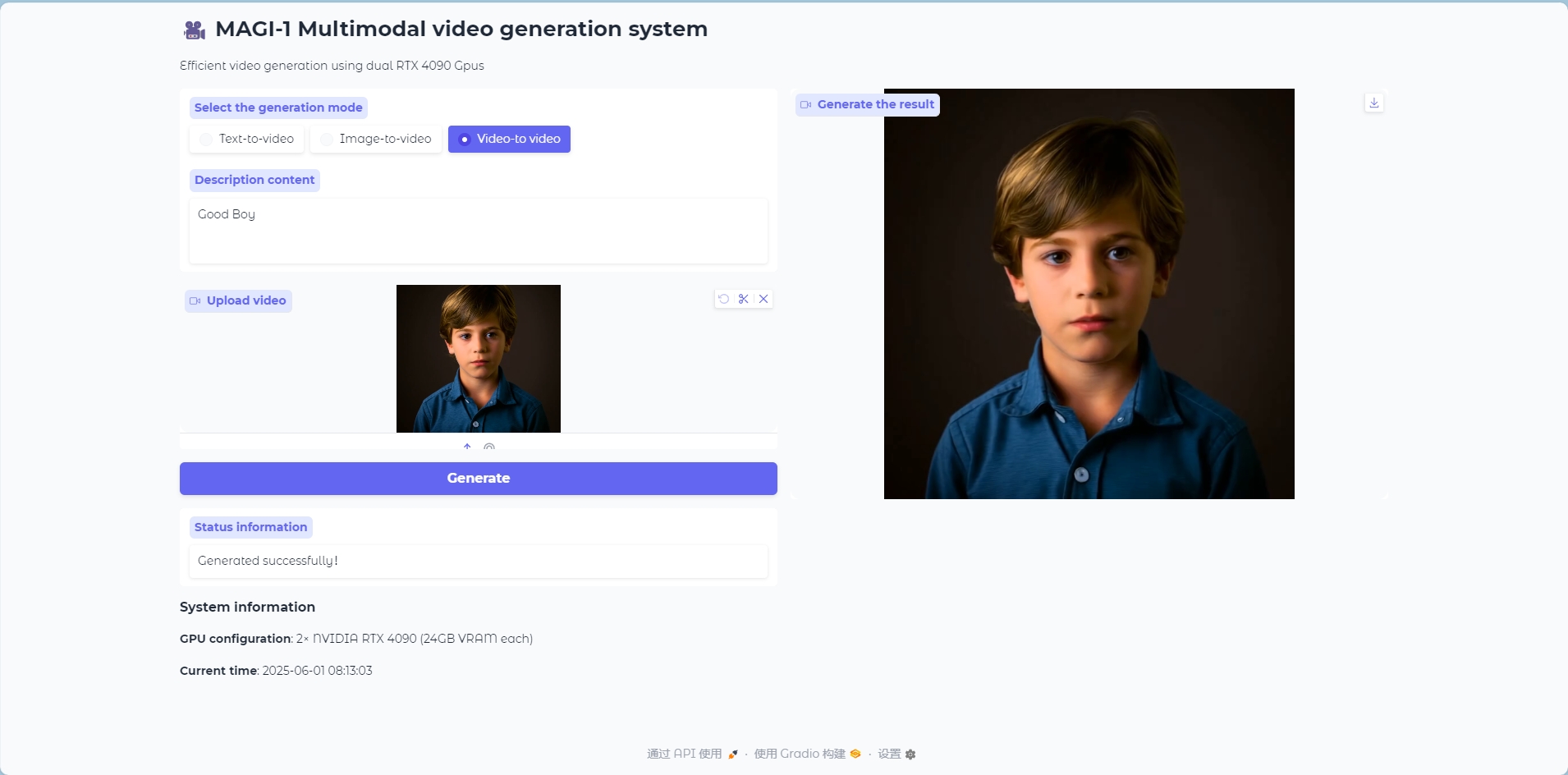

Video to Video Mode

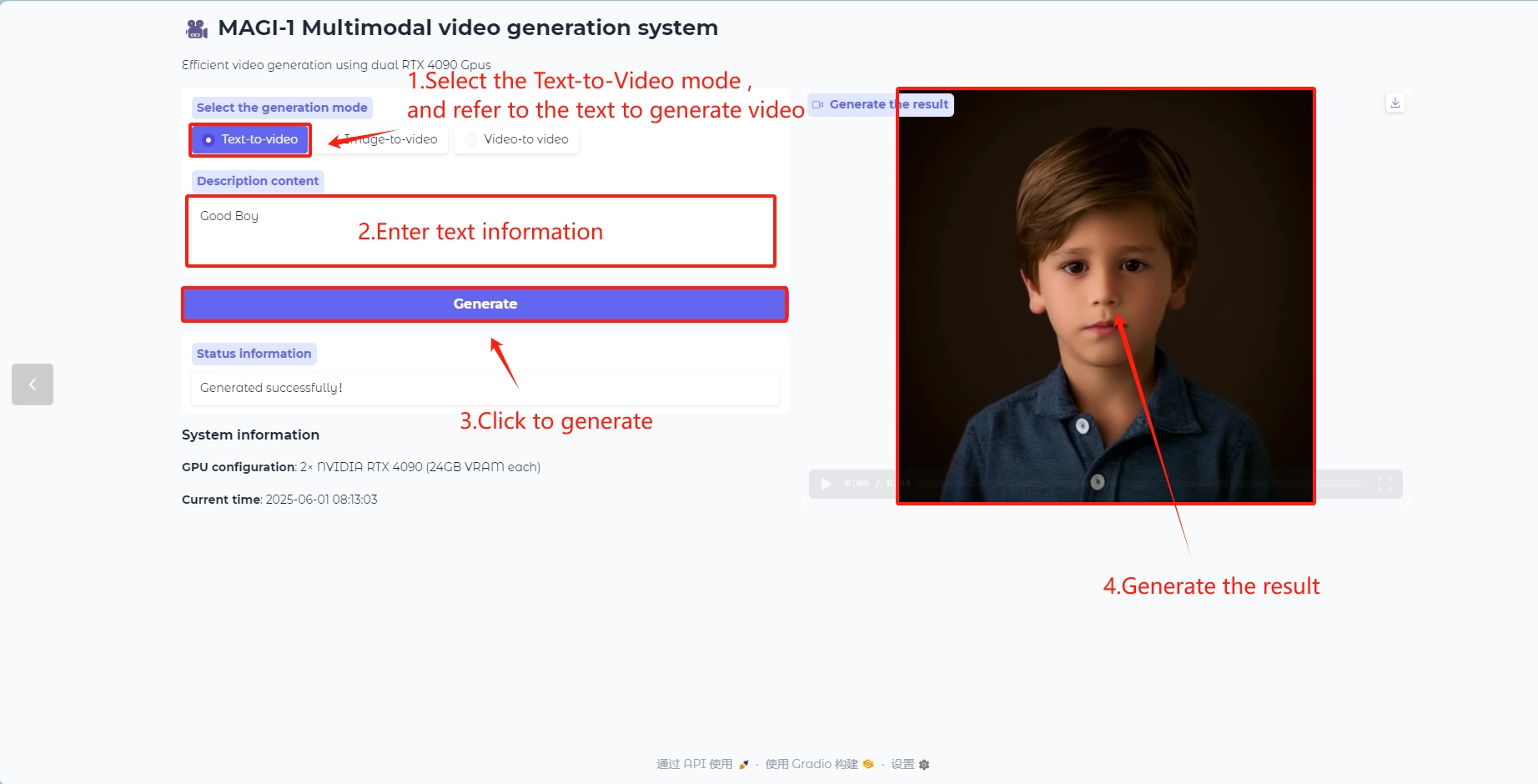

3. Operation Steps

1. After starting the container, click the API address to open the web interface.

2. Once the web page loads, you can start generating videos with the model.

If "Bad Gateway" is displayed, the model is still initializing. Because the model is large, please wait 1 to 2 minutes and then refresh the page. Generating a video takes about five minutes, so please be patient.
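If you would rather not refresh by hand, a small script can poll the address until it stops answering with a gateway error. The sketch below uses only Python's standard library. `wait_until_ready`, its parameters, and the rule of retrying on 502/503/504 are our own illustrative choices, and it assumes the API address is reachable from wherever the script runs without an extra login step.

```python
import time
import urllib.error
import urllib.request


def wait_until_ready(url, timeout_s=300.0, interval_s=10.0, request_timeout_s=10.0):
    """Poll url until it answers without a gateway error.

    Returns True as soon as a request succeeds, or False if the service is
    still unavailable after timeout_s seconds. Hypothetical helper for this tutorial.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # urlopen raises HTTPError for 4xx/5xx, so reaching the body means success.
            with urllib.request.urlopen(url, timeout=request_timeout_s):
                return True
        except urllib.error.HTTPError as err:
            # 502/503/504 suggest the model is still starting; anything else is a real error.
            if err.code not in (502, 503, 504):
                raise
        except OSError:
            # Covers URLError, refused connections, and socket timeouts.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, `wait_until_ready("https://<your-api-address>/", timeout_s=300)` returns True once the page responds normally, or False if it is still failing after five minutes; replace the placeholder with the API address shown for your container.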

How to use

Text to Video Model

Generate video frames with text content

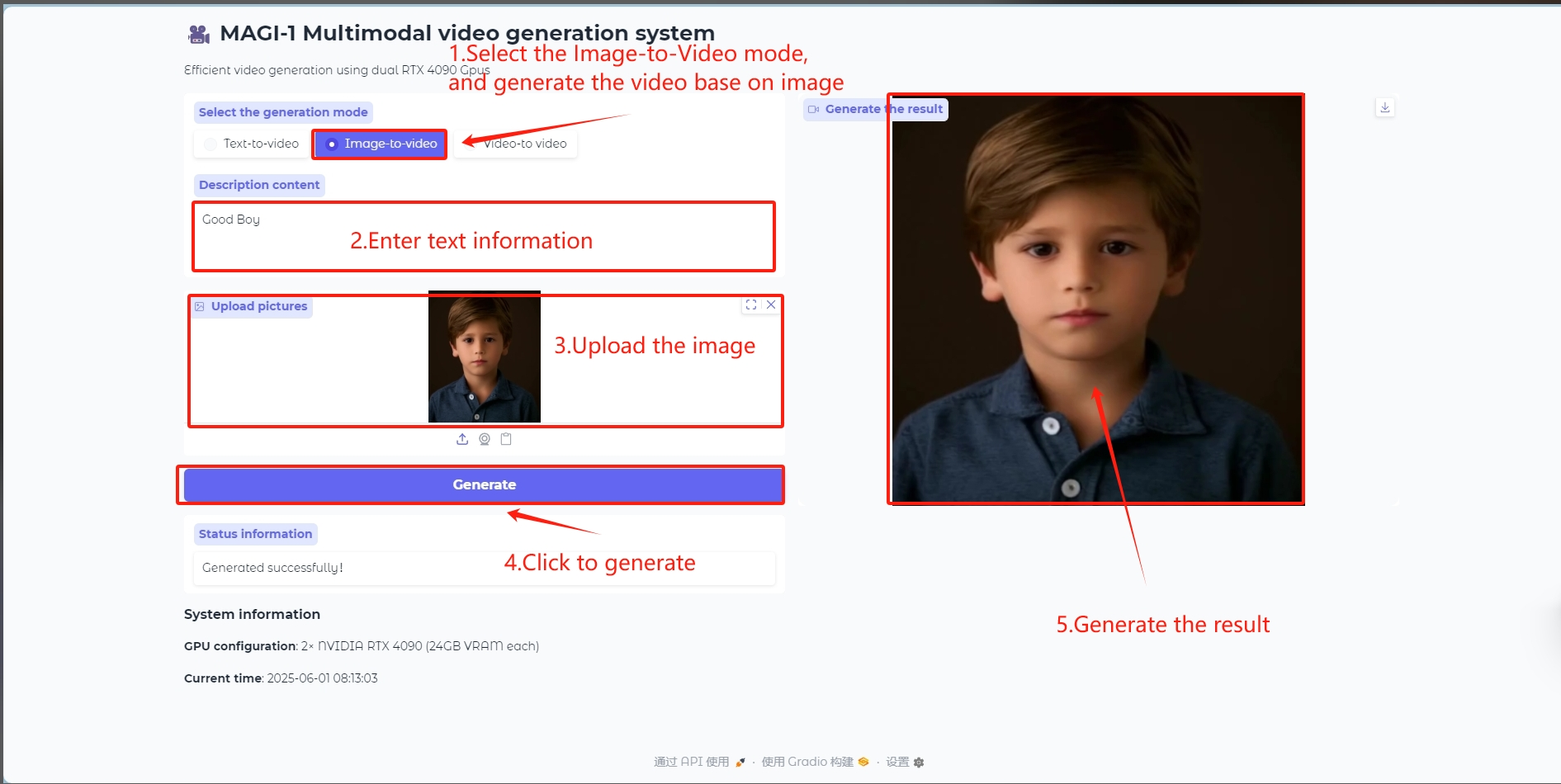

Image to Video Model

Input an image as a reference to generate a video frame

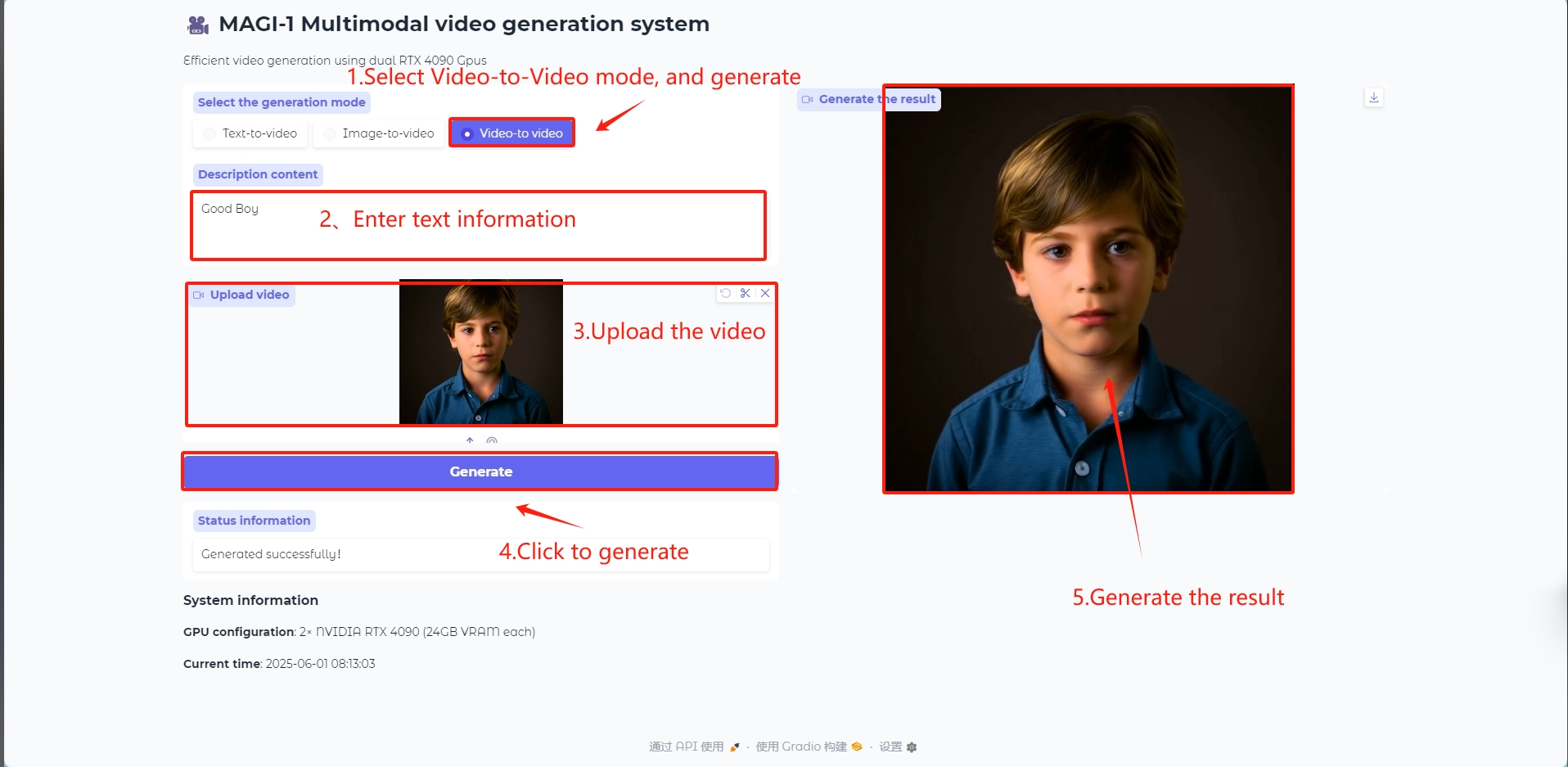

Video to Video Model

Input a video as a reference to generate video frames

To change the parameters of the generated video, such as num_frames, video_size_h, video_size_w, and fps, edit the runtime_config section of the 4.5B_distill_quant_config.json file in /openbayes/home/MAGI-1/example/4.5B.
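If you prefer to change these values from a script rather than in an editor, here is a minimal Python sketch. It assumes runtime_config is a top-level object in the JSON file that already contains the keys you want to change; `update_runtime_config` is a hypothetical helper, not part of MAGI-1.

```python
import json
from pathlib import Path


def update_runtime_config(config_path, **overrides):
    """Overwrite selected keys inside the "runtime_config" section of a JSON config.

    Assumes runtime_config is a top-level object that already holds each key;
    unknown keys raise KeyError so that typos fail loudly. Returns the updated section.
    """
    config_path = Path(config_path)
    config = json.loads(config_path.read_text())
    runtime = config["runtime_config"]
    for key, value in overrides.items():
        if key not in runtime:
            raise KeyError(f"{key!r} is not in runtime_config")
        runtime[key] = value
    # Rewriting with json.dumps normalizes the file's formatting.
    config_path.write_text(json.dumps(config, indent=4) + "\n")
    return runtime
```

A call might look like `update_runtime_config("/openbayes/home/MAGI-1/example/4.5B/4.5B_distill_quant_config.json", num_frames=48, fps=24)`; the values here are examples, not recommended settings. Rejecting unknown keys means a misspelling such as `video_size` is reported instead of silently ignored, and since the file is rewritten, keep a backup if its original layout matters.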

4. Discussion

🖌️ If you come across a high-quality project, please leave us a message to recommend it! We have also set up a tutorial exchange group: scan the QR code and add the note [SD Tutorial] to join, discuss technical issues, and share your results.

5. Citation Information

Thanks to GitHub user kjasdk for deploying this tutorial. The project's citation information is as follows:

@misc{ai2025magi1autoregressivevideogeneration,
  title={MAGI-1: Autoregressive Video Generation at Scale},
  author={Sand. ai and Hansi Teng and Hongyu Jia and Lei Sun and Lingzhi Li and Maolin Li and Mingqiu Tang and Shuai Han and Tianning Zhang and W. Q. Zhang and Weifeng Luo and Xiaoyang Kang and Yuchen Sun and Yue Cao and Yunpeng Huang and Yutong Lin and Yuxin Fang and Zewei Tao and Zheng Zhang and Zhongshu Wang and Zixun Liu and Dai Shi and Guoli Su and Hanwen Sun and Hong Pan and Jie Wang and Jiexin Sheng and Min Cui and Min Hu and Ming Yan and Shucheng Yin and Siran Zhang and Tingting Liu and Xianping Yin and Xiaoyu Yang and Xin Song and Xuan Hu and Yankai Zhang and Yuqiao Li},
  year={2025},
  eprint={2505.13211},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2505.13211},
}