vLLM + Open WebUI Deployment of QwenLong-L1-32B

Size: 96.23 MB

License: Apache 2.0

1. Tutorial Introduction

QwenLong-L1-32B is a long-context reasoning model released on May 26, 2025, by Tongyi Lab, Alibaba Group. It is the first long-context reasoning model trained with reinforcement learning (RL), and it targets the weak recall and logical inconsistencies that conventional large models show when handling extremely long contexts (e.g., 120,000 tokens). By extending the usable context beyond what conventional models handle well, it offers a low-cost, high-performance option for precision-critical domains such as finance and law. The related paper is "QwenLong-L1: Towards Long-Context Large Reasoning Models with Reinforcement Learning".

This tutorial runs on two RTX A6000 GPUs.
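
As a rough sanity check on why two cards are used, the memory needed for the weights alone can be estimated from the parameter count. The sketch below assumes 16-bit weights (2 bytes per parameter), a nominal 32 billion parameters taken from the "32B" in the model name, and 48 GB of memory per RTX A6000. The weight precision is an assumption, not something this tutorial states, and the estimate ignores the KV cache and activations.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 32e9      # nominal size implied by "32B" (assumption)
GPU_MEMORY_GB = 48     # memory of one RTX A6000
NUM_GPUS = 2           # the dual-card setup used in this tutorial

weights_gb = weight_memory_gb(NUM_PARAMS)    # assumes 16-bit weights
total_gb = GPU_MEMORY_GB * NUM_GPUS
headroom_gb = total_gb - weights_gb          # left for KV cache, activations, etc.

print(f"weights: about {weights_gb:.0f} GB of {total_gb} GB total GPU memory")
print(f"fits on a single card: {weights_gb <= GPU_MEMORY_GB}")
print(f"headroom across both cards: about {headroom_gb:.0f} GB")
```

Under these assumptions the weights need about 64 GB, which exceeds a single 48 GB card and is consistent with splitting the model across two GPUs. The remaining memory matters here because long contexts make the KV cache large.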

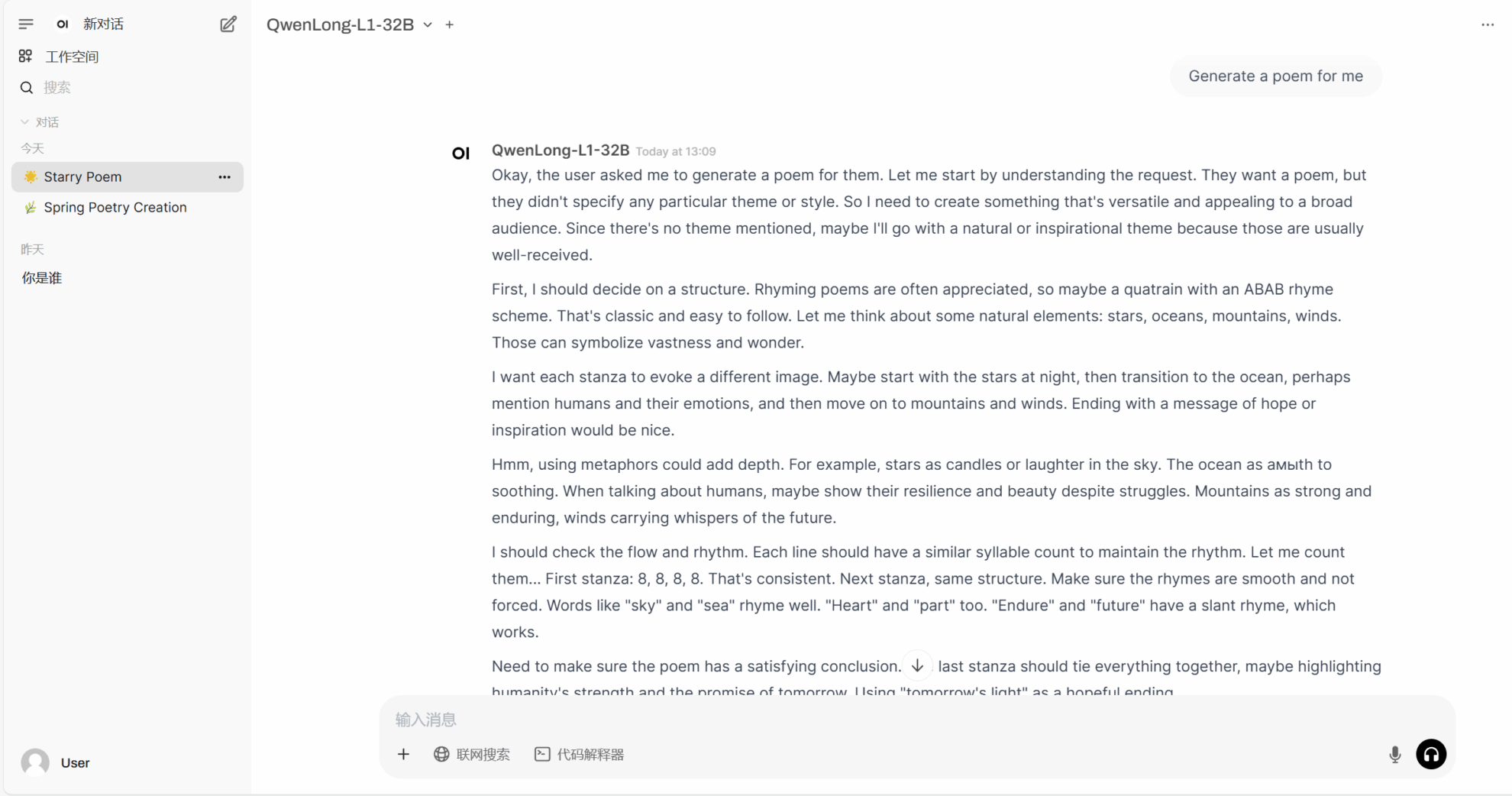

2. Project Examples

3. Operation Steps

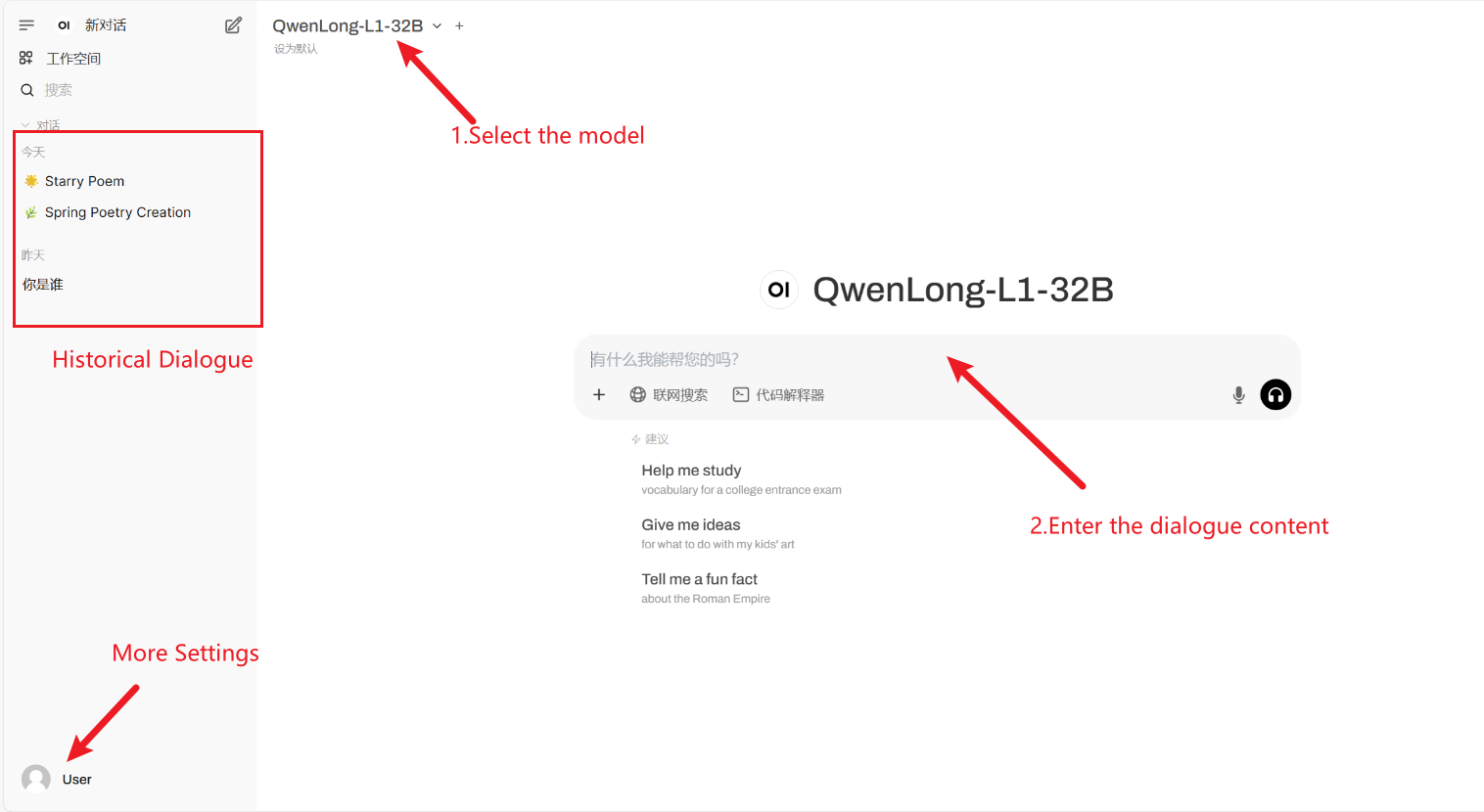

1. After starting the container, click the API address to open the web interface

If "Model" is not displayed, the model is still initializing. Because the model is large, wait about 2-3 minutes and then refresh the page.
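
Instead of refreshing by hand, the wait can be scripted. The sketch below is a minimal example that assumes the vLLM backend's OpenAI-compatible API is reachable from where the script runs, which this tutorial does not state; the base URL in the usage comment is hypothetical, and `list_model_ids` and `wait_for_model` are illustrative helper names, not part of vLLM or Open WebUI. It polls the `/v1/models` endpoint until at least one model is listed.

```python
import json
import time
import urllib.request
from typing import Callable, List


def list_model_ids(base_url: str) -> List[str]:
    """Return the model IDs reported by an OpenAI-compatible /v1/models endpoint."""
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=10) as resp:
        payload = json.load(resp)
    return [entry["id"] for entry in payload.get("data", [])]


def wait_for_model(fetch_ids: Callable[[], List[str]],
                   timeout_s: float = 300.0,
                   interval_s: float = 10.0,
                   sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Poll until at least one model is listed, or raise TimeoutError."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            ids = fetch_ids()
        except OSError:
            ids = []  # connection refused or HTTP error: server not ready yet
        if ids:
            return ids
        if time.monotonic() >= deadline:
            raise TimeoutError("no model listed before the timeout")
        sleep(interval_s)


# Usage (hypothetical address; replace it with the one your container exposes):
#   ids = wait_for_model(lambda: list_model_ids("http://localhost:8000"))
#   print("ready:", ids)
```

The fetch function is passed in as a parameter so the polling loop can be exercised without a running server.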

2. Once the page has loaded, you can start a conversation with the model
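
The same kind of conversation can in principle be sent programmatically. This tutorial only documents the web interface, so the following is a sketch under assumptions: it presumes the vLLM server's OpenAI-compatible `/v1/chat/completions` endpoint is reachable, and the base URL, model ID, and the helper names `build_chat_request` and `ask` are hypothetical. It places a long document and a question about it into a single user message.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str,
                       context: str, question: str,
                       max_tokens: int = 1024) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    body = {
        "model": model,
        # The long document and the question go into one user message.
        "messages": [{"role": "user", "content": f"{context}\n\n{question}"}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(request: urllib.request.Request, timeout_s: float = 600.0) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request, timeout=timeout_s) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


# Usage (hypothetical address and model ID; use the values from your own deployment):
#   req = build_chat_request("http://localhost:8000", "your-model-id",
#                            context=open("contract.txt").read(),
#                            question="Which clauses cover early termination?")
#   print(ask(req))
```

The timeout is generous because reasoning over a very long context can take a while before the reply is returned.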


4. Discussion

🖌️ If you come across a high-quality project, please leave us a message to recommend it! We have also set up a tutorial discussion group. Scan the QR code and add the note [SD Tutorial] to join the group, discuss technical issues, and share your results.

Citation Information

Thanks to GitHub user xxxjjjyyy1 for deploying this tutorial. The citation for this project is as follows:

@article{wan2025qwenlongl1,
  title={QwenLong-L1: Towards Long-Context Large Reasoning Models with Reinforcement Learning},
  author={Fanqi Wan, Weizhou Shen, Shengyi Liao, Yingcheng Shi, Chenliang Li, Ziyi Yang, Ji Zhang, Fei Huang, Jingren Zhou, Ming Yan},
  journal={arXiv preprint arXiv:2505.17667},
  year={2025}
}