VGGT: A General 3D Vision Model

1. Tutorial Introduction

VGGT is a feedforward neural network released on March 28, 2025, by Meta AI and the Visual Geometry Group (VGG) at the University of Oxford. From one, a few, or hundreds of views, it directly infers all key 3D attributes of a scene within seconds. These attributes include extrinsic and intrinsic camera parameters, point maps, depth maps, and 3D point tracks. The model is also simple and efficient. It completes reconstruction in under one second and still outperforms alternative methods that require post-processing with visual geometry optimization techniques. The related paper, VGGT: Visual Geometry Grounded Transformer, was accepted to CVPR 2025 and won the CVPR 2025 Best Paper Award.
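The depth maps and camera parameters listed above are geometrically related: given a pixel's depth, the intrinsics, and the extrinsics, the pixel can be lifted to a 3D world point, and the camera's position follows from the extrinsics alone. The NumPy sketch below illustrates this standard pinhole unprojection. It is not VGGT's own code. It assumes OpenCV-style pinhole intrinsics `K` and world-to-camera extrinsics with `x_cam = R @ x_world + t`, and the function names `unproject_depth` and `camera_center` are hypothetical.

```python
import numpy as np


def unproject_depth(depth, K, R, t):
    """Lift an (H, W) depth map to world-space points of shape (H, W, 3).

    Assumed conventions (hypothetical, not taken from VGGT's code):
    pinhole intrinsics K (3x3) and world-to-camera extrinsics,
    i.e. x_cam = R @ x_world + t.
    """
    H, W = depth.shape
    # Pixel grid: u indexes columns, v indexes rows.
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pixels = np.stack([u, v, np.ones_like(u)], axis=-1).astype(np.float64)
    # Back-project: x_cam = depth * K^{-1} [u, v, 1]^T (applied row-wise here).
    rays = pixels @ np.linalg.inv(K).T
    cam_points = rays * depth[..., None]
    # Camera to world: x_world = R^T (x_cam - t); for row vectors this is (x_cam - t) @ R.
    return (cam_points - t) @ R


def camera_center(R, t):
    """Camera position in world coordinates, C = -R^T t, under the same convention."""
    return -R.T @ t
```

Applying `unproject_depth` to every frame and concatenating the results gives a point cloud built from depth and cameras, while `camera_center` gives the kind of camera position a viewer can draw. VGGT can also predict point maps directly, without this step.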

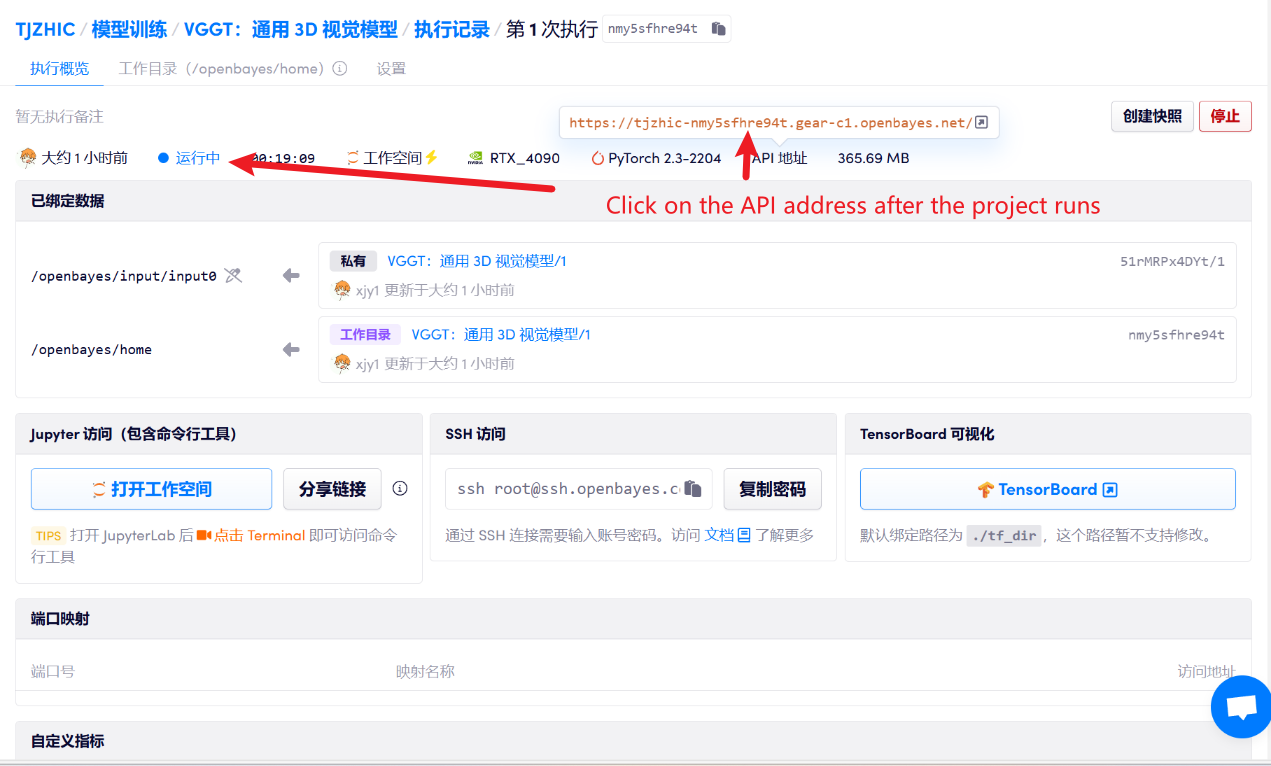

This tutorial runs on a single RTX 4090 GPU.

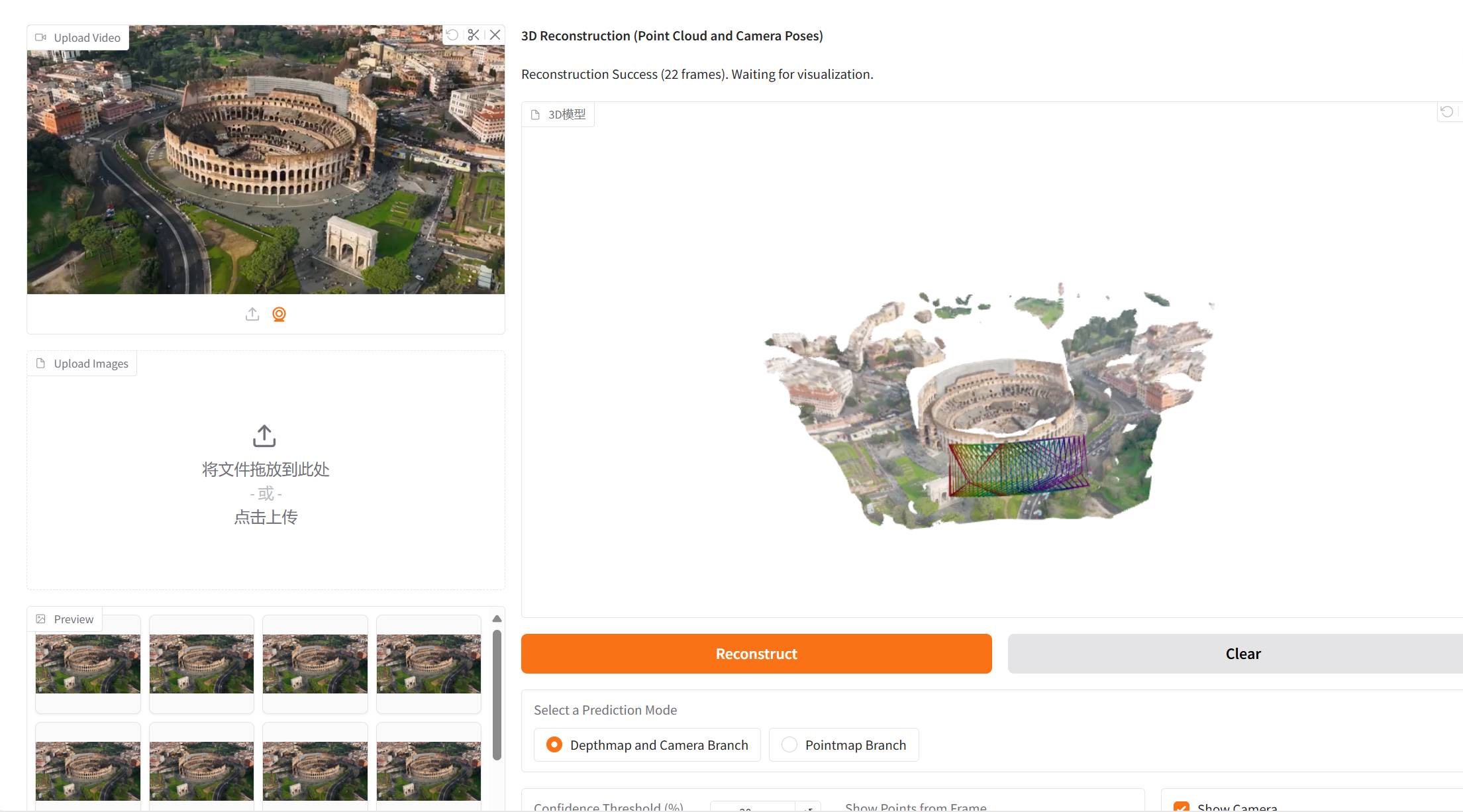

2. Project Examples

3. Operation Steps

1. After starting the container, click the API address to open the web interface.

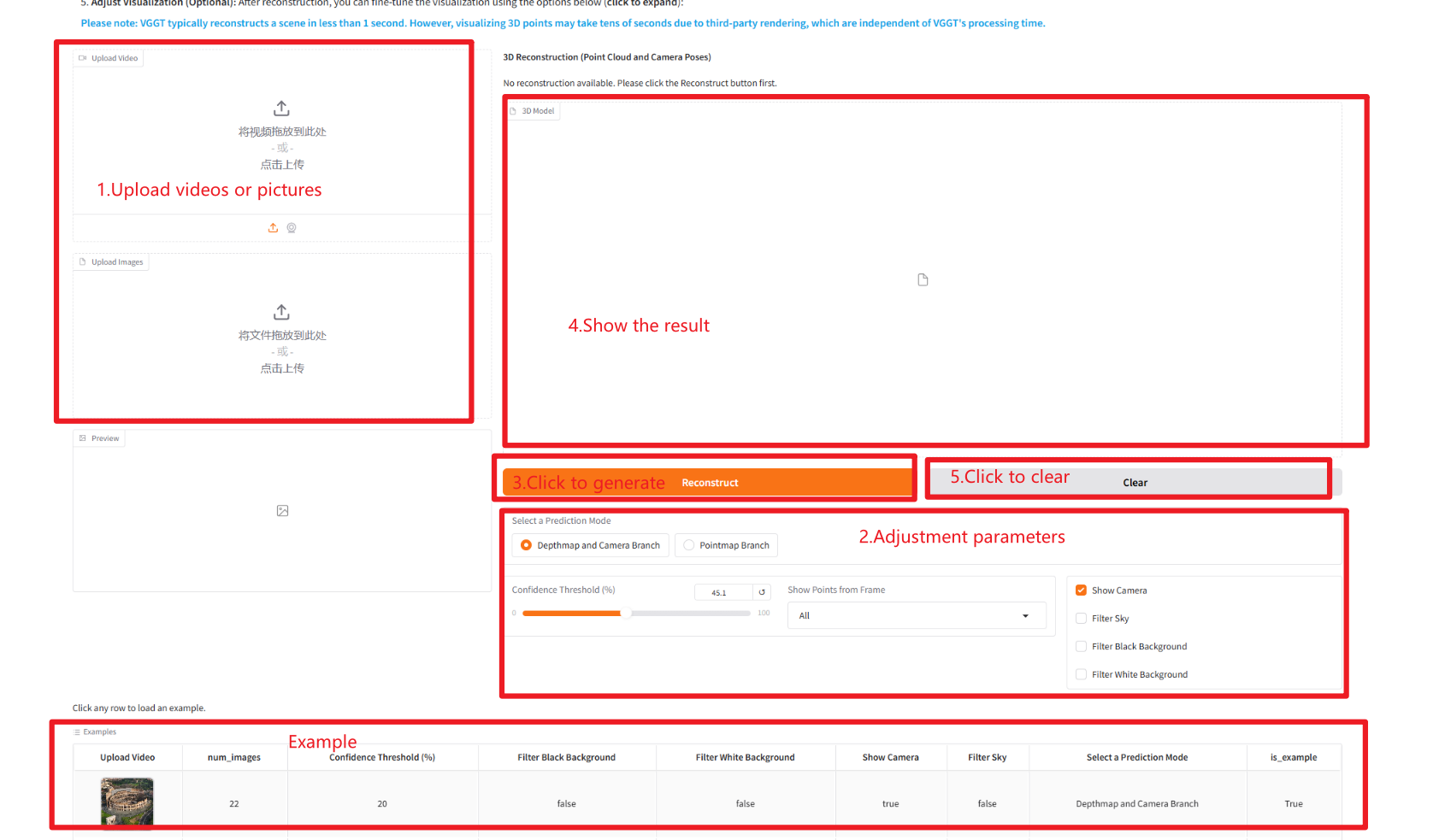

2. Once the web page opens, you can start using the model.

If the page shows "Bad Gateway", the model is still initializing. Because the model is large, wait about 2 to 3 minutes and then refresh the page.
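If you prefer to script the wait instead of refreshing by hand, a small polling loop is enough. The Python sketch below is a hedged illustration and not part of the tutorial's tooling. It assumes the API address is reachable from where the script runs and that HTTP 502 (Bad Gateway) is the only "still initializing" signal. The helper `wait_until_ready` and its parameters are hypothetical.

```python
import time
import urllib.error
import urllib.request


def wait_until_ready(url, timeout_s=300.0, interval_s=10.0, probe=None):
    """Poll `url` until it stops returning 502 Bad Gateway, or time out.

    Hypothetical helper, not part of the tutorial or VGGT. `probe` is an
    optional callable returning an HTTP status code, so the loop can be
    exercised without a network.
    """
    def default_probe(u):
        try:
            with urllib.request.urlopen(u, timeout=10) as resp:
                return resp.status
        except urllib.error.HTTPError as err:
            # 4xx/5xx responses arrive as HTTPError; keep only the status code.
            return err.code

    check = probe or default_probe
    deadline = time.monotonic() + timeout_s
    while True:
        status = check(url)
        if status != 502:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{url} still returned 502 after {timeout_s} s")
        time.sleep(interval_s)
```

Calling `wait_until_ready("<your API address>")` returns the first non-502 status code or raises `TimeoutError` after five minutes. Other connection errors, such as a refused connection, are not caught and will propagate.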

How to use

Parameter Description:

- Select a Prediction Mode:

  - Depthmap and Camera Branch: Reconstructs the scene from the predicted depth maps and camera poses.

  - Pointmap Branch: Reconstructs the scene directly from the predicted point maps.

- Confidence Threshold: Points whose predicted confidence falls below this threshold are filtered out, so only the more reliable predictions are shown.

- Show Points from Frame: Choose whether to display all points or only the points extracted from the selected frame.

- Show Camera: Whether to display the estimated camera positions.

- Filter Sky: Whether to filter out points that belong to the sky.

- Filter Black Background: Whether to filter out points on a black background.

- Filter White Background: Whether to filter out points on a white background.
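The confidence and background filters described above can be approximated with simple boolean masks over the point cloud. This NumPy sketch assumes the threshold is a percentage of the lowest-confidence points to drop and that black and white backgrounds are detected with fixed RGB cutoffs. The function name `filter_points` and the cutoff values (16 and 240) are hypothetical choices for illustration, not the demo's documented behavior. Sky filtering typically relies on a separate segmentation model and is left out here.

```python
import numpy as np


def filter_points(points, colors, conf, conf_percent=50.0,
                  filter_black_bg=False, filter_white_bg=False,
                  black_sum_cutoff=16, white_min=240):
    """Return the points and colors that survive confidence/background filtering.

    points: (N, 3) float array; colors: (N, 3) uint8 RGB; conf: (N,) confidences.
    conf_percent: percentage of lowest-confidence points to drop (assumed semantics).
    black_sum_cutoff / white_min: hypothetical RGB cutoffs for background detection.
    """
    # Keep points at or above the chosen confidence percentile.
    threshold = np.percentile(conf, conf_percent)
    keep = conf >= threshold
    rgb = colors.astype(np.int32)  # widen to avoid uint8 overflow when summing
    if filter_black_bg:
        # Drop near-black points: R + G + B below the cutoff.
        keep &= rgb.sum(axis=1) >= black_sum_cutoff
    if filter_white_bg:
        # Drop near-white points: every channel above the cutoff.
        keep &= ~np.all(rgb > white_min, axis=1)
    return points[keep], colors[keep]
```

Combining the filters as a single mask keeps each option independent, which matches how the interface exposes them as separate switches.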

4. Discussion

🖌️ If you come across a high-quality project, leave us a message to recommend it! We have also set up a tutorial exchange group. Scan the QR code and add the note [SD Tutorial] to join the group, discuss technical issues, and share your results.

Citation Information

The citation information for this project is as follows:

@inproceedings{wang2025vggt,
  title={VGGT: Visual Geometry Grounded Transformer},
  author={Wang, Jianyuan and Chen, Minghao and Karaev, Nikita and Vedaldi, Andrea and Rupprecht, Christian and Novotny, David},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2025}
}
