
Deploy Qwen3-30B-A3B Using vLLM + Open WebUI

1. Tutorial Introduction

Alibaba's Qwen team released the Qwen3 project in 2025. The accompanying technical report is "Qwen3: Think Deeper, Act Faster".

Qwen3 is the latest generation of large language models in the Qwen series, offering a comprehensive suite of dense and mixture-of-experts (MoE) models. Building on extensive training experience, Qwen3 achieves breakthroughs in reasoning, instruction following, agent capabilities, and multilingual support. Its key features are:

- Dense and MoE models in a full range of sizes: dense 0.6B, 1.7B, 4B, 8B, 14B, and 32B, plus MoE 30B-A3B and 235B-A22B.

- Seamless switching between thinking mode (for complex logical reasoning, mathematics, and coding) and non-thinking mode (for efficient general-purpose conversation), ensuring optimal performance across scenarios.

- Significantly enhanced reasoning, surpassing the earlier QwQ (in thinking mode) and Qwen2.5 instruct models (in non-thinking mode) in mathematics, code generation, and commonsense logical reasoning.

- Superior alignment with human preferences: excels at creative writing, role-playing, multi-turn dialogue, and instruction following, for a more natural, engaging, and immersive conversational experience.

- Strong agent capabilities: integrates precisely with external tools in both thinking and non-thinking modes, and leads open-source models on complex agent-based tasks.

- Support for more than 100 languages and dialects, with strong multilingual understanding, reasoning, instruction following, and generation.
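In thinking mode, Qwen3 emits its reasoning between <think> and </think> tags before the final answer. When those tags reach the client inside the message text, a small helper can separate the two. This is a sketch under that assumption: if the server is started with a reasoning parser, the reasoning may instead arrive in a separate response field, and this helper then returns an empty reasoning string. The name split_thinking is hypothetical.

```python
import re

# Assumes the <think>...</think> block appears verbatim in the returned text.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw Qwen3 completion string."""
    match = _THINK_RE.search(text)
    if match is None:
        # No reasoning block (e.g. non-thinking mode): the whole text is the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = text[match.end():].strip()
    return reasoning, answer


reasoning, answer = split_thinking("<think>\n2 + 2 is 4.\n</think>\n\nThe answer is 4.")
print(repr(reasoning))  # '2 + 2 is 4.'
print(repr(answer))     # 'The answer is 4.'
```

An empty block such as "<think>\n\n</think>" also yields an empty reasoning string, so the same helper works whichever mode produced the reply.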

This tutorial runs on two NVIDIA RTX A6000 GPUs.
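A rough, weights-only estimate suggests why a single card is not enough. The sketch assumes about 30.5B total parameters stored in BF16 (2 bytes each) and 48 GB per RTX A6000. It ignores the KV cache, activations, and framework overhead, which all need additional memory. Although only about 3.3B parameters are active per token, every expert must stay resident in GPU memory.

```python
# Rough, weights-only estimate; ignores KV cache, activations and runtime overhead.
TOTAL_PARAMS = 30.5e9   # Qwen3-30B-A3B total parameters (all experts)
BYTES_PER_PARAM = 2     # assumption: BF16/FP16 weights, no quantization
GPU_MEMORY_GB = 48      # one NVIDIA RTX A6000
NUM_GPUS = 2


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


need = weights_gb(TOTAL_PARAMS, BYTES_PER_PARAM)
print(f"weights: {need:.1f} GB")                                      # weights: 61.0 GB
print(f"fits on 1 GPU: {need < GPU_MEMORY_GB}")                       # fits on 1 GPU: False
print(f"fits on {NUM_GPUS} GPUs: {need < NUM_GPUS * GPU_MEMORY_GB}")  # fits on 2 GPUs: True
```

Splitting the weights across both cards, for example with vLLM's tensor parallelism, leaves the remaining memory for the KV cache. How this particular container configures the split is an assumption, not something the tutorial states.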

👉 This project provides the following model:

- Qwen3-30B-A3B

2. Operation steps

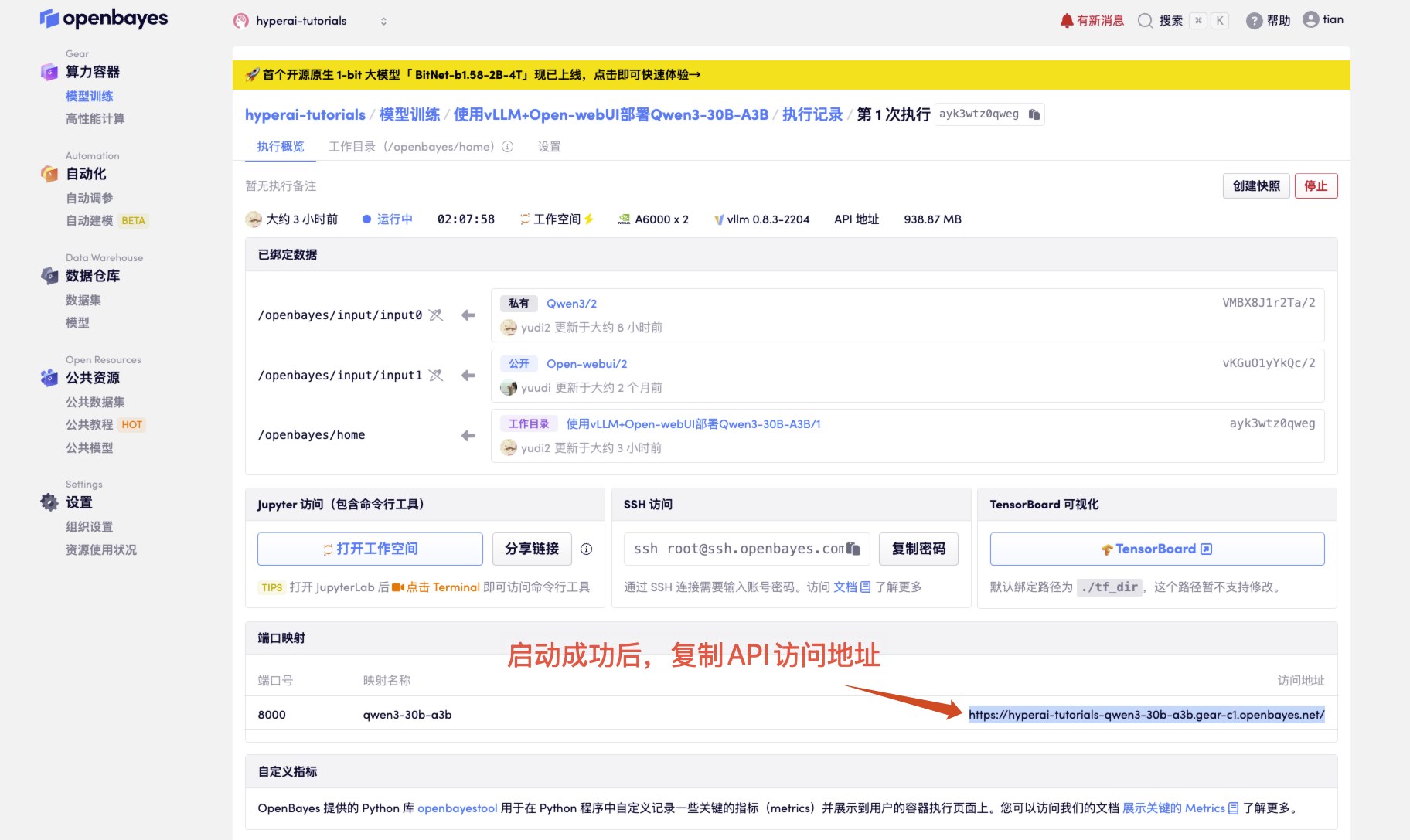

1. After the container starts, click the API address to open the web interface.

If "Model" is not displayed, the model is still initializing. Because the model is large, wait about 1-2 minutes and then refresh the page.

2. Once the web page is open, you can start chatting with the model.
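The wait-and-refresh step can also be scripted. This sketch polls the model list until the model is served. It assumes that BASE_URL (see section 3) points at vLLM's OpenAI-compatible server, which lists served models at GET /v1/models. The helper names fetch_json and wait_for_model, the timeout, and the polling interval are illustrative choices, not part of this tutorial.

```python
import json
import time
import urllib.request
from typing import Callable


def fetch_json(url: str, api_key: str = "EMPTY") -> dict:
    """GET a URL with a bearer token and decode the JSON response body."""
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def wait_for_model(
    base_url: str,
    model_name: str,
    timeout_s: float = 180.0,
    interval_s: float = 10.0,
    fetch: Callable[[str], dict] = fetch_json,
) -> bool:
    """Poll GET {base_url}/models until model_name is listed or timeout_s elapses."""
    url = f"{base_url.rstrip('/')}/models"
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            served = {m.get("id") for m in fetch(url).get("data", [])}
            if model_name in served:
                return True
        except (OSError, ValueError):
            # Server not up yet (connection refused, HTTP error, timeout)
            # or a non-JSON reply: treat as "not ready" and retry.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, wait_for_model("<API address>/v1", "Qwen3-30B-A3B") returns True once the model is listed, or False after three minutes. The fetch parameter lets the function be tested without a live server.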


3. OpenAI API Call Guide

This section explains how to call the deployed model through its OpenAI-compatible API.

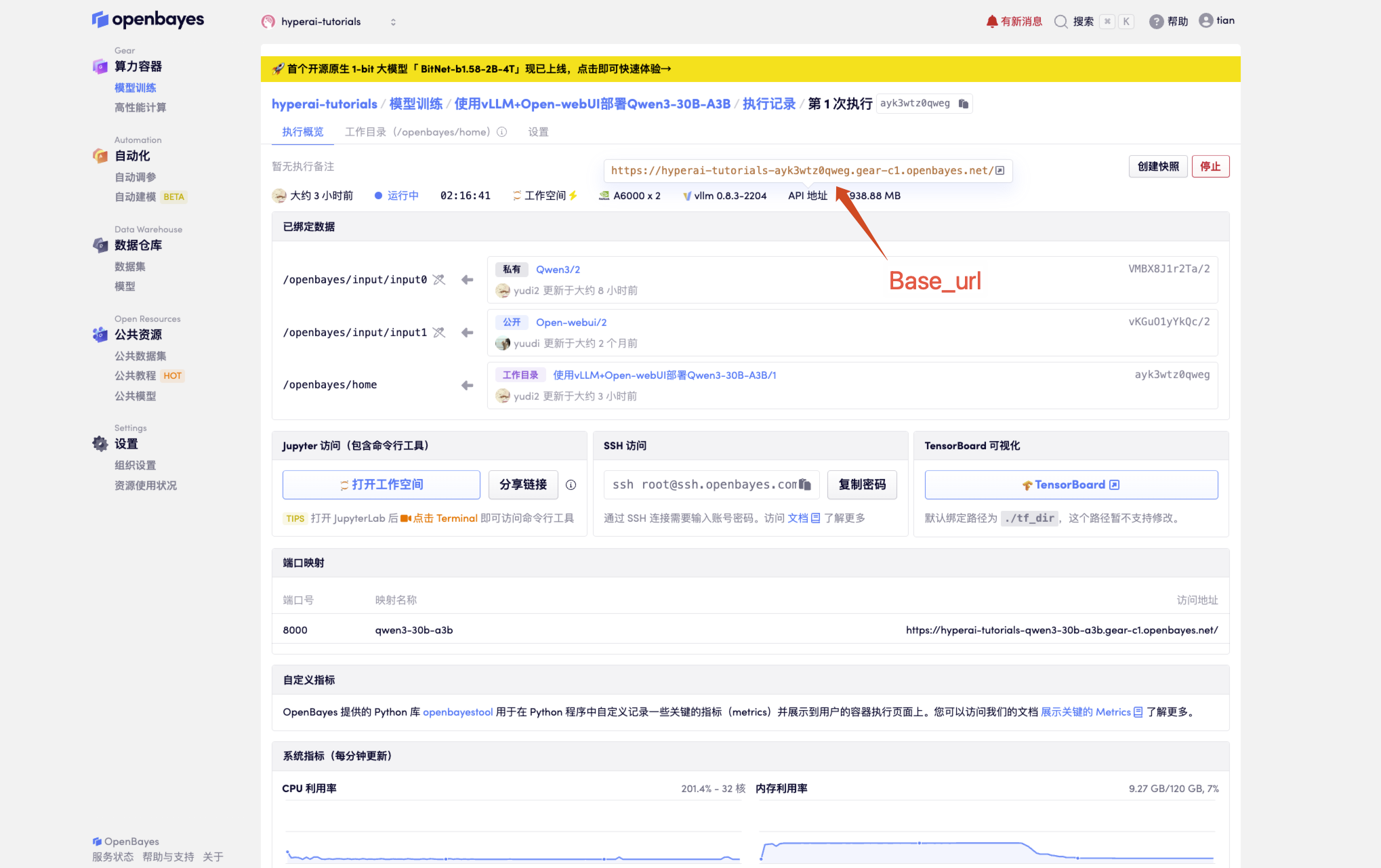

1. Get basic configuration

```python
# Required configuration
BASE_URL = "<API address>/v1"  # production environment
MODEL_NAME = "Qwen3-30B-A3B"   # default model name
API_KEY = "Empty"              # no API key is set; this is a placeholder
```

Replace <API address> with the API address of your container.
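Because BASE_URL already ends in /v1, endpoint paths are appended to it directly; adding another /v1 produces an invalid .../v1/v1/... path. The hypothetical helper below derives both URLs from the bare API address, whether or not a trailing slash or /v1 was included.

```python
def build_urls(api_address: str) -> tuple[str, str]:
    """Return (base_url, chat_completions_url) for an OpenAI-compatible server."""
    base_url = api_address.rstrip("/")
    if not base_url.endswith("/v1"):
        base_url += "/v1"
    return base_url, f"{base_url}/chat/completions"


base_url, chat_url = build_urls("https://example.com/")
print(base_url)  # https://example.com/v1
print(chat_url)  # https://example.com/v1/chat/completions
```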

2. Different calling methods

2.1 Native Python call

```python
# Method 1: the official openai library
import openai

# Create an OpenAI client instance
client = openai.OpenAI(
    api_key=API_KEY,    # replace with your actual API key
    base_url=BASE_URL,  # replace with your actual base_url
)

# Send a chat message
response = client.chat.completions.create(
    model=MODEL_NAME,
    messages=[
        {"role": "user", "content": "Hello!"}
    ],
    temperature=0.7,
)

# Print the reply
print(response.choices[0].message.content)
```

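```python
# Method 2: the requests library (more flexible)
import requests

headers = {
    "Authorization": f"Bearer {API_KEY}",
    "Content-Type": "application/json",
}

data = {
    "model": MODEL_NAME,
    "messages": [{"role": "user", "content": "Hello!"}],
}

response = requests.post(f"{BASE_URL}/chat/completions", headers=headers, json=data)
response.raise_for_status()  # raise on HTTP 4xx/5xx errors
print(response.json()["choices"][0]["message"]["content"])
```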
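Qwen3 uses thinking mode by default, and it can be switched off per request. The Qwen documentation for vLLM passes enable_thinking to the chat template through chat_template_kwargs. With the openai client these non-standard fields go in extra_body; with requests they can sit directly in the JSON body. The sketch below builds such a body. The function name is hypothetical, and the sampling values follow the Qwen3 model card's recommendations as we understand them, which should be re-checked against the current card.

```python
def build_chat_payload(model: str, prompt: str, thinking: bool = True) -> dict:
    """JSON body for POST {BASE_URL}/chat/completions with thinking on or off."""
    if thinking:
        sampling = {"temperature": 0.6, "top_p": 0.95, "top_k": 20}
    else:
        sampling = {"temperature": 0.7, "top_p": 0.8, "top_k": 20}
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # vLLM forwards these keyword arguments to the model's chat template.
        "chat_template_kwargs": {"enable_thinking": thinking},
        **sampling,
    }


payload = build_chat_payload("Qwen3-30B-A3B", "Hello!", thinking=False)
print(payload["chat_template_kwargs"])  # {'enable_thinking': False}
```

With the requests example, pass json=build_chat_payload(MODEL_NAME, "Hello!", thinking=False) to requests.post in place of data.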

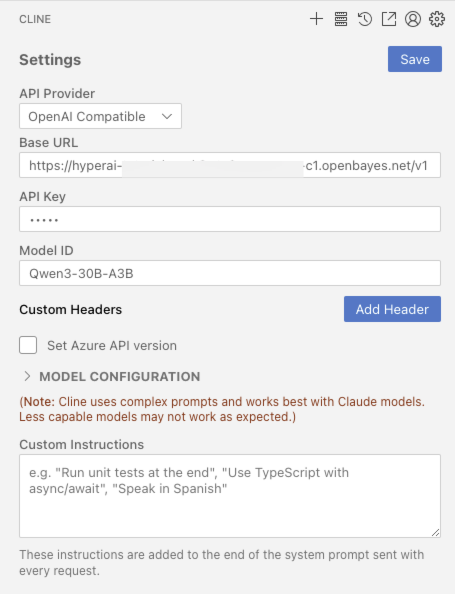

2.2 Development Tool Integration

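If you use VS Code, you can install the official Cline extension, select an OpenAI-compatible API provider, and enter the BASE_URL, API_KEY, and MODEL_NAME from the configuration above.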

2.3 cURL call

```bash
curl <API address>/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "Qwen3-30B-A3B",
    "messages": [{"role": "user", "content": "Hello!"}]
  }'
```
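Where neither openai nor requests is installed, the request sent by the cURL command can be reproduced with Python's standard library. This is a sketch: make_chat_request and send are illustrative names, and the bearer token is the same placeholder key used in the configuration.

```python
import json
import urllib.request


def make_chat_request(base_url: str, model: str, prompt: str,
                      api_key: str = "EMPTY") -> urllib.request.Request:
    """Build (but do not send) the same POST that the cURL example issues."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

For example, send(make_chat_request("<API address>/v1", "Qwen3-30B-A3B", "Hello!")) returns the reply text once <API address> is filled in. Keeping request construction separate from sending makes the request easy to inspect or test offline.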


Acknowledgements

Thanks to ZV-Liu for producing this tutorial.
