One-click Deployment Qwen2.5-VL-32B-Instruct

Size: 1015.01 MB

1. Tutorial Introduction

Qwen2.5-VL-32B-Instruct is a multimodal large model open-sourced by Alibaba's Tongyi Qianwen (Qwen) team on March 24, 2025, and released under the Apache 2.0 license. It builds on the Qwen2.5-VL series and is further optimized with reinforcement learning, targeting stronger multimodal capabilities at a 32B parameter scale.

🚀 Qwen2.5-VL-32B upgrade: stronger visual understanding and a smarter multimodal assistant! 🌟

🔥 Core feature upgrades

- Fine-grained visual analysis: In professional fields such as medical image analysis and engineering drawing recognition, the model captures fine, pixel-level detail and supports reasoning across multiple related images as well as spatiotemporal analysis.

- Output style optimization: The model's output is closer to human writing habits in format and level of detail, and it can produce clearly structured, logically rigorous solutions, especially in complex scenarios.

- Mathematical reasoning: For complex mathematical problems such as multi-variable equations and geometric proofs, algorithmic optimization raises the model's problem-solving accuracy to what is described as an industry-leading level.

This tutorial uses Qwen2.5-VL-32B as the demonstration model, running on two A6000 GPUs.
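As a rough illustration of why two GPUs are used, the weight memory can be estimated with simple arithmetic. This is a back-of-the-envelope sketch, not a statement about how the container is actually configured: it assumes 16-bit weights (2 bytes per parameter), takes the nominal 32B parameter count from the model name, and ignores activations, the KV cache, and the vision encoder's working memory. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


PARAMS = 32e9        # nominal "32B" parameter count (assumption from the model name)
GPU_MEMORY_GB = 48   # memory of a single RTX A6000
NUM_GPUS = 2         # as used in this tutorial

weights_gb = weight_memory_gb(PARAMS)   # assumes 16-bit weights
total_gb = GPU_MEMORY_GB * NUM_GPUS

print(f"weights: ~{weights_gb:.0f} GB")
print(f"fits on one GPU: {weights_gb < GPU_MEMORY_GB}")
print(f"fits on {NUM_GPUS} GPUs: {weights_gb < total_gb}")
```

Under these assumptions the weights alone exceed the memory of one card but fit across two, with the remainder available for inference overhead.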

2. Operation Steps

1. After starting the container, click the API address to enter the Web interface

If "Model" is not displayed, the model is still initializing. Because the model is large, wait about 1-2 minutes and then refresh the page.

2. After entering the webpage, you can start a conversation with the model

This tutorial supports "online search". Enabling this function slows down inference, which is normal.
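The wait described in step 1 can also be checked from a script instead of refreshing the page. The sketch below assumes the container exposes an OpenAI-compatible `GET /v1/models` endpoint under the same API address used later in this tutorial; that endpoint is not confirmed by the tutorial itself. The function names (`list_model_ids`, `fetch_models`, `wait_for_model`) are hypothetical helpers, and only the Python standard library is used.

```python
import json
import time
import urllib.error
import urllib.request


def list_model_ids(payload: dict) -> list[str]:
    """Extract model ids from an OpenAI-style /v1/models response body."""
    return [m["id"] for m in payload.get("data", []) if "id" in m]


def fetch_models(api_path: str, timeout: float = 5.0) -> dict:
    """GET {api_path}/v1/models and decode the JSON body (assumed endpoint)."""
    url = api_path.rstrip("/") + "/v1/models"
    req = urllib.request.Request(url, headers={"Authorization": "Bearer Empty"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def wait_for_model(api_path, model_id, attempts=12, delay=10.0, fetch=fetch_models):
    """Poll until model_id is listed. Returns True if seen, False otherwise."""
    for attempt in range(attempts):
        try:
            if model_id in list_model_ids(fetch(api_path)):
                return True
        except (urllib.error.URLError, OSError, ValueError):
            pass  # server not reachable yet, or body is not valid JSON: retry
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

For example, `wait_for_model("{API_PATH}", "Qwen2.5-VL-32B-Instruct")` would poll for roughly two minutes with the default settings, which matches the 1-2 minute initialization time mentioned above. The `fetch` parameter exists only so the polling logic can be exercised without a running server.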

3. API Call Examples

By default, this container uses open-webui to call the Qwen2.5-VL-32B API service. To call the API from your own machine, refer to the cURL and Python examples below.

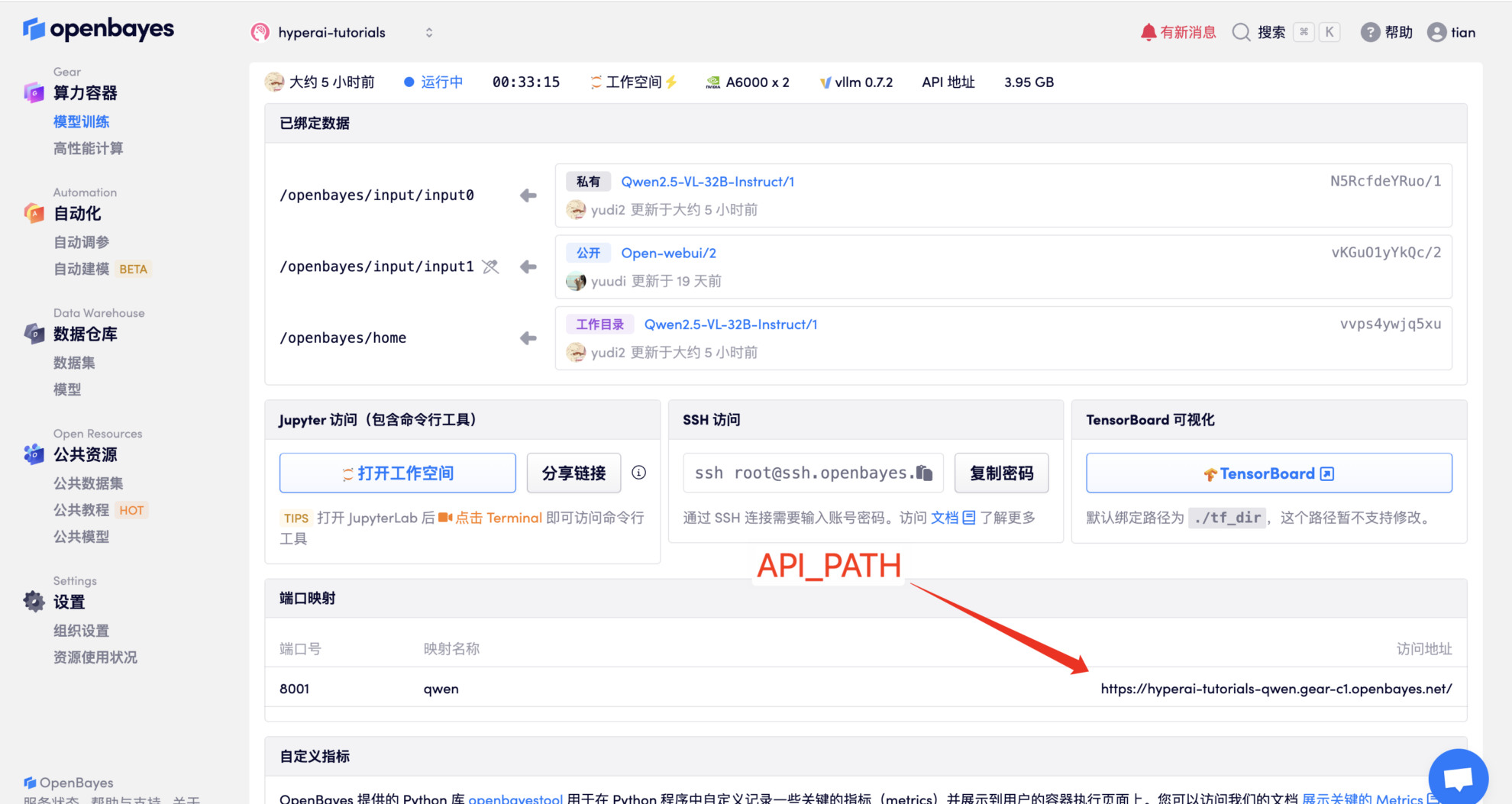

Get API_PATH as shown:

🔹 Call using cURL

You can call the API directly with the curl command:

    curl {API_PATH}/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer Empty" \
      -d '{
        "model": "Qwen2.5-VL-32B-Instruct",
        "messages": [
          {
            "role": "user",
            "content": [
              {"type": "text", "text": "Please interpret the formula in the image and explain it in detail"},
              {"type": "image_url", "image_url": {"url": "https://images2018.cnblogs.com/blog/1203675/201805/1203675-20180525100048863-1610672614.png"}}
            ]
          }
        ],
        "max_tokens": 1024
      }'

🐍 Call using Python

Make sure the openai library is installed:

pip install openai

Then use the following Python code:

    from openai import OpenAI

    # Replace {API_PATH} with the API address of your container.
    client = OpenAI(api_key="Empty", base_url="{API_PATH}/v1/")

    response = client.chat.completions.create(
        model="Qwen2.5-VL-32B-Instruct",
        messages=[
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "Please interpret the formula in the image and explain it in detail"},
                    {"type": "image_url", "image_url": {"url": "https://images2018.cnblogs.com/blog/1203675/201805/1203675-20180525100048863-1610672614.png"}},
                ],
            }
        ],
        max_tokens=1000,
    )

    # Print the text of the first completion.
    print(response.choices[0].message.content)
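The example above passes a public image URL. When the image is a local file, one common approach with OpenAI-compatible servers is to embed it as a base64 data URL in the same `image_url` field. The sketch below assumes this deployment accepts data URLs, which the tutorial does not confirm; `image_to_data_url` and `build_image_message` are hypothetical helper names.

```python
import base64
import mimetypes
from pathlib import Path


def image_to_data_url(path: str) -> str:
    """Encode a local image file as a base64 data URL."""
    mime, _ = mimetypes.guess_type(path)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"not a recognized image type: {path}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_image_message(prompt: str, image_path: str) -> list[dict]:
    """Build a messages list with one text part and one local-image part."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_to_data_url(image_path)}},
            ],
        }
    ]
```

The returned list can be passed as the `messages` argument of `client.chat.completions.create(...)` in place of the URL-based message. Base64 encoding increases the payload size by about one third, so very large images may be better served from a URL.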

With these two methods, you can call the Qwen2.5-VL-32B API from whichever environment suits you! 🚀

