One-click Deployment of ChemVLM-26B

ChemVLM: Exploring the power of large multimodal language models in chemistry

Tutorial Introduction

ChemVLM is the first open-source multimodal large language model for chemistry, released by the Shanghai Artificial Intelligence Laboratory in 2024. The model aims to bridge the gap between chemical image understanding and text analysis. By combining a Vision Transformer (ViT), a Multilayer Perceptron (MLP), and a Large Language Model (LLM), it reasons jointly over chemical images and text. ChemVLM follows the ViT-MLP-LLM architecture: it uses ChemLLM-20B as the base language model, which strengthens its ability to understand and apply chemical text knowledge, and InternViT-6B as the image encoder. In addition, the research team curated high-quality chemistry data, including molecules, reaction formulas, and chemistry exam data, and built a bilingual multimodal question-answering dataset to further improve model performance.
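To make the ViT-MLP-LLM data flow concrete, here is a minimal toy sketch in plain Python. It is not ChemVLM's actual code: the dimensions, the random weights, the ReLU activation, and the helper names (`vision_encoder`, `mlp_projector`, `build_llm_input`) are all assumptions chosen for illustration. It only shows the general idea that patch features from the image encoder are projected by an MLP into the language model's embedding space and then placed alongside the text token embeddings.

```python
import random

random.seed(0)

# Toy sizes for illustration only; these are NOT ChemVLM's real dimensions.
NUM_PATCHES, VIT_DIM, HIDDEN_DIM, LLM_DIM, NUM_TEXT_TOKENS = 4, 8, 16, 12, 3


def make_matrix(rows, cols):
    """Random matrix standing in for learned weights or features."""
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def relu(m):
    """Element-wise ReLU (a simplification; real projectors may use another activation)."""
    return [[max(0.0, v) for v in row] for row in m]


def vision_encoder(num_patches):
    """Hypothetical stand-in for the ViT: one feature vector per image patch."""
    return make_matrix(num_patches, VIT_DIM)


W1 = make_matrix(VIT_DIM, HIDDEN_DIM)
W2 = make_matrix(HIDDEN_DIM, LLM_DIM)


def mlp_projector(patch_features):
    """Two-layer MLP mapping vision features into the LLM embedding space."""
    return matmul(relu(matmul(patch_features, W1)), W2)


def build_llm_input(image_tokens, text_embeddings):
    """Concatenate projected image tokens with text token embeddings."""
    return image_tokens + text_embeddings


patch_features = vision_encoder(NUM_PATCHES)
image_tokens = mlp_projector(patch_features)
text_embeddings = make_matrix(NUM_TEXT_TOKENS, LLM_DIM)
llm_input = build_llm_input(image_tokens, text_embeddings)

# The LLM would consume one sequence of image and text embeddings.
print(len(llm_input), len(llm_input[0]))  # prints: 7 12
```

The point of the projector is that the image encoder and the language model have different feature widths, so a small learned mapping is needed before the two kinds of tokens can share one input sequence.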

Run steps

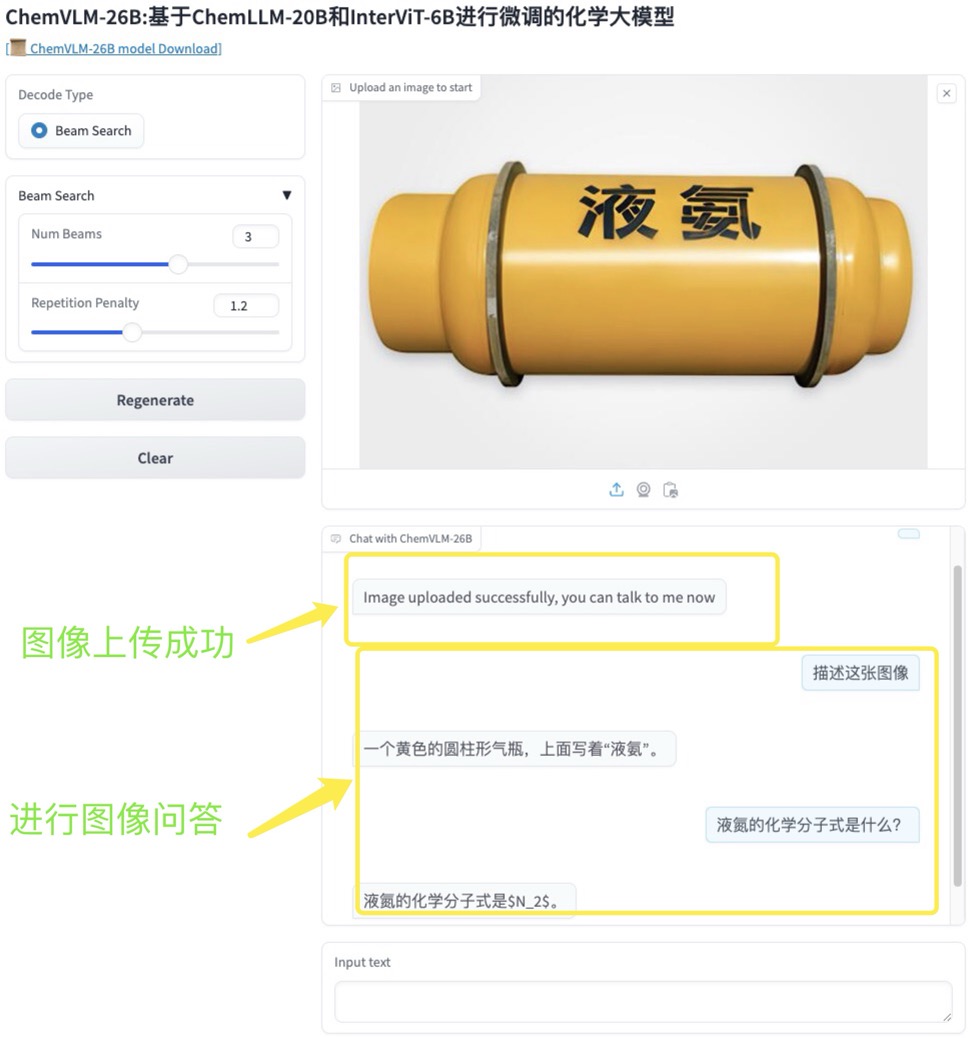

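1. After cloning the tutorial and successfully starting the container, click the API address to open the web interface. (Because the model is large, the web interface appears at the API address only about 2 minutes after the container has started.)

2. Optionally set the sampling parameters (different settings may produce different results), then upload a chemistry image and continue the conversation with the model, for example as shown in the figure below.

Click Submit to see the model's output.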
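The tutorial does not say which sampling parameters the web interface exposes. As an illustration of why different settings can change the output, the sketch below implements two commonly used ones, temperature and top-p (nucleus) sampling, in plain Python. The function name `sample_token` and the example logits are hypothetical, and this is not the code the demo itself runs.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Pick a token index from raw scores using temperature and top-p sampling."""
    # Lower temperature sharpens the distribution; higher temperature flattens it.
    scaled = [score / temperature for score in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep the smallest set of most likely tokens whose cumulative probability reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for index in order:
        kept.append(index)
        cumulative += probs[index]
        if cumulative >= top_p:
            break

    # Sample among the kept tokens, weighted by their probabilities.
    weights = [probs[i] for i in kept]
    return rng.choices(kept, weights=weights, k=1)[0]


example_logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
greedy_like = sample_token(example_logits, temperature=0.7, top_p=0.1)
varied = sample_token(example_logits, temperature=1.5, top_p=0.95)
```

With a small `top_p`, only the most likely token survives the cutoff, so the choice is effectively deterministic. A higher temperature and a larger `top_p` leave more candidates in play, which is why repeated runs can produce different answers.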
