One-Click Deployment Demo of ChemLLM-7B-Chat, the Pu Ke Chemistry Large Model

Tutorial Introduction

This tutorial provides a one-click deployment demo of ChemLLM-7B-Chat. Just clone the container, start it, and open the generated API address to try out the model's inference.

ChemLLM-7B-Chat is part of ChemLLM, the first open-source large language model for chemistry and molecular science. It was released in 2024 by the Shanghai Artificial Intelligence Laboratory (Shanghai AI Lab) and is built on InternLM-2. The accompanying research paper is "ChemLLM: A Chemical Large Language Model".

Building on the strong multilingual capabilities of its base model, Shusheng·PuYu 2.0 (InternLM-2), and further trained on specialized chemistry knowledge, Pu Ke Chemistry also translates chemistry texts between Chinese and English with high accuracy. It can help chemists overcome language barriers, translate the specialized terms found in chemical literature, and access more chemical knowledge.

The research team has also open-sourced the ChemData700K dataset, Chinese and English versions of the ChemPref-10K dataset, the C-MHChem dataset, and the ChemBench4K benchmark for evaluating chemistry capabilities.

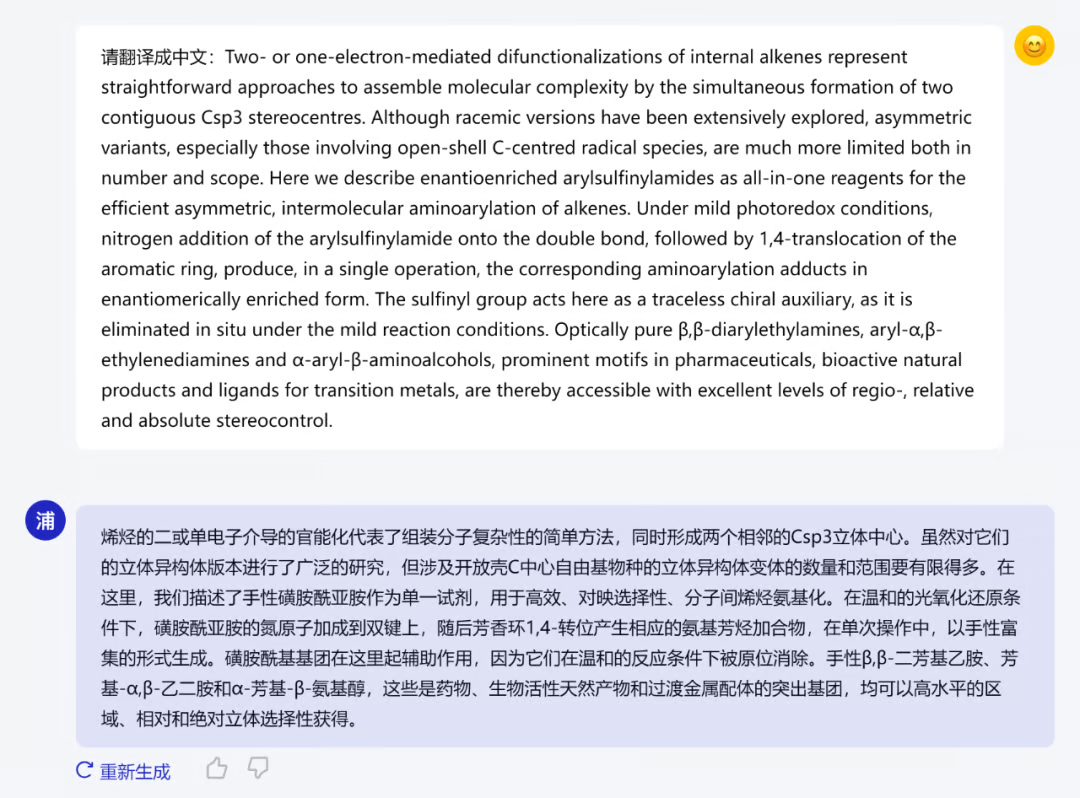

The figure below shows Pu Ke Chemistry's translation of the abstract of a paper published in Nature Chemistry on January 16, 2024.

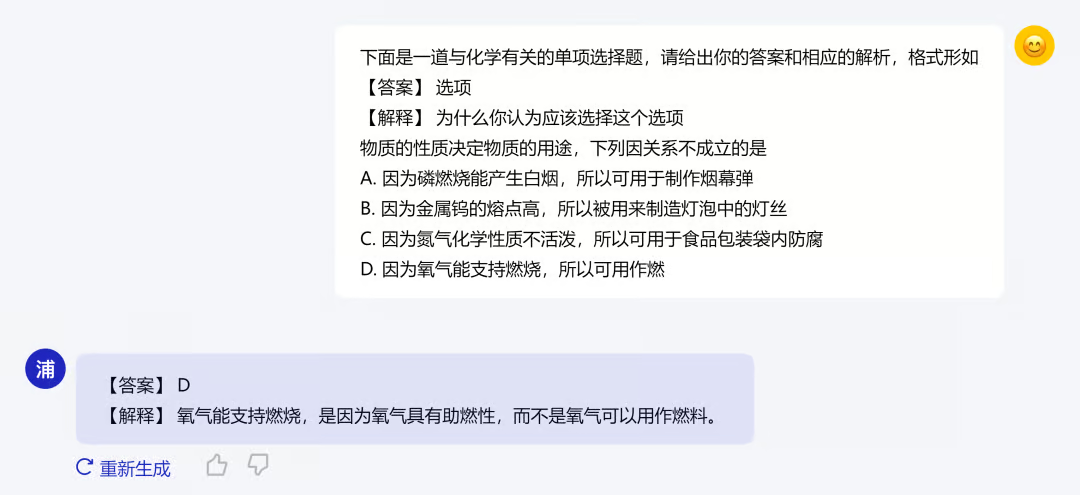

Beyond specialized chemistry training, Pu Ke Chemistry has also learned junior high and high school chemistry. When answering questions at this level, it gives not only the answer but also a detailed explanation. The following figure shows an example:

Deployment and Inference Steps

This tutorial comes with the model and environment already deployed, so you can chat with the model directly by following these steps:

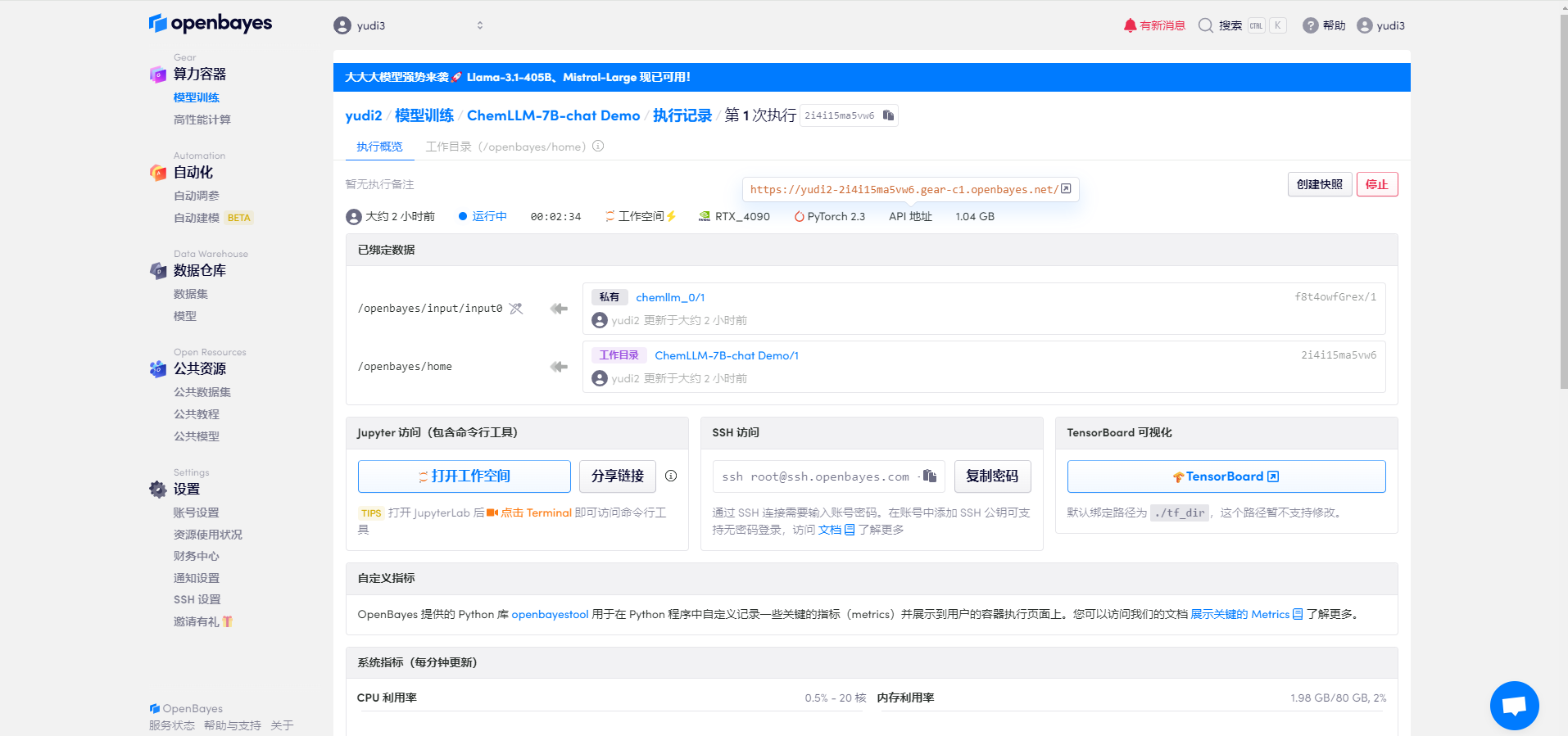

1. Model configuration

After configuring the resources, start the container and click the link under the API address to open the demo interface. Because the model is loaded only after the container has started, the webpage takes about half a minute to become available.
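Because the page only becomes reachable once the model has finished loading, a script that opens the demo may want to wait for it first. The following is a minimal sketch that polls the API address until it answers with HTTP 200. The function name `wait_for_demo`, the timeout values, and the assumption that the demo page returns 200 once it is ready are all hypothetical, not part of the tutorial.

```python
import time
import urllib.request
from typing import Callable, Optional


def wait_for_demo(
    url: str,
    timeout_s: float = 120.0,
    interval_s: float = 5.0,
    fetch: Optional[Callable[[str], int]] = None,
) -> bool:
    """Poll `url` until it returns HTTP 200 or `timeout_s` seconds pass.

    Hypothetical helper: assumes the demo page answers 200 once the model
    has loaded. `fetch` can be swapped out (e.g. in tests); by default it
    performs a real GET request.
    """

    def default_fetch(u: str) -> int:
        # urlopen raises URLError/HTTPError (both subclasses of OSError)
        # while the page is unreachable or returns an error status.
        with urllib.request.urlopen(u, timeout=10) as resp:
            return resp.status

    fetch = fetch or default_fetch
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            if fetch(url) == 200:
                return True
        except OSError:
            pass  # not reachable yet, e.g. the model is still loading
        time.sleep(interval_s)
    return False
```

For example, `wait_for_demo("https://<your-api-address>")` returns `True` as soon as the page responds, or `False` after two minutes; replace the placeholder with the address shown for your container.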

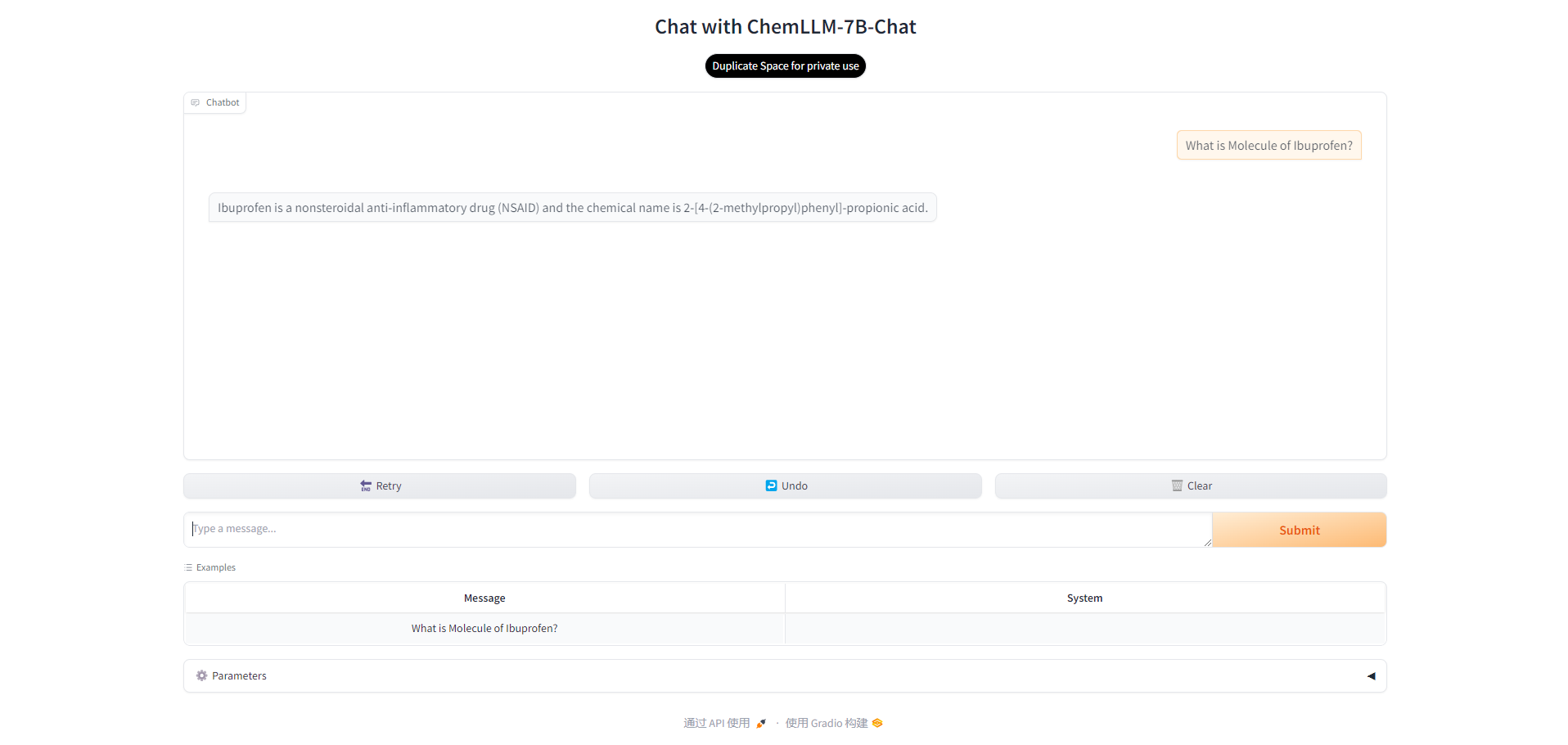

2. Open the interface

Once the model has loaded, the chat interface appears and you can start talking to the model, either with the sample questions or with your own.

3. Parameter adjustment

The demo also exposes several adjustable parameters, including:

- Temperature: controls the randomness of the generated text. Lower values make the model more likely to choose the highest-probability tokens, so the output is more predictable; higher values make it more likely to sample lower-probability tokens, so the output is more diverse but may contain more errors.

- Max New Tokens: the maximum number of tokens the model may generate in a single response. Capping this value limits the length of the output and keeps responses from running too long.
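To make the two parameters concrete, here is a toy sampling loop. It is a sketch of the standard technique, not the demo's or ChemLLM's actual implementation: `next_logits` is a hypothetical stand-in for a model that returns one score per vocabulary token. Temperature divides the logits before the softmax, and Max New Tokens is a hard cap on the number of loop iterations.

```python
import math
import random
from typing import Callable, List, Optional, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after dividing them by `temperature`."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def generate(
    next_logits: Callable[[List[int]], Sequence[float]],  # hypothetical model stand-in
    prompt: List[int],
    temperature: float = 0.7,
    max_new_tokens: int = 16,
    eos_id: Optional[int] = None,
    seed: int = 0,
) -> List[int]:
    """Sample up to `max_new_tokens` token ids, stopping early at `eos_id`."""
    rng = random.Random(seed)
    tokens = list(prompt)
    new_tokens: List[int] = []
    for _ in range(max_new_tokens):  # hard cap on output length
        probs = softmax_with_temperature(next_logits(tokens), temperature)
        tok = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        new_tokens.append(tok)
        tokens.append(tok)
        if tok == eos_id:  # the model chose to end the response early
            break
    return new_tokens
```

With logits `[2.0, 1.0, 0.1]`, a temperature of 0.5 gives token 0 a probability of about 0.86, while a temperature of 2.0 lowers it to about 0.50, which is why low temperatures produce more predictable text.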

You can adjust the parameters according to your needs.