The Animated Version of "The Three-Body Problem" Has Started Production. Will It Really Be Released in 2021?

Recently, the official trailer for the animated version of "The Three-Body Problem" was released on Bilibili, announcing that the finished work will be released in 2021. The short 3-minute video attracted the attention of millions of people. How long will we have to wait to see "The Three-Body Problem" this time?

This week, the first official PV (promotional video) of the animated version of "The Three-Body Problem" was released on Bilibili. As soon as the video went up, it became extremely popular and drew a large number of views. As of now, it has been played nearly 10 million times on Bilibili.

The first official PV of the animated "The Three-Body Problem"

The hugely popular "The Three-Body Problem" is hard to adapt for film or TV

The novel "The Three-Body Problem" was first serialized in "Science Fiction World" in 2006. Due to its wonderful story, lofty ideas and grand theme, it quickly became a science fiction masterpiece sought after by thousands of people.

Since then, the trilogy has been published as individual volumes and has sold well both at home and abroad. Even Zuckerberg and Obama have praised it highly. In 2015, it won the Hugo Award, the highest award in the science fiction world, and it has become one of the most influential modern Chinese novels in the world.

However, the road to the screen for "The Three-Body Problem" seems to be extremely difficult. The filming of the movie version of "The Three-Body Problem" started in 2015, but there is no news of its release yet.

The complexity of the story, the hardcore science fiction elements, and the extremely rich imaginative scenes are all huge challenges.

At a time when the film version cannot make a breakthrough, turning the story into an animation may avoid many of these restrictions. Animation itself relies on imagination, so it can be as bold as it likes, and there is little practical upper limit on its imagery and visual expression.

Perhaps this is what prompted Bilibili to join forces with The Three-Body Universe and Yihua Kaitian to create an animated version of "The Three-Body Problem".

Why is animation so difficult to make?

In recent years, the best animation works have taken a long time to come out.

The Monkey King: Hero is Back, which gave the domestic animation industry a boost, took eight years to polish. It finally delivered a satisfying result and grossed 956 million yuan at the box office.

Nezha: The Devil Child Comes into the World took five years to produce and involved more than 1,600 people. It earned 4.9 billion yuan at the box office, becoming the second highest-grossing film in Chinese film history.

High-quality animation is a huge undertaking and hard work: it involves a great deal of character design, storyboarding and animation, and special effects production. A short clip of just over ten seconds may require several teams' efforts over several months.

Fortunately, advances in AI technology are starting to help with animation production. Perhaps some new AI techniques can offer more efficient methods or ideas for creating a better "The Three-Body Problem" animation.

Cutting-edge tech to speed up animation: making pictures move by themselves

For skilled animators, it is not difficult to design a character that fits the intended image, but making that character move as required can take a lot of extra work.

For example, 2D animation requires drawing many frames of motion, and 3D animation often relies on motion capture. But now, AI can automatically "wake up" photos and make them move.

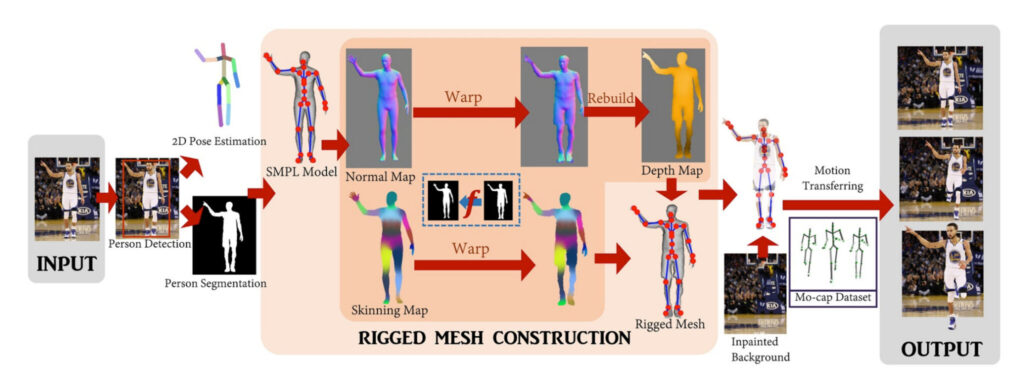

Researchers from the University of Washington and Facebook published a study some time ago that used AI to transform characters or figures in static images or paintings into 3D animations.

The technology is a deep learning model developed on the PyTorch framework. The software segments people from images, then overlays a 3D mesh, and then animates the mesh to make photos or paintings come alive.

According to the researchers, the pipeline first performs person detection, 2D pose estimation, and person segmentation on the image using off-the-shelf algorithms. It then fits a SMPL (Skinned Multi-Person Linear Model, a 3D human body model) template to the 2D pose and projects it into the image as a normal map and a skinning map.

The core of this step is to find a mapping between the silhouette of the person and the SMPL silhouette, transfer the SMPL normal and skinning maps to the output, and build the depth map by integrating the warped normal map.

The process is repeated to simulate the back view of the model, and the depth and skinning maps are combined to create a fully rigged 3D mesh. The mesh is then textured and finally animated with a motion capture sequence on an inpainted background.
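The depth-from-normals step mentioned above can be illustrated with a small sketch. This is not the researchers' implementation, which this article does not describe in detail; it is a naive path integration written for illustration, and the function name `depth_from_normals` and the test surface are assumptions. It relies only on the fact that a surface z(x, y) has a normal proportional to (-dz/dx, -dz/dy, 1).

```python
def depth_from_normals(normals):
    """Integrate a grid of surface normals into a relative depth map.

    normals[y][x] is an (nx, ny, nz) tuple with nz != 0 (it need not be
    unit length). For a surface z(x, y) the normal is proportional to
    (-dz/dx, -dz/dy, 1), so dz/dx = -nx/nz and dz/dy = -ny/nz.
    """
    height, width = len(normals), len(normals[0])
    # Recover the two slope fields from the normals.
    dzdx = [[-n[0] / n[2] for n in row] for row in normals]
    dzdy = [[-n[1] / n[2] for n in row] for row in normals]

    depth = [[0.0] * width for _ in range(height)]
    # Walk down the first column, averaging the slopes of neighbouring pixels.
    for y in range(1, height):
        depth[y][0] = depth[y - 1][0] + 0.5 * (dzdy[y - 1][0] + dzdy[y][0])
    # Then walk along each row, starting from its first-column value.
    for y in range(height):
        for x in range(1, width):
            depth[y][x] = depth[y][x - 1] + 0.5 * (dzdx[y][x - 1] + dzdx[y][x])
    return depth


# Illustrative input: the tilted plane z = 2x + 3y has the constant normal (-2, -3, 1).
plane = [[(-2.0, -3.0, 1.0)] * 4 for _ in range(3)]
depth = depth_from_normals(plane)
```

Depth recovered this way is only defined up to a constant offset, and a single integration path accumulates noise on real images, so a production system would more likely solve for depth with a least-squares method.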

In other words, given only a full-body frontal image of a character, this technology can produce an animated scene. It reconstructs a virtual character from a single image and explores the technical possibilities of going from pictures to character models.

Cutting-edge tech to speed up animation: automatically converting text into animation

For adapted works, if you want to stay completely faithful to the original and simplify production as much as possible, the ideal approach may be to generate animation directly from text. On this front, AI technology is steadily improving.

In 2018, researchers at the University of Illinois and the Allen Institute for Artificial Intelligence developed an AI model called CRAFT (Composition, Retrieval, and Fusion Network) that can generate animated scenes matching a text description (or caption), using footage from The Flintstones.

The final AI model was trained on more than 25,000 video clips. Each clip is 3 seconds (75 frames) long and is annotated with the characters in the scene and a description of what happens in it.

The AI model learns to match videos with text descriptions and establishes a set of parameters. It can eventually convert the provided text description into a fan-made derivative of the animation series, including characters, props, and scene locations learned from the video.
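The idea of matching a text description against annotated clips can be sketched with a toy retrieval example. This is a deliberately simple stand-in, not the CRAFT model: it swaps the learned neural matching for bag-of-words cosine similarity, and the clip ids and annotations below are invented for illustration.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Lowercase the text and count its words."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, annotated_clips):
    """Return the id of the clip whose annotation best matches the query."""
    q = bag_of_words(query)
    return max(
        annotated_clips,
        key=lambda clip_id: cosine(q, bag_of_words(annotated_clips[clip_id])),
    )


# Hypothetical annotations, invented for illustration only.
clips = {
    "clip_001": "fred is talking in the living room",
    "clip_002": "wilma is cooking in the kitchen",
    "clip_003": "fred is driving a car outside",
}
best = retrieve("fred is driving outside", clips)
```

A learned model replaces the word counts with embeddings trained on the annotated clips, but the retrieval step keeps the same shape: score every candidate against the query and keep the best match.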

If this is just simple editing and splicing, then today's AI can do much more.

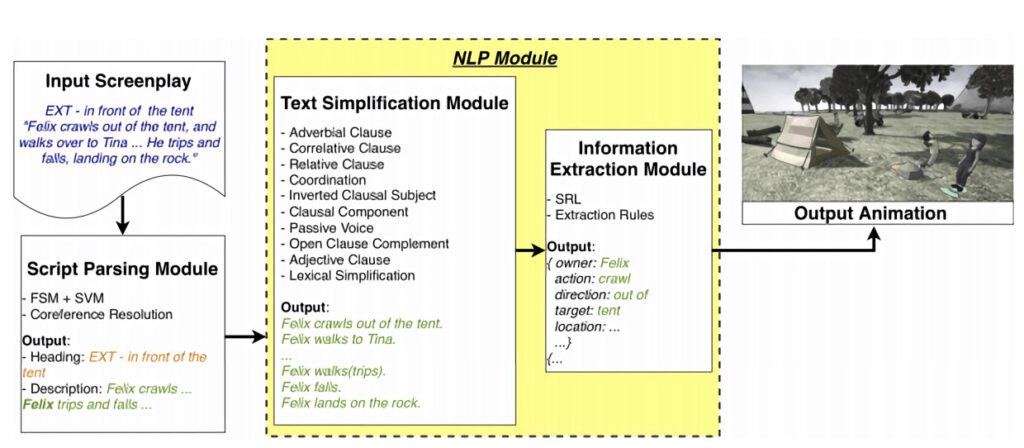

In April of this year, scientists from Disney and Rutgers University published a paper that fed the AI model 996 scripts and eventually taught the AI to automatically generate animations based on text descriptions.

To generate video from text, the AI first has to "understand" the text and then produce the corresponding animation. To do this, the researchers used a neural network made up of several modules.

The model consists of three parts: the first is a script parsing module that automatically parses out the scenes in the script text; the second is a natural language processing module that can extract the main descriptive sentences and refine the action representation; and the last is a generation module that converts action instructions into animation sequences.
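The three-stage structure described above can be sketched as a toy pipeline. This is only an illustration of the division of labor, not the researchers' system: the rule-based parsing, the `ACTION_CLIPS` table, the function names, and the sample script are all assumptions made for the example, whereas the real modules are learned.

```python
import re

# Hypothetical verb-to-animation table; a real system would learn or curate this.
ACTION_CLIPS = {"walks": "WALK", "sits": "SIT", "opens": "OPEN", "picks": "PICK_UP"}


def parse_scenes(script):
    """Module 1: split a script into scenes at INT./EXT. headings."""
    scenes, current = [], None
    for line in script.strip().splitlines():
        line = line.strip()
        if re.match(r"^(INT\.|EXT\.)", line):
            current = {"heading": line, "description": []}
            scenes.append(current)
        elif line and current is not None:
            current["description"].append(line)
    return scenes


def extract_actions(sentence):
    """Module 2: naive (subject, verb, rest) extraction for known verbs."""
    words = sentence.rstrip(".").split()
    for i, word in enumerate(words):
        if i > 0 and word.lower() in ACTION_CLIPS:
            return [(" ".join(words[:i]), word.lower(), " ".join(words[i + 1:]))]
    return []


def generate_sequence(actions):
    """Module 3: turn action tuples into animation commands."""
    return [
        f"{ACTION_CLIPS[verb]}(actor={subject!r}, target={rest!r})"
        for subject, verb, rest in actions
    ]


# Sample script invented for illustration.
script = """
INT. OFFICE - DAY
Wang walks to the desk.
Wang opens the drawer.
"""
commands = []
for scene in parse_scenes(script):
    for sentence in scene["description"]:
        commands.extend(generate_sequence(extract_actions(sentence)))
```

Keeping the stages separate means each one can be improved or evaluated on its own, which is one plausible reason for a modular design.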

The researchers compiled a corpus of scene descriptions collected and collated from freely available movie scripts. It consists of 525,708 descriptions containing 1,402,864 sentences, of which 920,817 contain at least one action.
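To put those corpus figures in proportion, a quick calculation from the three numbers quoted above gives the share of sentences that contain an action and the average number of sentences per description. The variable names are chosen only for this example.

```python
descriptions = 525_708
sentences = 1_402_864
action_sentences = 920_817

# Fraction of all sentences that contain at least one action.
action_share = action_sentences / sentences
# Average number of sentences in one scene description.
sentences_per_description = sentences / descriptions

print(f"{action_share:.1%} of sentences contain an action")
print(f"{sentences_per_description:.2f} sentences per description on average")
```

So roughly two thirds of the sentences describe at least one action, and a typical description is between two and three sentences long.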

Once the mapping between descriptive language and video has been established, the system can generate simple animation clips from an input script. In testing, 68% of the generated animations were judged reasonable.

If the technology matures, much of the information that is lost or distorted during adaptation could be avoided.

2021: animated "The Three-Body Problem", we are waiting for you

Although these technologies are still some way from being usable in the production of the "The Three-Body Problem" animation, they bring fresh ideas to animation production.

From the current perspective, as long as there is enough data and training, the day when AI models learn to generate animations should not be far away.

Back to the "The Three-Body Problem" animation: with the strong production team of The Three-Body Universe and Yihua Kaitian, as well as the strong support of Bilibili, this animation has a lot going for it.

2021, rain or shine, we'll be waiting for you!
